先自我介绍一下,小编浙江大学毕业,去过华为、字节跳动等大厂,目前阿里P7

深知大多数程序员,想要提升技能,往往是自己摸索成长,但自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

因此收集整理了一份《2024年最新Golang全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上Go语言开发知识点,真正体系化!

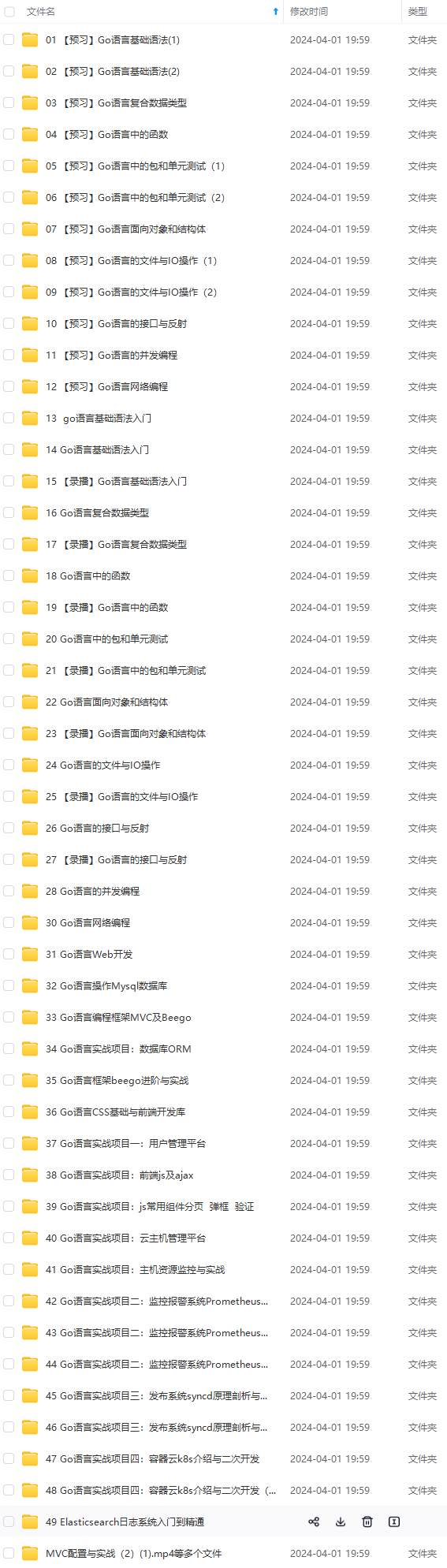

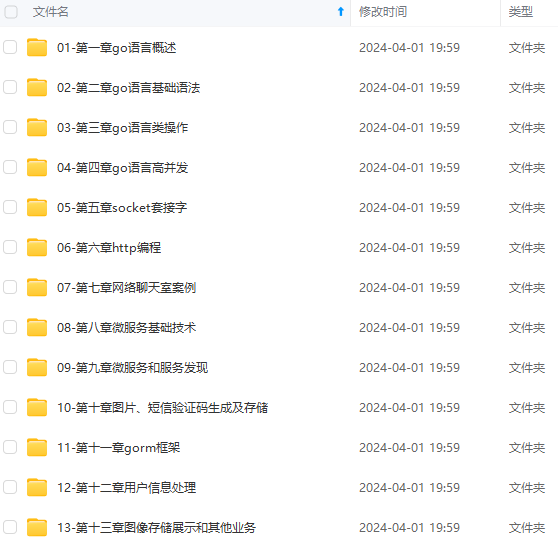

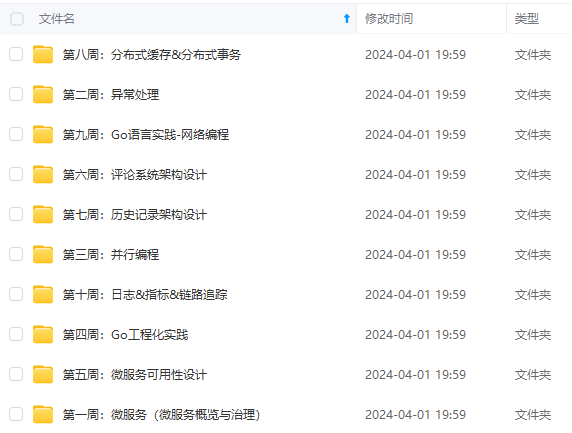

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

如果你需要这些资料,可以添加V获取:vip1024b (备注go)

正文

I. Preface

Several papers were posted on 毕导's WeChat public account:

1. Zheng, Shaodong, and Jinsong Zhao. “A Self-Adaptive Temporal-Spatial Self-Training Algorithm for Semi-Supervised Fault Diagnosis of Industrial Processes.” IEEE Transactions on Industrial Informatics (2021).

2. Wu, Deyang, and Jinsong Zhao. “Process topology convolutional network model for chemical process fault diagnosis.” Process Safety and Environmental Protection 150 (2021): 93-109.

3. Xiang, Shuaiyu, Yiming Bai, and Jinsong Zhao. “Medium-term Prediction of Key Chemical Process Parameter Trend with Small Data.” Chemical Engineering Science (2021): 117361.

It turns out their advisor is a leading researcher as well. His profile is here: https://www.chemeng.tsinghua.edu.cn/info/1094/2385.htm

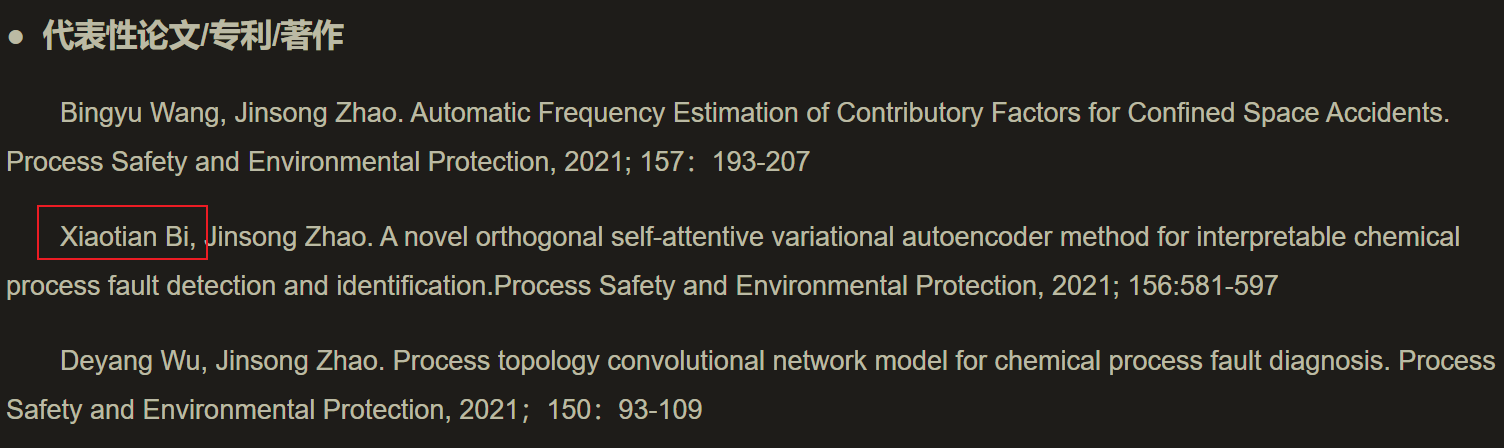

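I also happened to come across 毕导's own paper. He is as impressive as ever: he published it not long after returning to the university.

As the titles suggest, these papers essentially all use deep learning models to detect and identify faults in chemical processes. Work of this kind generally requires a solid understanding of chemical processes, a large accumulated body of process data, and a good command of deep learning.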

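II. Paper content

I am not very familiar with chemical processes myself, so this post gives only a brief reading. The main goal is to see how deep learning is combined with chemical processes.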

Abstract

Industrial processes are becoming increasingly large and complex, thus introducing potential safety risks and requiring an effective approach to maintain safe production. Intelligent process monitoring is critical to prevent losses and avoid casualties in modern industry. As the digitalization of process industry deepens, data-driven methods offer an exciting avenue to address the demands for monitoring complex systems. Nevertheless, many of these methods still suffer from low accuracy and slow response. Besides, most black-box models based on deep learning can only predict the existence of faults, but cannot provide further interpretable analysis, which greatly confines their usage in decision-critical scenarios. In this paper, we propose a novel orthogonal self-attentive variational autoencoder (OSAVA) model for process monitoring, consisting of two components, orthogonal attention (OA) and variational self-attentive autoencoder (VSAE). Specifically, OA is utilized to extract the correlations between different variables and the temporal dependency among different timesteps; VSAE is trained to detect faults through a reconstruction-based method, which employs self-attention mechanisms to comprehensively consider information from all timesteps and enhance detection performance. By jointly leveraging these two models, the OSAVA model can effectively perform fault detection and identification tasks simultaneously and deliver interpretable results. Finally, extensive evaluation on the Tennessee Eastman process (TEP) demonstrates that the proposed OSAVA-based fault detection and identification method shows promising fault detection rate as well as low detection delay and can provide interpretable identification of the abnormal variables, compared with representative statistical methods and state-of-the-art deep learning methods.

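The abstract compares methods by fault detection rate and detection delay. As a minimal sketch of how these two metrics are commonly computed from a sequence of alarm flags: the function names, the sample data, and the convention of measuring delay in samples from fault onset are assumptions for illustration, not taken from the paper.

```python
from typing import Optional, Sequence


def fault_detection_rate(alarms: Sequence[bool], fault_start: int) -> float:
    """Fraction of samples at or after fault onset that raised an alarm."""
    faulty = alarms[fault_start:]
    if not faulty:
        raise ValueError("no samples after fault_start")
    return sum(faulty) / len(faulty)


def detection_delay(alarms: Sequence[bool], fault_start: int) -> Optional[int]:
    """Samples between fault onset and the first alarm (None if never detected)."""
    for i in range(fault_start, len(alarms)):
        if alarms[i]:
            return i - fault_start
    return None


# Hypothetical alarm sequence: fault introduced at index 4, first alarm at index 6.
alarms = [False, False, False, False, False, False, True, True, False, True]
fdr = fault_detection_rate(alarms, fault_start=4)
delay = detection_delay(alarms, fault_start=4)
```

A higher detection rate and a shorter delay are both better. The two can trade off against each other when the alarm threshold is tuned.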

Introduction

Safe production is a continuing concern within modern industry. With the development of automation and digitization, industrial processes can be efficiently controlled by systems like distributed control systems (DCS) and advanced process control (APC) (Shu et al., 2016). However, despite advances in control systems that have made production more intelligent, real-world processes are often rather complicated and inevitably in a fault state, leading to shutdowns, economic losses, injuries, or even catastrophic accidents in severe cases (Venkatasubramanian et al., 2003). Therefore, it is imperative to achieve higher levels of safety, efficiency, quality, and profitability by compensating for the effects of faults occurring in the processes (Qin, 2012, Ge et al., 2013, Weese et al., 2016).

Advances in process safety management have highlighted the importance of intelligent process monitoring (Madakyaru et al., 2017, Fazai et al., 2019). Overall, process monitoring is associated with four procedures: fault detection, fault identification, fault diagnosis, and process recovery (Chiang et al., 2001). Fault detection refers to determining whether a fault has occurred. Fault identification refers to identifying the variables most relevant to the fault. Fault diagnosis requires further specification of the type and cause of the fault. Customarily, fault detection and diagnosis are collectively referred to as FDD. Khan et al. described the development of process safety in terms of risk management (RA) and pointed out that the integration of FDD and RA had effectively improved the level of process safety (Khan et al., 2015). Arunthavanathan et al. elucidated the dialectical interrelation between FDD, RA, and abnormal situation management (ASM), further expanding the connotation of these concepts from safety perspectives (Arunthavanathan et al., 2021). ASM is a centralized and integrated process that implies instant detection of abnormal conditions, timely diagnosis of the root causes, and decision support to operators for the elimination of the faults (Hu et al., 2015, Dai et al., 2016). It has become a consensus in academia and industry that process monitoring including FDD is one of the most critical issues of ASM (Shu et al., 2016). Therefore, building an efficient, robust, and application-worthy process monitoring framework is of supreme importance for process safety.

In recent years, many researchers have enriched the scope of process monitoring from the standpoint of safety and risk management. For example, BahooToroody et al. proposed a process monitoring based signal filtering method for the safety assessment of the natural gas distribution process (BahooToroody et al., 2019). Yin et al. deployed a supervised data mining method for the risk assessment and smart alert of gas kick during the industrial drilling process (Yin et al., 2021). Amin et al. proposed a novel risk assessment method that integrated multivariate process monitoring and a logical dynamic failure prediction model (Amin et al., 2020). In summary, effective and reliable process monitoring can reduce the risk of accidents, keep operators informed of the status of the process, and thereby enhance process safety.

On the other hand, process monitoring methods can be divided into the following categories in line with the way of modeling: the first principle methods, also known as white-box models, and data-driven methods, also known as black-box models (Lam et al., 2017). First principle methods mainly use knowledge, mechanisms, and mathematical equations to describe the process quantitatively. On the contrary, data-driven methods analyze regular patterns of processes only from collected data without prescribing the meaning of faulty states. With the widespread use of big data and the strengthening of computing power, data-driven methods have gradually attracted attention from both academia and industry (Shardt, 2015).

Data-driven process monitoring methods can be roughly grouped into two lines of development: multivariate statistical methods and deep learning methods. Traditional statistical methods mainly include principal component analysis (PCA) (Wise et al., 1990), partial least squares (PLS) (Kresta et al., 1991), independent component analysis(ICA) (Comon, 1994), and Fisher discriminant analysis (FDA) (Chiang et al., 2000), etc. There have been insightful discussions on the merits of these methods (Joe Qin, 2003). In order to cope with the nonlinear and dynamic nature of actual industrial processes, kernel-based and dynamic-based methods are derived (Kaspar and Harmon Ray, 1993, Ku et al., 1995, Rosipal and Trejo, 2001, Cho et al., 2005). Unfortunately, these traditional methods are not well equipped to deal with complex problem and still suffer from low accuracy rates.

With the rapid rise of deep learning, methods based on deep neural networks (DNN) for process monitoring have drawn tremendous attention. Due to its powerful ability to learn the intrinsic regularities and representation hierarchies of data, deep-learning-based methods has become a mainstream area in the field of process monitoring today, with advanced models emerging in recent years. For example, deep belief networks (DBN) and convolutional neural networks (CNN) are used to perform chemical fault diagnosis tasks and have achieved excellent results (Lv et al., 2016, Zhang and Zhao, 2017, Wu and Zhao, 2018). However, these works consider fault diagnosis as a supervised classification task, and thus require the labels of all kinds of faults in advance. Nevertheless, the occurrence of a fault is a low probability event in reality, and even if a faulty event occurs, it is often uncharted. Such a situation makes it impractical to acquire well-labeled fault data and reduces the practical value of deploying supervised learning methods in industrial process monitoring.

By contrast, unsupervised process monitoring methods usually extract features from normal data only, which may have broader application prospects in practice. Among them, autoencoder (AE) and its derivatives are representative neural networks (Hinton, 2006). They have been successfully applied in many scenarios, including image feature extraction (Le, 2013), machine translation (Cheng et al., 2016), anomaly detection (Zhou and Paffenroth, 2017, Al-Qatf et al., 2018, Roy et al., 2018), and fault diagnosis (Zheng and Zhao, 2020). In principle, AE learns to reconstruct the normal data through dimension reduction and regards the data that cannot be reconstructed as anomalies (Längkvist et al., 2014).
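The reconstruction principle described above can be sketched without a neural network. The example below uses a rank-1 linear projection (the first principal direction) as a stand-in for the autoencoder, fits it on normal data only, and flags samples whose reconstruction error exceeds a threshold. The synthetic data, the function names, and the threshold rule (three times the largest training error) are all assumptions for illustration.

```python
import math
import random

random.seed(0)


def fit_linear_autoencoder(data, iters=100):
    """Fit a rank-1 linear encoder/decoder (first principal direction) by power iteration."""
    n, d = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - mean[j] for j in range(d)] for row in data]
    w = [1.0 / math.sqrt(d)] * d
    for _ in range(iters):
        # Multiply w by the unnormalised covariance matrix: X^T (X w).
        proj = [sum(r[j] * w[j] for j in range(d)) for r in centered]
        w = [sum(proj[i] * centered[i][j] for i in range(n)) for j in range(d)]
        norm = math.sqrt(sum(v * v for v in w))
        w = [v / norm for v in w]
    return mean, w


def reconstruction_error(x, mean, w):
    """Squared error after encoding x to one latent value and decoding it back."""
    c = [xj - mj for xj, mj in zip(x, mean)]
    z = sum(cj * wj for cj, wj in zip(c, w))   # encode
    recon = [z * wj for wj in w]               # decode (still centered)
    return sum((cj - rj) ** 2 for cj, rj in zip(c, recon))


# Hypothetical normal operation: two correlated variables, y roughly 2x plus small noise.
normal = [
    [x, 2 * x + random.gauss(0, 0.05)]
    for x in (random.uniform(-1, 1) for _ in range(200))
]
mean, w = fit_linear_autoencoder(normal)

# Threshold derived from normal data only (the factor 3 is an arbitrary assumption).
threshold = 3 * max(reconstruction_error(x, mean, w) for x in normal)


def is_anomaly(x):
    return reconstruction_error(x, mean, w) > threshold


flag_normal = is_anomaly([0.5, 1.0])    # consistent with the learned correlation
flag_fault = is_anomaly([0.5, -1.0])    # breaks the learned correlation
```

The second sample has ordinary values for each variable on its own. It is flagged only because the relationship between the two variables no longer matches what was learned from normal data.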

Despite its wide application in many domains, one disadvantage of AE is that it is solely trained to reduce the reconstruction loss through encoding and decoding, but it does not regularize the latent space, which is prone to overfitting and does not give meaningful representations (Fu et al., 2019). Variational autoencoder (VAE) has a similar structure to AE but works in a perspective of probability and estimates a posterior distribution in latent space (Kingma and Welling, 2014). By introducing such explicit regularization, VAE can be trained to obtain a latent space with good properties such as continuity and completeness, which allows for interpolation and interpretation (Kingma and Welling, 2019). So far, VAE has been extensively applied to process monitoring (Lee et al., 2019, Wang et al., 2019; Zhang et al., 2019b) as well as many other fields (Walker et al., 2016; Zhang et al., 2019a; Pol et al., 2020).
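For reference, the regularization described above is usually written as the evidence lower bound that a VAE maximizes. The notation below (encoder $q_\phi$, decoder $p_\theta$, standard normal prior, latent dimension $J$) is the common textbook form and is an assumption about notation, not copied from the OSAVA paper.

```latex
\mathcal{L}(\theta, \phi; x)
  = \mathbb{E}_{q_\phi(z \mid x)}\bigl[\log p_\theta(x \mid z)\bigr]
  - D_{\mathrm{KL}}\bigl(q_\phi(z \mid x) \,\|\, p(z)\bigr)

% Closed form of the KL term when q_\phi(z \mid x) = \mathcal{N}(\mu, \operatorname{diag}(\sigma^2))
% and p(z) = \mathcal{N}(0, I):
D_{\mathrm{KL}}
  = \frac{1}{2} \sum_{j=1}^{J} \bigl( \mu_j^2 + \sigma_j^2 - \log \sigma_j^2 - 1 \bigr)
```

The first term is the reconstruction objective that a plain AE also optimizes. The second term is the explicit regularizer that pulls the latent distribution toward the prior.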

However, AE and VAE are primarily static deep networks and do not consider the dynamic behavior of data (Längkvist et al., 2014). Since industrial process data is often in a form of complex multivariate time series, it is necessary to capture the temporal correlations and characteristics. To better process such time sequences, the model should be able to comprehensively consider the current time step as well as its relation to time steps from the past. Recurrent neural networks (RNN) use several internal states to memorize variable length sequences of inputs to model the temporal dynamic behavior. Benefiting from this, variational recurrent autoencoder (VRAE) is proposed and applied in many areas in the past couple of years. For example, Park et al. used an LSTM-VAE structure to perform multimodal anomaly detection tasks in a Robot-Assisted Feeding scene (Park et al., 2017). Lin et al. proposed a hybrid model of VAE and LSTM as an unsupervised approach for anomaly detection in time series (Lin et al., 2020). Cheng expounded a novel fault detection method based on VAE and GRU, which achieved both higher detection accuracy and lower detection delay than conventional methods on the Tennessee Eastman process (Cheng et al., 2019).

In such an RNN-based encoder-decoder structure, the encoder needs to gather all input sequences into one integrated feature. Unfortunately, there are certain concerns associated with the ability of modeling long input sequences of RNN-based methods (Cho et al., 2014). Especially in industrial processes, downstream data often have a strong dependence on long-term upstream data. To address this challenge, an attention-based encoder-decoder structure is adopted that allows the model to give different weights across all time steps of the sequence and automatically attend to the more important parts (Bahdanau et al., 2016). For example, Aliabadi et al. used an attention-based RNN model for multistep prediction of chemical process status which showed superior performance over conventional machine learning techniques (Aliabadi et al., 2020). Mu et al. introduced a temporal attention mechanism to augment LSTM and focus on local temporal information, resulting in a high-quality fault classification rate on the Tennessee Eastman process (Mu et al., 2021). Up till now, the attention mechanism is usually used in combination with RNNs or CNNs in most cases. However, recent work has proved that outstanding performance can be achieved on many tasks by using the attention mechanism only (Vaswani et al., 2017) and the application of a pure attention mechanism in industrial process monitoring has received scant attention in research literature.
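To make the "different weights across all time steps" idea concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It uses identity projections (queries, keys, and values are all the raw input), which is a simplification: real models such as the one in Vaswani et al. learn separate projection matrices. The toy sequence is hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(seq):
    """Scaled dot-product self-attention with identity projections (Q = K = V = seq).

    seq: list of T time steps, each a list of d features.
    Returns (outputs, weights); weights[i][j] is how much step i attends to step j.
    """
    d = len(seq[0])
    scale = math.sqrt(d)
    weights = []
    for q in seq:
        scores = [sum(qa * ka for qa, ka in zip(q, k)) / scale for k in seq]
        weights.append(softmax(scores))
    outputs = [
        [sum(w[j] * seq[j][f] for j in range(len(seq))) for f in range(d)]
        for w in weights
    ]
    return outputs, weights


# Hypothetical 4-step, 2-feature sequence; steps 0 and 3 point in similar directions.
seq = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.1]]
outputs, weights = self_attention(seq)
```

Every output step is a weighted mix of all input steps. A distant step can therefore contribute directly, without being squeezed through a single recurrent state.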

From the review above, it is observed that the methods adopted in the field of process monitoring have been evolving in recent years along with the enrichment of state-of-the-art deep learning models. In recent years, a variety of novel and powerful deep networks have imparted greater representation capability to deep learning, thus overcoming the drawbacks of traditional methods. These performance advances also provide a solid backbone for process safety improvements (Dogan and Birant, 2021). Moreover, process safety relies on a global and proactive awareness of the process, which may not be achieved by one single approach or model. As the optimization space for the performance of single-task-oriented methods is gradually decreasing, it is also expected to broaden the comprehensiveness of process monitoring methods through multiple techniques. Several recent studies have focused on integrating single-task-oriented methods to obtain hybrid methods for fault detection, identification, diagnosis, and their contribution to process safety (Ge, 2017, Xiao et al., 2021, Amin et al., 2021). For example, Amin et al. utilized a hybrid method based on PCA and a Bayesian network to detect and diagnosis the faults at one time (Amin et al., 2018). Deng et al. used a serial PCA to perform fault detection and identification on nonlinear processes, which surpassed the performance of the KPCA method (Deng et al., 2018). The combination of attention mechanisms and deep models is also an effective hybrid method to perform multiple tasks in process monitoring. For example, Li proposed a nonlinear process monitoring method based on 1D convolution and self-attention mechanism to adaptively extract the features of both global and local inter-variable structures, which is validated on the Tennessee Eastman process for fault detection and fault identification (Li et al., 2021). 
The convergence of attention mechanisms and deep networks allows for better extraction of interrelationships between data, thus making the data-driven methods more rigorous and reliable for process safety. However, this exciting function of the attention mechanisms is still under-explored in the field of process monitoring.

Despite the satisfactory performance of deep learning methods in fault detection, the high accuracy comes at the expense of high abstraction. It is alarming that the complex network structure and the massive number of parameters may make the model an incomprehensible black box, thus hindering human understanding of how deep neural networks make judgments upon the occurrence of faults. Recently, how to improve the interpretability of AI has become a lively topic of discussion (Chakraborty et al., 2017, Zhang and Zhu, 2018). In the field of process monitoring and fault detection, we should not stop at assigning neural networks to give binary judgments about the existence of a fault but rather empower the model to help humans comprehend why certain judgments or predictions have been made (Kim et al., 2016). So far, many paths to this vision have been explored. For example, Bao et al. proposed a sparse dimensionality reduction method called SGLPP, which extract sparse transformation vectors to reveal meaningful correlations between variables and further construct variable contribution plots to produce interpretable fault diagnosis results (Bao et al., 2016). Wu and Zhao developed a PTCN method by incorporating process topology knowledge into graph convolutional neural networks, conducting a more rational and understandable feature extraction than other data-driven fault diagnosis models (Wu and Zhao, 2021). In summary, the predominant trails to improve the interpretability of a model include using intrinsically interpretable models, such as decision tree or Bayesian network; providing summary statistics for each feature, such as variable contribution plot; and revealing the practical implications of the internal parameters of the model, such as weight value of the attention mechanism (Ribeiro et al., 2016).

In recent studies, the attention mechanism has cut a conspicuous figure in conferring interpretability to deep neural networks in many fields (Mott et al., 2019). For example, in the multivariate process monitoring problem, there are two most critical points to consider: the causal relationship between variables and the temporal dependency along the time sequence dimension. By examining the weights of the attention mechanism, we can understand which part of data the model is attending to, which is beneficial to explain the internal parameters of a deep model. Gangopadhyay et al. proposed a spatiotemporal attention module to enhance understanding the contributions of different features on time series prediction outputs. The learned attention weights were also validated from a domain knowledge perspective (Gangopadhyay et al., 2020). Wang et al. designed a multi-attention 1D convolutional neural network, which can fully consider characteristics of rolling bearing faults to enhance fault-related features and ignore irrelevant features (Wang et al., 2020). It can be perceived that leveraging the attention mechanism between variables encourages better interpretability of the model and better evaluation of contributions of multiple variables. This could help isolate abnormal variables and performing fault identification tasks after a fault is detected, which in turn dramatically facilitates troubleshooting efficiency for field operators and improves system safety. However, to the best of our knowledge, this great superiority of the attention mechanism has yet received insufficient focus in chemical processes.

Motivated by the above observations, in this paper we propose a novel attention-based model — orthogonal self-attentive variational autoencoder (OSAVA for brevity) for industrial process monitoring. The OSAVA model consists of two parts: orthogonal attention (OA) and variational self-attentive autoencoder (VSAE). The OA model includes two independent branches: a spatial self-attention layer and a temporal self-attention layer. The former is used to extract the causal relationships between multiple process variables, while the latter focuses on the temporal dependency along the time dimension. The VSAE component utilizes a self-attention mechanism to aggregate information across all time steps and reconstructs the output of OA. Combining these two procedures, it is possible to perform fault detection and identification tasks simultaneously, rendering an improvement on the interpretability for process monitoring as well. We compare the OSAVA-based fault detection and identification method with representative statistical methods and DNN methods using the famous Tennessee Eastman Process. The results show that the proposed method can outperform existing methods by large margins and also highlights abnormal variables by assigning large attention weights for better interpretability. For industrial processes, it can take a long time for operators to determine the location and cause of an alarm after it has occurred. Our proposed method can detect the presence of a fault at an early stage and quickly isolate the fault variables, which will be effective in practical applications to enhance process safety and contribute to the management of abnormal situations.
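The two OA branches can be pictured as the same attention computation applied along the two axes of a data window: across time steps for the temporal branch, and across variables for the spatial branch. The sketch below is only an illustration of that idea. It uses untrained dot products on raw values, a made-up window, and hypothetical function names, whereas the paper's layers are learned.

```python
import math


def attention_weights(rows):
    """Row-wise softmax of scaled dot products between every pair of rows."""
    scale = math.sqrt(len(rows[0]))
    out = []
    for q in rows:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in rows]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


# Hypothetical window: T = 3 time steps (rows) x V = 4 process variables (columns).
window = [
    [0.1, 0.2, 0.1, 3.0],
    [0.0, 0.1, 0.2, 3.2],
    [0.1, 0.0, 0.1, 2.9],
]

temporal = attention_weights(window)             # T x T: time step vs. time step
spatial = attention_weights(transpose(window))   # V x V: variable vs. variable

# Attention each variable receives, averaged over all querying variables.
received = [sum(row[j] for row in spatial) / len(spatial) for j in range(len(spatial))]
most_attended = max(range(len(received)), key=received.__getitem__)
```

In this toy window the fourth variable receives the most attention, simply because its values dominate the dot products. In a trained model, inspecting the spatial weights in a similar way is what would point operators to the variables most relevant to a fault.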
