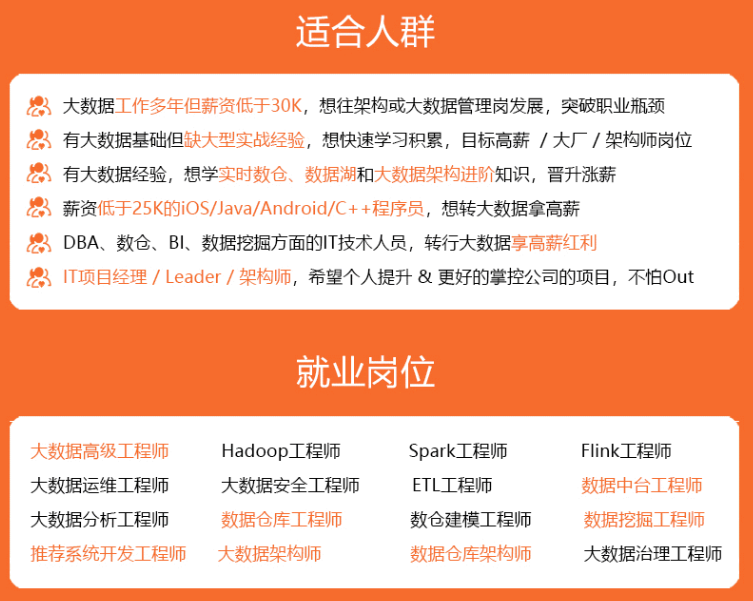

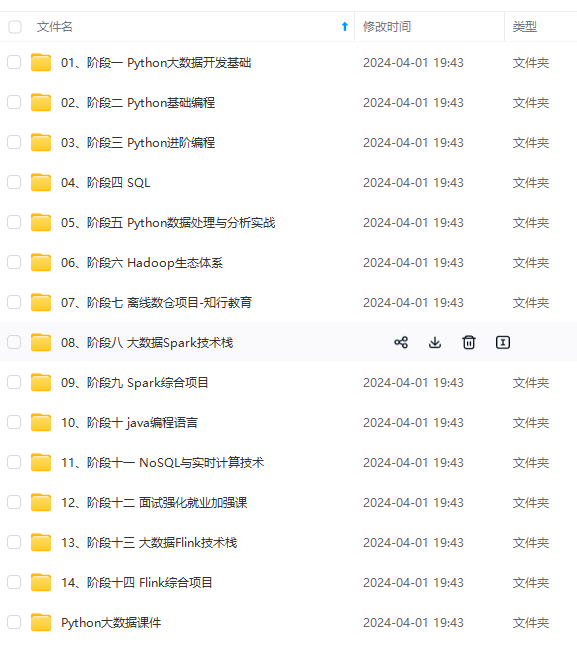

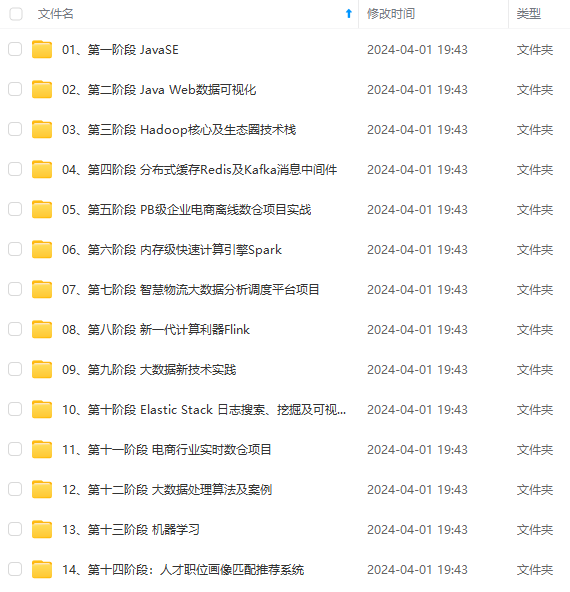

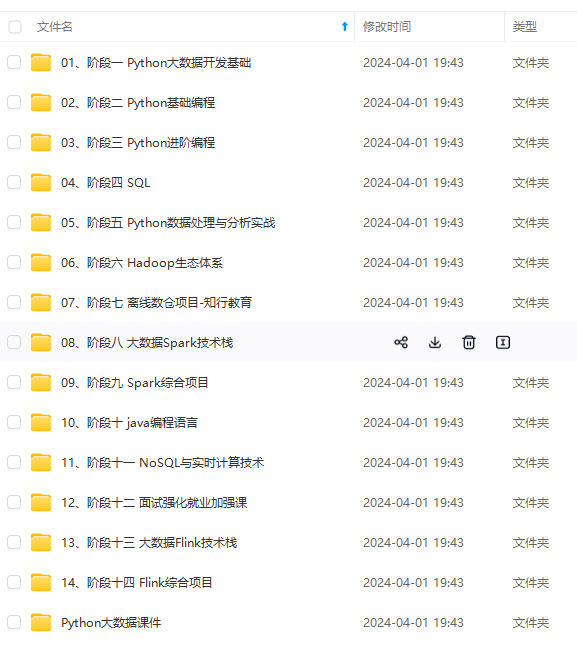

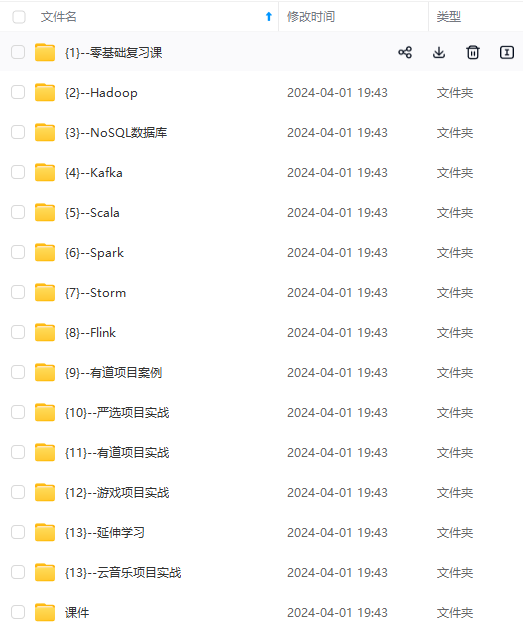

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

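```xml
<property>
    <name>hive.querylog.location</name>
    <value>/user/hadoop/hive/logs</value>
    <description>Location of Hive run time structured log file</description>
</property>
<property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
    <description>
      Enforce metastore schema version consistency.
      True: Verify that version information stored in the metastore is compatible with the one from the Hive jars. Also disable automatic
            schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures
            proper metastore schema migration. (Default)
      False: Warn if the version information stored in the metastore doesn't match the one from the Hive jars.
    </description>
</property>
<property>
    <name>hive.metastore.db.type</name>
    <value>mysql</value>
    <description>
      Expects one of [derby, oracle, mysql, mssql, postgres].
      Type of database used by the metastore. Information schema &amp; JDBCStorageHandler depend on it.
    </description>
</property>
<property>
    <name>javax.jdo.option.ConnectionURL</name>
    <!-- In XML the '&' between URL parameters must be written as &amp; -->
    <value>jdbc:mysql://hadoop001:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>
</property>
<property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
</property>
<property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
    <description>Username to use against metastore database</description>
</property>
<property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
    <description>Password to use against metastore database</description>
</property>
<property>
    <name>datanucleus.schema.autoCreateAll</name>
    <value>true</value>
    <description>Auto creates necessary schema on a startup if one doesn't exist. Set this to false, after creating it once. To enable auto create also set hive.metastore.schema.verification=false. Auto creation is not recommended for production use cases, run schematool command instead.</description>
</property>
<property>
    <name>hive.server2.thrift.bind.host</name>
    <value>hadoop001</value>
    <description>Bind host on which to run the HiveServer2 Thrift service.</description>
</property>
<property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
    <description>Port number of HiveServer2 Thrift interface when hive.server2.transport.mode is 'binary'.</description>
</property>
<property>
    <name>hive.metastore.uris</name>
    <value></value>
    <description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>
</property>
<property>
    <name>hive.server2.logging.operation.log.location</name>
    <value>/usr/local/hadoop/hive/tmp/operation_logs</value>
    <description>Top level directory where operation logs are stored if logging functionality is enabled</description>
</property>
<property>
    <name>hive.server2.webui.host</name>
    <value>hadoop001</value>
    <description>The host address the HiveServer2 WebUI will listen on</description>
</property>
<property>
    <name>hive.server2.webui.port</name>
    <value>10002</value>
    <description>The port the HiveServer2 WebUI will listen on. This can be set to 0 or a negative integer to disable the web UI</description>
</property>
<property>
    <name>hive.server2.webui.max.threads</name>
    <value>50</value>
    <description>The max HiveServer2 WebUI threads</description>
</property>
<property>
    <name>hive.server2.webui.use.ssl</name>
    <value>false</value>
    <description>Set this to true for using SSL encryption for HiveServer2 WebUI.</description>
</property>
<property>
    <name>hive.server2.thrift.client.user</name>
    <value>root</value>
    <description>Username to use against thrift client</description>
</property>
<property>
    <name>hive.server2.thrift.client.password</name>
    <value>root</value>
    <description>Password to use against thrift client</description>
</property>
```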
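As a quick sanity check of the file above, here is a minimal JDK-only sketch (no Hadoop or Hive classes) that reads the `<name>`/`<value>` pairs from a hive-site.xml string. It shows why the `&` in the MySQL URL has to be written as `&amp;`. An XML parser rejects a bare `&` and decodes `&amp;` back to a plain `&`. Hive itself loads the file through Hadoop's `Configuration` class, and the class name `HiveSiteReader` is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class HiveSiteReader {

    /** Collects <property><name>/<value> pairs from a hive-site.xml document, in file order. */
    public static Map<String, String> parse(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        Map<String, String> props = new LinkedHashMap<>();
        NodeList nodes = doc.getElementsByTagName("property");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element property = (Element) nodes.item(i);
            String name = childText(property, "name");
            if (name != null) {
                // An empty <value></value> (like hive.metastore.uris) is stored as "".
                String value = childText(property, "value");
                props.put(name, value == null ? "" : value);
            }
        }
        return props;
    }

    /** Trimmed text of the first child element with the given tag, or null if absent. */
    private static String childText(Element parent, String tag) {
        NodeList list = parent.getElementsByTagName(tag);
        return list.getLength() == 0 ? null : list.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        String xml = "<configuration>"
                + "<property><name>javax.jdo.option.ConnectionURL</name>"
                + "<value>jdbc:mysql://hadoop001:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>"
                + "</property>"
                + "<property><name>hive.metastore.uris</name><value></value></property>"
                + "</configuration>";
        Map<String, String> props = parse(xml);
        // Prints the URL with a plain '&': ...createDatabaseIfNotExist=true&useSSL=false
        System.out.println(props.get("javax.jdo.option.ConnectionURL"));
        System.out.println("hive.metastore.uris empty: " + props.get("hive.metastore.uris").isEmpty());
    }
}
```

If the `&` were left unescaped, `parse` would throw a `SAXParseException` instead of returning the map. Hive fails to load an unescaped hive-site.xml for the same reason.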

V. Initialize Hive

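1. Copy the MySQL JDBC driver JAR into the Hive lib directory

Download only one of the two Connector/J versions below. Connector/J 5.1 provides the driver class com.mysql.jdbc.Driver, while 8.0 provides com.mysql.cj.jdbc.Driver. javax.jdo.option.ConnectionDriverName should match the JAR you choose.

```shell
cd $HIVE_HOME/lib
# Connector/J 5.1.47
wget https://repo1.maven.org/maven2/mysql/mysql-connector-java/5.1.47/mysql-connector-java-5.1.47.jar
# or Connector/J 8.0.16
wget https://repo1.maven.org/maven2/mysql/mysql-connector-java/8.0.16/mysql-connector-java-8.0.16.jar
```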
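The value of javax.jdo.option.ConnectionDriverName follows directly from the JAR you downloaded. Connector/J 5.x ships com.mysql.jdbc.Driver, and newer releases such as 8.0.x ship com.mysql.cj.jdbc.Driver. Connector/J 8.0 still accepts the old name but logs a deprecation warning. Here is a tiny sketch of that mapping. The class and method names are hypothetical.

```java
public class MySqlDriverChooser {

    /**
     * Maps a Connector/J version string to its JDBC driver class:
     * 5.x and earlier use com.mysql.jdbc.Driver, newer releases (e.g. 8.0.x) use com.mysql.cj.jdbc.Driver.
     */
    public static String driverClassFor(String connectorVersion) {
        int major = Integer.parseInt(connectorVersion.split("\\.")[0]);
        return major <= 5 ? "com.mysql.jdbc.Driver" : "com.mysql.cj.jdbc.Driver";
    }

    public static void main(String[] args) {
        for (String version : new String[] {"5.1.47", "8.0.16"}) {
            System.out.println("mysql-connector-java-" + version + ".jar -> " + driverClassFor(version));
        }
    }
}
```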

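2. Create a MySQL user and grant privileges

The password is set only in CREATE USER. MySQL 8.0 no longer accepts IDENTIFIED BY inside GRANT.

```sql
-- Create the user hive with password hive
CREATE USER 'hive'@'%' IDENTIFIED BY 'hive';
-- Grant all privileges to the hive user
GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%' WITH GRANT OPTION;
-- Reload the privilege tables
FLUSH PRIVILEGES;
```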

3. Start ZooKeeper and the Hadoop cluster

```shell
zkServer.sh start
hdfs --daemon start zkfc
start-all.sh
```

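4. Create the Hive directories in HDFS and grant group write permission

```shell
# -p creates missing parent directories and does not fail if the directory already exists
hadoop fs -mkdir -p /tmp
hadoop fs -mkdir -p /user/hive/warehouse
hadoop fs -chmod g+w /tmp
hadoop fs -chmod g+w /user/hive/warehouse
```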
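The JDK's POSIX permission helpers can illustrate what `chmod g+w` changes. Assume the directories start at mode 755 (rwxr-xr-x), which follows from the HDFS default umask of 022. The group gains write access and the mode becomes 775. This sketch works on permission strings only and does not touch HDFS. `GroupWriteDemo` is a hypothetical name.

```java
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.HashSet;
import java.util.Set;

public class GroupWriteDemo {

    /** Applies the equivalent of "chmod g+w" to a symbolic mode string such as "rwxr-xr-x". */
    public static String addGroupWrite(String perms) {
        // Copy into a HashSet so the set is guaranteed to be modifiable.
        Set<PosixFilePermission> set = new HashSet<>(PosixFilePermissions.fromString(perms));
        set.add(PosixFilePermission.GROUP_WRITE);
        return PosixFilePermissions.toString(set);
    }

    public static void main(String[] args) {
        // Assumed starting mode 755 for a newly created HDFS directory.
        System.out.println(addGroupWrite("rwxr-xr-x")); // rwxrwxr-x, i.e. 775
    }
}
```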

5. Initialize the Hive metastore database

```shell
schematool -dbType mysql -initSchema
```

6. Check the database created by the initialization
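One way to check the result of schematool is to query the metastore database directly over JDBC. The sketch below takes its connection values from the hive-site.xml above (hadoop001:3306, database hive, user and password hive). It also assumes the Connector/J JAR is on the classpath. It reads the VERSION table, where schematool records the schema version, and the DBS table, which lists Hive databases and their locations. Both tables belong to the standard metastore schema, but column sets can differ between Hive versions. `MetastoreInspector` is a hypothetical name.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

public class MetastoreInspector {

    // Connection values copied from hive-site.xml; adjust for your environment.
    public static final String URL = "jdbc:mysql://hadoop001:3306/hive?useSSL=false";
    public static final String USER = "hive";
    public static final String PASSWORD = "hive";

    // Schema version written by schematool, and the databases registered in the metastore.
    public static final String VERSION_SQL = "SELECT SCHEMA_VERSION FROM VERSION";
    public static final String DBS_SQL = "SELECT NAME, DB_LOCATION_URI FROM DBS";

    public static void main(String[] args) throws SQLException {
        // JDBC 4 drivers such as Connector/J register themselves when found on the classpath.
        try (Connection conn = DriverManager.getConnection(URL, USER, PASSWORD);
             Statement stmt = conn.createStatement()) {
            try (ResultSet rs = stmt.executeQuery(VERSION_SQL)) {
                while (rs.next()) {
                    System.out.println("schema version: " + rs.getString(1));
                }
            }
            try (ResultSet rs = stmt.executeQuery(DBS_SQL)) {
                while (rs.next()) {
                    System.out.println(rs.getString(1) + " -> " + rs.getString(2));
                }
            }
        }
    }
}
```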

VI. Start Hive

1. Start the Hive CLI

```shell
hive
```

```sql
SHOW DATABASES;
CREATE DATABASE db01;
USE db01;
-- Show the current database in the CLI prompt
set hive.cli.print.current.db=true;
CREATE TABLE pokes (foo INT, bar STRING);
CREATE TABLE invites (foo INT, bar STRING) PARTITIONED BY (ds STRING);
SHOW TABLES;
-- List tables whose names end in 's'
SHOW TABLES '.*s';
DESCRIBE invites;
```
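The Hive Getting Started guide describes the pattern in `SHOW TABLES '.*s'` as a Java regular expression, which here means names ending in "s". The sketch below mimics that matching and assumes the whole table name must match. The Hive DDL manual documents a narrower wildcard syntax for this pattern, so treat the sketch as an approximation, not Hive's exact implementation. The third table name is hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

public class ShowTablesPattern {

    /** Keeps the table names that fully match the given Java regular expression. */
    public static List<String> filter(List<String> tables, String regex) {
        Pattern pattern = Pattern.compile(regex);
        return tables.stream()
                .filter(name -> pattern.matcher(name).matches())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // The two tables created above plus a hypothetical one that does not end in 's'.
        List<String> tables = Arrays.asList("invites", "pokes", "sqoop_user");
        System.out.println(filter(tables, ".*s")); // [invites, pokes]
    }
}
```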

2. Browse the Hive directories in HDFS

Open the NameNode web UI file browser at:

http://hadoop001:9870/explorer.html#/user/hive/warehouse/db01.db
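The directories shown in the file browser follow Hive's default layout for managed tables. Each database gets a `<db>.db` directory under the warehouse (the default database does not). Each table gets a subdirectory, and each partition a `col=value` subdirectory below that. This sketch assumes hive.metastore.warehouse.dir is left at its default /user/hive/warehouse and that partition values need no escaping. The class name and the partition value 2008-08-15 are hypothetical.

```java
public class WarehousePaths {

    // Assumed default of hive.metastore.warehouse.dir, matching the directory created earlier.
    public static final String WAREHOUSE = "/user/hive/warehouse";

    /** Directory of a managed table: <warehouse>/<db>.db/<table>, or <warehouse>/<table> for "default". */
    public static String tableDir(String db, String table) {
        String base = "default".equals(db) ? WAREHOUSE : WAREHOUSE + "/" + db + ".db";
        return base + "/" + table;
    }

    /** Partition subdirectory such as .../invites/ds=2008-08-15 (no escaping of special characters). */
    public static String partitionDir(String db, String table, String column, String value) {
        return tableDir(db, table) + "/" + column + "=" + value;
    }

    public static void main(String[] args) {
        System.out.println(tableDir("db01", "pokes"));
        System.out.println(partitionDir("db01", "invites", "ds", "2008-08-15"));
    }
}
```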

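3. Start the HiveServer2 service

```shell
nohup hiveserver2 > /dev/null 2>&1 &
```

HiveServer2 supports concurrent connections from multiple users and clients, and it also accepts JDBC connections:

JDBC driver: org.apache.hive.jdbc.HiveDriver

JDBC URL: jdbc:hive2://hadoop001:10000/dbname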
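Because `nohup` starts HiveServer2 in the background, the JDBC URL above may refuse connections until the service finishes starting. This sketch polls the Thrift port until it accepts TCP connections. The host and port come from hive.server2.thrift.bind.host and hive.server2.thrift.port above. The class and method names are hypothetical. An open port only means the listener is up, not that queries will succeed.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class WaitForHiveServer2 {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs milliseconds. */
    public static boolean isPortOpen(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host could not be resolved.
            return false;
        }
    }

    /** Polls once per second until the port opens or maxWaitMs elapses. */
    public static boolean waitForPort(String host, int port, long maxWaitMs) throws InterruptedException {
        long deadline = System.currentTimeMillis() + maxWaitMs;
        while (System.currentTimeMillis() < deadline) {
            if (isPortOpen(host, port, 1000)) {
                return true;
            }
            Thread.sleep(1000);
        }
        return false;
    }

    public static void main(String[] args) throws InterruptedException {
        boolean up = waitForPort("hadoop001", 10000, 120_000);
        System.out.println(up ? "HiveServer2 is accepting connections" : "HiveServer2 did not come up in time");
    }
}
```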

4. View the Hive log

```shell
tail -n 300 /tmp/root/hive.log
```
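The path /tmp/root/hive.log comes from Hive's default log4j2 settings. Those settings place hive.log under `${java.io.tmpdir}/${user.name}`, which becomes /tmp/root when running as root. This sketch resolves that default path and prints the last lines, like `tail -n 300`. It assumes the default hive-log4j2.properties is in use. `HiveLogTail` is a hypothetical name.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

public class HiveLogTail {

    /** Default Hive log file: ${java.io.tmpdir}/${user.name}/hive.log. */
    public static Path defaultLogPath() {
        return Paths.get(System.getProperty("java.io.tmpdir"), System.getProperty("user.name"), "hive.log");
    }

    /** Returns the last n lines of a file, like tail -n, holding at most n lines in memory. */
    public static List<String> tail(Path file, int n) throws IOException {
        List<String> result = new ArrayList<>();
        if (n <= 0) {
            return result;
        }
        Deque<String> window = new ArrayDeque<>(n);
        try (BufferedReader reader = Files.newBufferedReader(file, StandardCharsets.UTF_8)) {
            String line;
            while ((line = reader.readLine()) != null) {
                if (window.size() == n) {
                    window.removeFirst(); // drop the oldest line so only n remain
                }
                window.addLast(line);
            }
        }
        result.addAll(window);
        return result;
    }

    public static void main(String[] args) throws IOException {
        // An explicit path can be passed as the first argument.
        Path log = args.length > 0 ? Paths.get(args[0]) : defaultLogPath();
        for (String line : tail(log, 300)) {
            System.out.println(line);
        }
    }
}
```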

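5. Open the HiveServer2 web UI

Given the hive.server2.webui.host and hive.server2.webui.port settings above, the HiveServer2 web UI is at http://hadoop001:10002. It also shows the Hive logs and configuration.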

6. Connect to Hive with Beeline

`!connect` prompts for a user name and password.

```shell
beeline
```

```
!connect jdbc:hive2://hadoop001:10000
SHOW DATABASES;
SHOW TABLES;
SELECT * FROM sqoop_user;
!quit
```

VII. Connecting to Hive from Code

1. Hive JDBC connection

Official reference: HiveClient - Apache Hive - Apache Software Foundation

```java
import java.sql.SQLException;
import java.sql.Connection;
import java.sql.ResultSet;
import java.sql.Statement;
import java.sql.DriverManager;

public class TestHiveQuery {

    private static final String driverName = "org.apache.hive.jdbc.HiveDriver";

    public static void main(String[] args) throws SQLException {
        try {
            Class.forName(driverName);
        } catch (ClassNotFoundException e) {
            // The Hive JDBC driver (hive-jdbc) is not on the classpath
            e.printStackTrace();
            System.exit(1);
        }
        // ...
    }
}
```
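For a complete query round trip, here is a sketch that connects to the HiveServer2 instance started above and lists the tables in db01. It assumes hive-jdbc and its dependencies are on the classpath. It also assumes HiveServer2 uses the default NONE authentication, so the password is not checked. The user name root mirrors hive.server2.thrift.client.user. `HiveJdbcQuery` and `hiveUrl` are hypothetical names.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.Statement;

public class HiveJdbcQuery {

    private static final String DRIVER = "org.apache.hive.jdbc.HiveDriver";

    /** Builds a HiveServer2 JDBC URL of the form jdbc:hive2://host:port/db. */
    public static String hiveUrl(String host, int port, String db) {
        return "jdbc:hive2://" + host + ":" + port + "/" + db;
    }

    public static void main(String[] args) throws Exception {
        // Fails with ClassNotFoundException if hive-jdbc is missing from the classpath.
        Class.forName(DRIVER);
        String url = hiveUrl("hadoop001", 10000, "db01");
        // Assumption: NONE authentication, so an empty password is accepted.
        try (Connection conn = DriverManager.getConnection(url, "root", "");
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("SHOW TABLES")) {
            while (rs.next()) {
                System.out.println(rs.getString(1));
            }
        }
    }
}
```

try-with-resources closes the result set, statement and connection in reverse order, even when the query throws. The manual cleanup used in older JDBC examples is unnecessary here.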
