        self.conv1 = layers.Conv2D(filter_num, (3, 3), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')
        self.conv2 = layers.Conv2D(filter_num, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()

        # When the block downsamples, a 1x1 conv reshapes the shortcut to match.
        if stride != 1:
            self.downsample = Sequential()
            self.downsample.add(layers.Conv2D(filter_num, (1, 1), strides=stride))
        else:
            self.downsample = lambda x: x

    def call(self, inputs, training=None):
        out = self.conv1(inputs)
        out = self.bn1(out, training=training)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out, training=training)

        # Add the (possibly downsampled) input back onto the main path.
        identity = self.downsample(inputs)
        output = layers.add([out, identity])
        output = tf.nn.relu(output)
        return output
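The addition in `call` only works when the main path and the shortcut produce the same shape. The following is a minimal sketch in plain Python, not part of the original code; the helper names `same_conv_out`, `valid_conv_out` and `basic_block_shapes` are made up for illustration. It applies the standard output-size formulas for `padding='same'` and for the Keras default `padding='valid'` to show that the strided 3x3 convolution and the 1x1 shortcut convolution shrink the feature map by the same amount.

```python
import math


def same_conv_out(size, stride):
    """Output length of a conv with padding='same': ceil(size / stride)."""
    return math.ceil(size / stride)


def valid_conv_out(size, kernel, stride):
    """Output length of a conv with padding='valid' (the Keras default)."""
    return (size - kernel) // stride + 1


def basic_block_shapes(height, width, filter_num, stride):
    """Return (main_path_shape, shortcut_shape) for a BasicBlock-style layer."""
    # Main path: 3x3 'same' conv with the given stride, then 3x3 'same' conv, stride 1.
    h = same_conv_out(same_conv_out(height, stride), 1)
    w = same_conv_out(same_conv_out(width, stride), 1)
    main = (h, w, filter_num)
    # Shortcut: 1x1 'valid' conv with the same stride and number of filters.
    shortcut = (valid_conv_out(height, 1, stride),
                valid_conv_out(width, 1, stride),
                filter_num)
    return main, shortcut


main, shortcut = basic_block_shapes(56, 56, 128, stride=2)
print(main, shortcut)
```

For a 56 x 56 input with `stride=2` and 128 filters, both branches come out as `(28, 28, 128)`, so `layers.add` can combine them.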

Second residual module

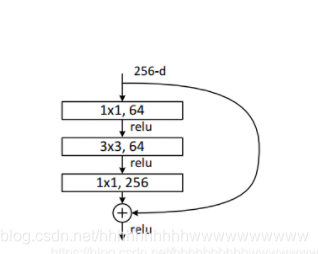

class Block(layers.Layer):
    def __init__(self, filters, downsample=False, stride=1):
        super(Block, self).__init__()
        self.downsample = downsample

        # Bottleneck: 1x1 reduce -> 3x3 -> 1x1 expand to 4 * filters channels.
        self.conv1 = layers.Conv2D(filters, (1, 1), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')
        self.conv2 = layers.Conv2D(filters, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()
        self.conv3 = layers.Conv2D(4 * filters, (1, 1), strides=1, padding='same')
        self.bn3 = layers.BatchNormalization()

        # Projection shortcut so the input matches the main path's output shape.
        if self.downsample:
            self.shortcut = Sequential()
            self.shortcut.add(layers.Conv2D(4 * filters, (1, 1), strides=stride))
            self.shortcut.add(layers.BatchNormalization(axis=3))

    def call(self, inputs, training=None):
        out = self.conv1(inputs)
        out = self.bn1(out, training=training)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out, training=training)
        out = self.relu(out)
        out = self.conv3(out)
        out = self.bn3(out, training=training)

        if self.downsample:
            shortcut = self.shortcut(inputs, training=training)
        else:
            shortcut = inputs

        output = layers.add([out, shortcut])
        output = tf.nn.relu(output)
        return output
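One way to see why the deeper variants use this 1x1, 3x3, 1x1 layout is to count convolution weights. The sketch below is plain Python and only an illustration: the function names are hypothetical, batch-normalization parameters and the projection shortcut are ignored, and a bias per filter is assumed because that is the Keras `Conv2D` default.

```python
def conv_params(kernel, in_ch, out_ch, bias=True):
    """Weights in a square-kernel Conv2D layer (Keras adds a bias by default)."""
    return kernel * kernel * in_ch * out_ch + (out_ch if bias else 0)


def basic_block_conv_params(channels):
    """Two 3x3 convs, as in BasicBlock with stride 1."""
    return 2 * conv_params(3, channels, channels)


def bottleneck_conv_params(filters):
    """1x1 reduce, 3x3, 1x1 expand, as in Block without a projection shortcut."""
    wide = 4 * filters  # number of channels entering and leaving the block
    return (conv_params(1, wide, filters)
            + conv_params(3, filters, filters)
            + conv_params(1, filters, wide))


bottleneck = bottleneck_conv_params(64)   # Block(64) on a 256-channel input
plain = basic_block_conv_params(256)      # two 3x3 convs kept at 256 channels
print(bottleneck, plain)
```

Under these assumptions the bottleneck needs 70,016 convolution weights, while two plain 3x3 convolutions at 256 channels need 1,180,160, roughly 17 times as many.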

ResNet18, ResNet34
==================

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential


# First residual module
class BasicBlock(layers.Layer):
    def __init__(self, filter_num, stride=1):
        super(BasicBlock, self).__init__()
        self.conv1 = layers.Conv2D(filter_num, (3, 3), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')
        self.conv2 = layers.Conv2D(filter_num, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()

        # When the block downsamples, a 1x1 conv reshapes the shortcut to match.
        if stride != 1:
            self.downsample = Sequential()
            self.downsample.add(layers.Conv2D(filter_num, (1, 1), strides=stride))
        else:
            self.downsample = lambda x: x

    def call(self, inputs, training=None):
        out = self.conv1(inputs)
        out = self.bn1(out, training=training)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out, training=training)

        # Add the (possibly downsampled) input back onto the main path.
        identity = self.downsample(inputs)
        output = layers.add([out, identity])
        output = tf.nn.relu(output)
        return output

class ResNet(keras.Model):
    def __init__(self, layer_dims, num_classes=10):
        super(ResNet, self).__init__()

        # Preprocessing (stem) layers
        self.padding = keras.layers.ZeroPadding2D((3, 3))
        self.stem = Sequential([
            layers.Conv2D(64, (7, 7), strides=(2, 2)),
            layers.BatchNormalization(),
            layers.Activation('relu'),
            layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='same')
        ])

        # Residual stages
        self.layer1 = self.build_resblock(64, layer_dims[0])
        self.layer2 = self.build_resblock(128, layer_dims[1], stride=2)
        self.layer3 = self.build_resblock(256, layer_dims[2], stride=2)
        self.layer4 = self.build_resblock(512, layer_dims[3], stride=2)

        # Global average pooling
        self.avgpool = layers.GlobalAveragePooling2D()

        # Fully connected layer
        self.fc = layers.Dense(num_classes, activation=tf.keras.activations.softmax)

    def call(self, inputs, training=None):
        x = self.padding(inputs)
        x = self.stem(x, training=training)
        x = self.layer1(x, training=training)
        x = self.layer2(x, training=training)
        x = self.layer3(x, training=training)
        x = self.layer4(x, training=training)
        # After pooling the shape is [b, c]
        x = self.avgpool(x)
        x = self.fc(x)
        return x

    def build_resblock(self, filter_num, blocks, stride=1):
        res_blocks = Sequential()
        # Only the first block of a stage may downsample.
        res_blocks.add(BasicBlock(filter_num, stride))
        for _ in range(1, blocks):
            res_blocks.add(BasicBlock(filter_num, stride=1))
        return res_blocks

def ResNet18(num_classes=10):
    return ResNet([2, 2, 2, 2], num_classes=num_classes)


def ResNet34(num_classes=10):
    return ResNet([3, 4, 6, 3], num_classes=num_classes)


model = ResNet34(num_classes=1000)
model.build(input_shape=(1, 224, 224, 3))
model.summary()  # Print the network's parameter counts
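To follow what happens to the 224 x 224 input passed to `model.build`, the spatial size can be traced by hand. The sketch below is plain Python rather than TensorFlow, and `trace_resnet_shapes` is a hypothetical helper written for this illustration. It assumes the layer settings in the listing above: 3 pixels of zero padding, a 7x7 stride-2 convolution with the default `valid` padding, a stride-2 `same` max pool, and stride-2 downsampling at the start of `layer2` to `layer4`.

```python
import math


def trace_resnet_shapes(size=224):
    """Return (stage name, spatial size, channels) for a square input."""
    shapes = []
    size = size + 2 * 3                  # ZeroPadding2D((3, 3))
    size = (size - 7) // 2 + 1           # 7x7 conv, stride 2, padding='valid'
    shapes.append(("stem conv", size, 64))
    size = math.ceil(size / 2)           # 3x3 max pool, stride 2, padding='same'
    shapes.append(("stem pool", size, 64))
    stages = [("layer1", 64, 1), ("layer2", 128, 2),
              ("layer3", 256, 2), ("layer4", 512, 2)]
    for name, channels, stride in stages:
        size = math.ceil(size / stride)  # only the first block of a stage strides
        shapes.append((name, size, channels))
    return shapes


for name, size, channels in trace_resnet_shapes(224):
    print(f"{name}: {size} x {size} x {channels}")
```

With these assumptions the feature map goes from 112 after the stem convolution to 56, 56, 28, 14 and finally 7, so `GlobalAveragePooling2D` averages a 7 x 7 x 512 tensor into a 512-element vector per image before the dense layer.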

ResNet50, ResNet101, ResNet152
==============================

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential


# Residual module (bottleneck block)
class Block(layers.Layer):
    def __init__(self, filters, downsample=False, stride=1):
        super(Block, self).__init__()
        self.downsample = downsample

        # Bottleneck: 1x1 reduce -> 3x3 -> 1x1 expand to 4 * filters channels.
        self.conv1 = layers.Conv2D(filters, (1, 1), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')
        self.conv2 = layers.Conv2D(filters, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()
        self.conv3 = layers.Conv2D(4 * filters, (1, 1), strides=1, padding='same')
        self.bn3 = layers.BatchNormalization()

        if self.downsample:
            self.shortcut = Sequential()
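The numbers in the model names count the weighted layers: the stem convolution, every convolution on the main path of each block, and the final dense layer. The sketch below checks that arithmetic in plain Python. `resnet_depth` is a hypothetical helper, and the block counts for ResNet50, ResNet101 and ResNet152 are taken from the original ResNet paper rather than from this article, which only lists the ResNet18 and ResNet34 configurations.

```python
def resnet_depth(layer_dims, convs_per_block):
    """Count weighted layers: stem conv + main-path convs in all blocks + dense layer."""
    return 1 + convs_per_block * sum(layer_dims) + 1


# BasicBlock has 2 convs per block; the bottleneck Block has 3.
configs = {
    "ResNet18": ([2, 2, 2, 2], 2),
    "ResNet34": ([3, 4, 6, 3], 2),
    "ResNet50": ([3, 4, 6, 3], 3),      # assumption: from the ResNet paper
    "ResNet101": ([3, 4, 23, 3], 3),    # assumption: from the ResNet paper
    "ResNet152": ([3, 8, 36, 3], 3),    # assumption: from the ResNet paper
}

depths = {name: resnet_depth(dims, convs) for name, (dims, convs) in configs.items()}
print(depths)
```

Each computed depth matches the number in the model's name. The 1x1 convolutions on projection shortcuts are not included in this count.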


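This article describes the two key modules used in deep residual networks (ResNet), the basic residual block (BasicBlock) and the extended residual block (Block), focusing on their structure, how their layers are connected, and how they are used in ResNet architectures such as ResNet18 and ResNet34.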
