既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上C C++开发知识点,真正体系化!

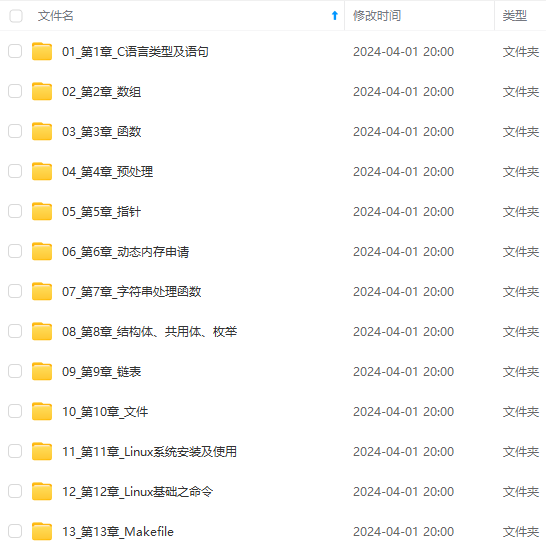

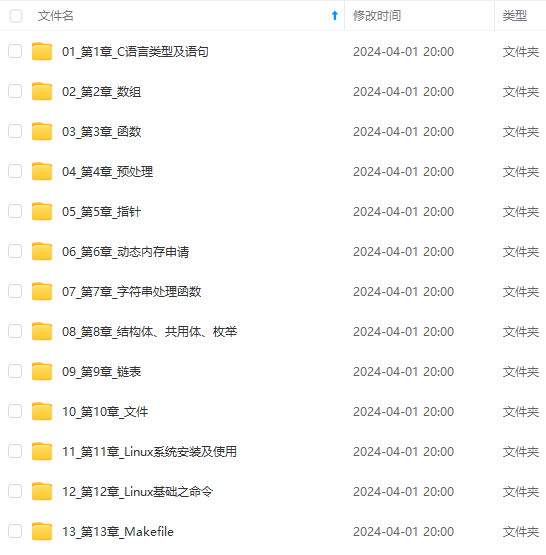

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

model.add(Dense(10, activation="softmax"))
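The softmax activation on this last layer turns the 10 raw outputs into probabilities that sum to 1, one per digit class. Below is a minimal pure-Python sketch of that computation, not Keras's own implementation; the function name `softmax` and the sample scores are made up for illustration.

```python
import math

def softmax(scores):
    """Map raw scores to probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() from overflowing; the result is unchanged.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw outputs for the 10 digit classes; class 3 has the largest score.
probs = softmax([0.1, 0.2, 0.0, 3.0, 0.3, 0.1, 0.0, 0.2, 0.1, 0.4])
predicted_digit = probs.index(max(probs))
print(predicted_digit, round(sum(probs), 6))
```

The class with the largest raw score also gets the largest probability, which is why the later steps can recover the predicted digit with an argmax.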

Print the model to take a look (plot_model needs pydot and Graphviz installed):

from keras.utils import plot_model
from IPython.display import Image

plot_model(model, to_file='model.png', show_shapes=True, show_layer_names=True)
Image("model.png")

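Define the optimizer:

from keras.optimizers import RMSprop

optimizer = RMSprop(lr=0.001, rho=0.9, epsilon=1e-08, decay=0.0)  # newer Keras releases name the first argument learning_rate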
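To make the roles of `lr`, `rho`, and `epsilon` concrete, here is a sketch of the RMSprop update for a single scalar parameter, with no learning-rate decay. It follows the commonly published form of the rule and is an assumption about, not a copy of, the Keras internals; `rmsprop_step` and the toy objective f(w) = w² are hypothetical.

```python
import math

def rmsprop_step(param, grad, avg_sq, lr=0.001, rho=0.9, epsilon=1e-08):
    """One RMSprop update; returns the new parameter and the new running average."""
    # Exponential moving average of the squared gradients.
    avg_sq = rho * avg_sq + (1.0 - rho) * grad ** 2
    # Scale the step by the root of that average; epsilon avoids division by zero.
    param = param - lr * grad / (math.sqrt(avg_sq) + epsilon)
    return param, avg_sq

# Toy objective f(w) = w**2 with gradient 2*w, starting from w = 1.0.
w, avg = 1.0, 0.0
for _ in range(100):
    w, avg = rmsprop_step(w, 2.0 * w, avg)
print(w)
```

Because the gradient is divided by its own running magnitude, each step has a size close to `lr` regardless of how large the raw gradient is.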

Compile the model:

model.compile(optimizer=optimizer, loss="categorical_crossentropy", metrics=["accuracy"])
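The `categorical_crossentropy` loss compares the predicted probability vector with the one-hot label and penalizes low probability on the true class. The sketch below computes it for a single sample in pure Python; the clipping constant `eps` and the sample vectors are illustrative assumptions, not values taken from Keras.

```python
import math

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    # Clip probabilities away from 0 and 1 so that log() stays finite.
    clipped = [min(max(p, eps), 1.0 - eps) for p in y_pred]
    return -sum(t * math.log(p) for t, p in zip(y_true, clipped))

y_true = [0, 0, 1]                  # the true class is index 2
confident = [0.05, 0.05, 0.90]      # most of the mass on the true class
unsure = [0.30, 0.30, 0.40]         # barely prefers the true class
print(categorical_crossentropy(y_true, confident))  # smaller loss
print(categorical_crossentropy(y_true, unsure))     # larger loss
```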

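Set up dynamic learning-rate adjustment, halving the rate whenever val_acc has not improved for 3 epochs, down to a floor of 0.00001:

from keras.callbacks import ReduceLROnPlateau

learning_rate_reduction = ReduceLROnPlateau(monitor='val_acc',
                                            patience=3,
                                            verbose=1,
                                            factor=0.5,
                                            min_lr=0.00001)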
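The plateau rule can be traced by hand. The following is a simplified simulation of the callback's logic, assuming a minimum improvement threshold of 1e-4 and no cooldown; `simulate_reduce_lr` and the accuracy sequence are hypothetical and only illustrate how `patience`, `factor`, and `min_lr` interact.

```python
def simulate_reduce_lr(val_acc_per_epoch, lr=0.001, patience=3, factor=0.5,
                       min_lr=0.00001, min_delta=1e-4):
    """Return the learning rate in effect after each epoch."""
    best = float("-inf")
    wait = 0
    lrs = []
    for acc in val_acc_per_epoch:
        if acc > best + min_delta:
            best, wait = acc, 0              # improvement: reset the counter
        else:
            wait += 1                        # one more epoch without improvement
            if wait >= patience and lr > min_lr:
                lr = max(lr * factor, min_lr)  # reduce, but never below min_lr
                wait = 0
        lrs.append(lr)
    return lrs

# Hypothetical validation accuracies: epochs 3 to 5 fail to beat epoch 2.
print(simulate_reduce_lr([0.90, 0.95, 0.95, 0.94, 0.95, 0.96]))
```

In this run the rate is halved at the fifth epoch, the third consecutive one without improvement, and stays there once accuracy improves again.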

epochs = 50

batch_size = 128

Use data augmentation to help prevent overfitting:

from keras.preprocessing.image import ImageDataGenerator

datagen = ImageDataGenerator(
    featurewise_center=False,  # set input mean to 0 over the dataset
    samplewise_center=False,  # set each sample mean to 0
    featurewise_std_normalization=False,  # divide inputs by std of the dataset
    samplewise_std_normalization=False,  # divide each input by its std
    zca_whitening=False,  # apply ZCA whitening
    rotation_range=10,  # randomly rotate images by up to 10 degrees
    zoom_range=0.1,  # randomly zoom images by up to 10%
    width_shift_range=0.1,  # randomly shift images horizontally (fraction of total width)
    height_shift_range=0.1,  # randomly shift images vertically (fraction of total height)
    horizontal_flip=False,  # randomly flip images horizontally (disabled)
    vertical_flip=False)  # randomly flip images vertically (disabled)

datagen.fit(X_train)  # only needed when a featurewise_* option or zca_whitening is enabled
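To see what a shift range of 0.1 means for a 28×28 digit, the sketch below computes the largest shift in pixels and applies a horizontal shift to a tiny grid. It pads the vacated pixels with a constant, which is a simplification chosen for readability rather than the generator's actual fill behavior; both helper names are hypothetical.

```python
def max_shift_pixels(size, shift_range):
    """Largest shift, in pixels, for a fractional shift range."""
    return size * shift_range

def shift_horizontally(image, dx, fill=0):
    """Shift each row right by dx pixels (left if dx is negative), padding with fill."""
    width = len(image[0])
    dx = max(-width, min(width, dx))  # a shift larger than the image just empties it
    shifted = []
    for row in image:
        if dx >= 0:
            new_row = [fill] * dx + row[:width - dx]
        else:
            new_row = row[-dx:] + [fill] * (-dx)
        shifted.append(new_row)
    return shifted

print(max_shift_pixels(28, 0.1))  # a 28-pixel-wide digit moves by at most about 2.8 pixels
tiny = [[1, 2, 3, 4],
        [5, 6, 7, 8]]
print(shift_horizontally(tiny, 1))
print(shift_horizontally(tiny, -1))
```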

Train the model (newer Keras releases deprecate fit_generator and accept the generator in model.fit directly):

history = model.fit_generator(datagen.flow(X_train, Y_train, batch_size=batch_size),
                              epochs=epochs,
                              validation_data=(X_val, Y_val),
                              verbose=2,
                              steps_per_epoch=X_train.shape[0] // batch_size,
                              callbacks=[learning_rate_reduction])
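The `steps_per_epoch` expression uses floor division, so each epoch draws only as many full batches as fit into the training set. A quick check of the arithmetic follows, assuming a hypothetical training set of 37,800 images (the actual size of `X_train` is not stated here); the helper name is illustrative.

```python
def steps_and_leftover(num_samples, batch_size):
    """Full batches per epoch under floor division, and the samples left over."""
    steps = num_samples // batch_size
    leftover = num_samples - steps * batch_size
    return steps, leftover

# 37800 is a hypothetical training-set size; batch_size=128 matches the text.
steps, leftover = steps_and_leftover(37800, 128)
print(steps, leftover)
```

Since the generator reshuffles and augments the data on every pass, the samples that do not fit in one epoch's batches are not lost; they simply show up in later epochs.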

Epoch 1/50 - 47s - loss: 0.1388 - acc: 0.9564 - val_loss: 0.0434 - val_acc: 0.9852

Epoch 2/50 - 43s - loss: 0.0496 - acc: 0.9845 - val_loss: 0.0880 - val_acc: 0.9767

Epoch 3/50 - 43s - loss: 0.0384 - acc: 0.9884 - val_loss: 0.0230 - val_acc: 0.9933

Epoch 4/50 - 44s - loss: 0.0331 - acc: 0.9898 - val_loss: 0.0224 - val_acc: 0.9942

Epoch 5/50 - 42s - loss: 0.0300 - acc: 0.9910 - val_loss: 0.0209 - val_acc: 0.9933

Epoch 6/50 - 42s - loss: 0.0257 - acc: 0.9924 - val_loss: 0.0167 - val_acc: 0.9953

Epoch 7/50 - 42s - loss: 0.0250 - acc: 0.9924 - val_loss: 0.0159 - val_acc: 0.9952

Epoch 8/50 - 43s - loss: 0.0248 - acc: 0.9928 - val_loss: 0.0149 - val_acc: 0.9951

Epoch 9/50 - 42s - loss: 0.0218 - acc: 0.9934 - val_loss: 0.0170 - val_acc: 0.9954

Epoch 00009: ReduceLROnPlateau reducing learning rate to 0.0005000000237487257.

Epoch 10/50 - 42s - loss: 0.0176 - acc: 0.9947 - val_loss: 0.0106 - val_acc: 0.9965

Epoch 11/50 - 43s - loss: 0.0149 - acc: 0.9956 - val_loss: 0.0101 - val_acc: 0.9969

Epoch 12/50 - 42s - loss: 0.0152 - acc: 0.9953 - val_loss: 0.0084 - val_acc: 0.9973

Epoch 13/50 - 42s - loss: 0.0146 - acc: 0.9958 - val_loss: 0.0079 - val_acc: 0.9980

Epoch 14/50 - 43s - loss: 0.0134 - acc: 0.9959 - val_loss: 0.0129 - val_acc: 0.9962

Epoch 15/50 - 42s - loss: 0.0135 - acc: 0.9959 - val_loss: 0.0093 - val_acc: 0.9971

Epoch 16/50 - 43s - loss: 0.0129 - acc: 0.9960 - val_loss: 0.0085 - val_acc: 0.9974

Epoch 00016: ReduceLROnPlateau reducing learning rate to 0.0002500000118743628.

Epoch 17/50 - 43s - loss: 0.0109 - acc: 0.9968 - val_loss: 0.0064 - val_acc: 0.9980

Epoch 18/50 - 44s - loss: 0.0107 - acc: 0.9966 - val_loss: 0.0068 - val_acc: 0.9984

Epoch 19/50 - 43s - loss: 0.0104 - acc: 0.9969 - val_loss: 0.0065 - val_acc: 0.9986

Epoch 20/50 - 43s - loss: 0.0097 - acc: 0.9969 - val_loss: 0.0057 - val_acc: 0.9985

Epoch 21/50 - 43s - loss: 0.0092 - acc: 0.9971 - val_loss: 0.0073 - val_acc: 0.9981

Epoch 22/50 - 43s - loss: 0.0097 - acc: 0.9970 - val_loss: 0.0068 - val_acc: 0.9982

Epoch 00022: ReduceLROnPlateau reducing learning rate to 0.0001250000059371814.

Epoch 23/50 - 43s - loss: 0.0083 - acc: 0.9975 - val_loss: 0.0064 - val_acc: 0.9984

Epoch 24/50 - 43s - loss: 0.0085 - acc: 0.9974 - val_loss: 0.0061 - val_acc: 0.9985

Epoch 25/50 - 43s - loss: 0.0081 - acc: 0.9976 - val_loss: 0.0058 - val_acc: 0.9988

Epoch 26/50 - 43s - loss: 0.0080 - acc: 0.9977 - val_loss: 0.0065 - val_acc: 0.9986

Epoch 27/50 - 43s - loss: 0.0078 - acc: 0.9977 - val_loss: 0.0066 - val_acc: 0.9984

Epoch 28/50 - 44s - loss: 0.0088 - acc: 0.9975 - val_loss: 0.0060 - val_acc: 0.9988

Epoch 00028: ReduceLROnPlateau reducing learning rate to 6.25000029685907e-05.

Epoch 29/50 - 44s - loss: 0.0077 - acc: 0.9975 - val_loss: 0.0056 - val_acc: 0.9988

Epoch 30/50 - 43s - loss: 0.0063 - acc: 0.9980 - val_loss: 0.0054 - val_acc: 0.9988

Epoch 31/50 - 44s - loss: 0.0069 - acc: 0.9980 - val_loss: 0.0056 - val_acc: 0.9988

Epoch 00031: ReduceLROnPlateau reducing learning rate to 3.125000148429535e-05.

Epoch 32/50 - 44s - loss: 0.0068 - acc: 0.9980 - val_loss: 0.0055 - val_acc: 0.9986

Epoch 33/50 - 43s - loss: 0.0066 - acc: 0.9981 - val_loss: 0.0055 - val_acc: 0.9987

Epoch 34/50 - 43s - loss: 0.0069 - acc: 0.9979 - val_loss: 0.0055 - val_acc: 0.9988

Epoch 00034: ReduceLROnPlateau reducing learning rate to 1.5625000742147677e-05.

Epoch 35/50 - 43s - loss: 0.0065 - acc: 0.9979 - val_loss: 0.0055 - val_acc: 0.9988

Epoch 36/50 - 42s - loss: 0.0069 - acc: 0.9980 - val_loss: 0.0054 - val_acc: 0.9988

Epoch 37/50 - 43s - loss: 0.0064 - acc: 0.9980 - val_loss: 0.0054 - val_acc: 0.9988

Epoch 00037: ReduceLROnPlateau reducing learning rate to 1e-05.

Epoch 38/50 - 42s - loss: 0.0067 - acc: 0.9979 - val_loss: 0.0054 - val_acc: 0.9989

Epoch 39/50 - 43s - loss: 0.0067 - acc: 0.9979 - val_loss: 0.0055 - val_acc: 0.9988

Epoch 40/50 - 43s - loss: 0.0060 - acc: 0.9983 - val_loss: 0.0055 - val_acc: 0.9988

Epoch 41/50 - 42s - loss: 0.0056 - acc: 0.9983 - val_loss: 0.0055 - val_acc: 0.9988

Epoch 42/50 - 43s - loss: 0.0064 - acc: 0.9981 - val_loss: 0.0055 - val_acc: 0.9988

Epoch 43/50 - 42s - loss: 0.0060 - acc: 0.9982 - val_loss: 0.0054 - val_acc: 0.9988

Epoch 44/50 - 42s - loss: 0.0062 - acc: 0.9981 - val_loss: 0.0054 - val_acc: 0.9989

Epoch 45/50 - 42s - loss: 0.0061 - acc: 0.9980 - val_loss: 0.0055 - val_acc: 0.9989

Epoch 46/50 - 42s - loss: 0.0059 - acc: 0.9983 - val_loss: 0.0056 - val_acc: 0.9989

Epoch 47/50 - 42s - loss: 0.0065 - acc: 0.9980 - val_loss: 0.0054 - val_acc: 0.9989

Epoch 48/50 - 43s - loss: 0.0069 - acc: 0.9980 - val_loss: 0.0055 - val_acc: 0.9989

Epoch 49/50 - 42s - loss: 0.0068 - acc: 0.9980 - val_loss: 0.0055 - val_acc: 0.9989

Epoch 50/50 - 42s - loss: 0.0065 - acc: 0.9981 - val_loss: 0.0054 - val_acc: 0.9988

Plot the loss and accuracy curves for the training and validation sets. They help judge whether the model is underfitting or overfitting:

import matplotlib.pyplot as plt

fig, ax = plt.subplots(2, 1)
ax[0].plot(history.history['loss'], color='b', label="Training loss")
ax[0].plot(history.history['val_loss'], color='r', label="Validation loss")
legend = ax[0].legend(loc='best', shadow=True)
ax[1].plot(history.history['acc'], color='b', label="Training accuracy")
ax[1].plot(history.history['val_acc'], color='r', label="Validation accuracy")
legend = ax[1].legend(loc='best', shadow=True)
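One rough way to read such curves numerically is to check whether the validation loss has drifted above its best value while the training loss keeps falling. The sketch below applies that heuristic to the losses logged at epochs 1, 10, and 50 above; the function name, the 10% `tolerance`, and the second (overfitting) series are hypothetical, and the rule is only a rule of thumb.

```python
def summarize_curves(train_loss, val_loss, tolerance=0.1):
    """Return the final val-minus-train gap and a rough verdict."""
    best_val = min(val_loss)
    final_gap = val_loss[-1] - train_loss[-1]
    # Validation loss well above its best value...
    val_drifted = val_loss[-1] > best_val * (1 + tolerance)
    # ...while the training loss is at its lowest point so far.
    train_at_best = train_loss[-1] <= min(train_loss)
    if val_drifted and train_at_best:
        return final_gap, "possible overfitting"
    return final_gap, "no clear overfitting"

# Losses taken from epochs 1, 10 and 50 of the training log.
gap, verdict = summarize_curves([0.1388, 0.0176, 0.0065], [0.0434, 0.0106, 0.0054])
print(round(gap, 4), verdict)

# Hypothetical series in which validation loss climbs back up.
print(summarize_curves([0.5, 0.2, 0.05], [0.4, 0.3, 0.45])[1])
```

In the logged run the validation loss stays below the training loss, which is plausible when augmentation is applied only to the training batches.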

Plot the confusion matrix to see which digits are misclassified most often:

import itertools
import numpy as np
import matplotlib.pyplot as plt

def plot_confusion_matrix(cm, classes,
                          normalize=False,
                          title='Confusion matrix',
                          cmap=plt.cm.Blues):
    """
    This function plots the confusion matrix.
    Normalization can be applied by setting normalize=True.
    """
    # Normalize each row before drawing so the colors and the cell text agree
    if normalize:
        cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]
    plt.imshow(cm, interpolation='nearest', cmap=cmap)
    plt.title(title)
    plt.colorbar()
    tick_marks = np.arange(len(classes))
    plt.xticks(tick_marks, classes, rotation=45)
    plt.yticks(tick_marks, classes)
    thresh = cm.max() / 2.
    for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
        plt.text(j, i, cm[i, j],
                 horizontalalignment="center",
                 color="white" if cm[i, j] > thresh else "black")
    plt.tight_layout()
    plt.ylabel('True label')
    plt.xlabel('Predicted label')

Predict the class probabilities for the validation dataset:

Y_pred = model.predict(X_val)

Convert the predicted probabilities to class indices:

Y_pred_classes = np.argmax(Y_pred, axis=1)

Convert the one-hot validation labels to class indices:

Y_true = np.argmax(Y_val, axis=1)

Compute the confusion matrix:

from sklearn.metrics import confusion_matrix
confusion_mtx = confusion_matrix(Y_true, Y_pred_classes)

Plot the confusion matrix:

plot_confusion_matrix(confusion_mtx, classes=range(10))
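A confusion matrix is just a table of counts: entry (i, j) is the number of samples whose true class is i and whose predicted class is j. The pure-Python sketch below builds one for a made-up 3-class example, with rows as true classes and columns as predictions; `confusion_counts` and the label lists are hypothetical.

```python
def confusion_counts(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

# Hypothetical labels for a 3-class problem.
y_true = [0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0, 2]
for row in confusion_counts(y_true, y_pred, 3):
    print(row)
```

Correct predictions land on the diagonal, so any large off-diagonal cell points to a pair of classes the model tends to confuse.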

Display some of the misclassified images, along with their predicted and true labels:

errors = (Y_pred_classes != Y_true)  # boolean mask of the misclassified validation samples
Y_pred_classes_errors = Y_pred_classes[errors]
Y_pred_errors = Y_pred[errors]
Y_true_errors = Y_true[errors]
X_val_errors = X_val[errors]

def display_errors(errors_index, img_errors, pred_errors, obs_errors):
    """Show 6 images with their predicted and true labels."""
    n = 0
    nrows = 2
    ncols = 3
    fig, ax = plt.subplots(nrows, ncols, sharex=True, sharey=True)
    for row in range(nrows):
        for col in range(ncols):
            error = errors_index[n]
            ax[row, col].imshow((img_errors[error]).reshape((28, 28)))
            ax[row, col].set_title("Predicted label :{}\nTrue label :{}".format(pred_errors[error], obs_errors[error]))
            n += 1

Probabilities assigned to the wrongly predicted digits:

Y_pred_errors_prob = np.max(Y_pred_errors, axis=1)

Predicted probabilities of the true digits in the error set:

true_prob_errors = np.diagonal(np.take(Y_pred_errors, Y_true_errors, axis=1))

Difference between the probability of the predicted label and that of the true label:

delta_pred_true_errors = Y_pred_errors_prob - true_prob_errors

Indices of the errors, sorted by increasing difference:

sorted_delta_errors = np.argsort(delta_pred_true_errors)

Top 6 errors (largest differences):

most_important_errors = sorted_delta_errors[-6:]

Show the top 6 errors:

display_errors(most_important_errors, X_val_errors, Y_pred_classes_errors, Y_true_errors)
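The ranking step can be easier to follow without the NumPy indexing. For each misclassified sample, the gap is the probability given to the predicted digit minus the probability given to the true digit; the largest gaps are the most confident mistakes. A pure-Python sketch follows, with a hypothetical `rank_errors` helper and made-up probabilities.

```python
def rank_errors(pred_probs, true_labels):
    """Return indices of misclassified samples, sorted by increasing confidence gap."""
    gaps = []
    for probs, true_label in zip(pred_probs, true_labels):
        # Gap between the winning (wrong) probability and the true-class probability.
        gaps.append(max(probs) - probs[true_label])
    return sorted(range(len(gaps)), key=lambda i: gaps[i])

# Hypothetical probability rows for three misclassified samples (3 classes).
pred_probs = [[0.6, 0.4, 0.0],     # true class 1: gap of about 0.2
              [0.9, 0.05, 0.05],   # true class 2: gap of about 0.85
              [0.5, 0.1, 0.4]]     # true class 2: gap of about 0.1
true_labels = [1, 2, 2]
order = rank_errors(pred_probs, true_labels)
print(order[-2:])  # indices of the two most confident mistakes
```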

Predict on the test set:

results = model.predict(test)
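With a softmax output layer, each row of `results` holds 10 class probabilities rather than a digit. A natural next step, which is an assumption about what follows and not part of the code above, is to take the index of the largest probability in each row, as was done with `np.argmax` for the validation predictions. The sketch below shows the idea in pure Python with made-up rows; `to_labels` is a hypothetical helper.

```python
def to_labels(prob_rows):
    """Pick the index of the largest probability in each row."""
    return [row.index(max(row)) for row in prob_rows]

# Hypothetical predictions for two images over the 10 digit classes.
rows = [[0.01, 0.02, 0.90, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01],
        [0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.60, 0.03, 0.02]]
print(to_labels(rows))
```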
