Let's go straight to the code.

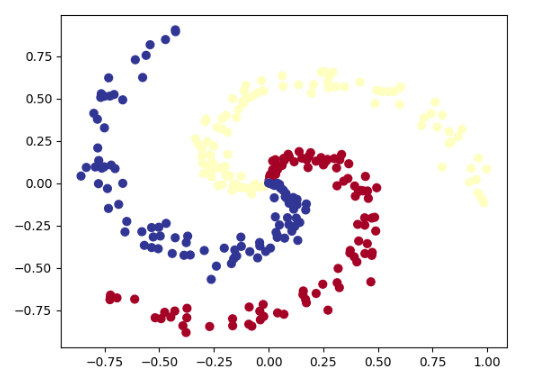

# Multi-class dataset
import numpy as np
import matplotlib.pyplot as plt

RANDOM_SEED = 42
np.random.seed(RANDOM_SEED)

N = 100  # number of points per class
D = 2    # dimensionality
K = 3    # number of classes

X = np.zeros((N*K, D))
y = np.zeros(N*K, dtype='uint8')

for j in range(K):
    ix = range(N*j, N*(j+1))
    r = np.linspace(0.0, 1, N)  # radius
    t = np.linspace(j*4, (j+1)*4, N) + np.random.randn(N)*0.2  # angle plus Gaussian noise
    X[ix] = np.c_[r*np.sin(t), r*np.cos(t)]
    y[ix] = j

plt.scatter(X[:, 0], X[:, 1], c = y, s = 40, cmap=plt.cm.RdYlBu)
plt.show()
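The generator above can also be wrapped in a function so that the shapes and class balance are easy to check. The following is only a sketch: `make_spiral` and its parameters are hypothetical names, and it uses `np.random.default_rng` instead of `np.random.seed`, so the exact points will differ from those in the post.

```python
import numpy as np

def make_spiral(n_per_class=100, n_classes=3, noise=0.2, seed=42):
    """Generate a 2-D spiral dataset with one arm per class (illustrative helper)."""
    rng = np.random.default_rng(seed)
    X = np.zeros((n_per_class * n_classes, 2))
    y = np.zeros(n_per_class * n_classes, dtype="uint8")
    for j in range(n_classes):
        ix = slice(n_per_class * j, n_per_class * (j + 1))
        r = np.linspace(0.0, 1.0, n_per_class)  # radius grows from 0 to 1
        # angle sweeps a 4-radian window per class, with Gaussian noise added
        t = np.linspace(j * 4, (j + 1) * 4, n_per_class) + rng.standard_normal(n_per_class) * noise
        X[ix] = np.c_[r * np.sin(t), r * np.cos(t)]
        y[ix] = j
    return X, y

X_demo, y_demo = make_spiral()
print(X_demo.shape, y_demo.shape)  # (300, 2) (300,)
print(np.bincount(y_demo))         # [100 100 100]
```

Because each point is `r * (sin t, cos t)`, its distance from the origin equals `r`, so all points lie inside the unit circle regardless of the noise on the angle.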

# Turn data into tensors
import torch

X = torch.from_numpy(X).type(torch.float)
y = torch.from_numpy(y).type(torch.LongTensor)

# Create train and test splits
from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = RANDOM_SEED)

# Accuracy metric (uncomment the next line to install torchmetrics if needed)
#!pip -q install torchmetrics
from torchmetrics import Accuracy

acc_fn = Accuracy(task = "multiclass", num_classes = 3).to("cpu")
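For intuition about what the accuracy metric reports: under micro averaging, multiclass accuracy reduces to the fraction of predictions that match the labels. A minimal sketch in plain PyTorch, where `manual_accuracy` is a hypothetical helper and not part of torchmetrics:

```python
import torch

def manual_accuracy(preds, targets):
    """Fraction of predicted class indices that equal the target indices."""
    return (preds == targets).float().mean().item()

preds = torch.tensor([0, 1, 2, 2])
targets = torch.tensor([0, 1, 1, 2])
print(manual_accuracy(preds, targets))  # 0.75 (3 of 4 correct)
```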

from torch import nn

class SpiralModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear1 = nn.Linear(in_features = 2, out_features = 10)
        self.linear2 = nn.Linear(in_features = 10, out_features = 10)
        self.linear3 = nn.Linear(in_features = 10, out_features = 3)
        self.relu = nn.ReLU()

    def forward(self, x):
        # Two hidden layers with ReLU; the output is raw logits (no softmax)
        return self.linear3(self.relu(self.linear2(self.relu(self.linear1(x)))))

model_1 = SpiralModel().to("cpu")

# Move the data to the target device (hard-coded to "cpu" here)
X_train, y_train = X_train.to("cpu"), y_train.to("cpu")
X_test, y_test = X_test.to("cpu"), y_test.to("cpu")

# Set up the loss function and optimizer
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model_1.parameters(),
                             lr = 0.02)
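The code above passes the literal string "cpu" everywhere. A common way to make the same setup device agnostic is to pick the device once and reuse it. This is a sketch, not the post's code: the variable name `device` and the small `nn.Linear` stand-in for `SpiralModel` are assumptions made for illustration.

```python
import torch
from torch import nn

# Use the GPU when one is available, otherwise fall back to the CPU
device = "cuda" if torch.cuda.is_available() else "cpu"

demo_model = nn.Linear(2, 3).to(device)  # stand-in for SpiralModel
demo_X = torch.rand(5, 2).to(device)     # stand-in for X_train

demo_out = demo_model(demo_X)
print(demo_out.shape)  # torch.Size([5, 3])
```

The model and every tensor it touches must live on the same device, which is why both are moved with the same `device` value.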

# Build a training loop for the model
epochs = 1000

# Loop over data
for epoch in range(epochs):
    ## Training
    model_1.train()

    y_logits = model_1(X_train)
    y_pred = torch.softmax(y_logits, dim=1).argmax(dim=1)

    loss = loss_fn(y_logits, y_train)
    acc = acc_fn(y_pred, y_train)

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    ## Testing
    model_1.eval()
    with torch.inference_mode():
        test_logits = model_1(X_test)
        test_pred = torch.softmax(test_logits, dim=1).argmax(dim=1)

        test_loss = loss_fn(test_logits, y_test)
        test_acc = acc_fn(test_pred, y_test)

    if epoch % 100 == 0:
        print(f"Epoch: {epoch} | Loss: {loss:.2f} Acc: {acc:.2f} | Test loss: {test_loss:.2f} Test acc: {test_acc:.2f}")
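Two details of the loop are worth checking in isolation. First, softmax is monotonic, so taking `argmax` of the probabilities gives the same class as taking `argmax` of the raw logits. Second, `nn.CrossEntropyLoss` expects raw logits and applies log-softmax internally. The sketch below uses random logits and targets (made up for the demonstration) to verify both points:

```python
import torch
from torch import nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(8, 3)           # 8 samples, 3 classes
targets = torch.randint(0, 3, (8,))  # random class indices

# argmax over probabilities vs. argmax over logits
pred_from_probs = torch.softmax(logits, dim=1).argmax(dim=1)
pred_from_logits = logits.argmax(dim=1)
same_preds = torch.equal(pred_from_probs, pred_from_logits)
print(same_preds)  # True

# Cross-entropy on logits vs. negative log-likelihood on log-softmax
ce = nn.CrossEntropyLoss()(logits, targets)
nll = F.nll_loss(F.log_softmax(logits, dim=1), targets)
same_loss = torch.allclose(ce, nll)
print(same_loss)  # True
```

So the softmax call in the loop is not needed to obtain the predicted labels; it only matters when the probabilities themselves are wanted.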

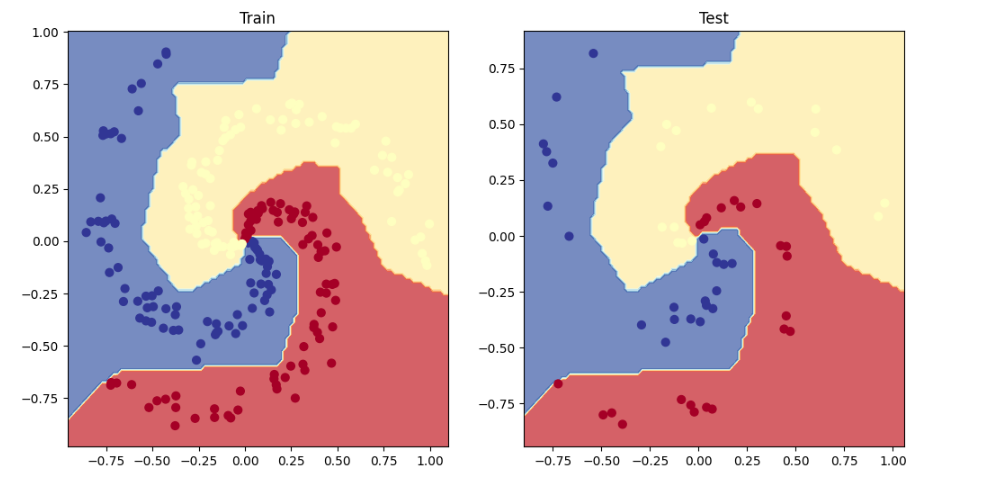

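# Plot decision boundaries for training and test sets
# Note: plot_decision_boundary is not defined in this post; it is assumed to be
# a helper function that is already available in scope.
plt.figure(figsize=(12, 6))

plt.subplot(1, 2, 1)
plt.title("Train")
plot_decision_boundary(model_1, X_train, y_train)

plt.subplot(1, 2, 2)
plt.title("Test")
plot_decision_boundary(model_1, X_test, y_test)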
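`plot_decision_boundary` is called above but never defined in the post. A helper of this kind usually evaluates the model on a dense grid covering the data and shades each grid cell by its predicted class. The function below is a hypothetical stand-in written for illustration, not the author's helper; its name, its `steps` parameter, and its return value are all assumptions, and it assumes a multi-class model living on the CPU.

```python
import numpy as np
import torch
import matplotlib.pyplot as plt

def plot_decision_boundary_sketch(model, X, y, steps=101):
    """Shade the plane by predicted class and overlay the data points."""
    model.eval()
    X_np, y_np = X.cpu().numpy(), y.cpu().numpy()

    # Build a grid that covers the data with a small margin
    x_min, x_max = X_np[:, 0].min() - 0.1, X_np[:, 0].max() + 0.1
    y_min, y_max = X_np[:, 1].min() - 0.1, X_np[:, 1].max() + 0.1
    xx, yy = np.meshgrid(np.linspace(x_min, x_max, steps),
                         np.linspace(y_min, y_max, steps))
    grid = torch.from_numpy(np.column_stack((xx.ravel(), yy.ravel()))).float()

    # Predict a class for every grid point
    with torch.inference_mode():
        preds = model(grid).argmax(dim=1)
    preds = preds.reshape(xx.shape).numpy()

    plt.contourf(xx, yy, preds, cmap=plt.cm.RdYlBu, alpha=0.7)
    plt.scatter(X_np[:, 0], X_np[:, 1], c=y_np, s=40, cmap=plt.cm.RdYlBu)
    plt.xlim(xx.min(), xx.max())
    plt.ylim(yy.min(), yy.max())
    return preds

# Usage with an untrained stand-in model and random data
demo_model = torch.nn.Linear(2, 3)
demo_X = torch.rand(30, 2)
demo_y = torch.randint(0, 3, (30,))
grid_preds = plot_decision_boundary_sketch(demo_model, demo_X, demo_y, steps=50)
```

With the trained `model_1`, the shaded regions would follow the three spiral arms, which is what the Train and Test panels are meant to show.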

The results are as follows:

Epoch: 0 | Loss: 1.13 Acc: 0.32 | Test loss: 1.12 Test acc: 0.37

Epoch: 100 | Loss: 0.09 Acc: 0.98 | Test loss: 0.06 Test acc: 1.00

Epoch: 200 | Loss: 0.04 Acc: 0.99 | Test loss: 0.01 Test acc: 1.00

Epoch: 300 | Loss: 0.02 Acc: 0.99 | Test loss: 0.00 Test acc: 1.00

Epoch: 400 | Loss: 0.02 Acc: 0.99 | Test loss: 0.00 Test acc: 1.00

Epoch: 500 | Loss: 0.02 Acc: 0.99 | Test loss: 0.00 Test acc: 1.00

Epoch: 600 | Loss: 0.02 Acc: 0.99 | Test loss: 0.00 Test acc: 1.00

Epoch: 700 | Loss: 0.02 Acc: 0.99 | Test loss: 0.00 Test acc: 1.00

Epoch: 800 | Loss: 0.02 Acc: 0.99 | Test loss: 0.00 Test acc: 1.00

Epoch: 900 | Loss: 0.01 Acc: 0.99 | Test loss: 0.00 Test acc: 1.00
