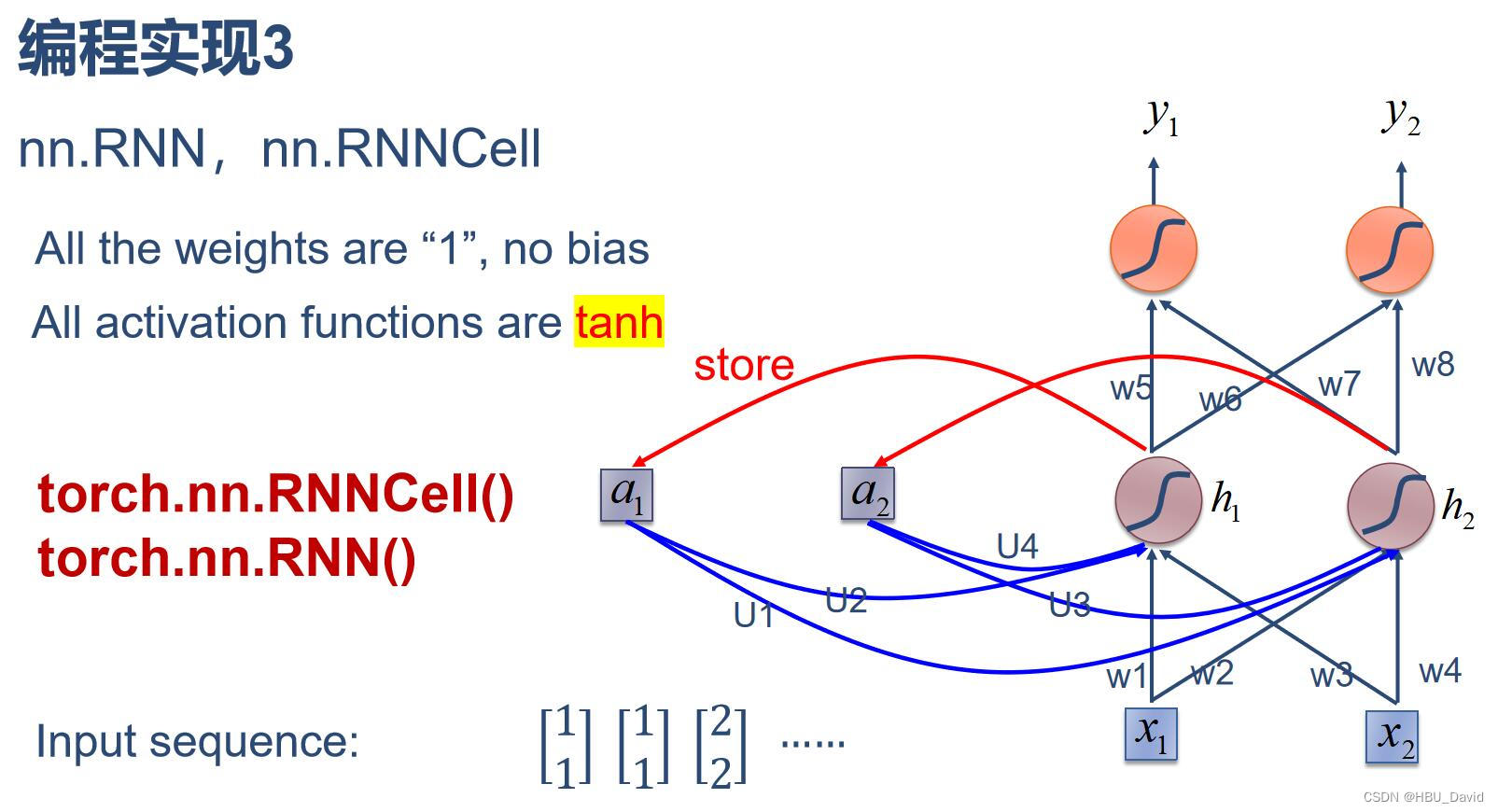

import numpy as np

inputs = np.array([[1., 1.],
                   [1., 1.],
                   [2., 2.]])  # initialize the input sequence
print('inputs is ', inputs)

state_t = np.zeros(2, )  # initialize the memory (hidden state)
print('state_t is ', state_t)

# input-to-hidden weights w1..w4, hidden-to-output weights w5..w8
w1, w2, w3, w4, w5, w6, w7, w8 = 1., 1., 1., 1., 1., 1., 1., 1.
# hidden-to-hidden (recurrent) weights
U1, U2, U3, U4 = 1., 1., 1., 1.
print('--------------------------------------')

for input_t in inputs:
    print('inputs is ', input_t)
    print('state_t is ', state_t)
    # no activation function: each hidden unit is a plain weighted sum
    in_h1 = np.dot([w1, w3], input_t) + np.dot([U2, U4], state_t)
    in_h2 = np.dot([w2, w4], input_t) + np.dot([U1, U3], state_t)
    state_t = in_h1, in_h2  # store the hidden values for the next time step
    output_y1 = np.dot([w5, w7], [in_h1, in_h2])
    output_y2 = np.dot([w6, w8], [in_h1, in_h2])
    print('output_y is ', output_y1, output_y2)
    print('---------------')

Output:

inputs is [[1. 1.]
 [1. 1.]
 [2. 2.]]

state_t is [0. 0.]

--------------------------------------

inputs is [1. 1.]

state_t is [0. 0.]

output_y is 4.0 4.0

---------------

inputs is [1. 1.]

state_t is (2.0, 2.0)

output_y is 12.0 12.0

---------------

inputs is [2. 2.]

state_t is (6.0, 6.0)

output_y is 32.0 32.0

---------------

Process finished with exit code 0
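The printed values can be checked by hand. Because every weight equals 1 and the state starts at zero, both hidden units always hold the same value, so one scalar per step is enough. The symbols below ($h_t$ for the shared hidden value, $x_{t,i}$ for the inputs, $y_t$ for either output) are notation introduced here for illustration, not taken from the original post:

$$
\begin{aligned}
h_t &= (x_{t,1} + x_{t,2}) + 2\,h_{t-1}, \qquad y_t = 2\,h_t, \qquad h_0 = 0 \\
t = 1:\quad h_1 &= (1 + 1) + 2 \cdot 0 = 2, \qquad y_1 = 4 \\
t = 2:\quad h_2 &= (1 + 1) + 2 \cdot 2 = 6, \qquad y_2 = 12 \\
t = 3:\quad h_3 &= (2 + 2) + 2 \cdot 6 = 16, \qquad y_3 = 32
\end{aligned}
$$

Without an activation function the hidden value roughly doubles at every step, which is why the outputs grow so quickly.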

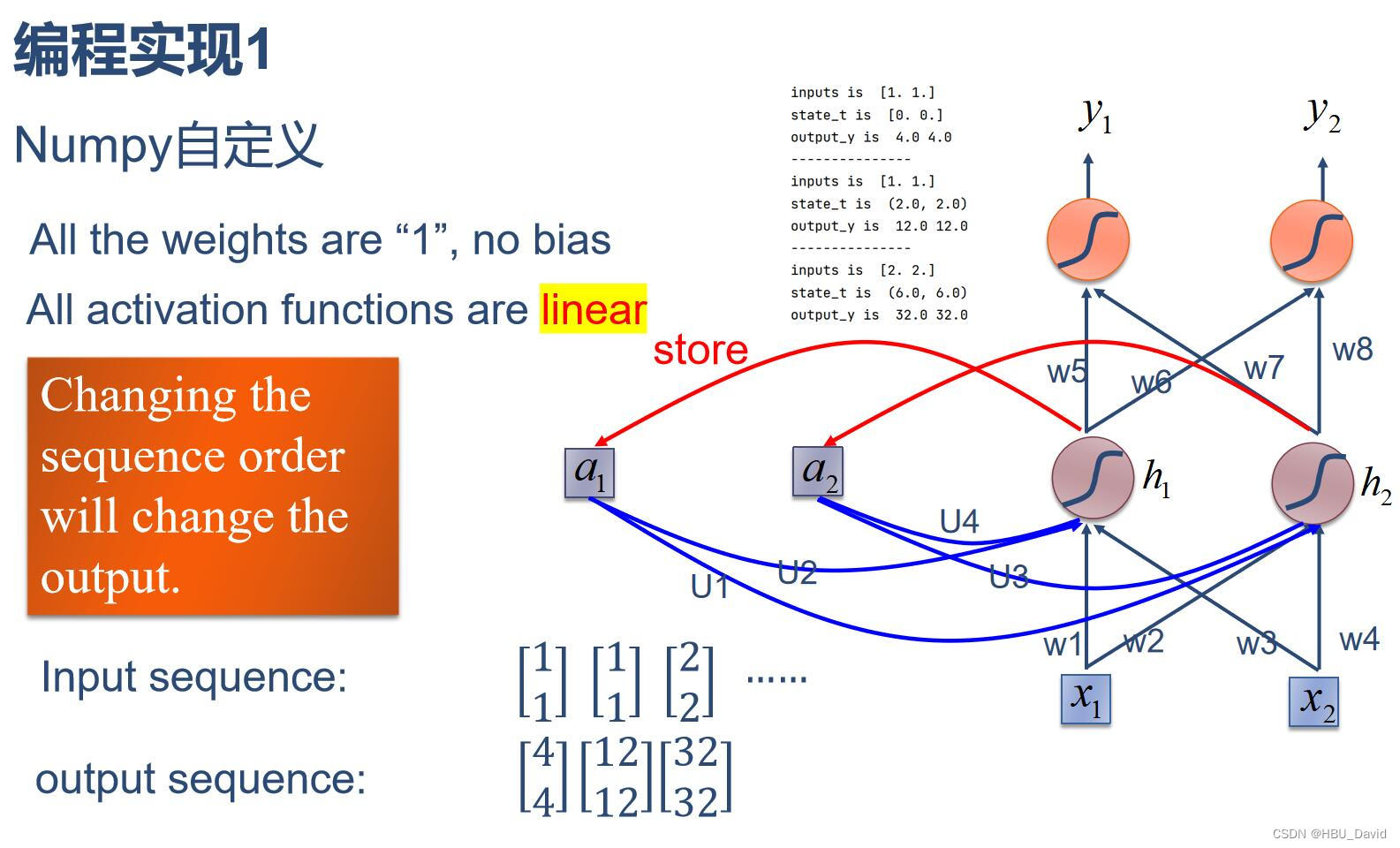

2. Building on 1, add the tanh activation function

import numpy as np

inputs = np.array([[1., 1.],
                   [1., 1.],
                   [2., 2.]])  # initialize the input sequence
print('inputs is ', inputs)

state_t = np.zeros(2, )  # initialize the memory (hidden state)
print('state_t is ', state_t)

# input-to-hidden weights w1..w4, hidden-to-output weights w5..w8
w1, w2, w3, w4, w5, w6, w7, w8 = 1., 1., 1., 1., 1., 1., 1., 1.
# hidden-to-hidden (recurrent) weights
U1, U2, U3, U4 = 1., 1., 1., 1.
print('--------------------------------------')

for input_t in inputs:
    print('inputs is ', input_t)
    print('state_t is ', state_t)
    # tanh squashes each hidden unit into the range (-1, 1)
    in_h1 = np.tanh(np.dot([w1, w3], input_t) + np.dot([U2, U4], state_t))
    in_h2 = np.tanh(np.dot([w2, w4], input_t) + np.dot([U1, U3], state_t))
    state_t = in_h1, in_h2  # store the hidden values for the next time step
    output_y1 = np.dot([w5, w7], [in_h1, in_h2])
    output_y2 = np.dot([w6, w8], [in_h1, in_h2])
    print('output_y is ', output_y1, output_y2)
    print('---------------')

Output:

inputs is [[1. 1.]
 [1. 1.]
 [2. 2.]]

state_t is [0. 0.]

--------------------------------------

inputs is [1. 1.]

state_t is [0. 0.]

output_y is 1.9280551601516338 1.9280551601516338

---------------

inputs is [1. 1.]

state_t is (0.9640275800758169, 0.9640275800758169)

output_y is 1.9984510891336251 1.9984510891336251

---------------

inputs is [2. 2.]

state_t is (0.9992255445668126, 0.9992255445668126)

output_y is 1.9999753470497836 1.9999753470497836

---------------
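With tanh in place, each hidden value is confined to (-1, 1), so each output (the sum of two hidden values with weight 1) can never reach 2, however large the input becomes. The following is a minimal sketch of that saturation in plain Python; the function name `srn_tanh` and its `weight` parameter are hypothetical helpers introduced here, and the sketch assumes, as above, that all weights share one value.

```python
import math


def srn_tanh(inputs, weight=1.0):
    """Run the 2-2-2 SRN with tanh, with every weight set to `weight` (assumption)."""
    h = [0.0, 0.0]  # hidden state starts at zero
    outputs = []
    for x in inputs:
        # Both hidden units see the same weighted sum because all weights are equal.
        pre = weight * (x[0] + x[1]) + weight * (h[0] + h[1])
        h = [math.tanh(pre), math.tanh(pre)]
        # Each output unit sums the two hidden values.
        outputs.append(weight * (h[0] + h[1]))
    return outputs


outputs = srn_tanh([[1.0, 1.0], [1.0, 1.0], [2.0, 2.0]])
for t, y in enumerate(outputs, start=1):
    print(f"t={t}: y = {y:.6f}, gap to 2 = {2 - y:.2e}")
```

The gap to 2 shrinks at every step, in contrast to the version without an activation function, where the outputs were 4, 12, and 32.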

————————————————

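Copyright notice: this is an original article by CSDN blogger 「白小码i」, licensed under CC 4.0 BY-SA. When reposting, please include the original source link and this notice.

Original link: https://blog.csdn.net/qq_52551768/article/details/127831761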

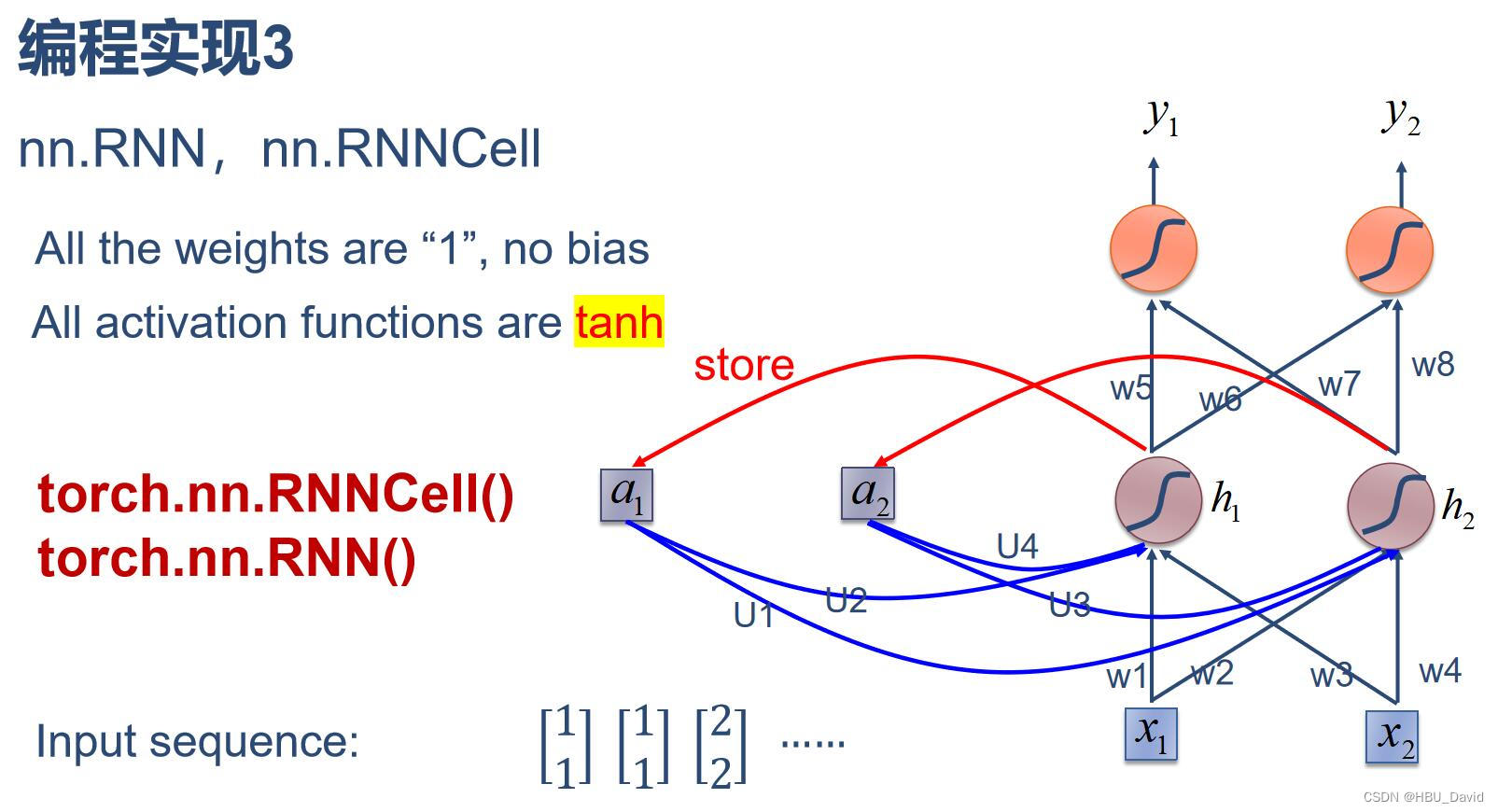

3. Implement an SRN with nn.RNNCell and with nn.RNN

import torch

batch_size = 1
seq_len = 3  # sequence length
input_size = 2  # input dimension
hidden_size = 2  # hidden layer dimension
output_size = 2  # output layer dimension

# RNNCell
cell = torch.nn.RNNCell(input_size=input_size, hidden_size=hidden_size)

# initialize parameters, see https://zhuanlan.zhihu.com/p/342012463
for name, param in cell.named_parameters():
    if name.startswith("weight"):
        torch.nn.init.ones_(param)  # all weights set to 1
    else:
        torch.nn.init.zeros_(param)  # all biases set to 0

# linear output layer
linear = torch.nn.Linear(hidden_size, output_size)
linear.weight.data = torch.Tensor([[1, 1], [1, 1]])
linear.bias.data = torch.Tensor([0.0, 0.0])  # one bias per output unit

# shape: (seq_len, batch_size, input_size)
seq = torch.Tensor([[[1, 1]],
                    [[1, 1]],
                    [[2, 2]]])

hidden = torch.zeros(batch_size, hidden_size)
output = torch.zeros(batch_size, output_size)

for idx, input_t in enumerate(seq):
    print('=' * 20, idx, '=' * 20)
    print('Input :', input_t)
    print('hidden :', hidden)
    hidden = cell(input_t, hidden)  # one time step of the recurrence
    output = linear(hidden)
    print('output :', output)

Output:

==================== 0 ====================

Input : tensor([[1., 1.]])

hidden : tensor([[0., 0.]])

output : tensor([[1.9281, 1.9281]], grad_fn=<AddmmBackward0>)

==================== 1 ====================

Input : tensor([[1., 1.]])

hidden : tensor([[0.9640, 0.9640]], grad_fn=<TanhBackward0>)

output : tensor([[1.9985, 1.9985]], grad_fn=<AddmmBackward0>)

==================== 2 ====================

Input :
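The heading above also names nn.RNN, but that part is not available in this copy. The following is a minimal sketch of how the same network could be written with `torch.nn.RNN`, assuming the same setup as the RNNCell version (all weights 1, all biases 0, default tanh nonlinearity). It is an illustration under those assumptions, not the original author's code.

```python
import torch

input_size, hidden_size, output_size = 2, 2, 2

# nn.RNN consumes the whole sequence in one call; tanh is its default nonlinearity.
rnn = torch.nn.RNN(input_size=input_size, hidden_size=hidden_size, num_layers=1)
for name, param in rnn.named_parameters():
    if name.startswith("weight"):
        torch.nn.init.ones_(param)   # weight_ih_l0, weight_hh_l0
    else:
        torch.nn.init.zeros_(param)  # bias_ih_l0, bias_hh_l0

# Linear output layer with all weights 1 and zero bias.
linear = torch.nn.Linear(hidden_size, output_size)
with torch.no_grad():
    linear.weight.fill_(1.0)
    linear.bias.zero_()

# Shape (seq_len, batch_size, input_size), the default layout (batch_first=False).
seq = torch.tensor([[[1., 1.]],
                    [[1., 1.]],
                    [[2., 2.]]])
h0 = torch.zeros(1, 1, hidden_size)  # (num_layers, batch_size, hidden_size)

with torch.no_grad():
    hidden_seq, h_n = rnn(seq, h0)  # hidden_seq holds the hidden state of every step
    output = linear(hidden_seq)     # the linear layer is applied to each step

print('hidden per step :', hidden_seq.squeeze(1))
print('output per step :', output.squeeze(1))
```

The main difference from RNNCell is that the loop over time steps moves inside the module: `hidden_seq` contains the hidden state for every step, and `h_n` is the state after the last step.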
