目录

2.1.之前安装的opencv,用 jtop无CUDA加速,卸载重装。

2.2.下载opencv版本https://opencv.org/releases/

2.4.设置编译项:(-D CUDA_ARCH_BIN=xx 这个jtop查询 cuda arch 6.2)

2.5.1. sudo gedit /etc/ld.so.conf.d/opencv.conf添加 /usr/local/lib

2.5.2.sudo gedit /etc/bash.bashrc添加

1.opencv x86安装

sudo apt-get install build-essential libgtk2.0-dev libgtk-3-dev libavcodec-dev libavformat-dev libjpeg-dev libswscale-dev libtiff5-dev# python3支持

sudo apt install python3-dev python3-numpy# streamer支持

sudo apt install libgstreamer-plugins-base1.0-dev libgstreamer1.0-dev# 可选的依赖

sudo apt install libpng-dev libopenexr-dev libtiff-dev libwebp-dev源碼下載

https://opencv.org/releases/

unzip opencv-4.1.0.zip

cd opencv-4.1.0

mkdir build

cd build/編譯

cmake -D CMAKE_BUILD_TYPE=Release -D OPENCV_GENERATE_PKGCONFIG=ON -D WITH_GTK=ON -D WITH_OPENGL=ON -D WITH_V4L=ON -D WITH_VTK=ON -D WITH_TBB=ON -D CMAKE_INSTALL_PREFIX=/usr/local ..

make

sudo make install 配置環境

1. sudo gedit /etc/ld.so.conf.d/opencv.conf

添加 /usr/local/lib

2.sudo gedit /etc/bash.bashrc

添加

PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/usr/local/lib/pkgconfig

export PKG_CONFIG_PATH

source /etc/bash.bashrc #更新源链接

sudo updatedb #更新文件库2.opencv tx2 cuda

2.1.之前安装的opencv,用 jtop无CUDA加速,卸载重装。

OpenCV使能CUDA加速_迷途小书童的Note-CSDN博客

卸载opencv

cd opencv/build

sudo make uninstall && make clean

sudo rm -r /usr/local/include/opencv2 /usr/local/include/opencv /usr/include/opencv /usr/include/opencv2 /usr/local/share/opencv /usr/local/share/OpenCV /usr/share/opencv /usr/share/OpenCV /usr/local/bin/opencv* /usr/local/lib/libopencv*

2.1.1未装过opencv

sudo apt-get install build-essential libgtk2.0-dev libgtk-3-dev libavcodec-dev libavformat-dev libjpeg-dev libswscale-dev libtiff5-dev

sudo apt install python3-dev python3-numpy

sudo apt install libgstreamer-plugins-base1.0-dev libgstreamer1.0-devopencv_cuda: opencv opencv_contrib

2.2.下载opencv版本https://opencv.org/releases/

mkdir opencv_cuda

cd opencv_cuda

git clone https://github.com/opencv/opencv.git2.3.下载opencv_contrib.git

git clone https://github.com/opencv/opencv_contrib.git2.4.设置编译项:(-D CUDA_ARCH_BIN=xx 这个jtop查询 cuda arch 6.2)

cd ./opencv

mkdir build

cd build

cmake -D CMAKE_BUILD_TYPE=RELEASE -D CMAKE_INSTALL_PREFIX=/usr/local -D CUDA_CUDA_LIBRARY=/usr/local/cuda/lib64/stubs/libcuda.so -D CUDA_ARCH_BIN=6.2 -D CUDA_ARCH_PTX="" -D WITH_CUDA=ON -D WITH_TBB=ON -D BUILD_NEW_PYTHON_SUPPORT=ON -D WITH_V4L=ON -D INSTALL_C_EXAMPLES=ON -D INSTALL_PYTHON_EXAMPLES=ON -D BUILD_EXAMPLES=ON -D WITH_QT=ON -D WITH_OPENGL=ON -D ENABLE_FAST_MATH=1 -D CUDA_FAST_MATH=1 -D WITH_CUBLAS=1 -D WITH_NVCUVID=ON -D BUILD_opencv_cudacodec=OFF -D OPENCV_GENERATE_PKGCONFIG=ON -DOPENCV_EXTRA_MODULES_PATH=../../opencv_contrib/modules ../../opencv

sudo make -j4

sudo make install2.5.配置環境

2.5.1. sudo gedit /etc/ld.so.conf.d/opencv.conf

添加 /usr/local/lib

2.5.2.sudo gedit /etc/bash.bashrc添加

PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/usr/local/lib/pkgconfig

export PKG_CONFIG_PATH

source /etc/bash.bashrc

sudo updatedb

2.6.可能遇到的错误

opencv_contrib位置错误,OPENCV_EXTRA_MODULES_PATH后面跟的路径千万不能有空格。

CMake Error at modules/core/CMakeLists.txt:52 (message):

CUDA: OpenCV requires enabled 'cudev' module from 'opencv_contrib'

repository: https://github.com/opencv/opencv_contrib

GitHub - opencv/opencv_contrib: Repository for OpenCV's extra modules

cd <opencv_build_directory>

cmake -DOPENCV_EXTRA_MODULES_PATH=<opencv_contrib>/modules <opencv_source_directory>

3.opencv单独编译,安装到文件中

cd opencv-3.2.0

mkdir build

cd build

mkdir source

cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/sd_card/opencv-3.2.0/build/source -D WITH_GTK=ON -DWITH_CUDA=OFF -DENABLE_PRECOMPILED_HEADERS=OFF ..

make

make installmaincpp

#include "opencv2/opencv.hpp"

using namespace cv;

int main()

{

printf(CV_VERSION);

return 0;

}打印版本

CMakeLists.txt使用:

cmake_minimum_required (VERSION 2.8)

project (demo)

set(OpenCV_DIR /sd_card/opencv-3.2.0/build/source/share/OpenCV)

find_package( OpenCV 3 REQUIRED )

include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(main main.cpp)

target_link_libraries(main ${OpenCV_LIBS} )build下添加环境变量 OpenCV_DIR ,值为能找到OpenCVConfig.cmake或者OpenCVConfig-version.cmake的opencv路径

4.opencv 图片验证

在终端打开到opencv-4.1.0/sample/cpp/example_cmake目录,执行下面的代码:

cmake .

make

./opencv_example

mkdir ~/opencv-test

cd ~/opencv-test

gedit DisplayImage.cpp

#include <stdio.h>

#include <opencv2/opencv.hpp>

using namespace cv;

int main(int argc, char** argv )

{

if ( argc != 2 )

{

printf("usage: DisplayImage.out <Image_Path>\n");

return -1;

}

Mat image;

image = imread( argv[1], 1 );

if ( !image.data )

{

printf("No image data \n");

return -1;

}

namedWindow("Display Image", WINDOW_AUTOSIZE );

imshow("Display Image", image);

waitKey(0);

return 0;

}gedit CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project( DisplayImage )

find_package( OpenCV REQUIRED )

add_executable( DisplayImage DisplayImage.cpp )

target_link_libraries( DisplayImage ${OpenCV_LIBS} )cd ~/opencv-test

cmake .

make

执行

此时opencv-test文件夹中已经产生了可执行文件DisplayImage,随便从网上下载一张图片放在opencv-test文件夹下,此处下载了opencv.jpg,然后运行

./DisplayImage 1.jpg

参考:ubuntu opencv 打开摄像头失败_shadowsland的专栏-CSDN博客

5.opencv相机采集

sudo apt install pkg-config v4l-utils查看相机:

v4l2-ctl --list-devices #查看摄像头设备

ls /dev/ #查看全部设备 video0 video1

ll /dev/video* #查看摄像头设备

v4l2-ctl -d /dev/video0 --all #查看摄像头所有信息

v4l2-ctl -d /dev/video0 --list-formats-ext 使用 --list-formats-ext 获取支持的分辨率和编码格式

摄像头设备号

find /lib/modules/ -name "*v4l2*.ko"

sudo modprobe adv7511-v4l2创建camera.cpp

#include <opencv2/core.hpp>

#include <opencv2/imgcodecs.hpp>

#include <opencv2/highgui.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main( int argc, char** argv )

{

cv::VideoCapture capture(atoi(argv[1]));

if (!capture.isOpened())

return 1;

cv::Mat Frame;

bool stop = false;

while (!stop)

{

capture >> Frame;

cv::imshow("视频", Frame);

waitKey(10);

}

return 0;

}创建CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project( DisplayCamera )

find_package( OpenCV REQUIRED )

add_executable( DisplayCamera camera.cpp )

target_link_libraries(DisplayCamera ${OpenCV_LIBS})cmake .&&make

sudo ./DisplayCamera 4

6.Opencv点云realsense点云

6.1.安装realsense库

6.2.在源码中将example.h拷到include文件

// License: Apache 2.0. See LICENSE file in root directory.

// Copyright(c) 2017 Intel Corporation. All Rights Reserved.

#include <librealsense2/rs.h>

#include <stdio.h>

#include <stdlib.h>

/* Function calls to librealsense may raise errors of type rs_error*/

void check_error(rs2_error* e)

{

if (e)

{

printf("rs_error was raised when calling %s(%s):\n", rs2_get_failed_function(e), rs2_get_failed_args(e));

printf(" %s\n", rs2_get_error_message(e));

exit(EXIT_FAILURE);

}

}

void print_device_info(rs2_device* dev)

{

rs2_error* e = 0;

printf("\nUsing device 0, an %s\n", rs2_get_device_info(dev, RS2_CAMERA_INFO_NAME, &e));

check_error(e);

printf(" Serial number: %s\n", rs2_get_device_info(dev, RS2_CAMERA_INFO_SERIAL_NUMBER, &e));

check_error(e);

printf(" Firmware version: %s\n\n", rs2_get_device_info(dev, RS2_CAMERA_INFO_FIRMWARE_VERSION, &e));

check_error(e);

}6.3.修改rs-depth.cpp

// License: Apache 2.0. See LICENSE file in root directory.

// Copyright(c) 2017 Intel Corporation. All Rights Reserved.

/* Include the librealsense C header files */

#include <librealsense2/rs.h>

#include <ctime>

#include <librealsense2/h/rs_pipeline.h>

#include <librealsense2/h/rs_option.h>

#include <librealsense2/h/rs_frame.h>

#include <opencv2/opencv.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/imgproc/types_c.h>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/objdetect/objdetect.hpp>

#include <vector>

#include <fstream>

#include <iostream>

#include <string>

#include <stdlib.h>

#include <stdint.h>

#include <stdio.h>

#include "./include/example.h"

using namespace std;

using namespace cv;

// These parameters are reconfigurable //

#define STREAM RS2_STREAM_DEPTH // rs2_stream is a types of data provided by RealSense device //

#define FORMAT RS2_FORMAT_Z16 // rs2_format identifies how binary data is encoded within a frame //

#define WIDTH 640 // Defines the number of columns for each frame or zero for auto resolve//

#define HEIGHT 480 // Defines the number of lines for each frame or zero for auto resolve //

#define FPS 30 // Defines the rate of frames per second //

#define STREAM_INDEX 0 // Defines the stream index, used for multiple streams of the same type //

#define HEIGHT_RATIO 20 // Defines the height ratio between the original frame to the new frame //

#define WIDTH_RATIO 10 // Defines the width ratio between the original frame to the new frame //

#define CV_COLOR_DARKGRAY cv::Scalar(169,169,169)

#define CV_COLOR_BLACK cv::Scalar(0,0,0)

// The number of meters represented by a single depth unit

float get_depth_unit_value(const rs2_device* const dev)

{

rs2_error* e = 0;

rs2_sensor_list* sensor_list = rs2_query_sensors(dev, &e);

check_error(e);

int num_of_sensors = rs2_get_sensors_count(sensor_list, &e);

check_error(e);

float depth_scale = 0;

int is_depth_sensor_found = 0;

int i;

for (i = 0; i < num_of_sensors; ++i)

{

rs2_sensor* sensor = rs2_create_sensor(sensor_list, i, &e);

check_error(e);

// Check if the given sensor can be extended to depth sensor interface

is_depth_sensor_found = rs2_is_sensor_extendable_to(sensor, RS2_EXTENSION_DEPTH_SENSOR, &e);

check_error(e);

if (1 == is_depth_sensor_found)

{

depth_scale = rs2_get_option((const rs2_options*)sensor, RS2_OPTION_DEPTH_UNITS, &e);

check_error(e);

rs2_delete_sensor(sensor);

break;

}

rs2_delete_sensor(sensor);

}

rs2_delete_sensor_list(sensor_list);

if (0 == is_depth_sensor_found)

{

printf("Depth sensor not found!\n");

exit(EXIT_FAILURE);

}

return depth_scale;

}

int main()

{

const Size sizepx(640, 860);

Mat screen(sizepx.width, sizepx.height, CV_32FC3);

screen.setTo(Scalar(255, 0, 0));//背景颜色

clock_t startTime,endTime;

float timefps=0;

char str[10];//用于存放帧率的字符串

rs2_error* e = 0;

struct color_point

{

int x;

int y;

double color;

}color_Point;

// Create a context object. This object owns the handles to all connected realsense devices.

// The returned object should be released with rs2_delete_context(...)

rs2_context* ctx = rs2_create_context(RS2_API_VERSION, &e); //realsense 版本

check_error(e);

/* Get a list of all the connected devices. */

// The returned object should be released with rs2_delete_device_list(...)

rs2_device_list* device_list = rs2_query_devices(ctx, &e); //设备列表

check_error(e);

int dev_count = rs2_get_device_count(device_list, &e);//设备数量

check_error(e);

printf("There are %d connected RealSense devices.\n", dev_count);

if (0 == dev_count)

return EXIT_FAILURE;

// Get the first connected device

// The returned object should be released with rs2_delete_device(...)

rs2_device* dev = rs2_create_device(device_list, 0, &e);//连接设备

check_error(e);

print_device_info(dev);//打印设备信息

/* Determine depth value corresponding to one meter */

uint16_t one_meter = (uint16_t)(1.0f / get_depth_unit_value(dev));//计算深度信息距离,取反,结果>1在1m内

// Create a pipeline to configure, start and stop camera streaming

// The returned object should be released with rs2_delete_pipeline(...)

rs2_pipeline* pipeline = rs2_create_pipeline(ctx, &e);//创建管道 开始或结束相机数据ctx表示设备

check_error(e);

// Create a config instance, used to specify hardware configuration

// The retunred object should be released with rs2_delete_config(...)

rs2_config* config = rs2_create_config(&e);

check_error(e);

// Request a specific configuration

rs2_config_enable_stream(config, STREAM, STREAM_INDEX, WIDTH, HEIGHT, FORMAT, FPS, &e);

check_error(e);

// Start the pipeline streaming

// The retunred object should be released with rs2_delete_pipeline_profile(...)

rs2_pipeline_profile* pipeline_profile = rs2_pipeline_start_with_config(pipeline, config, &e);

if (e)

{

printf("The connected device doesn't support depth streaming!\n");

exit(EXIT_FAILURE);

}

rs2_stream_profile_list* stream_profile_list = rs2_pipeline_profile_get_streams(pipeline_profile, &e);

if (e)

{

printf("Failed to create stream profile list!\n");

exit(EXIT_FAILURE);

}

rs2_stream_profile* stream_profile = (rs2_stream_profile*)rs2_get_stream_profile(stream_profile_list, 0, &e);

if (e)

{

printf("Failed to create stream profile!\n");

exit(EXIT_FAILURE);

}

rs2_stream stream; rs2_format format; int index; int unique_id; int framerate;

rs2_get_stream_profile_data(stream_profile, &stream, &format, &index, &unique_id, &framerate, &e);

if (e)

{

printf("Failed to get stream profile data!\n");

exit(EXIT_FAILURE);

}

int width; int height;

rs2_get_video_stream_resolution(stream_profile, &width, &height, &e); //获取宽高与数据流

if (e)

{

printf("Failed to get video stream resolution data!\n");

exit(EXIT_FAILURE);

}

int rows = height / HEIGHT_RATIO;

int row_length = width / WIDTH_RATIO;

int display_size = (rows + 1) * (row_length + 1);

int buffer_size = display_size * sizeof(char);

char* buffer = (char *)calloc(display_size, sizeof(char));

char* out = NULL;

while (1)

{

// This call waits until a new composite_frame is available

// composite_frame holds a set of frames. It is used to prevent frame drops

// The returned object should be released with rs2_release_frame(...)

rs2_frame* frames = rs2_pipeline_wait_for_frames(pipeline, RS2_DEFAULT_TIMEOUT, &e);

check_error(e);

// Returns the number of frames embedded within the composite frame

int num_of_frames = rs2_embedded_frames_count(frames, &e);

check_error(e);

int i;

startTime = clock();//计时开始

for (i = 0; i < num_of_frames; ++i)

{

// The retunred object should be released with rs2_release_frame(...)

rs2_frame* frame = rs2_extract_frame(frames, i, &e);

check_error(e);

// Check if the given frame can be extended to depth frame interface

// Accept only depth frames and skip other frames

if (0 == rs2_is_frame_extendable_to(frame, RS2_EXTENSION_DEPTH_FRAME, &e))

{

rs2_release_frame(frame);

continue;

}

/* Retrieve depth data, configured as 16-bit depth values */

const uint16_t* depth_frame_data = (const uint16_t*)(rs2_get_frame_data(frame, &e));//点云数据1

check_error(e);

/* Print a simple text-based representation of the image, by breaking it into 10x5 pixel regions and approximating the coverage of pixels within one meter */

out = buffer;

int x, y, i;

int* coverage = (int*)calloc(row_length, sizeof(int));

vector<color_point> vectorp;//存放点容器

for (y = 0; y < height; ++y)

{

for (x = 0; x < width; ++x)

{

// Create a depth histogram to each row

int coverage_index = x / WIDTH_RATIO;

int depth = *depth_frame_data++; //指针指向数据

if (depth > 0 && depth < one_meter*3&&x%2==0)

{

++coverage[coverage_index];

color_Point.x=x;

color_Point.y=y;

color_Point.color=(double)depth/one_meter/3.0;

vectorp.push_back(color_Point);

}

}

if ((y % HEIGHT_RATIO) == (HEIGHT_RATIO-1))

{

for (i = 0; i < (row_length); ++i)

{

static const char* pixels = " .:nhBXWW";

int pixel_index = (coverage[i] / (HEIGHT_RATIO * WIDTH_RATIO / sizeof(pixels)));

*out++ = pixels[pixel_index];

coverage[i] = 0;

}

*out++ = '\n';

}

}

screen.setTo(Scalar(1, 1, 1));//背景蓝色

for(int point_num=0;point_num<vectorp.size();point_num++)

{

Point point_center;

point_center=Point(vectorp[point_num].x,vectorp[point_num].y);

circle(screen,point_center,1, Scalar(vectorp[point_num].color,vectorp[point_num].color/3.0,1-vectorp[point_num].color),3,8,0);//点是绿色

}

//显示文字

string text = "FPS:";

sprintf(str, "%.2f", timefps); // 帧率保留两位小数

text += str; // 在"FPS:"后加入帧率数值字符串

int font_face = cv::FONT_HERSHEY_COMPLEX;

double font_scale =0.5;

int thickness = 2,baseline;

//获取文本框的长宽

Size text_size = getTextSize(text, font_face, font_scale, thickness, &baseline);

//将文本框居中绘制

Point origin;

//origin.x = screen.cols / 2 - text_size.width / 2;

//origin.y = screen.rows / 2 + text_size.height / 2;

origin.x =text_size.width / 2;

origin.y = 50 + text_size.height / 2;

putText(screen, text, origin, font_face, font_scale, cv::Scalar(0, 0, 0), thickness, 8, 0);

//显示文字

imshow("屏幕", screen);

waitKey(10);

*out++ = 0;

//printf("\n%s", buffer);

free(coverage);

rs2_release_frame(frame);

}

rs2_release_frame(frames);

endTime = clock();//计时结束

timefps=1.0/((float)(endTime - startTime)/CLOCKS_PER_SEC);

}

// Stop the pipeline streaming

rs2_pipeline_stop(pipeline, &e);

check_error(e);

// Release resources

free(buffer);

rs2_delete_pipeline_profile(pipeline_profile);

rs2_delete_stream_profiles_list(stream_profile_list);

rs2_delete_stream_profile(stream_profile);

rs2_delete_config(config);

rs2_delete_pipeline(pipeline);

rs2_delete_device(dev);

rs2_delete_device_list(device_list);

rs2_delete_context(ctx);

return EXIT_SUCCESS;

}

修改CMakeLists.txt

# License: Apache 2.0. See LICENSE file in root directory.

# Copyright(c) 2019 Intel Corporation. All Rights Reserved.

# minimum required cmake version: 3.1.0

cmake_minimum_required(VERSION 3.1.0)

project(RealsenseExamples-Depth)

find_package( OpenCV REQUIRED )

if(NOT WIN32)

SET(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -pthread")

endif()

set(DEPENDENCIES realsense2 ${PCL_LIBRARIES})

find_package(OpenGL)

if(NOT OPENGL_FOUND)

message(FATAL_ERROR "\n\n OpenGL package is missing!\n\n")

endif()

list(APPEND DEPENDENCIES ${OPENGL_LIBRARIES})

if(WIN32)

list(APPEND DEPENDENCIES glfw3)

else()

find_package(glfw3 REQUIRED)

list(APPEND DEPENDENCIES glfw)

endif()

add_executable(PointDepth rs-depth.cpp )

target_link_libraries(PointDepth ${DEPENDENCIES} ${OpenCV_LIBS})

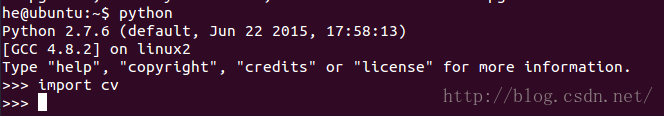

直接使用apt安装

sudo apt-get install python-opencv

sudo apt-get install python-numpy

测试:

打开python,importcv模块成功即可。

import cv

6929

6929

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?