图像风格迁移是指将一张图像的风格迁移到另一张图像上,得到一张新的图像,该图像既具有原图像的内容,又具有另一张图像的风格。基于卷积神经网络的图像风格迁移是最近比较流行的实现方法,其主要思路是利用卷积神经网络抽取不同层次的特征来表示图像,然后通过定义一个损失函数来优化得到迁移后的图像。

以下是基于卷积神经网络的图像风格迁移的实现步骤:

1. 定义损失函数

风格损失函数用于衡量迁移后图像与风格图像之间的差异,内容损失函数用于衡量迁移后图像与内容图像之间的差异,总损失函数是二者的加权和。

2. 加载预训练卷积神经网络

可以使用VGG16、VGG19等预训练的卷积神经网络,将其主干部分提取出来,作为图像特征的提取器。

3. 定义图像处理函数

定义图像风格迁移函数,该函数需要输入图像、风格图像和内容图像,输出迁移后的图像。

4. 训练模型

使用输入图像和目标图像对模型进行训练,更新权重,直到损失函数达到最小值。

5. 测试模型

使用已经训练好的模型对新的图像进行风格迁移,得到迁移后的图像。

总的来说,基于卷积神经网络的图像风格迁移实现过程较为复杂,需要对神经网络的结构和保存方式有一定的了解,同时需要对图像处理和优化算法有一定的实践经验。

直接上代码

# Copyright (c) 2015-2021 Anish Athalye. Released under GPLv3.

import numpy as np

import scipy.io

#import tensorflow.compat.v1 as tf

#tf.disable_v2_behavior()

import tensorflow._api.v2.compat.v1 as tf

tf.disable_v2_behavior()

# work-around for more recent versions of tensorflow

# https://github.com/tensorflow/tensorflow/issues/24496

config = tf.ConfigProto()

config.gpu_options.allow_growth = True

sess = tf.Session(config=config)

VGG19_LAYERS = (

'conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1',

'conv2_1', 'relu2_1', 'conv2_2', 'relu2_2', 'pool2',

'conv3_1', 'relu3_1', 'conv3_2', 'relu3_2', 'conv3_3',

'relu3_3', 'conv3_4', 'relu3_4', 'pool3',

'conv4_1', 'relu4_1', 'conv4_2', 'relu4_2', 'conv4_3',

'relu4_3', 'conv4_4', 'relu4_4', 'pool4',

'conv5_1', 'relu5_1', 'conv5_2', 'relu5_2', 'conv5_3',

'relu5_3', 'conv5_4', 'relu5_4'

)

def load_net(data_path):

data = scipy.io.loadmat(data_path)

if 'normalization' in data:

# old format, for data where

# MD5(imagenet-vgg-verydeep-19.mat) = 8ee3263992981a1d26e73b3ca028a123

mean_pixel = np.mean(data['normalization'][0][0][0], axis=(0, 1))

else:

# new format, for data where

# MD5(imagenet-vgg-verydeep-19.mat) = 106118b7cf60435e6d8e04f6a6dc3657

mean_pixel = data['meta']['normalization'][0][0][0][0][2][0][0]

weights = data['layers'][0]

return weights, mean_pixel

def net_preloaded(weights, input_image, pooling):

net = {}

current = input_image

for i, name in enumerate(VGG19_LAYERS):

kind = name[:4]

if kind == 'conv':

if isinstance(weights[i][0][0][0][0], np.ndarray):

# old format

kernels, bias = weights[i][0][0][0][0]

else:

# new format

kernels, bias = weights[i][0][0][2][0]

# matconvnet: weights are [width, height, in_channels, out_channels]

# tensorflow: weights are [height, width, in_channels, out_channels]

kernels = np.transpose(kernels, (1, 0, 2, 3))

bias = bias.reshape(-1)

current = _conv_layer(current, kernels, bias)

elif kind == 'relu':

current = tf.nn.relu(current)

elif kind == 'pool':

current = _pool_layer(current, pooling)

net[name] = current

assert len(net) == len(VGG19_LAYERS)

return net

def _conv_layer(input, weights, bias):

conv = tf.nn.conv2d(input, tf.constant(weights), strides=(1, 1, 1, 1),

padding='SAME')

return tf.nn.bias_add(conv, bias)

def _pool_layer(input, pooling):

if pooling == 'avg':

return tf.nn.avg_pool(input, ksize=(1, 2, 2, 1), strides=(1, 2, 2, 1),

padding='SAME')

else:

return tf.nn.max_pool(input, ksize=(1, 2, 2, 1), strides=(1, 2, 2, 1),

padding='SAME')

def preprocess(image, mean_pixel):

return image - mean_pixel

def unprocess(image, mean_pixel):

return image + mean_pixel

# Copyright (c) 2015-2021 Anish Athalye. Released under GPLv3.

import os

import time

from collections import OrderedDict

from PIL import Image

import numpy as np

#import tensorflow.compat.v1 as tf

#tf.disable_v2_behavior()

import tensorflow._api.v2.compat.v1 as tf

tf.disable_v2_behavior()

import vgg

CONTENT_LAYERS = ('relu4_2', 'relu5_2')

STYLE_LAYERS = ('relu1_1', 'relu2_1', 'relu3_1', 'relu4_1', 'relu5_1')

try:

reduce

except NameError:

from functools import reduce

def get_loss_vals(loss_store):

return OrderedDict((key, val.eval()) for key,val in loss_store.items())

def print_progress(loss_vals):

for key,val in loss_vals.items():

print('{:>13s} {:g}'.format(key + ' loss:', val))

def stylize(network, initial, initial_noiseblend, content, styles, preserve_colors, iterations,

content_weight, content_weight_blend, style_weight, style_layer_weight_exp, style_blend_weights, tv_weight,

learning_rate, beta1, beta2, epsilon, pooling,

print_iterations=None, checkpoint_iterations=None):

"""

Stylize images.

This function yields tuples (iteration, image, loss_vals) at every

iteration. However `image` and `loss_vals` are None by default. Each

`checkpoint_iterations`, `image` is not None. Each `print_iterations`,

`loss_vals` is not None.

`loss_vals` is a dict with loss values for the current iteration, e.g.

``{'content': 1.23, 'style': 4.56, 'tv': 7.89, 'total': 13.68}``.

:rtype: iterator[tuple[int,image]]

"""

shape = (1,) + content.shape

style_shapes = [(1,) + style.shape for style in styles]

content_features = {}

style_features = [{} for _ in styles]

vgg_weights, vgg_mean_pixel = vgg.load_net(network)

layer_weight = 1.0

style_layers_weights = {}

for style_layer in STYLE_LAYERS:

style_layers_weights[style_layer] = layer_weight

layer_weight *= style_layer_weight_exp

# normalize style layer weights

layer_weights_sum = 0

for style_layer in STYLE_LAYERS:

layer_weights_sum += style_layers_weights[style_layer]

for style_layer in STYLE_LAYERS:

style_layers_weights[style_layer] /= layer_weights_sum

# compute content features in feedforward mode

g = tf.Graph()

with g.as_default(), g.device('/cpu:0'), tf.Session() as sess:

image = tf.placeholder('float', shape=shape)

net = vgg.net_preloaded(vgg_weights, image, pooling)

content_pre = np.array([vgg.preprocess(content, vgg_mean_pixel)])

for layer in CONTENT_LAYERS:

content_features[layer] = net[layer].eval(feed_dict={image: content_pre})

# compute style features in feedforward mode

for i in range(len(styles)):

g = tf.Graph()

with g.as_default(), g.device('/cpu:0'), tf.Session() as sess:

image = tf.placeholder('float', shape=style_shapes[i])

net = vgg.net_preloaded(vgg_weights, image, pooling)

style_pre = np.array([vgg.preprocess(styles[i], vgg_mean_pixel)])

for layer in STYLE_LAYERS:

features = net[layer].eval(feed_dict={image: style_pre})

features = np.reshape(features, (-1, features.shape[3]))

gram = np.matmul(features.T, features) / features.size

style_features[i][layer] = gram

initial_content_noise_coeff = 1.0 - initial_noiseblend

# make stylized image using backpropogation

with tf.Graph().as_default():

if initial is None:

noise = np.random.normal(size=shape, scale=np.std(content) * 0.1)

initial = tf.random_normal(shape) * 0.256

else:

initial = np.array([vgg.preprocess(initial, vgg_mean_pixel)])

initial = initial.astype('float32')

noise = np.random.normal(size=shape, scale=np.std(content) * 0.1)

initial = (initial) * initial_content_noise_coeff + (tf.random_normal(shape) * 0.256) * (1.0 - initial_content_noise_coeff)

image = tf.Variable(initial)

net = vgg.net_preloaded(vgg_weights, image, pooling)

# content loss

content_layers_weights = {}

content_layers_weights['relu4_2'] = content_weight_blend

content_layers_weights['relu5_2'] = 1.0 - content_weight_blend

content_loss = 0

content_losses = []

for content_layer in CONTENT_LAYERS:

content_losses.append(content_layers_weights[content_layer] * content_weight * (2 * tf.nn.l2_loss(

net[content_layer] - content_features[content_layer]) /

content_features[content_layer].size))

content_loss += reduce(tf.add, content_losses)

# style loss

style_loss = 0

for i in range(len(styles)):

style_losses = []

for style_layer in STYLE_LAYERS:

layer = net[style_layer]

_, height, width, number = map(lambda i: i.value, layer.get_shape())

size = height * width * number

feats = tf.reshape(layer, (-1, number))

gram = tf.matmul(tf.transpose(feats), feats) / size

style_gram = style_features[i][style_layer]

style_losses.append(style_layers_weights[style_layer] * 2 * tf.nn.l2_loss(gram - style_gram) / style_gram.size)

style_loss += style_weight * style_blend_weights[i] * reduce(tf.add, style_losses)

# total variation denoising

tv_y_size = _tensor_size(image[:,1:,:,:])

tv_x_size = _tensor_size(image[:,:,1:,:])

tv_loss = tv_weight * 2 * (

(tf.nn.l2_loss(image[:,1:,:,:] - image[:,:shape[1]-1,:,:]) /

tv_y_size) +

(tf.nn.l2_loss(image[:,:,1:,:] - image[:,:,:shape[2]-1,:]) /

tv_x_size))

# total loss

loss = content_loss + style_loss + tv_loss

# We use OrderedDict to make sure we have the same order of loss types

# (content, tv, style, total) as defined by the initial costruction of

# the loss_store dict. This is important for print_progress() and

# saving loss_arrs (column order) in the main script.

#

# Subtle Gotcha (tested with Python 3.5): The syntax

# OrderedDict(key1=val1, key2=val2, ...) does /not/ create the same

# order since, apparently, it first creates a normal dict with random

# order (< Python 3.7) and then wraps that in an OrderedDict. We have

# to pass in a data structure which is already ordered. I'd call this a

# bug, since both constructor syntax variants result in different

# objects. In 3.6, the order is preserved in dict() in CPython, in 3.7

# they finally made it part of the language spec. Thank you!

loss_store = OrderedDict([('content', content_loss),

('style', style_loss),

('tv', tv_loss),

('total', loss)])

# optimizer setup

train_step = tf.train.AdamOptimizer(learning_rate, beta1, beta2, epsilon).minimize(loss)

# optimization

best_loss = float('inf')

best = None

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

print('Optimization started...')

if (print_iterations and print_iterations != 0):

print_progress(get_loss_vals(loss_store))

iteration_times = []

start = time.time()

for i in range(iterations):

iteration_start = time.time()

if i > 0:

elapsed = time.time() - start

# take average of last couple steps to get time per iteration

remaining = np.mean(iteration_times[-10:]) * (iterations - i)

print('Iteration %4d/%4d (%s elapsed, %s remaining)' % (

i + 1,

iterations,

hms(elapsed),

hms(remaining)

))

else:

print('Iteration %4d/%4d' % (i + 1, iterations))

train_step.run()

last_step = (i == iterations - 1)

if last_step or (print_iterations and i % print_iterations == 0):

loss_vals = get_loss_vals(loss_store)

print_progress(loss_vals)

else:

loss_vals = None

if (checkpoint_iterations and i % checkpoint_iterations == 0) or last_step:

this_loss = loss.eval()

if this_loss < best_loss:

best_loss = this_loss

best = image.eval()

img_out = vgg.unprocess(best.reshape(shape[1:]), vgg_mean_pixel)

if preserve_colors:

original_image = np.clip(content, 0, 255)

styled_image = np.clip(img_out, 0, 255)

# Luminosity transfer steps:

# 1. Convert stylized RGB->grayscale accoriding to Rec.601 luma (0.299, 0.587, 0.114)

# 2. Convert stylized grayscale into YUV (YCbCr)

# 3. Convert original image into YUV (YCbCr)

# 4. Recombine (stylizedYUV.Y, originalYUV.U, originalYUV.V)

# 5. Convert recombined image from YUV back to RGB

# 1

styled_grayscale = rgb2gray(styled_image)

styled_grayscale_rgb = gray2rgb(styled_grayscale)

# 2

styled_grayscale_yuv = np.array(Image.fromarray(styled_grayscale_rgb.astype(np.uint8)).convert('YCbCr'))

# 3

original_yuv = np.array(Image.fromarray(original_image.astype(np.uint8)).convert('YCbCr'))

# 4

w, h, _ = original_image.shape

combined_yuv = np.empty((w, h, 3), dtype=np.uint8)

combined_yuv[..., 0] = styled_grayscale_yuv[..., 0]

combined_yuv[..., 1] = original_yuv[..., 1]

combined_yuv[..., 2] = original_yuv[..., 2]

# 5

img_out = np.array(Image.fromarray(combined_yuv, 'YCbCr').convert('RGB'))

else:

img_out = None

yield i+1 if last_step else i, img_out, loss_vals

iteration_end = time.time()

iteration_times.append(iteration_end - iteration_start)

def _tensor_size(tensor):

from operator import mul

return reduce(mul, (d.value for d in tensor.get_shape()), 1)

def rgb2gray(rgb):

return np.dot(rgb[...,:3], [0.299, 0.587, 0.114])

def gray2rgb(gray):

w, h = gray.shape

rgb = np.empty((w, h, 3), dtype=np.float32)

rgb[:, :, 2] = rgb[:, :, 1] = rgb[:, :, 0] = gray

return rgb

def hms(seconds):

seconds = int(seconds)

hours = (seconds // (60 * 60))

minutes = (seconds // 60) % 60

seconds = seconds % 60

if hours > 0:

return '%d hr %d min' % (hours, minutes)

elif minutes > 0:

return '%d min %d sec' % (minutes, seconds)

else:

return '%d sec' % seconds

# Copyright (c) 2015-2021 Anish Athalye. Released under GPLv3.

import os

import math

import re

from argparse import ArgumentParser

from collections import OrderedDict

from PIL import Image

import numpy as np

from stylize import stylize

# default arguments

CONTENT_WEIGHT = 5e0

CONTENT_WEIGHT_BLEND = 1

STYLE_WEIGHT = 5e2

TV_WEIGHT = 1e2

STYLE_LAYER_WEIGHT_EXP = 1

LEARNING_RATE = 1e1

BETA1 = 0.9

BETA2 = 0.999

EPSILON = 1e-08

STYLE_SCALE = 1.0

ITERATIONS = 2000

VGG_PATH = 'imagenet-vgg-verydeep-19.mat'

CONTENT_PATH = 'content.jpg'

STYLE_PATH = 'style.jpg'

content = 'examples/food4.jpg' # 此处为内容图片路径,可修改

styles = ['examples/2-style1.jpg'] # 此处为风格图片路径,可修改

POOLING = 'max'

def build_parser():

parser = ArgumentParser()

parser.add_argument('--content',

dest='content', help='content image',default=content,

metavar='CONTENT')

parser.add_argument('--styles',

dest='styles',

nargs='+', help='one or more style images',default=styles,

metavar='STYLE')

parser.add_argument('--output',

dest='output', help='output path',

default='./examples/1-res.jpg',

metavar='OUTPUT')

parser.add_argument('--iterations', type=int,

dest='iterations', help='iterations (default %(default)s)',

metavar='ITERATIONS', default=ITERATIONS)

parser.add_argument('--print-iterations', type=int,

dest='print_iterations', help='statistics printing frequency',

metavar='PRINT_ITERATIONS')

parser.add_argument('--checkpoint-output',

dest='checkpoint_output',

help='checkpoint output format, e.g. output_{:05}.jpg or '

'output_%%05d.jpg',

metavar='OUTPUT', default=None)

parser.add_argument('--checkpoint-iterations', type=int,

dest='checkpoint_iterations', help='checkpoint frequency',

metavar='CHECKPOINT_ITERATIONS', default=None)

parser.add_argument('--progress-write', default=False, action='store_true',

help="write iteration progess data to OUTPUT's dir",

required=False)

parser.add_argument('--progress-plot', default=False, action='store_true',

help="plot iteration progess data to OUTPUT's dir",

required=False)

parser.add_argument('--width', type=int,

dest='width', help='output width',

metavar='WIDTH')

parser.add_argument('--style-scales', type=float,

dest='style_scales',

nargs='+', help='one or more style scales',

metavar='STYLE_SCALE')

parser.add_argument('--network',

dest='network', help='path to network parameters (default %(default)s)',

metavar='VGG_PATH', default=VGG_PATH)

parser.add_argument('--content-weight-blend', type=float,

dest='content_weight_blend',

help='content weight blend, conv4_2 * blend + conv5_2 * (1-blend) '

'(default %(default)s)',

metavar='CONTENT_WEIGHT_BLEND', default=CONTENT_WEIGHT_BLEND)

parser.add_argument('--content-weight', type=float,

dest='content_weight', help='content weight (default %(default)s)',

metavar='CONTENT_WEIGHT', default=CONTENT_WEIGHT)

parser.add_argument('--style-weight', type=float,

dest='style_weight', help='style weight (default %(default)s)',

metavar='STYLE_WEIGHT', default=STYLE_WEIGHT)

parser.add_argument('--style-layer-weight-exp', type=float,

dest='style_layer_weight_exp',

help='style layer weight exponentional increase - '

'weight(layer<n+1>) = weight_exp*weight(layer<n>) '

'(default %(default)s)',

metavar='STYLE_LAYER_WEIGHT_EXP', default=STYLE_LAYER_WEIGHT_EXP)

parser.add_argument('--style-blend-weights', type=float,

dest='style_blend_weights', help='style blending weights',

nargs='+', metavar='STYLE_BLEND_WEIGHT')

parser.add_argument('--tv-weight', type=float,

dest='tv_weight',

help='total variation regularization weight (default %(default)s)',

metavar='TV_WEIGHT', default=TV_WEIGHT)

parser.add_argument('--learning-rate', type=float,

dest='learning_rate', help='learning rate (default %(default)s)',

metavar='LEARNING_RATE', default=LEARNING_RATE)

parser.add_argument('--beta1', type=float,

dest='beta1', help='Adam: beta1 parameter (default %(default)s)',

metavar='BETA1', default=BETA1)

parser.add_argument('--beta2', type=float,

dest='beta2', help='Adam: beta2 parameter (default %(default)s)',

metavar='BETA2', default=BETA2)

parser.add_argument('--eps', type=float,

dest='epsilon', help='Adam: epsilon parameter (default %(default)s)',

metavar='EPSILON', default=EPSILON)

parser.add_argument('--initial',

dest='initial', help='initial image',

metavar='INITIAL')

parser.add_argument('--initial-noiseblend', type=float,

dest='initial_noiseblend',

help='ratio of blending initial image with normalized noise '

'(if no initial image specified, content image is used) '

'(default %(default)s)',

metavar='INITIAL_NOISEBLEND')

parser.add_argument('--preserve-colors', action='store_true',

dest='preserve_colors',

help='style-only transfer (preserving colors) - if color transfer '

'is not needed')

parser.add_argument('--pooling',

dest='pooling',

help='pooling layer configuration: max or avg (default %(default)s)',

metavar='POOLING', default=POOLING)

parser.add_argument('--overwrite', action='store_true', dest='overwrite',

help='write file even if there is already a file with that name')

return parser

def fmt_imsave(fmt, iteration):

if re.match(r'^.*\{.*\}.*$', fmt):

return fmt.format(iteration)

elif '%' in fmt:

return fmt % iteration

else:

raise ValueError("illegal format string '{}'".format(fmt))

def main():

# https://stackoverflow.com/a/42121886

key = 'TF_CPP_MIN_LOG_LEVEL'

if key not in os.environ:

os.environ[key] = '2'

parser = build_parser()

options = parser.parse_args()

if not os.path.isfile(options.network):

parser.error("Network %s does not exist. (Did you forget to "

"download it?)" % options.network)

if [options.checkpoint_iterations,

options.checkpoint_output].count(None) == 1:

parser.error("use either both of checkpoint_output and "

"checkpoint_iterations or neither")

if options.checkpoint_output is not None:

if re.match(r'^.*(\{.*\}|%.*).*$', options.checkpoint_output) is None:

parser.error("To save intermediate images, the checkpoint_output "

"parameter must contain placeholders (e.g. "

"`foo_{}.jpg` or `foo_%d.jpg`")

content_image = imread(options.content)

style_images = [imread(style) for style in options.styles]

#style_images = imread(options.styles)

width = options.width

if width is not None:

new_shape = (int(math.floor(float(content_image.shape[0]) /

content_image.shape[1] * width)), width)

content_image = imresize(content_image, new_shape)

target_shape = content_image.shape

for i in range(len(style_images)):

style_scale = STYLE_SCALE

if options.style_scales is not None:

style_scale = options.style_scales[i]

# style_images[i] = imresize(style_images[i], style_scale *

# target_shape[1] / style_images[i].shape[1])

style_images[i] = imresize(style_images[i], style_scale *

target_shape[1] / style_images[i].shape[1])

style_blend_weights = options.style_blend_weights

if style_blend_weights is None:

# default is equal weights

style_blend_weights = [1.0/len(style_images) for _ in style_images]

else:

total_blend_weight = sum(style_blend_weights)

style_blend_weights = [weight/total_blend_weight

for weight in style_blend_weights]

initial = options.initial

if initial is not None:

initial = imresize(imread(initial), content_image.shape[:2])

# Initial guess is specified, but not noiseblend - no noise should be blended

if options.initial_noiseblend is None:

options.initial_noiseblend = 0.0

else:

# Neither inital, nor noiseblend is provided, falling back to random

# generated initial guess

if options.initial_noiseblend is None:

options.initial_noiseblend = 1.0

if options.initial_noiseblend < 1.0:

initial = content_image

# try saving a dummy image to the output path to make sure that it's writable

if os.path.isfile(options.output) and not options.overwrite:

raise IOError("%s already exists, will not replace it without "

"the '--overwrite' flag" % options.output)

try:

imsave(options.output, np.zeros((500, 500, 3)))

except:

raise IOError('%s is not writable or does not have a valid file '

'extension for an image file' % options.output)

loss_arrs = None

for iteration, image, loss_vals in stylize(

network=options.network,

initial=initial,

initial_noiseblend=options.initial_noiseblend,

content=content_image,

styles=style_images,

preserve_colors=options.preserve_colors,

iterations=options.iterations,

content_weight=options.content_weight,

content_weight_blend=options.content_weight_blend,

style_weight=options.style_weight,

style_layer_weight_exp=options.style_layer_weight_exp,

style_blend_weights=style_blend_weights,

tv_weight=options.tv_weight,

learning_rate=options.learning_rate,

beta1=options.beta1,

beta2=options.beta2,

epsilon=options.epsilon,

pooling=options.pooling,

print_iterations=options.print_iterations,

checkpoint_iterations=options.checkpoint_iterations,

):

if (image is not None) and (options.checkpoint_output is not None):

imsave(fmt_imsave(options.checkpoint_output, iteration), image)

if (loss_vals is not None) \

and (options.progress_plot or options.progress_write):

if loss_arrs is None:

itr = []

loss_arrs = OrderedDict((key, []) for key in loss_vals.keys())

for key,val in loss_vals.items():

loss_arrs[key].append(val)

itr.append(iteration)

imsave(options.output, image)

if options.progress_write:

fn = "{}/progress.txt".format(os.path.dirname(options.output))

tmp = np.empty((len(itr), len(loss_arrs)+1), dtype=float)

tmp[:,0] = np.array(itr)

for ii,val in enumerate(loss_arrs.values()):

tmp[:,ii+1] = np.array(val)

np.savetxt(fn, tmp, header=' '.join(['itr'] + list(loss_arrs.keys())))

if options.progress_plot:

import matplotlib

matplotlib.use('Agg')

from matplotlib import pyplot as plt

fig,ax = plt.subplots()

for key, val in loss_arrs.items():

ax.semilogy(itr, val, label=key)

ax.legend()

ax.set_xlabel("iterations")

ax.set_ylabel("loss")

fig.savefig("{}/progress.png".format(os.path.dirname(options.output)))

def imread(path):

img = np.array(Image.open(path)).astype(np.float)

if len(img.shape) == 2:

# grayscale

img = np.dstack((img,img,img))

elif img.shape[2] == 4:

# PNG with alpha channel

img = img[:,:,:3]

return img

def imsave(path, img):

img = np.clip(img, 0, 255).astype(np.uint8)

Image.fromarray(img).save(path, quality=95)

def imresize(arr, size):

img = Image.fromarray(np.clip(arr, 0, 255).astype(np.uint8))

if isinstance(size, tuple):

height, width = size

else:

width = int(img.width * size)

height = int(img.height * size)

return np.array(img)

if __name__ == '__main__':

main()

原图

风格样图

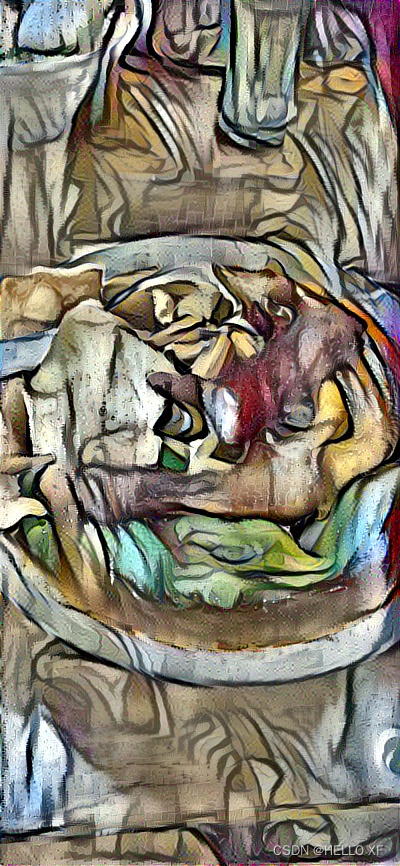

迭代1000次效果

迭代2000次效果

结论:基于卷积神经网络VGG,通过模型调参可以良好的实现风格迁移

部分内容来自网络仅供学习,如有侵权请联系删除

1925

1925

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?