```python
from keras.datasets import mnist
from keras import models
from keras import layers

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

# Hold out the last 10,000 of the 60,000 training images as a validation set
X_VAL = train_images[50000:]
Y_VAL = train_labels[50000:]
X_TRAIN = train_images[:50000]
Y_TRAIN = train_labels[:50000]

# Flatten each 28x28 image into a 784-element vector and scale pixels to [0, 1]
X_TRAIN = X_TRAIN.reshape(50000, 28 * 28).astype('float32') / 255.0
X_VAL = X_VAL.reshape(10000, 28 * 28).astype('float32') / 255.0
test_images = test_images.reshape(10000, 28 * 28).astype('float32') / 255.0
```
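The split-and-flatten step can be checked on a small scale without downloading MNIST. This is a minimal sketch that assumes synthetic random data (600 fake 28x28 `uint8` images, not real MNIST) and uses `reshape(-1, 28 * 28)` so the row count does not have to be hard-coded:

```python
import numpy as np

# Hypothetical stand-in for MNIST: 600 random 28x28 uint8 "images" with labels 0..9.
# The real arrays come from mnist.load_data() (60,000 training images).
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(600, 28, 28)).astype(np.uint8)
labels = rng.integers(0, 10, size=600)

# Same slicing pattern as the post (which splits 50,000 / 10,000)
split = 500
x_train, x_val = images[:split], images[split:]
y_train, y_val = labels[:split], labels[split:]

# -1 lets NumPy infer the number of rows; 28 * 28 = 784 features per image
x_train = x_train.reshape(-1, 28 * 28).astype('float32') / 255.0
x_val = x_val.reshape(-1, 28 * 28).astype('float32') / 255.0

print(x_train.shape, x_val.shape)  # (500, 784) (100, 784)
```

Writing `reshape(-1, 28 * 28)` in the post's code would behave the same as the explicit `50000` and `10000`, but would keep working if the split point changes.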

```python
import numpy as np
from keras.utils import to_categorical

# One-hot encode the integer labels 0..9 into vectors of length 10
Y_TRAIN = to_categorical(Y_TRAIN)
Y_VAL = to_categorical(Y_VAL)
test_labels = to_categorical(test_labels)
```
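For integer labels, one-hot encoding amounts to picking rows of an identity matrix. A minimal NumPy sketch of that idea; `one_hot` is a hypothetical helper for illustration, not part of Keras:

```python
import numpy as np

def one_hot(labels, num_classes=10):
    # Row i of the identity matrix is the one-hot vector for class i
    labels = np.asarray(labels, dtype=int)
    return np.eye(num_classes, dtype='float32')[labels]

y = one_hot([5, 0, 4])
print(y.shape)        # (3, 10)
print(y[0].argmax())  # 5
```

Taking `argmax(axis=1)` of the encoded array recovers the original integer labels, which is how predictions are compared with one-hot targets later on.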

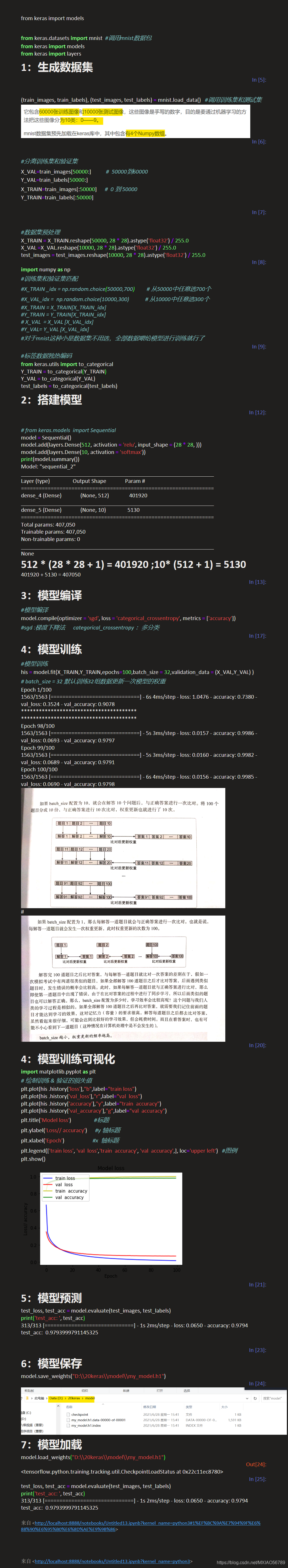

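```python
from keras.models import Sequential

model = Sequential()
# One hidden layer of 512 ReLU units, then a 10-way softmax output
model.add(layers.Dense(512, activation='relu', input_shape=(28 * 28,)))
model.add(layers.Dense(10, activation='softmax'))

# summary() prints the table itself and returns None, so wrapping it in print() only adds "None"
model.summary()
```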
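The parameter counts in the summary follow from the layer sizes: a `Dense` layer with `n_in` inputs and `n_out` units has an `n_in x n_out` weight matrix plus `n_out` biases. A short sketch of that arithmetic (`dense_params` is a hypothetical helper):

```python
def dense_params(n_in, n_out):
    # weight matrix (n_in x n_out) plus one bias per output unit
    return n_in * n_out + n_out

hidden = dense_params(28 * 28, 512)  # 784 * 512 + 512
output = dense_params(512, 10)       # 512 * 10 + 10
print(hidden, output, hidden + output)  # 401920 5130 407050
```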

```python
model.compile(optimizer='sgd', loss='categorical_crossentropy', metrics=['accuracy'])

# Train for 100 epochs, measuring loss and accuracy on the validation set after each epoch
his = model.fit(X_TRAIN, Y_TRAIN, epochs=100, batch_size=32,
                validation_data=(X_VAL, Y_VAL))
```

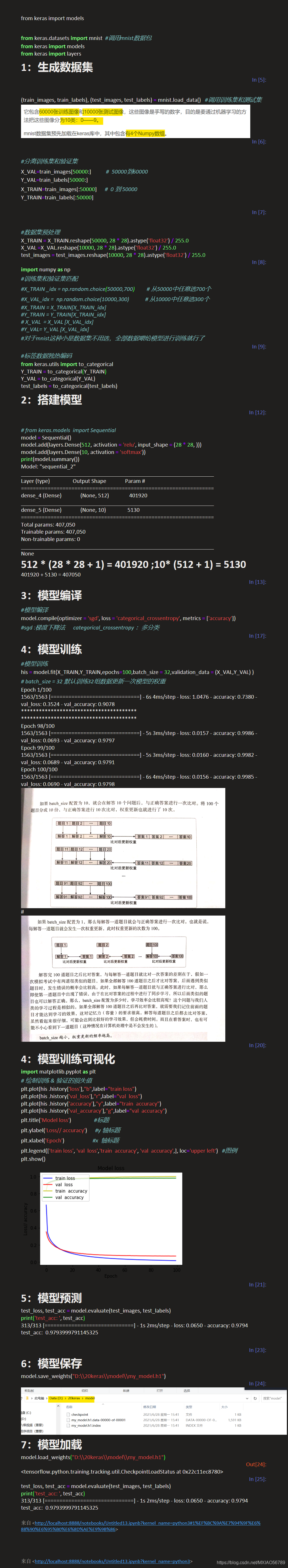
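The `loss='categorical_crossentropy'` setting compares the softmax output with the one-hot labels: for each sample it is `-sum_k y_true[k] * log(y_pred[k])`, averaged over the batch. A minimal NumPy sketch of softmax and this loss; it is an illustration, not Keras's own implementation, and the clipping value `eps=1e-7` is an assumption chosen to avoid `log(0)`:

```python
import numpy as np

def softmax(z):
    # Subtracting the row max improves numerical stability without changing the result
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Mean over samples of -sum_k y_true[k] * log(y_pred[k])
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))

logits = np.array([[2.0, 1.0, 0.1]])
probs = softmax(logits)
y_true = np.array([[1.0, 0.0, 0.0]])
loss = categorical_crossentropy(y_true, probs)
print(round(loss, 3))  # 0.417
```

With a one-hot target, only the log-probability of the true class contributes, so the loss shrinks as the network assigns more probability to the correct digit.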

```python
import matplotlib.pyplot as plt

# Plot the per-epoch curves recorded by fit()
plt.plot(his.history['loss'], "b", label="train loss")
plt.plot(his.history['val_loss'], "r", label="val loss")
plt.plot(his.history['accuracy'], "y", label="train accuracy")
plt.plot(his.history['val_accuracy'], "g", label="val accuracy")
plt.title('Model loss and accuracy')
plt.ylabel('Loss / accuracy')
plt.xlabel('Epoch')
# The labels were already set in plot(), so legend() only needs the position
plt.legend(loc='upper left')
plt.show()
```
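`his.history` is a dict that maps each metric name (`loss`, `val_loss`, `accuracy`, `val_accuracy`) to a list with one value per epoch, which is exactly what the plot draws. A minimal sketch for reading off the best epoch from it; `best_epoch` is a hypothetical helper:

```python
def best_epoch(history, key='val_loss', mode='min'):
    """Return (1-based epoch, value) where history[key] is lowest (mode='min') or highest (mode='max')."""
    values = history[key]
    pick = min if mode == 'min' else max
    idx = pick(range(len(values)), key=lambda i: values[i])
    return idx + 1, values[idx]
```

For example, `best_epoch(his.history)` gives the epoch with the lowest validation loss, and `best_epoch(his.history, key='val_accuracy', mode='max')` the one with the highest validation accuracy.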

```python
test_loss, test_acc = model.evaluate(test_images, test_labels)
print('test_acc: ', test_acc)
```
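The accuracy reported for this softmax/one-hot setup counts a sample as correct when the most probable predicted class matches the true class. A minimal sketch of that computation on toy arrays (hypothetical inputs, not outputs of the model above); `accuracy_from_probs` is a hypothetical helper:

```python
import numpy as np

def accuracy_from_probs(probs, one_hot_labels):
    # Predicted class = index of the largest probability; true class = index of the 1
    return float(np.mean(probs.argmax(axis=1) == one_hot_labels.argmax(axis=1)))

probs = np.array([[0.1, 0.7, 0.2],
                  [0.6, 0.3, 0.1],
                  [0.2, 0.2, 0.6]])
labels = np.array([[0, 1, 0],
                   [0, 0, 1],
                   [0, 0, 1]])
print(accuracy_from_probs(probs, labels))  # 0.6666666666666666
```

Applied to the real model, `accuracy_from_probs(model.predict(test_images), test_labels)` should agree closely with the `test_acc` printed by `evaluate`.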

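```python
# Save the trained weights, reload them, and confirm the test accuracy is unchanged.
# Keras 3 requires weight files to end in ".weights.h5"; older tf.keras also accepts this name.
model.save_weights("D:\\20keras\\model\\my_model.weights.h5")
model.load_weights("D:\\20keras\\model\\my_model.weights.h5")

test_loss, test_acc = model.evaluate(test_images, test_labels)
print('test_acc: ', test_acc)
```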
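As an alternative to `save_weights` (a swapped-in technique, not what this post uses), `model.get_weights()` returns the weights as a list of NumPy arrays and `model.set_weights()` accepts such a list, so they can also be round-tripped through a NumPy `.npz` file. A minimal sketch; `save_arrays` and `load_arrays` are hypothetical helpers, and the dummy arrays only mimic the shapes of the first `Dense` layer:

```python
import os
import tempfile
import numpy as np

def save_arrays(path, arrays):
    # Positional arrays are stored as arr_0, arr_1, ... so their order can be restored
    np.savez(path, *arrays)

def load_arrays(path):
    with np.load(path) as data:
        return [data[f'arr_{i}'] for i in range(len(data.files))]

# Dummy weights shaped like the 784 -> 512 Dense layer (kernel, bias)
weights = [np.ones((784, 512), dtype='float32'), np.zeros(512, dtype='float32')]
path = os.path.join(tempfile.mkdtemp(), 'weights.npz')
save_arrays(path, weights)
restored = load_arrays(path)
print(all(np.array_equal(a, b) for a, b in zip(weights, restored)))  # True
```

With the real model, the same pattern would be `save_arrays(path, model.get_weights())` followed by `model.set_weights(load_arrays(path))`.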
