Key points

- ResNet was proposed by Microsoft Research in 2015 and performs remarkably well.

1 The ResNet Model

1.1 Introduction

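ResNet was proposed by Microsoft Research in 2015. That year it won first place in both the classification and the object detection tasks of the ImageNet competition, and first place in both object detection and image segmentation on the COCO dataset. (Enough said: it is simply outstanding.)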

Highlights of the network:

- An ultra-deep architecture (more than 1,000 layers)

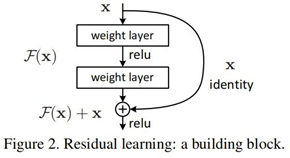

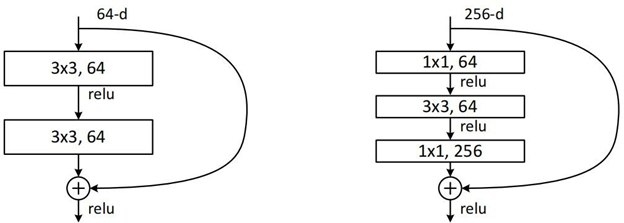

- Introduces the residual block

- Uses Batch Normalization to speed up training (and drops dropout)

Standard blocks:

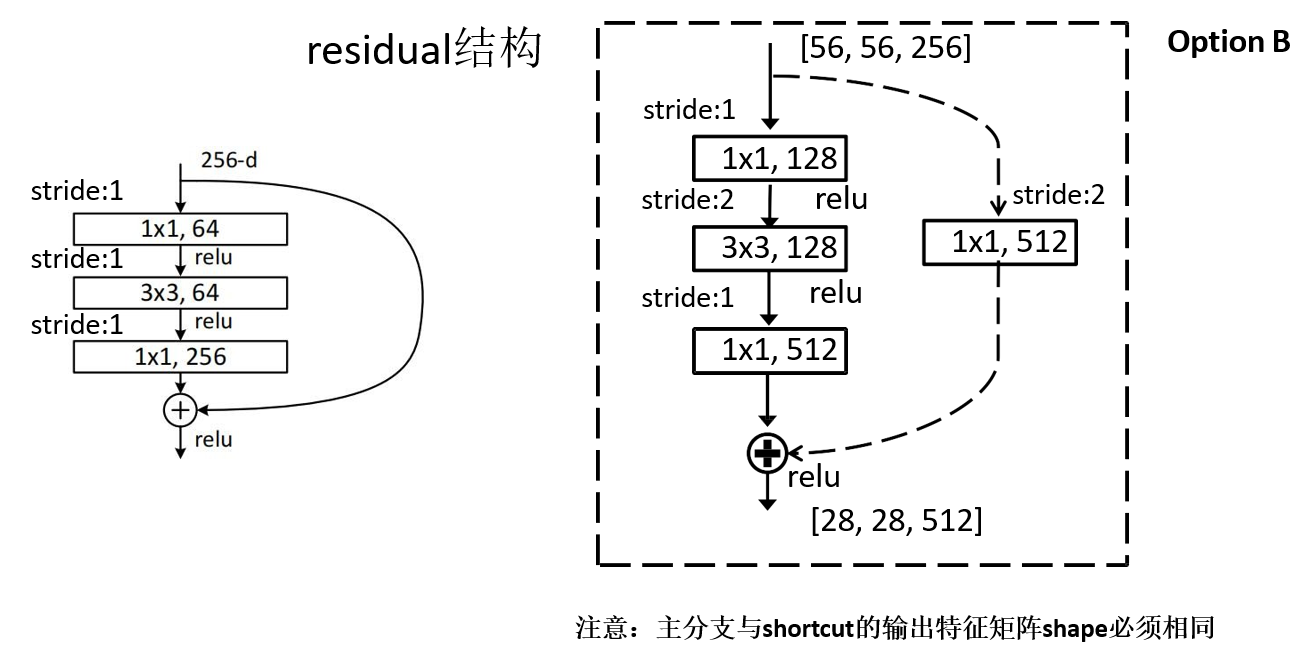

1.2 The residual structure

- The 1x1 convolutions are used to reduce the channel dimension and then restore (expand) it.

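A quick weight count shows why the 1x1 convolutions pay off. The sketch below assumes a block with 256 input and output channels and compares the bottleneck layout from the ResNet paper (1x1 down to 64, 3x3 at 64, 1x1 back up to 256) with two plain 3x3 convolutions kept at 256 channels, chosen here only as an illustrative baseline. Biases are ignored, as in the code in section 2 (`use_bias=False`); the helper `conv_params` is hypothetical.

```python
def conv_params(in_ch: int, out_ch: int, k: int) -> int:
    """Number of weights in a k x k convolution without bias."""
    return in_ch * out_ch * k * k


# Bottleneck: 1x1 reduce (256 -> 64), 3x3 at 64 channels, 1x1 expand (64 -> 256)
bottleneck = conv_params(256, 64, 1) + conv_params(64, 64, 3) + conv_params(64, 256, 1)

# Illustrative baseline: two plain 3x3 convolutions that stay at 256 channels
plain = 2 * conv_params(256, 256, 3)

print(bottleneck, plain, round(plain / bottleneck, 1))  # 69632 1179648 16.9
```

The bottleneck needs roughly 17x fewer weights for the same input and output width, which is largely why the deeper variants (ResNet-50 and up) use it.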

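Note: the output feature maps of the main branch and the shortcut must have exactly the same shape (height, width and channels), because the two are added element-wise.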
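To make the shape rule concrete, here is a NumPy sketch (not the Keras code from section 2) in which the main-branch output is a random stand-in for F(x) after a stride-2, 128-channel stage. An identity shortcut fails the shape check, while a 1x1 projection with stride 2, the same idea as the `downsample` branch built in `_make_layer`, fixes both the spatial size and the channel count. The names `conv1x1` and `residual_add` are hypothetical.

```python
import numpy as np


def conv1x1(x: np.ndarray, w: np.ndarray, stride: int = 1) -> np.ndarray:
    """1x1 convolution on an (H, W, C_in) map: sample every `stride` pixels, then mix channels."""
    return x[::stride, ::stride, :] @ w  # w has shape (C_in, C_out)


def residual_add(main: np.ndarray, shortcut: np.ndarray) -> np.ndarray:
    """ReLU(F(x) + shortcut); both branches must have identical shapes."""
    if main.shape != shortcut.shape:
        raise ValueError(f"shape mismatch: main {main.shape} vs shortcut {shortcut.shape}")
    return np.maximum(main + shortcut, 0.0)


rng = np.random.default_rng(0)
x = rng.standard_normal((56, 56, 64))      # input feature map (H, W, C)
main = rng.standard_normal((28, 28, 128))  # stand-in for F(x) after a stride-2 branch

# Identity shortcut: (56, 56, 64) cannot be added to (28, 28, 128)
try:
    residual_add(main, x)
except ValueError as err:
    print(err)

# Projection shortcut: 1x1 conv with stride 2, 64 -> 128 channels
w_proj = 0.1 * rng.standard_normal((64, 128))
y = residual_add(main, conv1x1(x, w_proj, stride=2))
print(y.shape)  # (28, 28, 128)
```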

1.3 Batch Normalization explained

- For a detailed walkthrough, see the article "Batch Normalization详解" (Batch Normalization Explained in Detail).
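As a rough picture of what the BN layers in the model compute, the NumPy sketch below normalizes each channel with batch statistics during training and with moving averages at inference. It assumes the same `momentum=0.9` and `epsilon=1e-5` used in the Keras code and an update rule of the form `moving = momentum * moving + (1 - momentum) * batch_stat`; the `BatchNorm` class is a hypothetical illustration, not the Keras implementation.

```python
import numpy as np


class BatchNorm:
    """Minimal batch normalization over the last (channel) axis."""

    def __init__(self, channels: int, momentum: float = 0.9, epsilon: float = 1e-5):
        self.gamma = np.ones(channels)         # learnable scale
        self.beta = np.zeros(channels)         # learnable shift
        self.moving_mean = np.zeros(channels)  # running statistics for inference
        self.moving_var = np.ones(channels)
        self.momentum = momentum
        self.epsilon = epsilon

    def __call__(self, x: np.ndarray, training: bool) -> np.ndarray:
        axes = tuple(range(x.ndim - 1))  # every axis except channels, e.g. (N, H, W)
        if training:
            mean, var = x.mean(axis=axes), x.var(axis=axes)
            # Exponential moving averages, used later when training=False
            self.moving_mean = self.momentum * self.moving_mean + (1 - self.momentum) * mean
            self.moving_var = self.momentum * self.moving_var + (1 - self.momentum) * var
        else:
            mean, var = self.moving_mean, self.moving_var
        x_hat = (x - mean) / np.sqrt(var + self.epsilon)
        return self.gamma * x_hat + self.beta


rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(32, 8, 8, 16))  # (N, H, W, C)
bn = BatchNorm(16)
y = bn(x, training=True)            # per-channel mean ~0, std ~1
y_inf = bn(x, training=False)       # uses the (still warming up) moving averages
print(round(float(y.mean()), 3), round(float(y.std()), 3))
```

Because `beta` already adds a per-channel offset after normalization, a bias in the preceding convolution would be redundant, which is why the convolutions feeding BN use `use_bias=False`.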

1.4 Diagram

2 Code Implementation

2.1 Imports

```python
import tensorflow as tf
from tensorflow import keras
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Run on the CPU only: make just the CPU devices visible to TensorFlow
cpu = tf.config.list_physical_devices("CPU")
tf.config.set_visible_devices(cpu)
print(tf.config.list_logical_devices())
```

2.2 Building the ResNet model

```python
class BasicBlock(keras.layers.Layer):
    """Two 3x3 convolutions plus a shortcut (used by ResNet-18/34)."""
    expansion = 1  # output channels = out_channel * expansion

    def __init__(self, out_channel, strides=1, downsample=None, **kwargs):
        super().__init__(**kwargs)
        # First 3x3 conv carries the stride: strides=2 halves the spatial size
        self.conv1 = keras.layers.Conv2D(out_channel,
                                         kernel_size=3,
                                         strides=strides,
                                         padding='same',
                                         use_bias=False)
        self.bn1 = keras.layers.BatchNormalization(momentum=0.9, epsilon=1e-5)
        self.conv2 = keras.layers.Conv2D(out_channel,
                                         kernel_size=3,
                                         strides=1,
                                         padding='same',
                                         use_bias=False)
        self.bn2 = keras.layers.BatchNormalization(momentum=0.9, epsilon=1e-5)
        self.relu = keras.layers.ReLU()
        self.add = keras.layers.Add()
        # Optional projection shortcut (1x1 conv + BN) used when shapes differ
        self.downsample = downsample

    def call(self, inputs, training=None):
        identity = inputs
        if self.downsample is not None:
            identity = self.downsample(inputs, training=training)

        x = self.conv1(inputs)
        x = self.bn1(x, training=training)
        x = self.relu(x)

        x = self.conv2(x)
        x = self.bn2(x, training=training)

        # Residual connection: add the shortcut, then apply ReLU
        x = self.add([identity, x])
        x = self.relu(x)
        return x
```

```python
class Bottleneck(keras.layers.Layer):
    """1x1 -> 3x3 -> 1x1 bottleneck block (used by ResNet-50/101/152)."""
    expansion = 4  # the last 1x1 conv outputs out_channel * 4 channels

    def __init__(self, out_channel, strides=1, downsample=None, **kwargs):
        super().__init__(**kwargs)
        # 1x1 conv: reduce the channel dimension
        self.conv1 = keras.layers.Conv2D(out_channel,
                                         kernel_size=1,
                                         strides=1,
                                         padding='same',
                                         use_bias=False)  # no bias: the BN that follows adds its own offset
        self.bn1 = keras.layers.BatchNormalization(momentum=0.9, epsilon=1e-5)
        # 3x3 conv: the only strided conv, so the main branch downsamples by
        # exactly `strides`, matching the stride of the shortcut
        self.conv2 = keras.layers.Conv2D(out_channel,
                                         kernel_size=3,
                                         strides=strides,
                                         padding='same',
                                         use_bias=False)
        self.bn2 = keras.layers.BatchNormalization(momentum=0.9, epsilon=1e-5)
        # 1x1 conv: restore (expand) the channel dimension
        self.conv3 = keras.layers.Conv2D(out_channel * self.expansion,
                                         kernel_size=1,
                                         strides=1,
                                         padding='same',
                                         use_bias=False)
        self.bn3 = keras.layers.BatchNormalization(momentum=0.9, epsilon=1e-5)
        self.relu = keras.layers.ReLU()
        self.add = keras.layers.Add()
        self.downsample = downsample

    def call(self, inputs, training=None):
        identity = inputs
        if self.downsample is not None:
            identity = self.downsample(inputs, training=training)

        x = self.conv1(inputs)
        x = self.bn1(x, training=training)
        x = self.relu(x)

        x = self.conv2(x)
        x = self.bn2(x, training=training)
        x = self.relu(x)

        x = self.conv3(x)
        x = self.bn3(x, training=training)

        # Residual connection, then the final ReLU
        x = self.add([identity, x])
        x = self.relu(x)
        return x
```

```python
def _make_layer(block, in_channel, channel, block_num, name, strides=1):
    """Stack `block_num` residual blocks into one stage."""
    downsample = None
    # Projection shortcut (the "dashed line" in the diagram): needed when the
    # spatial size or the channel count changes
    if strides != 1 or in_channel != channel * block.expansion:
        downsample = keras.Sequential([
            keras.layers.Conv2D(channel * block.expansion,
                                kernel_size=1,
                                strides=strides,
                                use_bias=False,
                                name='conv1'),
            keras.layers.BatchNormalization(momentum=0.9,
                                            epsilon=1e-5,
                                            name='BatchNorm')
        ], name='shortcut')

    layers_list = []
    # Only the first block of a stage can change the shape
    layers_list.append(block(channel,
                             downsample=downsample,
                             strides=strides,
                             name='unit_1'))
    for index in range(1, block_num):
        layers_list.append(block(channel, name='unit_' + str(index + 1)))

    return keras.Sequential(layers_list, name=name)
```
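The `if` condition in `_make_layer` decides where the dashed projection shortcut appears. The pure-Python sketch below replays that condition for the four stages, assuming 64 channels leave the stem as in `_resnet`; `needs_projection` and `stage_shortcuts` are hypothetical helpers.

```python
def needs_projection(in_channel: int, channel: int, expansion: int, strides: int) -> bool:
    """Same test as in _make_layer: does the first block need a 1x1 projection?"""
    return strides != 1 or in_channel != channel * expansion


def stage_shortcuts(expansion: int, stem_channels: int = 64):
    """Replay the four _make_layer calls and report which stages get a projection."""
    in_channel = stem_channels
    result = []
    for name, channel, strides in [("block1", 64, 1), ("block2", 128, 2),
                                   ("block3", 256, 2), ("block4", 512, 2)]:
        result.append((name, needs_projection(in_channel, channel, expansion, strides)))
        in_channel = channel * expansion  # channels seen by the next stage
    return result


print(stage_shortcuts(expansion=1))  # BasicBlock (ResNet-34)
# [('block1', False), ('block2', True), ('block3', True), ('block4', True)]
print(stage_shortcuts(expansion=4))  # Bottleneck (ResNet-50)
# [('block1', True), ('block2', True), ('block3', True), ('block4', True)]
```

With `BasicBlock` the first stage keeps 64 channels at stride 1, so it needs only identity shortcuts; with `Bottleneck` even the first stage needs a projection, because its output has 64 x 4 = 256 channels.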

```python
def _resnet(block, blocks_num, im_width=224, im_height=224,
            num_classes=1000, include_top=True):
    input_image = keras.layers.Input(shape=(im_height, im_width, 3), dtype='float32')

    # Stem: 7x7/2 conv -> BN -> ReLU -> 3x3/2 max-pool (224 -> 112 -> 56)
    x = keras.layers.Conv2D(64, kernel_size=7,
                            strides=2,
                            padding='same',
                            name='conv1',
                            use_bias=False)(input_image)
    x = keras.layers.BatchNormalization(momentum=0.9, epsilon=1e-5)(x)
    x = keras.layers.ReLU()(x)
    x = keras.layers.MaxPool2D(pool_size=3, strides=2, padding='same')(x)

    # Four residual stages; stages 2-4 halve the spatial size
    x = _make_layer(block, x.shape[-1], 64, blocks_num[0], name='block1')(x)
    x = _make_layer(block, x.shape[-1], 128, blocks_num[1], name='block2', strides=2)(x)
    x = _make_layer(block, x.shape[-1], 256, blocks_num[2], name='block3', strides=2)(x)
    x = _make_layer(block, x.shape[-1], 512, blocks_num[3], name='block4', strides=2)(x)

    if include_top:
        x = keras.layers.GlobalAvgPool2D()(x)  # global average pooling, also flattens
        x = keras.layers.Dense(num_classes)(x)
        predict = keras.layers.Softmax()(x)
    else:
        predict = x

    model = keras.models.Model(inputs=input_image, outputs=predict)
    return model
```
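Two quick checks on the network that `_resnet` builds: the depth implied by the stage layout `[3, 4, 6, 3]` that `resnet34` and `resnet50` pass in, and the feature-map size after each stage for a 224x224 input. The sketch assumes the usual convention of counting only the stem convolution, the main-branch convolutions and the final Dense layer (projection shortcuts are not counted), and that 'same' padding with stride s maps a size n to ceil(n / s). The helper names are hypothetical.

```python
import math


def resnet_depth(blocks_num, convs_per_block: int) -> int:
    """Stem conv + main-branch convs in every block + final Dense layer."""
    return 1 + convs_per_block * sum(blocks_num) + 1


def feature_sizes(size: int = 224):
    """Side length of the feature map after the stem and after each stage."""
    sizes = []
    for name, stride in [("conv1", 2), ("maxpool", 2), ("block1", 1),
                         ("block2", 2), ("block3", 2), ("block4", 2)]:
        size = math.ceil(size / stride)  # 'same' padding rule
        sizes.append((name, size))
    return sizes


print(resnet_depth([3, 4, 6, 3], 2))  # BasicBlock: 34
print(resnet_depth([3, 4, 6, 3], 3))  # Bottleneck: 50
print(feature_sizes(224))
# [('conv1', 112), ('maxpool', 56), ('block1', 56), ('block2', 28), ('block3', 14), ('block4', 7)]
```

So the last stage outputs a 7x7 map with 512 channels (ResNet-34) or 2048 channels (ResNet-50), which `GlobalAvgPool2D` reduces to a single vector before the Dense layer.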

```python
# Concrete models
def resnet34(im_width=224, im_height=224, num_classes=1000, include_top=True):
    # 3, 4, 6 and 3 BasicBlocks in the four stages
    return _resnet(BasicBlock,
                   [3, 4, 6, 3],
                   im_width,
                   im_height,
                   num_classes,
                   include_top=include_top)


def resnet50(im_width=224, im_height=224, num_classes=1000, include_top=True):
    # Same stage layout, but built from Bottleneck blocks
    return _resnet(Bottleneck,
                   [3, 4, 6, 3],
                   im_width,
                   im_height,
                   num_classes,
                   include_top=include_top)
```

2.3 Loading the data and training

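```python
train_dir = './training/training/'
valid_dir = './validation/validation/'

# Image data generators
# (recent TensorFlow docs mark ImageDataGenerator as deprecated)
# Training generator: rescale pixels to [0, 1] and apply random augmentation
train_datagen = keras.preprocessing.image.ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,        # random rotation, in degrees
    width_shift_range=0.2,    # random horizontal shift (fraction of width)
    height_shift_range=0.2,   # random vertical shift (fraction of height)
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    vertical_flip=True,
    fill_mode='nearest'       # how to fill pixels exposed by the transforms
)

height = 224
width = 224
channels = 3
batch_size = 32
num_classes = 10

# Read images from one sub-folder per class; labels are one-hot encoded
train_generator = train_datagen.flow_from_directory(train_dir,
                                                    target_size=(height, width),
                                                    batch_size=batch_size,
                                                    shuffle=True,
                                                    seed=7,
                                                    class_mode='categorical')

# Validation generator: rescaling only, no augmentation
valid_datagen = keras.preprocessing.image.ImageDataGenerator(rescale=1./255)
valid_generator = valid_datagen.flow_from_directory(valid_dir,
                                                    target_size=(height, width),
                                                    batch_size=batch_size,
                                                    shuffle=True,
                                                    seed=7,
                                                    class_mode='categorical')
```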
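With `class_mode='categorical'` the generators yield one-hot label rows, which is what the `categorical_crossentropy` loss expects alongside the model's softmax output. The NumPy sketch below, with hypothetical helper names, shows both pieces and a handy sanity check: a 10-class model that predicts every class with equal probability has a loss of ln(10), about 2.30, so the first training losses should be in that neighbourhood.

```python
import numpy as np


def one_hot(labels: np.ndarray, num_classes: int) -> np.ndarray:
    """Integer class ids -> one-hot rows."""
    return np.eye(num_classes)[labels]


def softmax(logits: np.ndarray) -> np.ndarray:
    z = logits - logits.max(axis=1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def categorical_crossentropy(y_true: np.ndarray, y_prob: np.ndarray, eps: float = 1e-7) -> float:
    """Batch mean of -sum(y_true * log(p))."""
    return float(np.mean(-np.sum(y_true * np.log(np.clip(y_prob, eps, 1.0)), axis=1)))


labels = np.array([0, 3, 9])
y_true = one_hot(labels, 10)
uniform = softmax(np.zeros((3, 10)))  # every class gets probability 0.1
loss = categorical_crossentropy(y_true, uniform)
print(y_true.shape, round(loss, 4))  # (3, 10) 2.3026
```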

```python
print(train_generator.samples)
print(valid_generator.samples)

model34 = resnet34(num_classes=num_classes)
model34.compile(optimizer='adam',
                loss='categorical_crossentropy',
                metrics=['acc'])

history = model34.fit(train_generator,
                      steps_per_epoch=train_generator.samples // batch_size,
                      epochs=10,
                      validation_data=valid_generator,
                      validation_steps=valid_generator.samples // batch_size)
```

Output:

```
2094
2094
```
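For the 2094 training images reported above and `batch_size = 32`, the `steps_per_epoch` arithmetic works out as follows. `samples // batch_size` counts only full batches, while rounding up would also include the final partial batch. The function `steps_per_epoch` here is a hypothetical illustration, not a Keras API.

```python
import math


def steps_per_epoch(num_samples: int, batch_size: int, drop_last: bool = True) -> int:
    """Batches per epoch; drop_last=True mirrors `samples // batch_size`."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


samples, batch = 2094, 32
full = steps_per_epoch(samples, batch)                          # 65
with_partial = steps_per_epoch(samples, batch, drop_last=False)  # 66
leftover = samples - full * batch                                # 14
print(full, with_partial, leftover)  # 65 66 14
```

So each epoch runs 65 full batches (2080 images), and the remaining 14 images do not fill a batch of 32.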
