This post gives a systematic introduction to the link prediction task in graph learning.

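1. Problem definition

An early paper that established the importance of the link prediction task: (2003 CIKM) The Link-Prediction Problem for Social Networks[1]

2. Methods

2.1 Link prediction based on structural similarity in the graph

The assumption behind these methods (structurally similar nodes are more likely to be linked) may hold in social networks but may not hold in PPI networks, where proteins that share many common neighbors are less likely to be connected (interact).[2]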

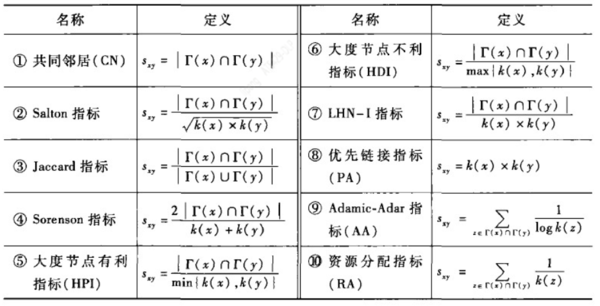

2.1.1 Similarity indices based on local information

- Common neighbors

- Indices obtained by normalizing the common-neighbors index in different ways, where $k(x) = |\Gamma(x)|$ is the degree of node $x$
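The local indices above can be sketched in a few lines. This is a minimal illustration, not code from any of the cited papers: the toy edge list and the function names are made up for the example, and Jaccard and Salton are picked here only as two common normalizations of the common-neighbors count.

```python
import math


def neighbors(edges):
    """Build the neighbor set Γ(x) of every node in an undirected edge list."""
    gamma = {}
    for u, v in edges:
        gamma.setdefault(u, set()).add(v)
        gamma.setdefault(v, set()).add(u)
    return gamma


def common_neighbors(gamma, x, y):
    """|Γ(x) ∩ Γ(y)|"""
    return len(gamma[x] & gamma[y])


def jaccard(gamma, x, y):
    """Common neighbors normalized by |Γ(x) ∪ Γ(y)|."""
    union = gamma[x] | gamma[y]
    return len(gamma[x] & gamma[y]) / len(union) if union else 0.0


def salton(gamma, x, y):
    """Common neighbors normalized by sqrt(k(x) * k(y)), with k(x) = |Γ(x)|."""
    kx, ky = len(gamma[x]), len(gamma[y])
    return common_neighbors(gamma, x, y) / math.sqrt(kx * ky) if kx and ky else 0.0


# Hypothetical toy graph; (a, d) is an unobserved pair to be scored.
edges = [("a", "b"), ("a", "c"), ("b", "c"), ("b", "d"), ("c", "d"), ("d", "e")]
gamma = neighbors(edges)
cn_ad = common_neighbors(gamma, "a", "d")
jac_ad = jaccard(gamma, "a", "d")
sal_ad = salton(gamma, "a", "d")
```

Candidate pairs are then ranked by the chosen index, and the highest-scoring pairs are predicted as links.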

Related early work:

- (2003 CIKM) The Link-Prediction Problem for Social Networks

- (2009 The European Physical Journal B) Predicting missing links via local information

- (2016 ICDE) Link prediction in graph streams

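2.1.2 Path-based similarity indices

2.1.3 Node similarity indices based on random walks

2.2 Link prediction based on likelihood analysis

2.3 Link prediction based on machine learning

2.4 Link prediction via node representations and their similarity: different similarity measures

The general paradigm is to first learn node representations (supervised or unsupervised, in a decoupled or coupled fashion), then apply a similarity measure to the two endpoint representations (usually the dot product or cosine similarity), and use the resulting value as the score for the binary classification task of whether an edge exists.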
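A minimal sketch of the scoring step in this paradigm, assuming the embeddings have already been learned. The embedding values, the `edge_scores` helper, and passing the dot product through a sigmoid are illustrative assumptions, not taken from any specific model discussed here.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity; defined as 0.0 when either vector is all zeros."""
    norm = math.sqrt(dot(u, u)) * math.sqrt(dot(v, v))
    return dot(u, v) / norm if norm > 0 else 0.0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def edge_scores(emb, pairs, measure="dot"):
    """Score candidate edges; a higher score means the edge is more likely."""
    if measure == "dot":
        # Squash the dot product into (0, 1) so it reads as an edge probability.
        return [sigmoid(dot(emb[u], emb[v])) for u, v in pairs]
    if measure == "cos":
        return [cosine(emb[u], emb[v]) for u, v in pairs]
    raise ValueError(f"unknown measure: {measure}")


# Hypothetical 2-d embeddings for three nodes.
emb = {0: [1.0, 0.0], 1: [0.8, 0.6], 2: [-1.0, 0.0]}
pairs = [(0, 1), (0, 2)]
dot_scores = edge_scores(emb, pairs, "dot")
cos_scores = edge_scores(emb, pairs, "cos")
```

The scores are compared against held-out positive and sampled negative edges with a ranking metric such as AUC, or thresholded to obtain a binary decision.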

2.4.1 General-purpose node representation work

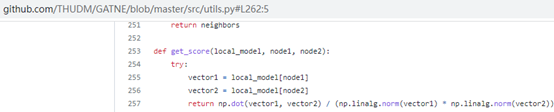

Work that uses cosine similarity:

- GATNE[3]

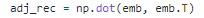

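Work that uses the inner product:

- VGAE[4]

(The score of each selected edge is additionally passed through a sigmoid; this step is omitted in this post.)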

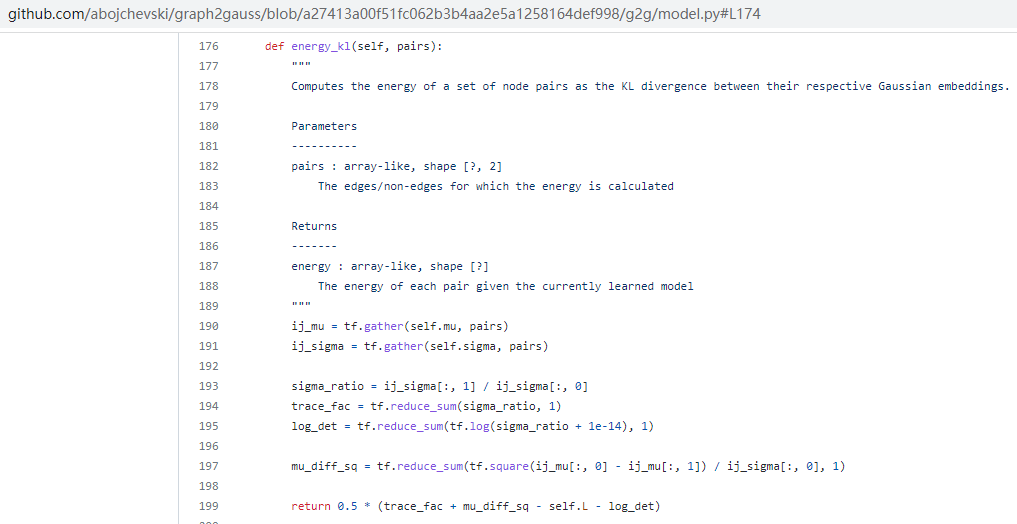

G2G[5] uses KL divergence (it is an unsupervised node representation model whose loss function is built on KL divergence in the first place).
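For intuition, here is a sketch of the closed-form KL divergence between two Gaussians with diagonal covariance, which is the kind of quantity a Gaussian-embedding model can use as a dissimilarity between nodes. The function name and the toy values are assumptions made for illustration; the exact direction of the divergence and the parameterization used by G2G should be checked against the paper.

```python
import math


def kl_diag_gaussians(mu_p, var_p, mu_q, var_q):
    """KL( N(mu_p, diag(var_p)) || N(mu_q, diag(var_q)) ) in closed form."""
    total = 0.0
    for mp, vp, mq, vq in zip(mu_p, var_p, mu_q, var_q):
        # Per-dimension terms: trace ratio, scaled mean gap, -1, log-variance ratio.
        total += vp / vq + (mq - mp) ** 2 / vq - 1.0 + math.log(vq / vp)
    return 0.5 * total


# Hypothetical 1-d node embeddings given as (mean, variance).
same = kl_diag_gaussians([0.0], [1.0], [0.0], [1.0])
shifted = kl_diag_gaussians([0.0], [1.0], [1.0], [1.0])
narrow_to_wide = kl_diag_gaussians([0.0], [1.0], [0.0], [4.0])
wide_to_narrow = kl_diag_gaussians([0.0], [4.0], [0.0], [1.0])
```

Unlike the dot product or cosine similarity, KL divergence is asymmetric, so the score of the pair (i, j) generally differs from that of (j, i).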

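2.4.2 Link prediction work

DEAL[6] tested several measures (dot product, cosine similarity, Euclidean distance), and the paper reports that cosine similarity works best. The following code is excerpted from https://github.com/working-yuhao/DEAL/blob/master/model.py: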

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Hidden_Layer(nn.Module):  # Hidden layer, binary classification
    def __init__(self, emb_dim, device, BCE_mode, mode='all', dropout_p=0.3):
        super(Hidden_Layer, self).__init__()
        self.emb_dim = emb_dim
        self.mode = mode
        self.device = device
        self.BCE_mode = BCE_mode
        # Small MLP over the concatenated pair embedding (used in 'all' mode).
        self.Linear1 = nn.Linear(self.emb_dim * 2, self.emb_dim).to(self.device)
        self.Linear2 = nn.Linear(self.emb_dim, 32).to(self.device)
        x_dim = 1
        self.Linear3 = nn.Linear(32, x_dim).to(self.device)
        if self.mode == 'all':
            # Input: MLP output plus the three similarity values.
            if self.BCE_mode:
                self.linear_output = nn.Linear(x_dim + 3, 1).to(self.device)
            else:
                self.linear_output = nn.Linear(x_dim + 3, 2).to(self.device)
        else:
            # Map a single similarity value to two class logits (-x, +x).
            self.linear_output = nn.Linear(1, 2).to(self.device)
            self.linear_output.weight.data[1, :] = 1
            self.linear_output.weight.data[0, :] = -1
        self.cos = nn.CosineSimilarity(dim=1, eps=1e-6)
        self.pdist = nn.PairwiseDistance(p=2, keepdim=True)
        self.softmax = nn.Softmax(dim=1)
        self.elu = nn.ELU()
        assert (self.mode in ['all', 'cos', 'dot', 'pdist']), "Wrong mode type"

    def forward(self, f_embs, s_embs):
        if self.mode == 'all':
            x = torch.cat([f_embs, s_embs], dim=1)
            x = F.rrelu(self.Linear1(x))
            x = F.rrelu(self.Linear2(x))
            x = F.rrelu(self.Linear3(x))
            cos_x = self.cos(f_embs, s_embs).unsqueeze(1)
            dot_x = torch.mul(f_embs, s_embs).sum(dim=1, keepdim=True)
            pdist_x = self.pdist(f_embs, s_embs)
            x = torch.cat([x, cos_x, dot_x, pdist_x], dim=1)
        elif self.mode == 'cos':
            x = self.cos(f_embs, s_embs).unsqueeze(1)
        elif self.mode == 'dot':
            x = torch.mul(f_embs, s_embs).sum(dim=1, keepdim=True)
        elif self.mode == 'pdist':
            x = self.pdist(f_embs, s_embs)
        if self.BCE_mode:
            return x.squeeze()
            # return (x/x.max()).squeeze()
        else:
            x = self.linear_output(x)
            x = F.rrelu(x)
            # x = torch.cat((x,-x),dim=1)
            return x

    def evaluate(self, f_embs, s_embs):
        if self.mode == 'all':
            x = torch.cat([f_embs, s_embs], dim=1)
            x = F.rrelu(self.Linear1(x))
            x = F.rrelu(self.Linear2(x))
            x = F.rrelu(self.Linear3(x))
            cos_x = self.cos(f_embs, s_embs).unsqueeze(1)
            dot_x = torch.mul(f_embs, s_embs).sum(dim=1, keepdim=True)
            pdist_x = self.pdist(f_embs, s_embs)
            x = torch.cat([x, cos_x, dot_x, pdist_x], dim=1)
        elif self.mode == 'cos':
            x = self.cos(f_embs, s_embs)
        elif self.mode == 'dot':
            x = torch.mul(f_embs, s_embs).sum(dim=1)
        elif self.mode == 'pdist':
            # Negate the distance so that a larger score means more similar.
            x = -self.pdist(f_embs, s_embs).squeeze()
        return x  # the excerpt had a bare `return`; the caller adds up these scores
```

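```python
# In the DEAL model, the three layers (node_layer, attr_layer, inter_layer)
# are each an instance of the Hidden_Layer class above.
# Excerpt: this is a method of the DEAL model class, not a standalone function.
def evaluate(self, nodes, data, lambdas=(1, 1, 1)):
    # Score from the structure-based node embeddings.
    node_emb = self.node_emb(torch.arange(self.node_num).to(self.device))
    first_embs = node_emb[nodes[:, 0]]
    sec_embs = node_emb[nodes[:, 1]]
    res = self.node_layer(first_embs, sec_embs) * lambdas[0]

    # Score from the attribute-based embeddings.
    node_emb = self.attr_emb(data)
    first_embs = node_emb[nodes[:, 0]]
    sec_embs = node_emb[nodes[:, 1]]
    res = res + self.attr_layer(first_embs, sec_embs) * lambdas[1]

    # Cross score: attribute embedding of the first node, node embedding of the second.
    first_nodes = nodes[:, 0]
    first_embs = self.attr_emb(data)[first_nodes]
    sec_embs = self.node_emb(torch.LongTensor(nodes[:, 1]).to(self.device))
    res = res + self.inter_layer(first_embs, sec_embs) * lambdas[2]
    return res
```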

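2.5 Other approaches

- Exploiting subgraph structure

  - WLNM: cuts all subgraphs to the same size and feeds them into a fully connected neural network

  - SEAL[7]: learns heuristics (using subgraphs, shallow embeddings, and features)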
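As a rough illustration of the subgraph idea, the sketch below extracts the h-hop enclosing subgraph around a candidate pair, i.e. the subgraph induced by all nodes within h hops of either endpoint. The adjacency dict, the function names, and the choice to hide the target edge are assumptions for this example; it is not the code of WLNM or SEAL, which additionally label or reorder nodes and feed the result to a neural network.

```python
from collections import deque


def k_hop_nodes(adj, source, h):
    """Return the set of nodes within h hops of `source` (breadth-first search)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        if dist[u] == h:
            continue  # do not expand beyond h hops
        for w in adj.get(u, ()):
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return set(dist)


def enclosing_subgraph(adj, x, y, h=1):
    """Nodes and undirected edges of the h-hop enclosing subgraph around (x, y)."""
    nodes = k_hop_nodes(adj, x, h) | k_hop_nodes(adj, y, h)
    edges = {(u, w) for u in nodes for w in adj.get(u, ()) if w in nodes and u < w}
    # Assumption: hide the target link itself so it cannot leak the label.
    edges.discard((min(x, y), max(x, y)))
    return nodes, edges


# Hypothetical path graph 0-1-2-3-4-5, stored as an adjacency dict.
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}
sub_nodes, sub_edges = enclosing_subgraph(adj, 1, 2, h=1)
```

Each candidate pair gets its own subgraph, so a model trained on such subgraphs classifies links from local structure rather than from a fixed hand-written index.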

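3. Categories and applications of link prediction work

- Using link prediction as the training task for unsupervised graph representation learning (arguably better described as a self-supervised learning task). In the original paper, the baselines include the supervised graph representation learning method HAN, which is trained without supervision by using link prediction as the task: (2022 SDM) Structure-Enhanced Heterogeneous Graph Contrastive Learning

- Models that can be used in the inductive setting

4. Other references not mentioned in the main text or footnotes

- 链路预测 - 集智百科 (the link prediction entry on the 集智百科 wiki): it mainly covers traditional link prediction work; I have not yet finished incorporating the relevant content here

5. To be updated (flag)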

- GIN

- GAT

- (2017 IJCAI) Link Prediction with Spatial and Temporal Consistency in Dynamic Networks

- (2020 AAAI) Temporal Network Embedding with High-Order Nonlinear Information

- (2019 ICDM) Social trust network embedding

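Update, 2024.1.1: I have not worked on link prediction for a very long time, which is why this post has not been updated.

Here are some papers I collected earlier but have not yet read, listed for reference: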

- (2022 CIKM) RelpNet: Relation-based Link Prediction Neural Network

- (2022 ICICICT) Link Prediction in Citation Networks: A Survey

- (2022 TELKOMNIKA) LPCNN: convolutional neural network for link prediction based on network structured features

[1] (2003 CIKM) The Link-Prediction Problem for Social Networks

Notes worth consulting: #Paper Reading# The Link Prediction Problem for Social Networks_John159151的博客-CSDN博客. Judging from these notes, the paper builds a feature-free academic collaboration network and predicts the new edges of the next time step (year) from the previous time step, using classic node similarity measures. (The tables look like they came from a statistics paper.)

[2] (2019 Nature Communications) Network-based prediction of protein interactions

[3] (2019 KDD) Representation Learning for Attributed Multiplex Heterogeneous Network

[4] (2016 NIPS) Variational Graph Auto-Encoders

[5] (2018 ICLR) Deep Gaussian Embedding of Graphs: Unsupervised Inductive Learning via Ranking

My notes: Re37:读论文 G2G Graph2Gauss Deep Gaussian Embedding of Graphs: Unsupervised Inductive Learning via Rank

[6] (2020 IJCAI) Inductive Link Prediction for Nodes Having Only Attribute Information

My notes: Re9:读论文 DEAL Inductive Link Prediction for Nodes Having Only Attribute Information

[7] (2018 NIPS) Link prediction based on graph neural networks

I consulted the following material:

① 论文分享|基于GNN的链路预测 - 知乎: this one is written rather abstractly; not recommended
