Loading the dataset

import torch
from torch import nn
from torch.nn import functional as F
from torch import optim
import torchvision
from matplotlib import pyplot as plt

# plot_image, plot_curve and one_hot are helpers from the author's utils module (not shown in this post)
from utils import plot_image, plot_curve, one_hot

batch_size = 512

# Training set: convert images to tensors, then normalize with mean 0.1307 and std 0.3081
train_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST('mnist data', train=True, download=True,
                               transform=torchvision.transforms.Compose([
                                   torchvision.transforms.ToTensor(),
                                   torchvision.transforms.Normalize(
                                       (0.1307,), (0.3081,)
                                   )
                               ])),
    batch_size=batch_size, shuffle=True
)

# Test set: same preprocessing as the training set
test_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST('mnist data', train=False, download=True,
                               transform=torchvision.transforms.Compose([
                                   torchvision.transforms.ToTensor(),
                                   torchvision.transforms.Normalize(
                                       (0.1307,), (0.3081,)
                                   )
                               ])),
    batch_size=batch_size, shuffle=True
)

# Fetch one batch and inspect its shapes and value range
x, y = next(iter(train_loader))
print(x.shape, y.shape, x.min(), x.max())
plot_image(x, y, 'image sample')

Result

torch.Size([512, 1, 28, 28]) torch.Size([512]) tensor(-0.4242) tensor(2.8215)

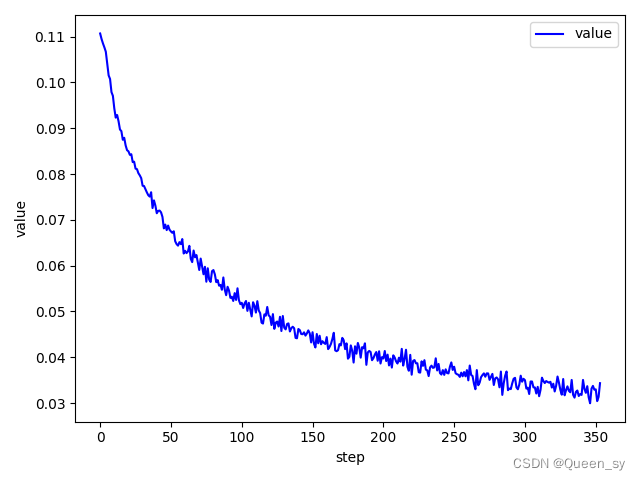
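The minimum and maximum printed above can be checked by hand. `ToTensor()` scales pixel values to the range [0, 1], and `Normalize((0.1307,), (0.3081,))` then maps each pixel p to (p - mean) / std. The short sketch below redoes that arithmetic in plain Python; the helper name `normalize` is only for illustration and is not part of torchvision.

```python
# Mean and std are the values passed to Normalize in the loaders above.
mean, std = 0.1307, 0.3081


def normalize(p):
    """Apply the same per-pixel mapping as Normalize: (p - mean) / std."""
    return (p - mean) / std


lo = normalize(0.0)  # darkest possible pixel after ToTensor()
hi = normalize(1.0)  # brightest possible pixel after ToTensor()
print(round(lo, 4), round(hi, 4))  # -0.4242 2.8215
```

These two values match the `tensor(-0.4242)` and `tensor(2.8215)` in the printed result.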

Training

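# Build the network: three fully connected layers, with ReLU after the first two
class Net(nn.Module):  # subclass nn.Module
    def __init__(self):
        super(Net, self).__init__()
        # Linear layers compute xw + b.
        # 28*28 input features (fixed by the image size) -> 256 features (chosen empirically);
        # this output is only an intermediate representation.
        self.fc1 = nn.Linear(28 * 28, 256)
        self.fc2 = nn.Linear(256, 64)
        # 10-class classification needs ten output nodes, so the last size must be 10.
        # Each output is the score of a sample for one of the digits 0-9
        # (raw scores rather than normalized probabilities, since no softmax is applied).
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        # x: [b, 784], where b is the number of images in the batch
        # (flattened from [b, 1, 28, 28] before the call)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        # Whether the last layer gets an activation is an empirical choice; none is used here
        x = self.fc3(x)
        return x

# Training: compute gradients and update the parameters
# Network instance
net = Net()
# Optimizer
optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)
train_loss = []

# Three passes (epochs) over the dataset
for epoch in range(3):
    # One pass over the whole dataset
    for batch_idx, (x, y) in enumerate(train_loader):
        # print(x.shape, y.shape)
        # x: [b=512, 1, 28, 28], y: [512]
        # A fully connected (xw + b) layer only accepts input of shape [b, feature],
        # so flatten [b, 1, 28, 28] => [b, 784]
        x = x.view(x.size(0), 28 * 28)
        out = net(x)
        # out.shape == torch.Size([512, 10])
        y_onehot = one_hot(y)
        loss = F.mse_loss(out, y_onehot)
        train_loss.append(loss.item())

        optimizer.zero_grad()
        loss.backward()
        # Basic rule: w = w - lr * grad (with momentum=0.9, past gradients are also accumulated into the step)
        optimizer.step()

        if batch_idx % 10 == 0:
            print(epoch, batch_idx, loss.item())

plot_curve(train_loss)
# Training has now produced the parameters [w1, b1, w2, b2, w3, b3]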
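The optimizer above is created with `momentum=0.9`, so each step is not the bare `w = w - lr * grad`. As a rough illustration, the sketch below applies the usual momentum rule (velocity `v = momentum * v + grad`, then `w = w - lr * v`) to a single scalar weight. To my understanding this is the form `optim.SGD` uses when dampening is 0 and Nesterov is off, but treat it as a sketch under that assumption. The function `sgd_momentum_step` and the toy objective f(w) = w² are made up for this example.

```python
def sgd_momentum_step(w, v, grad, lr=0.01, momentum=0.9):
    """One update: the velocity accumulates gradients, the weight moves along it."""
    v = momentum * v + grad
    w = w - lr * v
    return w, v


# Toy problem (hypothetical): minimise f(w) = w**2, whose gradient is 2 * w.
w, v = 1.0, 0.0
history = []
for _ in range(3):
    w, v = sgd_momentum_step(w, v, grad=2.0 * w)
    history.append(w)

# For comparison: three plain SGD steps shrink w by a factor (1 - 2 * lr) each time.
plain = 1.0 * (1 - 2 * 0.01) ** 3
print(history, plain)
```

The first step is identical to plain SGD because the velocity starts at zero. From the second step on, the accumulated velocity makes each move larger, so `history[2]` ends up below `plain`.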

Result (epoch, batch index, loss)

0 0 0.11067488044500351

0 10 0.0943082645535469

0 20 0.08495017886161804

0 30 0.07744021713733673

0 40 0.0714396983385086

0 50 0.0674654096364975

0 60 0.06324262917041779

0 70 0.059055496007204056

0 80 0.05903492122888565

0 90 0.0553865060210228

0 100 0.051882218569517136

0 110 0.04975864663720131

1 0 0.05097641423344612

1 10 0.045732591301202774

1 20 0.04423248767852783

1 30 0.04524523392319679

1 40 0.04314319044351578

1 50 0.04154777526855469

1 60 0.041426219046115875

1 70 0.038350772112607956

1 80 0.03864293918013573

1 90 0.039954546838998795

1 100 0.03705741837620735

1 110 0.03812757134437561

2 0 0.0377984382212162

2 10 0.03647816926240921

2 20 0.03585110604763031

2 30 0.03720710426568985

2 40 0.03573155403137207

2 50 0.035967521369457245

2 60 0.03404301032423973

2 70 0.03343154117465019

2 80 0.03460792452096939

2 90 0.03182953968644142

2 100 0.032444968819618225

2 110 0.029968297109007835
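Two features of this log can be checked with simple arithmetic. First, the loss starts near 0.1: `F.mse_loss` averages over all elements, and a 10-dimensional one-hot target compared against an all-zero prediction gives exactly 1/10. An untrained network's outputs are small but not zero, which is roughly consistent with the first logged value of about 0.11. Second, the MNIST training set has 60,000 images, so a batch size of 512 gives 118 batches per epoch, and the last index printed by `batch_idx % 10 == 0` is 110. The sketch below uses plain Python. `one_hot_sketch` and `mse` are stand-ins written for this example, not the author's `utils.one_hot` or the PyTorch function.

```python
import math


def one_hot_sketch(label, depth=10):
    """Return a list of length `depth` with 1.0 at position `label`."""
    vec = [0.0] * depth
    vec[label] = 1.0
    return vec


def mse(pred, target):
    """Mean of squared differences over all elements."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


# Loss of an all-zero prediction against a one-hot target for the digit 3.
initial_loss = mse([0.0] * 10, one_hot_sketch(3))

# Number of batches per epoch and the last batch index that gets printed.
num_train, batch_size = 60000, 512
batches_per_epoch = math.ceil(num_train / batch_size)
last_logged_idx = (batches_per_epoch - 1) // 10 * 10
last_batch_size = num_train - (batches_per_epoch - 1) * batch_size

print(initial_loss, batches_per_epoch, last_logged_idx, last_batch_size)
# 0.1 118 110 96
```

The final batch of each epoch therefore holds only 96 images, which is why the flattening step uses `x.size(0)` rather than a hard-coded 512.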

Testing: computing the accuracy

# Accuracy test
total_correct = 0
with torch.no_grad():  # gradients are not needed for evaluation
    for x, y in test_loader:
        x = x.view(x.size(0), 28 * 28)
        out = net(x)
        # out: [b, 10]
        # Index of the largest value along dim 1 (the dimension of size 10, not the batch dimension 0)
        # pred: [b]
        pred = out.argmax(dim=1)
        correct = pred.eq(y).sum().float()
        total_correct += correct.item()

total_num = len(test_loader.dataset)
acc = total_correct / total_num
print(acc)

Result

0.8792
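To make the accuracy computation concrete, here is the same logic in plain Python on a tiny made-up batch. The predicted class is the index of the largest score in each row, and accuracy is the share of predictions that equal the label. The scores and labels are invented for illustration, and the helper `argmax` stands in for `out.argmax(dim=1)`.

```python
def argmax(scores):
    """Index of the largest value in a list of scores."""
    return max(range(len(scores)), key=lambda i: scores[i])


# Hypothetical network outputs for 4 samples and 3 classes, with their true labels.
outputs = [
    [0.1, 0.7, 0.2],
    [0.9, 0.05, 0.05],
    [0.2, 0.3, 0.5],
    [0.6, 0.3, 0.1],
]
labels = [1, 0, 1, 0]

preds = [argmax(row) for row in outputs]                    # one class index per row
correct = sum(int(p == t) for p, t in zip(preds, labels))   # number of matches
acc = correct / len(labels)
print(preds, correct, acc)  # [1, 0, 2, 0] 3 0.75
```

By the same reasoning, an accuracy of 0.8792 on the 10,000-image MNIST test set corresponds to 8,792 correctly classified images.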

