目录

1 实验目的

1. 通过“示例:带有多层感知器的姓氏分类”,掌握多层感知器在多层分类中的应用

2. 掌握每种类型的神经网络层对它所计算的数据张量的大小和形状的影响

3. 尝试带有dropout的SurnameClassifier模型,看看它如何更改结果

2 实验内容

2.1 多层感知机的实现

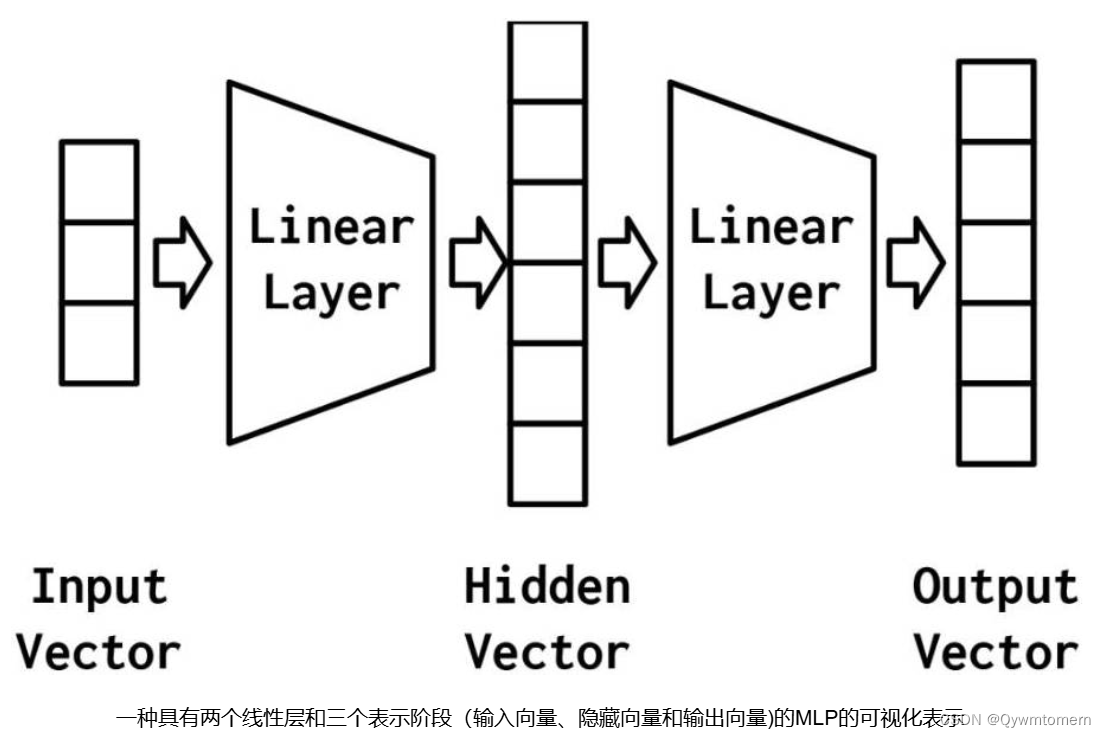

最简单的MLP由三个表示阶段和两个线性层组成。第一阶段是输入向量,这是给定给模型的向量。给定输入向量,第一个线性层计算一个隐藏向量——表示的第二阶段。使用这个隐藏的向量,第二个线性层计算一个输出向量。在多类设置中,输出向量是类数量的大小。图例只展示了一个隐藏的向量,但是有可能有多个中间阶段,每个阶段产生自己的隐藏向量。最终的隐藏向量总是通过线性层和非线性的组合映射到输出向量。

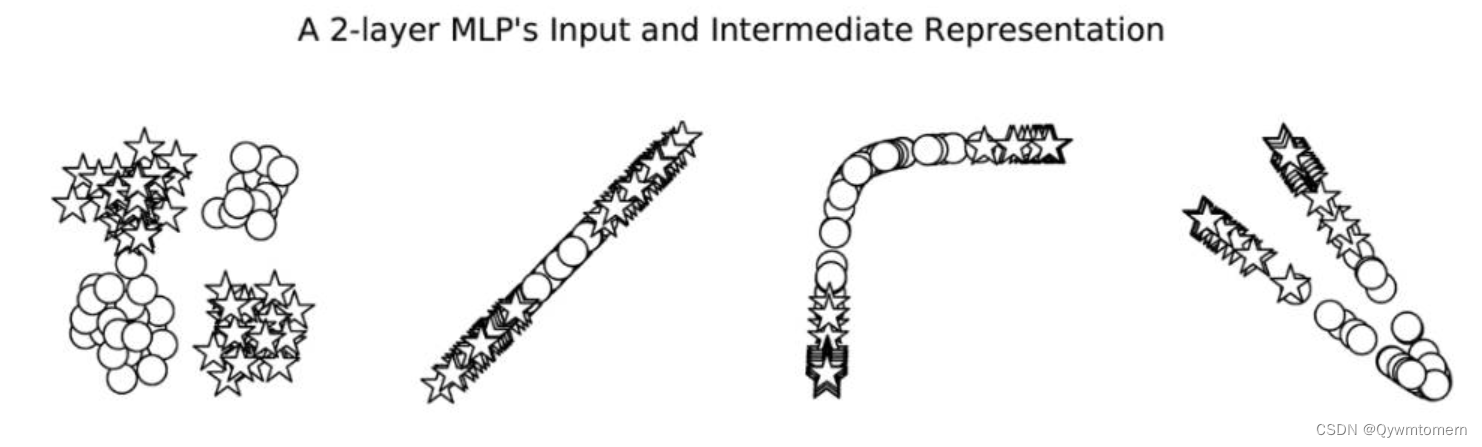

MLP的能力来自于使用线性层之间的非线性激活函数来扭曲样本的表示空间,使得最后能用一个超平面进行划分。

使用pytorch实现MLP

class MultilayerPerceptron(nn.Module):

def __init__(self, input_dim, hidden_dim, output_dim):

"""

Args:

input_dim (int): the size of the input vectors

hidden_dim (int): the output size of the first Linear layer

output_dim (int): the output size of the second Linear layer

"""

super(MultilayerPerceptron, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim)

self.fc2 = nn.Linear(hidden_dim, output_dim)

def forward(self, x_in, apply_softmax=False):

"""The forward pass of the MLP

Args:

x_in (torch.Tensor): an input data tensor.

x_in.shape should be (batch, input_dim)

apply_softmax (bool): a flag for the softmax activation

should be false if used with the Cross Entropy losses

Returns:

the resulting tensor. tensor.shape should be (batch, output_dim)

"""

intermediate = F.relu(self.fc1(x_in))

output = self.fc2(intermediate)

if apply_softmax:

output = F.softmax(output, dim=1)

return output结构和上述图例一致,使用nn.Linear构建线性层,nn,functional调用激活函数relu

2.2 使用多层感知机对姓氏的国籍进行分类

首先需要一个改写的数据集类对数据集进行划分处理(包括数据类别占比平衡,训练、验证、测试集划分等)。相应的,创建一个调用DataLoader实例的函数,用来载入每次训练所需数据。

class SurnameDataset(Dataset):

def __init__(self, surname_df, vectorizer):

"""

Args:

name_df (pandas.DataFrame): the dataset

vectorizer (SurnameVectorizer): vectorizer instatiated from dataset

"""

self.surname_df = surname_df

self._vectorizer = vectorizer

self.train_df = self.surname_df[self.surname_df.split=='train']

self.train_size = len(self.train_df)

self.val_df = self.surname_df[self.surname_df.split=='val']

self.validation_size = len(self.val_df)

self.test_df = self.surname_df[self.surname_df.split=='test']

self.test_size = len(self.test_df)

self._lookup_dict = {'train': (self.train_df, self.train_size),

'val': (self.val_df, self.validation_size),

'test': (self.test_df, self.test_size)}

self.set_split('train')

# Class weights

class_counts = surname_df.nationality.value_counts().to_dict()

def sort_key(item):

return self._vectorizer.nationality_vocab.lookup_token(item[0])

sorted_counts = sorted(class_counts.items(), key=sort_key)

frequencies = [count for _, count in sorted_counts]

self.class_weights = 1.0 / torch.tensor(frequencies, dtype=torch.float32)

@classmethod

def load_dataset_and_make_vectorizer(cls, surname_csv):

"""Load dataset and make a new vectorizer from scratch

Args:

surname_csv (str): location of the dataset

Returns:

an instance of SurnameDataset

"""

surname_df = pd.read_csv(surname_csv)

train_surname_df = surname_df[surname_df.split=='train']

return cls(surname_df, SurnameVectorizer.from_dataframe(train_surname_df))

@classmethod

def load_dataset_and_load_vectorizer(cls, surname_csv, vectorizer_filepath):

"""Load dataset and the corresponding vectorizer.

Used in the case in the vectorizer has been cached for re-use

Args:

surname_csv (str): location of the dataset

vectorizer_filepath (str): location of the saved vectorizer

Returns:

an instance of SurnameDataset

"""

surname_df = pd.read_csv(surname_csv)

vectorizer = cls.load_vectorizer_only(vectorizer_filepath)

return cls(surname_df, vectorizer)

@staticmethod

def load_vectorizer_only(vectorizer_filepath):

"""a static method for loading the vectorizer from file

Args:

vectorizer_filepath (str): the location of the serialized vectorizer

Returns:

an instance of SurnameDataset

"""

with open(vectorizer_filepath) as fp:

return SurnameVectorizer.from_serializable(json.load(fp))

def save_vectorizer(self, vectorizer_filepath):

"""saves the vectorizer to disk using json

Args:

vectorizer_filepath (str): the location to save the vectorizer

"""

with open(vectorizer_filepath, "w") as fp:

json.dump(self._vectorizer.to_serializable(), fp)

def get_vectorizer(self):

""" returns the vectorizer """

return self._vectorizer

def set_split(self, split="train"):

""" selects the splits in the dataset using a column in the dataframe """

self._target_split = split

self._target_df, self._target_size = self._lookup_dict[split]

def __len__(self):

return self._target_size

def __getitem__(self, index):

"""the primary entry point method for PyTorch datasets

Args:

index (int): the index to the data point

Returns:

a dictionary holding the data point's features (x_data) and label (y_target)

"""

row = self._target_df.iloc[index]

surname_matrix = \

self._vectorizer.vectorize(row.surname)

nationality_index = \

self._vectorizer.nationality_vocab.lookup_token(row.nationality)

return {'x_surname': surname_matrix,

'y_nationality': nationality_index}

def get_num_batches(self, batch_size):

"""Given a batch size, return the number of batches in the dataset

Args:

batch_size (int)

Returns:

number of batches in the dataset

"""

return len(self) // batch_size

def generate_batches(dataset, batch_size, shuffle=True,

drop_last=True, device="cpu"):

"""

A generator function which wraps the PyTorch DataLoader. It will

ensure each tensor is on the write device location.

"""

dataloader = DataLoader(dataset=dataset, batch_size=batch_size,

shuffle=shuffle, drop_last=drop_last)

for data_dict in dataloader:

out_data_dict = {}

for name, tensor in data_dict.items():

out_data_dict[name] = data_dict[name].to(device)

yield out_data_dict其次需要一个词汇表,将字符转换为可以输入的数字

class Vocabulary(object):

"""Class to process text and extract vocabulary for mapping"""

def __init__(self, token_to_idx=None, add_unk=True, unk_token="<UNK>"):

"""

Args:

token_to_idx (dict): a pre-existing map of tokens to indices

add_unk (bool): a flag that indicates whether to add the UNK token

unk_token (str): the UNK token to add into the Vocabulary

"""

if token_to_idx is None:

token_to_idx = {}

self._token_to_idx = token_to_idx

self._idx_to_token = {idx: token

for token, idx in self._token_to_idx.items()}

self._add_unk = add_unk

self._unk_token = unk_token

self.unk_index = -1

if add_unk:

self.unk_index = self.add_token(unk_token)

def to_serializable(self):

""" returns a dictionary that can be serialized """

return {'token_to_idx': self._token_to_idx,

'add_unk': self._add_unk,

'unk_token': self._unk_token}

@classmethod

def from_serializable(cls, contents):

""" instantiates the Vocabulary from a serialized dictionary """

return cls(**contents)

def add_token(self, token):

"""Update mapping dicts based on the token.

Args:

token (str): the item to add into the Vocabulary

Returns:

index (int): the integer corresponding to the token

"""

try:

index = self._token_to_idx[token]

except KeyError:

index = len(self._token_to_idx)

self._token_to_idx[token] = index

self._idx_to_token[index] = token

return index

def add_many(self, tokens):

"""Add a list of tokens into the Vocabulary

Args:

tokens (list): a list of string tokens

Returns:

indices (list): a list of indices corresponding to the tokens

"""

return [self.add_token(token) for token in tokens]

def lookup_token(self, token):

"""Retrieve the index associated with the token

or the UNK index if token isn't present.

Args:

token (str): the token to look up

Returns:

index (int): the index corresponding to the token

Notes:

`unk_index` needs to be >=0 (having been added into the Vocabulary)

for the UNK functionality

"""

if self.unk_index >= 0:

return self._token_to_idx.get(token, self.unk_index)

else:

return self._token_to_idx[token]

def lookup_index(self, index):

"""Return the token associated with the index

Args:

index (int): the index to look up

Returns:

token (str): the token corresponding to the index

Raises:

KeyError: if the index is not in the Vocabulary

"""

if index not in self._idx_to_token:

raise KeyError("the index (%d) is not in the Vocabulary" % index)

return self._idx_to_token[index]

def __str__(self):

return "<Vocabulary(size=%d)>" % len(self)

def __len__(self):

return len(self._token_to_idx)还需要向量化工具,使每个字符对应的数字转化为对应的数字向量

class SurnameVectorizer(object):

""" The Vectorizer which coordinates the Vocabularies and puts them to use"""

def __init__(self, surname_vocab, nationality_vocab):

self.surname_vocab = surname_vocab

self.nationality_vocab = nationality_vocab

def vectorize(self, surname):

"""Vectorize the provided surname

Args:

surname (str): the surname

Returns:

one_hot (np.ndarray): a collapsed one-hot encoding

"""

vocab = self.surname_vocab

one_hot = np.zeros(len(vocab), dtype=np.float32)

for token in surname:

one_hot[vocab.lookup_token(token)] = 1

return one_hot

@classmethod

def from_dataframe(cls, surname_df):

"""Instantiate the vectorizer from the dataset dataframe

Args:

surname_df (pandas.DataFrame): the surnames dataset

Returns:

an instance of the SurnameVectorizer

"""

surname_vocab = Vocabulary(unk_token="@")

nationality_vocab = Vocabulary(add_unk=False)

for index, row in surname_df.iterrows():

for letter in row.surname:

surname_vocab.add_token(letter)

nationality_vocab.add_token(row.nationality)

return cls(surname_vocab, nationality_vocab)满足上述条件,随后即可在MLP上实现姓氏分类

class SurnameClassifier(nn.Module):

def __init__(self, input_dim, hidden_dim, output_dim):

super(SurnameClassifier, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim)

self.fc2 = nn.Linear(hidden_dim, output_dim)

def forward(self, x_in, apply_softmax=False, apply_dropout=False):

intermediate_vector = F.relu(self.fc1(x_in))

if apply_softmax:

prediction_vector = self.fc2(F.dropout(intermediate_vector, p=0.5))

else:

prediction_vector = self.fc2(intermediate_vector)

if apply_softmax:

prediction_vector = F.softmax(prediction_vector, dim=1)

return prediction_vector使用Namespace设置训练参数

args = Namespace(

# Data and path information

surname_csv="data/surnames/surnames_with_splits.csv",

vectorizer_file="vectorizer.json",

model_state_file="model.pth",

save_dir="model_storage/ch4/surname_mlp",

# Model hyper parameters

hidden_dim=300,

# Training hyper parameters

seed=1337,

num_epochs=100,

early_stopping_criteria=5,

learning_rate=0.001,

batch_size=64,

# Runtime options

cuda=False,

reload_from_files=False,

expand_filepaths_to_save_dir=True,

)实例化数据集、姓氏分类模型、损失函数(多分类使用交叉熵损失比较有利)、优化器等

dataset = SurnameDataset.load_dataset_and_make_vectorizer(args.surname_csv)

vectorizer = dataset.get_vectorizer()

classifier = SurnameClassifier(input_dim=len(vectorizer.surname_vocab),

hidden_dim=args.hidden_dim,

output_dim=len(vectorizer.nationality_vocab))

loss_func = nn.CrossEntropyLoss(dataset.class_weights)

optimizer = optim.Adam(classifier.parameters(), lr=args.learning_rate, betas=(0.9, 0.999))利用训练数据,计算模型输出、损失和梯度。然后,使用梯度来更新模型

for batch_index, batch_dict in enumerate(batch_generator):

# the training routine is these 5 steps:

# --------------------------------------

# step 1. zero the gradients

optimizer.zero_grad()

# step 2. compute the output

y_pred = classifier(batch_dict['x_surname'], apply_dropout=True)

# y_pred = classifier(batch_dict['x_surname'])

# step 3. compute the loss

loss = loss_func(y_pred, batch_dict['y_nationality'])

loss_t = loss.item()

running_loss += (loss_t - running_loss) / (batch_index + 1)

# step 4. use loss to produce gradients

loss.backward()

# step 5. use optimizer to take gradient step

optimizer.step()最后在测试集上计算损失和准确率,来评估模型的性能

for batch_index, batch_dict in enumerate(batch_generator):

# compute the output

y_pred = classifier(batch_dict['x_surname'])

# compute the loss

loss = loss_func(y_pred, batch_dict['y_nationality'])

loss_t = loss.item()

running_loss += (loss_t - running_loss) / (batch_index + 1)

# compute the accuracy

acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])

running_acc += (acc_t - running_acc) / (batch_index + 1)2.3 使用卷积神经网络对姓氏的国籍进行分类

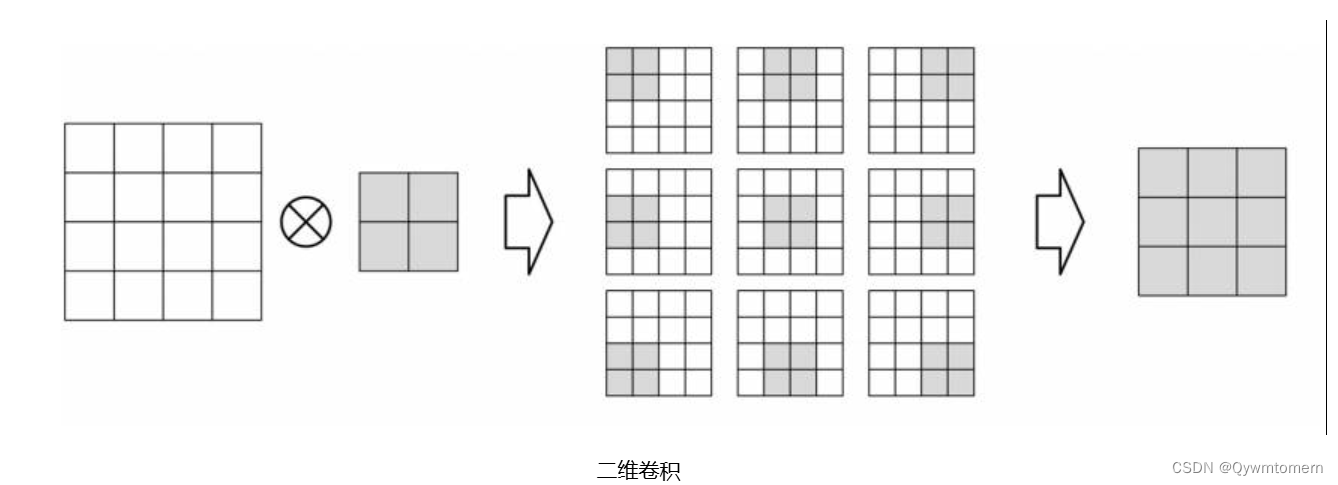

卷积神经网络就是用不同的卷积核(kernel)去提取输入信息的特征,然后根据提取的特征进行分类(深层是卷积层提取特征,上层一般是传统分类器)

卷积操作有六个参数,卷积核大小、步长、输入通道数、输出通道数、填充与膨胀

卷积操作有六个参数,卷积核大小、步长、输入通道数、输出通道数、填充与膨胀

步长是卷积核每次移动的距离。输出通道数是你想得到的特征图数量,每一个卷积核对应一张特征图。填充是指在进行卷积操作前对源输入进行扩充的操作,使得能够自由控制卷积前后的大小。膨胀控制卷积核如何应用于输入矩阵,即核内元素间的间隔大小。

在pytorch上实现卷积神经网络分类器

与MLP一样,依然需要数据集类、词汇表、向量化工具。与之不同的是,cnn的数据集采用one-hot矩阵表示,而不是收缩的one-hot向量。即输入是一个二维矩阵,维度大小分别是最长姓氏的长度与one-hot向量大小。

class SurnameClassifier(nn.Module):

def __init__(self, initial_num_channels, num_classes, num_channels):

super(SurnameClassifier, self).__init__()

self.convnet = nn.Sequential(

nn.Conv1d(in_channels=initial_num_channels,

out_channels=num_channels, kernel_size=3),

nn.ELU(),

nn.Conv1d(in_channels=num_channels, out_channels=num_channels,

kernel_size=3, stride=2),

nn.ELU(),

nn.Conv1d(in_channels=num_channels, out_channels=num_channels,

kernel_size=3, stride=2),

nn.ELU(),

nn.Conv1d(in_channels=num_channels, out_channels=num_channels,

kernel_size=3),

nn.ELU()

)

self.fc = nn.Linear(num_channels, num_classes)

def forward(self, x_surname, apply_softmax=False):

features = self.convnet(x_surname).squeeze(dim=2)

prediction_vector = self.fc(features)

if apply_softmax:

prediction_vector = F.softmax(prediction_vector, dim=1)

return prediction_vector序列模块是封装线性操作序列的方便包装器。在此模型中,我们使用它来封装Conv1d卷积操作。ELU是类似于实验3中介绍的ReLU的非线性函数,但是它不是将值裁剪到0以下,而是对它们求幂。

最后将特征(num_channels)线性转换为类别数(num_classes),便于分类。

同样使用Namespace设置训练参数

args = Namespace(

# Data and Path information

surname_csv="data/surnames/surnames_with_splits.csv",

vectorizer_file="vectorizer.json",

model_state_file="model.pth",

save_dir="model_storage/ch4/cnn",

# Model hyper parameters

hidden_dim=100,

num_channels=256,

# Training hyper parameters

seed=1337,

learning_rate=0.001,

batch_size=128,

num_epochs=100,

early_stopping_criteria=5,

dropout_p=0.1,

# Runtime options

cuda=False,

reload_from_files=False,

expand_filepaths_to_save_dir=True,

catch_keyboard_interrupt=True

)训练过程与MLP一致

2.4 不同类型的神经网络层计算前后的形状变化

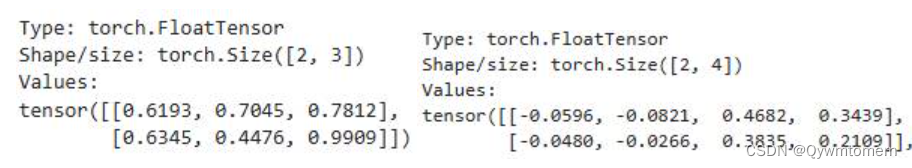

多层感知机(MLP)中只有全连接层(FC),所给输入计算前后形状变化仅与指定输出维度有关

nn.Linear(input_dim, output_dim)即output_dim

例如下列模型

batch_size = 2 # number of samples input at once

input_dim = 3

hidden_dim = 100

output_dim = 4

# Initialize model

mlp = MultilayerPerceptron(input_dim, hidden_dim, output_dim)

x_input = torch.rand(batch_size, input_dim)

describe(x_input)

y_output = mlp(x_input, apply_softmax=False)

describe(y_output)计算前后输入形状变化

卷积神经网络中特有的层是卷积层。在本次自然语言处理示例中使用的是Conv1d(in_channels, out_channels, kernel_size, stride)

卷积计算后的输出大小=(原有大小 - 卷积核大小)/2+1

通道数为out_channels,输出样本数不变。

batch_size = 2

one_hot_size = 10

sequence_width = 7

data = torch.randn(batch_size, one_hot_size, sequence_width)

conv1 = nn.Conv1d(in_channels=one_hot_size, out_channels=16,

kernel_size=3)

intermediate1 = conv1(data)

print(data.size())

print(intermediate1.size())

conv2 = nn.Conv1d(in_channels=16, out_channels=32, kernel_size=3)

conv3 = nn.Conv1d(in_channels=32, out_channels=64, kernel_size=3)

intermediate2 = conv2(intermediate1)

intermediate3 = conv3(intermediate2)

print(intermediate2.size())

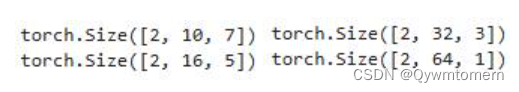

print(intermediate3.size())输出结果为

使用三种方式降维,以便输入后续的FC层

y_output = intermediate3.squeeze()

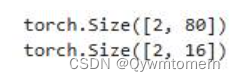

print(y_output.size())1、squeeze() 可以去掉size为1的维度,输出 torch.Size([2, 64])

# Method 2 of reducing to feature vectors

print(intermediate1.view(batch_size, -1).size())

# Method 3 of reducing to feature vectors

print(torch.mean(intermediate1, dim=2).size())

# print(torch.max(intermediate1, dim=2).size())

# print(torch.sum(intermediate1, dim=2).size())2、张量的view操作可以将多余的维度平展开

3、在指定维度方向求特殊值,可以将该维度去除

输出为

3 对模型性能的探究

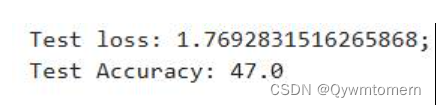

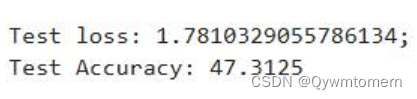

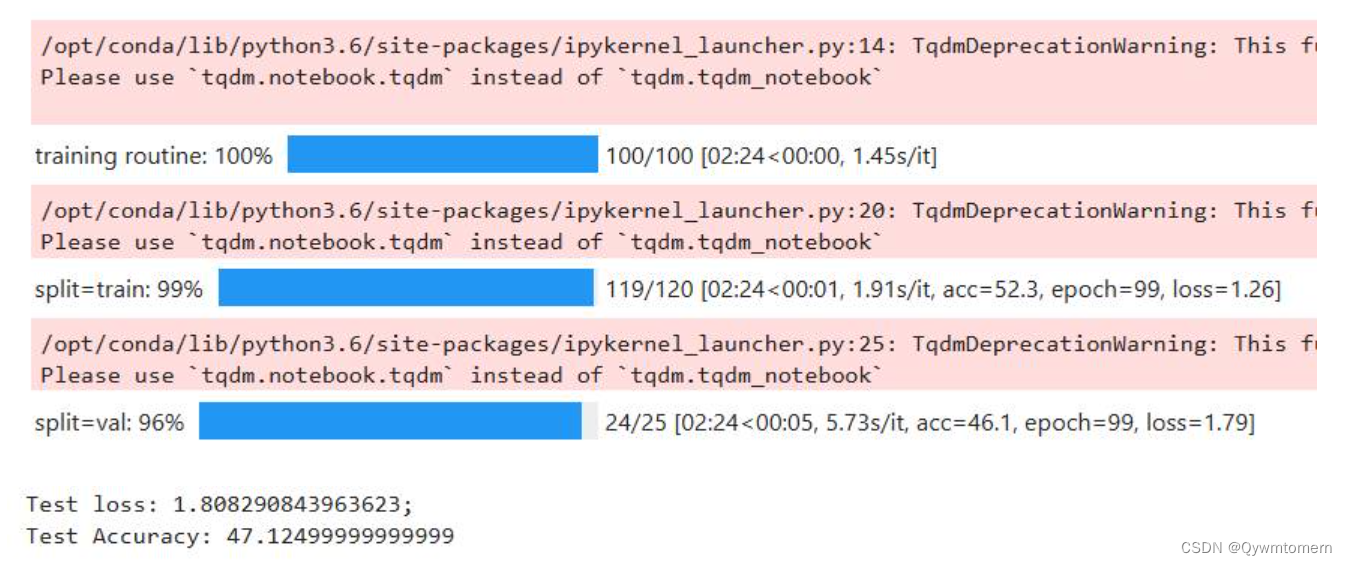

未使用任何优化方法时,模型在测试集上准确率为47%左右,并且陷入一个局部最优点

单独使用dropout操作后,依旧陷于这个局部最优点

单独使用adam(0.9, 0.999)(动量法、自适应梯度参数),也不能冲出局部最优点

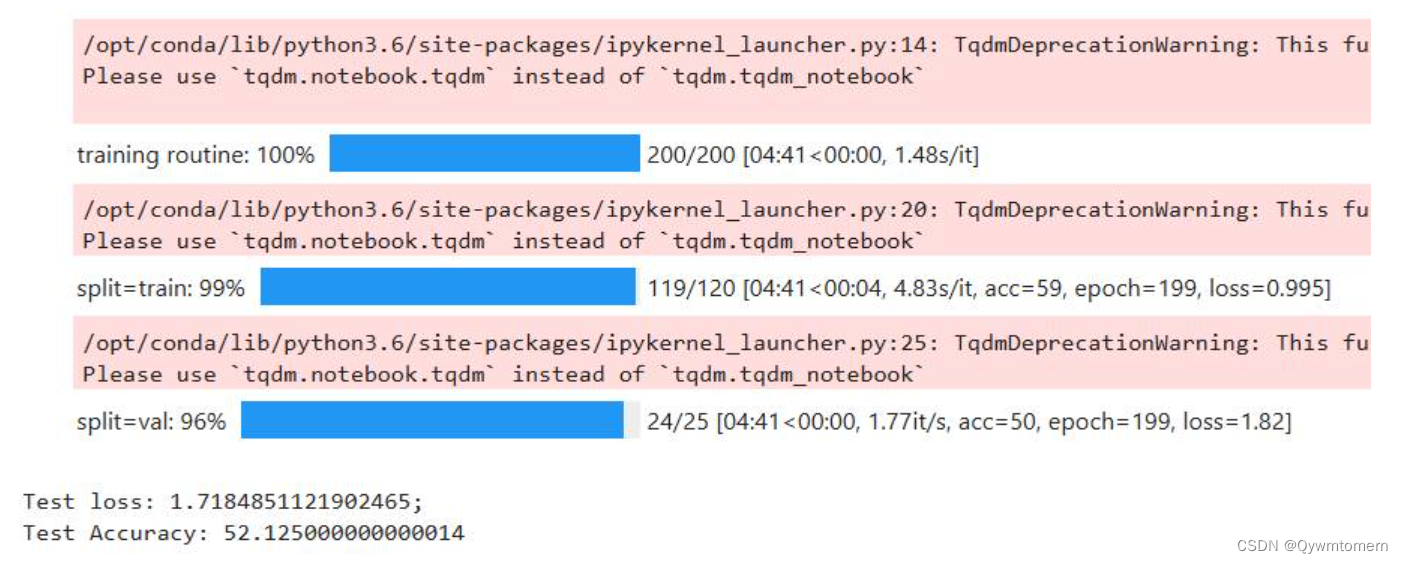

同时使用时,可以冲出此局部最优,来到52左右的准确率,同时陷入另一个局部最优

MLP仅依靠对样本空间的扭曲来进行多类划分,没有对原输入信息进行特征提取,准确率比不上对输入信息进行深度特征提取,并按照特征匹配打分的CNN

875

875

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?