PyTorch - Python deep learning neural network API

- A tensor is an n-dimensional array.

- PyTorch interoperates closely with NumPy. For example, torch.Tensor objects created from NumPy ndarray objects with torch.from_numpy() share memory with the source array. This makes the transition between PyTorch and NumPy very cheap from a performance perspective.

- Tensors are essential for deep learning and neural networks because they are the data structure that we ultimately use for building and training our neural networks.

- The table below lists the PyTorch packages and their corresponding descriptions. These are the primary PyTorch components we'll be learning about and using as we build neural networks in this series.

Primary PyTorch packages that will be used in this series:

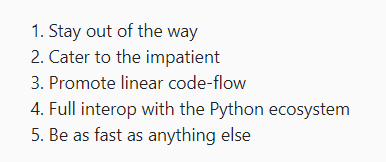

- Philosophy of PyTorch
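
As a brief aside on the NumPy memory sharing mentioned in the list above, here is a minimal sketch. It assumes NumPy is installed alongside PyTorch; the variable names are only illustrative. It contrasts torch.from_numpy(), which shares memory with the source array, with torch.tensor(), which copies the data.

```python
import numpy as np
import torch

data = np.array([1, 2, 3])

shared = torch.from_numpy(data)  # shares memory with `data`
copied = torch.tensor(data)      # makes an independent copy

# Modify the NumPy array in place after both tensors were created.
data[0] = 100

print(shared)  # the first element reflects the in-place change
print(copied)  # the copy still holds the original values
```

Because no data is copied in the shared case, moving between the two libraries costs almost nothing, but in-place changes on one side are visible on the other.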

PyTorch Install

- [1] First, download Anaconda (download link).

- [2] Go to the PyTorch website and select the matching PyTorch version (download link).

- [3] Open Anaconda Prompt. Since I had already created a virtual environment, I switch to that environment here and enter the install command.

- [4] Notice that we are installing both PyTorch and torchvision. Also, there is no need to install CUDA separately. The needed CUDA software comes installed with PyTorch if a CUDA version is selected in step [2]. All we need to do is select a version of CUDA if we have a supported Nvidia GPU on our system.

- [5] Verify the PyTorch install

To use PyTorch, we use import torch.

To check the version, we use torch.__version__

To verify our GPU capabilities, we use torch.cuda.is_available()

To check the CUDA version, we use torch.version.cuda
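
The verification steps above can be collected into one short script. This is just a sketch; the printed values depend on the installed build, so no particular output is assumed.

```python
import torch

version = torch.__version__            # installed PyTorch version string
has_gpu = torch.cuda.is_available()    # True only if a usable CUDA GPU is found
cuda_version = torch.version.cuda      # None on CPU-only builds

print("PyTorch version:", version)
print("CUDA available:", has_gpu)
print("CUDA version:", cuda_version)
```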

Tensors Explained - Data Structures of Deep Learning

- What is a tensor?

A tensor is the primary data structure used by neural networks. Each of the following is a specific instance of the more general concept of a tensor:

number, scalar, array, vector, 2d-array, matrix

Let's organize these into two groups:

[number, array, 2d-array]

[scalar, vector, matrix]

The first group of three terms (number, array, 2d-array) is typically used in computer science, while the second group (scalar, vector, matrix) is typically used in mathematics.

- Indexes required to access an element (the number of indexes needed to reach each element)

For the array a = [1, 2, 3, 4], accessing the element 3 requires one index: a[2]

For the array d = [[1, 2, 3], [4, 5, 6], [7, 8, 9]], accessing the element 3 requires two indexes: d[0][2]

Note that if we have a number or scalar, we don't need an index; we can just refer to the number or scalar directly.

When more than two indexes are required to access a specific element, in computer science we stop using words like number, array, and 2d-array, and start using the term multidimensional array or nd-array. The n tells us the number of indexes required to access a specific element within the structure.
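
The index counts described above can be checked with plain Python lists. The names number and nd are hypothetical additions for illustration; a and d are the arrays from the text.

```python
number = 7                                   # a number: no index needed
a = [1, 2, 3, 4]                             # array: one index
d = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]        # 2d-array: two indexes
nd = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]    # nd-array with n = 3: three indexes

print(number)        # referred to directly
print(a[2])          # 3
print(d[0][2])       # 3
print(nd[1][0][1])   # 6
```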

2. One thing to note about the dimension of a tensor is that it differs from what we mean when we refer to the dimension of a vector in a vector space. The dimension of a tensor does not tell us how many components exist within the tensor. A three-dimensional vector is an ordered triple with exactly three components, but a three-dimensional tensor can have more than three elements. For example, the two-dimensional tensor

d = [[1, 2, 3],

[4, 5, 6],

[7, 8, 9]]

contains 9 elements.
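
To make the distinction concrete, the sketch below compares the number of dimensions of a tensor with its element count, using Tensor.dim() and Tensor.numel(). The vector v is a hypothetical example of a "three-dimensional vector" in the vector-space sense.

```python
import torch

d = torch.tensor([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
v = torch.tensor([1, 2, 3])  # three components, but only one tensor dimension

print(d.dim(), d.numel())  # 2 9
print(v.dim(), v.numel())  # 1 3
```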

Rank, Axes, and Shape Explained - Tensors for Deep Learning

1. Rank of a tensor

The rank of a tensor refers to the number of dimensions present within the tensor.

Suppose we are told that we have a rank-2 tensor. This means all of the following: we have a matrix, we have a 2d-array, and we have a 2d-tensor.

A tensor's rank tells us how many indexes are needed to refer to a specific element within the tensor.
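
As a small sketch of this idea, the rank can be read off as the length of a tensor's shape, and supplying that many indexes reaches a single element. The tensor names below are hypothetical.

```python
import torch

scalar = torch.tensor(5)                  # rank 0
vector = torch.tensor([1, 2, 3])          # rank 1
matrix = torch.tensor([[1, 2], [3, 4]])   # rank 2
cube = torch.zeros(2, 3, 4)               # rank 3

ranks = [len(t.shape) for t in (scalar, vector, matrix, cube)]
print(ranks)  # [0, 1, 2, 3]

# Using as many indexes as the rank leaves a single element (a rank-0 tensor).
element = matrix[1][0]
print(element.item(), element.dim())  # 3 0
```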

2. Axes of a tensor

An axis of a tensor is a specific dimension of a tensor.

If we say that a tensor is a rank-2 tensor, we mean that the tensor has two dimensions, or equivalently, that the tensor has two axes. Elements are said to exist or run along an axis. This running is constrained by the length of each axis.

The length of each axis tells us how many indexes are available along each axis.

For example, consider the array dd = [[1, 2, 3], [4, 5, 6], [7, 8, 9]].

Each element along the first axis is an array:

dd[0] = [1, 2, 3] dd[1] = [4, 5, 6] dd[2] = [7, 8, 9]

Each element along the second axis is a number:

dd[0][0] = 1 dd[1][0] = 4 dd[2][0] = 7

dd[0][1] = 2 dd[1][1] = 5 dd[2][1] = 8

dd[0][2] = 3 dd[1][2] = 6 dd[2][2] = 9

Note that, with tensors, the elements of the last axis are always numbers. Every other axis will contain n-dimensional arrays. This is what we see in this example, but the idea generalizes.

The rank of a tensor tells us how many axes a tensor has, and the lengths of these axes lead us to the very important concept known as the shape of a tensor.
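
The same walk along each axis can be sketched with a torch.Tensor. Iterating over a tensor steps along its first axis; the helper names rows and numbers are hypothetical.

```python
import torch

dd = torch.tensor([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

# Elements along the first axis are arrays (rank-1 tensors).
rows = [row.tolist() for row in dd]
print(rows)     # [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

# Elements along the last axis are single numbers (rank-0 tensors).
numbers = [value.item() for value in dd[0]]
print(numbers)  # [1, 2, 3]
```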

3. Shape of a tensor

The shape of a tensor gives us the length of each axis, which in turn tells us how many indexes are available along each axis.

Let’s consider the same tensor dd as before:

dd[0] = [1, 2, 3] dd[1] = [4, 5, 6] dd[2] = [7, 8, 9]

To work with this tensor's shape, we’ll create a torch.Tensor object like so

t = torch.tensor(dd) ========> tensor([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

type(t) ========> torch.Tensor

t.shape ========> torch.Size([3, 3])

Note that, in PyTorch, the size and shape of a tensor are the same thing.

The shape of 3 x 3 tells us that each axis of this rank-2 tensor has a length of 3, which means that we have three indexes available along each axis.
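
The statements above can be run as a short script. It also checks that the size() method and the shape attribute agree, which is what "size and shape are the same thing" means in practice.

```python
import torch

dd = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
t = torch.tensor(dd)

print(type(t))               # the torch.Tensor class
print(t.shape)               # torch.Size([3, 3])
print(t.size())              # torch.Size([3, 3])
print(t.shape == t.size())   # True
```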

4. Reshaping a tensor

Reshaping changes the grouping of the elements but does not change the underlying elements themselves.

This torch.Tensor is a rank 2 tensor with a shape of [3,3] or 3 x 3.

t = torch.tensor(dd) ========> tensor([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

t.reshape(1,9) ========> tensor([[1, 2, 3, 4, 5, 6, 7, 8, 9]])

t.reshape(1,9).shape ========> torch.Size([1, 9])

Now, one thing to notice about reshaping is that the product of the component values in the shape must equal the total number of elements in the tensor. Here, 3 * 3 = 9 and 1 * 9 = 9.

Reshaping changes the shape but not the underlying data elements.
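
A minimal sketch of the product rule: every shape whose components multiply to 9 is accepted, while a shape with a different product is rejected. The particular shapes tried here are only examples.

```python
import torch

t = torch.tensor([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

# Each of these shapes has a product of 9, so the reshape succeeds.
valid_shapes = [(1, 9), (9, 1), (3, 3), (9,)]
reshaped = [t.reshape(shape) for shape in valid_shapes]
for r in reshaped:
    print(tuple(r.shape), r.numel())

# 2 * 5 = 10 does not match the 9 elements, so PyTorch raises an error.
failed = False
try:
    t.reshape(2, 5)
except RuntimeError as err:
    failed = True
    print("invalid reshape:", err)
```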

5. Shape of a CNN input

The shape of a CNN input typically has a length of four. This means that we have a rank-4 tensor with four axes. Each index in the tensor’s shape represents a specific axis, and the value at each index gives us the length of the corresponding axis.

5.1 Image height and width

The image height and width are represented on the last two axes.

5.2 Image color channels

The second axis represents the color channels. Typical values here are 3 for RGB images or 1 if we are working with grayscale images. This color channel interpretation only applies to the input tensor. As we will reveal in a moment, the interpretation of this axis changes after the tensor passes through a convolutional layer.

5.3 Image batches

This brings us to the first of the four axes, which represents the batch size. In neural networks, we usually work with batches of samples as opposed to single samples, so the length of this axis tells us how many samples are in our batch.

This allows us to see that an entire batch of images is represented using a single rank-4 tensor.

5.4 Conclusion

[Batch_size, Color_channels, Height, Width]

This gives us a single rank-4 tensor that will ultimately flow through our convolutional neural network.
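
As an illustration of this layout, the sketch below builds a placeholder batch and indexes into it axis by axis. The sizes (10 RGB images of 28 x 28) are assumptions chosen for the example, not values from the text.

```python
import torch

# [Batch_size, Color_channels, Height, Width]; sizes are hypothetical.
batch = torch.zeros(10, 3, 28, 28)

batch_size, channels, height, width = batch.shape
print(batch_size, channels, height, width)  # 10 3 28 28

first_image = batch[0]        # one image: [Color_channels, Height, Width]
first_channel = batch[0][0]   # one color channel of that image: [Height, Width]
pixel = batch[0][0][0][0]     # a single pixel value: four indexes in total

print(first_image.shape)      # torch.Size([3, 28, 28])
print(first_channel.shape)    # torch.Size([28, 28])
print(pixel.dim())            # 0
```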

6. Output channels and feature maps

6.1 Output channels

Suppose we have a tensor that contains data from a single 28 x 28 grayscale image. This gives us the following tensor shape: [1, 1, 28, 28].

Now suppose this image is passed to our CNN and passes through the first convolutional layer. When this happens, the shape of our tensor and the underlying data will be changed by the convolution operation.

The convolution changes the height and width dimensions as well as the number of channels. The number of output channels changes based on the number of filters being used in the convolutional layer.

Suppose we have three convolutional filters, and let's just see what happens to the channel axis.

Since we have three convolutional filters, the convolutional layer produces three output channels: each of the three filters convolves the original single input channel and produces one output channel.
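
The effect on the shape can be sketched with torch.nn.Conv2d. The text only fixes the input shape and the number of filters; the kernel size of 5 and the default stride and padding used here are assumptions made for illustration.

```python
import torch
import torch.nn as nn

image = torch.rand(1, 1, 28, 28)  # one 28 x 28 grayscale image

# Three filters over one input channel; kernel_size=5 is an assumed value.
conv = nn.Conv2d(in_channels=1, out_channels=3, kernel_size=5)

out = conv(image)
print(conv.weight.shape)  # torch.Size([3, 1, 5, 5]): three 5 x 5 filters
print(out.shape)          # torch.Size([1, 3, 24, 24])
```

With no padding and a stride of 1, each spatial dimension shrinks from 28 to 28 - 5 + 1 = 24, while the channel axis grows from 1 to 3.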

6.2 Feature maps

Feature maps are the output channels created from the convolutions.

With the output channels, we no longer have color channels, but modified channels that we call feature maps. These so-called feature maps are the outputs of the convolutions that take place using the input color channels and the convolutional filters.

The word "feature" is used because the outputs represent particular features of the image, such as edges. These mappings emerge as the network learns during the training process, and they become more complex as we move deeper into the network.
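
To give a feel for what an edge feature map looks like, here is a small sketch that applies a hand-written filter with torch.nn.functional.conv2d. In a real network the filter values are learned during training; the tiny image and the [-1, 1] kernel below are assumptions made purely for illustration.

```python
import torch
import torch.nn.functional as F

# A 4 x 6 image whose left half is dark (0) and right half is bright (1).
image = torch.zeros(1, 1, 4, 6)
image[..., 3:] = 1.0

# Hand-set filter of shape [out_channels, in_channels, height, width] = [1, 1, 1, 2].
# It responds where brightness increases from left to right.
kernel = torch.tensor([[[[-1.0, 1.0]]]])

feature_map = F.conv2d(image, kernel)
print(feature_map.shape)          # torch.Size([1, 1, 4, 5])
print(feature_map[0, 0].tolist()) # each row is [0.0, 0.0, 1.0, 0.0, 0.0]
```

The single nonzero column marks the vertical edge between the dark and bright halves, which is the kind of feature the output channel represents.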
