Excerpted from "Make Your Own Neural Network".

A summary of how to implement a simple artificial neural network of your own in Python.

Neural Network with Python

1.The Skeleton Code

Three functions:

● initialisation: to set the number of input, hidden and output nodes

● train: refine the weights after being given a training set example to learn from

● query: give an answer from the output nodes after being given an input

# neural network class definition
class neuralNetwork:

    # initialise the neural network
    def __init__(self):
        pass

    # train the neural network
    def train(self):
        pass

    # query the neural network
    def query(self):
        pass

2.Initialising the Network

We need to set the number of input, hidden and output layer nodes. That defines the shape and size of the neural network.

Don’t forget the learning rate.

# initialise the neural network
def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
    # set number of nodes in each input, hidden, output layer
    self.inodes = inputnodes
    self.hnodes = hiddennodes
    self.onodes = outputnodes

    # learning rate
    self.lr = learningrate
    pass

For example:

# number of input, hidden and output nodes
input_nodes = 3
hidden_nodes = 3
output_nodes = 3

# learning rate is 0.3
learning_rate = 0.3

# create instance of neural network
n = neuralNetwork(input_nodes, hidden_nodes, output_nodes, learning_rate)

Weights - The Heart of the Network

So the next step is to create the network of nodes and links.

The most important part of the network is the link weights.

They’re used to calculate the signal being fed forward and the error as it’s propagated backwards, and it is the link weights themselves that are refined in an attempt to improve the network.

● A matrix for the weights for links between the input and hidden layers, W_input_hidden, of size (hidden_nodes by input_nodes).

● And another matrix for the links between the hidden and output layers, W_hidden_output, of size (output_nodes by hidden_nodes).

Note that the first matrix is of size (hidden_nodes by input_nodes) and not the other way around (input_nodes by hidden_nodes).
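The orientation matters because of how the matrix product lines up. The following is a minimal sketch with made-up sizes (4 input nodes, 2 hidden nodes) and all-ones values; none of these numbers come from the book.

```python
import numpy

# illustrative sizes only (assumed for this sketch)
input_nodes, hidden_nodes = 4, 2

# weights laid out as (hidden_nodes by input_nodes)
w_input_hidden = numpy.ones((hidden_nodes, input_nodes))

# inputs as a column of shape (input_nodes, 1)
inputs = numpy.ones((input_nodes, 1))

# (2, 4) dot (4, 1) gives one combined signal per hidden node
x_hidden = numpy.dot(w_input_hidden, inputs)
print(x_hidden.shape)  # (2, 1)

# the other orientation, (input_nodes by hidden_nodes), does not line up
try:
    numpy.dot(numpy.ones((input_nodes, hidden_nodes)), inputs)
    wrong_way_works = True
except ValueError:
    wrong_way_works = False
print(wrong_way_works)  # False
```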

The initial values of the link weights should be small and random.

The following numpy function generates an array of values selected randomly between 0 and 1, where the size is (rows by columns).

numpy.random.rand(rows, columns)

Each value in the array is random and between 0 and 1.

The weights can legitimately be negative, not just positive.

The range could be between -1.0 and +1.0. The code below simply subtracts 0.5 from each value, which gives a range between -0.5 and +0.5.

These weights are an intrinsic part of a neural network, and live with the neural network for all its life.

They need to be created as part of the initialisation too, and be accessible from other functions like the training and the querying.

# link weight matrices, wih and who

# weights inside the arrays are w_i_j, where link is from node i to node j in the next layer

# w11 w21

# w12 w22 etc

self.wih = (numpy.random.rand(self.hnodes, self.inodes) - 0.5)

self.who = (numpy.random.rand(self.onodes, self.hnodes) - 0.5)
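As a quick check of what the subtraction does, here is a small sketch outside the class. The 3 by 3 size and the fixed seed are assumptions made only to keep the example repeatable.

```python
import numpy

numpy.random.seed(0)  # fixed seed, only so the sketch is repeatable

rows, columns = 3, 3  # illustrative size
raw = numpy.random.rand(rows, columns)  # values in [0, 1)
shifted = raw - 0.5                     # values in [-0.5, 0.5)

print(shifted.shape)                               # (3, 3)
print(shifted.min() >= -0.5, shifted.max() < 0.5)  # True True
```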

Optional: More Sophisticated Weights

This bit is optional, as it is just a simple but popular refinement to initialising weights.

A slightly more sophisticated approach samples the initial weights from a normal probability distribution centred around zero, with a standard deviation related to the number of incoming links into a node: 1/√(number of incoming links).

The numpy.random.normal() function helps us sample a normal distribution.

The parameters are the centre of the distribution, the standard deviation and the size of a numpy array if we want a matrix of random numbers instead of just a single number.

self.wih = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))

self.who = numpy.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))

You can see we’ve set the centre of the normal distribution to 0.0.

The standard deviation is written in Python as pow(self.hnodes, -0.5), which simply raises the number of nodes in the next layer to the power of -0.5. The last parameter is the shape of the numpy array we want.
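To see that the sampled weights really have the intended spread, the sketch below draws a larger matrix than the book's example. The sizes (100 input nodes, 200 hidden nodes) and the seed are assumptions chosen so that the sample statistics are stable.

```python
import numpy

numpy.random.seed(0)  # fixed seed, only so the sketch is repeatable

inodes, hnodes = 100, 200  # illustrative sizes

# standard deviation as used in the text: number of nodes to the power -0.5
std = pow(hnodes, -0.5)
wih = numpy.random.normal(0.0, std, (hnodes, inodes))

print(wih.shape)      # (200, 100)
print(round(std, 4))  # 0.0707

# sample mean and standard deviation should sit close to 0.0 and std
print(wih.mean(), wih.std())
```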

3.Querying the Network

The query() function takes the input to a neural network and returns the network’s output.

We need to pass the input signals from the input layer of nodes, through the hidden layer and out of the final output layer.

We use the link weights to moderate the signals as they feed into any given hidden or output node, and we also use the sigmoid activation function to squish the signal coming out of those nodes.

If we had lots of nodes, writing out the calculation for each one separately would be tedious, so we write all these instructions in a simple, concise matrix form.

The following shows how the matrix of weights for the link between the input and hidden layers can be combined with the matrix of inputs to give the signals into the hidden layer nodes:

$$X_{\text{hidden}} = W_{\text{input\_hidden}} \cdot I$$

The following applies the numpy library’s dot product function for matrices to the link weights W_input_hidden and the inputs I.

hidden_inputs = numpy.dot(self.wih, inputs)

We don’t have to rewrite it either if next time we choose to use a different number of nodes for the input or hidden layers. It just works!

To get the signals emerging from the hidden nodes, we simply apply the sigmoid squashing function to each of these signals:

$$O_{\text{hidden}} = \text{sigmoid}(X_{\text{hidden}})$$

In the scipy library, the sigmoid function is called expit() and lives in scipy.special.

# scipy.special for the sigmoid function expit()

import scipy.special

Because we might want to experiment and tweak, or even completely change, the activation function, it makes sense to define it only once inside the neural network object when it is first initialised.

The following defines the activation function we want to use inside the neural network’s initialisation section.

# activation function is the sigmoid function

self.activation_function = lambda x: scipy.special.expit(x)

lambda (anonymous):

All we’ve done here is create a function like any other, but using a shorter way of writing it out. Instead of the usual def definition, we use lambda to create a function there and then, quickly and easily.

The function here takes x and returns scipy.special.expit(x), which is the sigmoid function. Functions created with lambda are nameless, but here we’ve assigned it to the name self.activation_function. All this means is that whenever someone needs to use the activation function, all they need to do is call self.activation_function().
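The equivalence between lambda and def can be sketched as follows. To keep the example dependent on numpy only, a hand-written sigmoid, 1 / (1 + e^-x), stands in for scipy.special.expit; the names activation_lambda and activation_def are made up for this illustration.

```python
import numpy

# lambda form: hand-written sigmoid standing in for scipy.special.expit
activation_lambda = lambda x: 1.0 / (1.0 + numpy.exp(-x))

# the same function written with an ordinary def
def activation_def(x):
    return 1.0 / (1.0 + numpy.exp(-x))

signals = numpy.array([-2.0, 0.0, 2.0])

# both forms are called the same way and give the same values
print(activation_lambda(signals))
print(numpy.allclose(activation_lambda(signals), activation_def(signals)))  # True
```

Either form can be stored on the object and swapped later, which is the reason for defining the activation function once at initialisation.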

Next, we want to apply the activation function to the combined and moderated signals into the hidden nodes.

# calculate the signals emerging from hidden layer

hidden_outputs = self.activation_function(hidden_inputs)

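That is, the signals emerging from the hidden layer nodes are in the matrix called hidden_outputs.

The final output layer is handled in exactly the same way, so the complete feed-forward calculation is: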

# calculate signals into hidden layer

hidden_inputs = numpy.dot(self.wih, inputs)

# calculate the signals emerging from hidden layer

hidden_outputs = self.activation_function(hidden_inputs)

# calculate signals into final output layer

final_inputs = numpy.dot(self.who, hidden_outputs)

# calculate the signals emerging from final output layer

final_outputs = self.activation_function(final_inputs)
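The four steps above can be traced by hand on a tiny network. The sketch below assumes 3 input, 2 hidden and 1 output node with made-up weights chosen so the arithmetic is easy to follow, and uses a numpy sigmoid as a stand-in for scipy.special.expit.

```python
import numpy

def sigmoid(x):
    # stand-in for scipy.special.expit
    return 1.0 / (1.0 + numpy.exp(-x))

# made-up weights: wih is (2 hidden by 3 input), who is (1 output by 2 hidden)
wih = numpy.array([[0.5, -0.5, 0.0],
                   [0.0,  0.0, 0.0]])
who = numpy.array([[1.0, 1.0]])

# inputs as a column vector
inputs = numpy.array([[1.0], [1.0], [1.0]])

hidden_inputs = numpy.dot(wih, inputs)         # both hidden nodes receive 0.0
hidden_outputs = sigmoid(hidden_inputs)        # sigmoid(0) = 0.5 for both
final_inputs = numpy.dot(who, hidden_outputs)  # 0.5 + 0.5 = 1.0
final_outputs = sigmoid(final_inputs)          # sigmoid(1), roughly 0.731

print(final_outputs)
```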

4.Training the Network

There are two phases to training: the first is calculating the output just as query() does it, and the second is backpropagating the errors to inform how the link weights are refined.

● The first part is working out the output for a given training example. That is no different to what we just did with the query() function.

● The second part is taking this calculated output, comparing it with the desired output, and using the difference to guide the updating of the network weights.

The first part:

# train the neural network
def train(self, inputs_list, targets_list):
    # convert inputs list to 2d array
    inputs = numpy.array(inputs_list, ndmin=2).T
    targets = numpy.array(targets_list, ndmin=2).T

    # calculate signals into hidden layer
    hidden_inputs = numpy.dot(self.wih, inputs)
    # calculate the signals emerging from hidden layer
    hidden_outputs = self.activation_function(hidden_inputs)

    # calculate signals into final output layer
    final_inputs = numpy.dot(self.who, hidden_outputs)
    # calculate the signals emerging from final output layer
    final_outputs = self.activation_function(final_inputs)

    pass

This code is almost exactly the same as that in the query() function, because we’re feeding forward the signal from the input layer to the final output layer in exactly the same way.

The only difference is that we have an additional parameter, targets_list, in the function signature, because you can’t train the network without the training examples, which include the desired or target answer.

The code also turns the targets_list into a numpy array, just as the inputs_list is turned into a numpy array.

targets = numpy.array(targets_list, ndmin=2).T
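What ndmin=2 and .T do to a plain Python list can be seen from the shapes alone. The list values here are arbitrary and only serve as an illustration.

```python
import numpy

inputs_list = [1.0, 0.5, -1.5]  # arbitrary example values

as_array = numpy.array(inputs_list)           # 1-d array
as_row = numpy.array(inputs_list, ndmin=2)    # forced to 2-d: a single row
inputs = numpy.array(inputs_list, ndmin=2).T  # transposed into a column

print(as_array.shape, as_row.shape, inputs.shape)  # (3,) (1, 3) (3, 1)
```

The column shape is what lets numpy.dot(self.wih, inputs) line up with a weight matrix of size (hidden_nodes by input_nodes).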

First we need to calculate the error, which is the difference between the desired target output provided by the training example and the actual calculated output. That’s the difference between the matrices (targets - final_outputs), done element by element.

# error is the (target - actual)

output_errors = targets - final_outputs

We can calculate the backpropagated errors for the hidden layer nodes. Remember how we split the errors according to the connected weights, and recombine them for each hidden layer node. We worked out the matrix form of this calculation as

$$\text{errors}_{\text{hidden}} = \text{weights}^{T}_{\text{hidden\_output}} \cdot \text{errors}_{\text{output}}$$

# hidden layer error is the output_errors, split by weights, recombined at hidden nodes

hidden_errors = numpy.dot(self.who.T, output_errors)
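A small worked example may make the split-and-recombine step concrete. The weights, targets and outputs below are made up (2 output nodes, 3 hidden nodes) so that the result can be checked by hand.

```python
import numpy

# made-up values for 2 output nodes
targets = numpy.array([[1.0], [0.0]])
final_outputs = numpy.array([[0.5], [0.5]])

# error is the (target - actual), element by element
output_errors = targets - final_outputs  # [[0.5], [-0.5]]

# made-up weights of shape (2 output by 3 hidden)
who = numpy.array([[2.0, 1.0, 0.0],
                   [1.0, 1.0, 4.0]])

# each hidden node collects the output errors through its own links:
#   node 1: 2*0.5 + 1*(-0.5) =  0.5
#   node 2: 1*0.5 + 1*(-0.5) =  0.0
#   node 3: 0*0.5 + 4*(-0.5) = -2.0
hidden_errors = numpy.dot(who.T, output_errors)
print(hidden_errors)
```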

So we have what we need to refine the weights at each layer. For the weights between the hidden and final layers, we use the output_errors. For the weights between the input and hidden layers, we use these hidden_errors we just calculated.

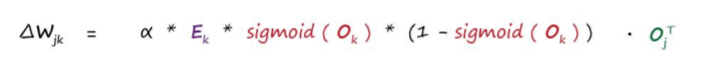

We previously worked out the expression for updating the weight for the link between a node

j

and a node

k

in the next layer in matrix form:

The alpha is the learning rate.

The sigmoid is the squashing activation function.

Remember that the * multiplication is the normal element by element multiplication, and the ∙ dot is the matrix dot product. That last bit, the matrix of outputs from the previous layer, is transposed. In effect this means the column of outputs becomes a row of outputs.
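For reference, the update can be written out in the notation of the feed-forward step. This is a reading of the description above and of the update code in this section, not a reproduction of the original figure; the symbols E_k (error at layer k), O_k (output of layer k) and O_j (output of the previous layer) are assumed names.

```latex
\Delta W_{jk} = \alpha \, \bigl( E_k * O_k * (1 - O_k) \bigr) \cdot O_j^{T},
\qquad O_k = \text{sigmoid}(X_k)
```

Here the bracketed term is computed element by element and has one row per node in layer k, and the dot product with the transposed outputs of layer j gives a matrix with the same shape as the weights being updated.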

# update the weights for the links between the hidden and output layers

self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs))
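To see how the shapes and the two kinds of multiplication fit together, here is a sketch of a single update with made-up numbers (2 output nodes, 3 hidden nodes, learning rate 0.5, weights starting at zero). The intermediate name delta is introduced only for this illustration.

```python
import numpy

lr = 0.5  # illustrative learning rate

# made-up column vectors
output_errors = numpy.array([[0.4], [-0.2]])         # shape (2, 1)
final_outputs = numpy.array([[0.5], [0.5]])          # shape (2, 1)
hidden_outputs = numpy.array([[1.0], [0.5], [0.0]])  # shape (3, 1)

who = numpy.zeros((2, 3))  # weights start at zero to make the change visible

# element-by-element part: error * output * (1 - output)
delta = output_errors * final_outputs * (1.0 - final_outputs)  # [[0.1], [-0.05]]

# matrix dot product with the transposed (row) hidden outputs: (2, 1) . (1, 3) -> (2, 3)
who += lr * numpy.dot(delta, numpy.transpose(hidden_outputs))
print(who)
```

The weights between the input and hidden layers are updated with the same pattern, using hidden_errors, hidden_outputs and the transposed inputs.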

5.The Complete Neural Network Code

# scipy.special for the sigmoid function expit()
import scipy.special
import numpy


# neural network class definition
class neuralNetwork:

    # initialise the neural network
    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        # set number of nodes in each input, hidden, output layer
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes

        # link weight matrices, wih and who
        # weights inside the arrays are w_i_j, where link is from node i to node j in the next layer
        # w11 w21
        # w12 w22 etc
        self.wih = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))
        self.who = numpy.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))

        # learning rate
        self.lr = learningrate

        # activation function is the sigmoid function
        self.activation_function = lambda x: scipy.special.expit(x)

        pass

    # train the neural network
    def train(self, inputs_list, targets_list):
        # convert inputs list to 2d array
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T

        # calculate signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        # calculate the signals emerging from hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)

        # calculate signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        # calculate the signals emerging from final output layer
        final_outputs = self.activation_function(final_inputs)

        # output layer error is the (target - actual)
        output_errors = targets - final_outputs
        # hidden layer error is the output_errors, split by weights, recombined at hidden nodes
        hidden_errors = numpy.dot(self.who.T, output_errors)

        # update the weights for the links between the hidden and output layers
        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)), numpy.transpose(hidden_outputs))

        # update the weights for the links between the input and hidden layers
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)), numpy.transpose(inputs))

        pass

    # query the neural network
    def query(self, inputs_list):
        # convert inputs list to 2d array
        inputs = numpy.array(inputs_list, ndmin=2).T

        # calculate signals into hidden layer
        hidden_inputs = numpy.dot(self.wih, inputs)
        # calculate the signals emerging from hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)

        # calculate signals into final output layer
        final_inputs = numpy.dot(self.who, hidden_outputs)
        # calculate the signals emerging from final output layer
        final_outputs = self.activation_function(final_inputs)

        return final_outputs
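To check that training actually moves the outputs towards the targets, the sketch below uses a condensed, numpy-only variant of the class above. The name TinyNetwork, the hand-written sigmoid standing in for scipy.special.expit, the single training example and the 1000 repetitions are all assumptions made for this illustration.

```python
import numpy

def sigmoid(x):
    # stand-in for scipy.special.expit
    return 1.0 / (1.0 + numpy.exp(-x))

class TinyNetwork:
    # condensed, hypothetical variant of neuralNetwork for this sketch
    def __init__(self, inodes, hnodes, onodes, lr):
        self.lr = lr
        self.wih = numpy.random.normal(0.0, pow(hnodes, -0.5), (hnodes, inodes))
        self.who = numpy.random.normal(0.0, pow(onodes, -0.5), (onodes, hnodes))

    def query(self, inputs_list):
        inputs = numpy.array(inputs_list, ndmin=2).T
        hidden_outputs = sigmoid(numpy.dot(self.wih, inputs))
        return sigmoid(numpy.dot(self.who, hidden_outputs))

    def train(self, inputs_list, targets_list):
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T
        # feed forward, exactly as in query()
        hidden_outputs = sigmoid(numpy.dot(self.wih, inputs))
        final_outputs = sigmoid(numpy.dot(self.who, hidden_outputs))
        # errors at the output layer, then backpropagated to the hidden layer
        output_errors = targets - final_outputs
        hidden_errors = numpy.dot(self.who.T, output_errors)
        # weight updates for both layers
        self.who += self.lr * numpy.dot(
            output_errors * final_outputs * (1.0 - final_outputs), hidden_outputs.T)
        self.wih += self.lr * numpy.dot(
            hidden_errors * hidden_outputs * (1.0 - hidden_outputs), inputs.T)

numpy.random.seed(1)  # fixed seed, only so the sketch is repeatable
n = TinyNetwork(3, 3, 3, 0.3)

example_inputs = [1.0, 0.5, -1.5]   # made-up training example
example_targets = [0.9, 0.1, 0.5]

def squared_error(net):
    # sum of squared differences between targets and current outputs
    diff = numpy.array(example_targets, ndmin=2).T - net.query(example_inputs)
    return float(numpy.sum(diff ** 2))

error_before = squared_error(n)
for _ in range(1000):
    n.train(example_inputs, example_targets)
error_after = squared_error(n)

print(error_before, error_after)  # the second value should be much smaller
```

Repeating one example is enough to show the update rule working, but a real training run would loop over many different examples.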
