09 - Kubernetes static token file authentication, X.509 certificate authentication, Kubeconfig, RBAC, and Ingress

1. Static token file authentication

1.1 Basic configuration of static token authentication

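◼ Token information is stored in a plain-text file

◆ The file is in CSV format; each line defines one user and consists of four fields: token, user name, user ID, and groups. The groups field is optional

◆ Format: token,user,uid,"group1,group2,group3"

For example: 3e6745.42c03381b4162e8e,jack,998,kubeadmin

◼ kube-apiserver loads the file at startup through the --token-auth-file option

◼ Changes made to the file after loading take effect only when kube-apiserver is restarted, so the tokens remain valid indefinitely

◼ Clients authenticate by sending the token in the HTTP request header "Authorization: Bearer TOKEN"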
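The record format and the request header described above can be sketched as follows. This is only an illustration: the user jack, the UID 998, and the two groups are sample values, and the "6 hex characters, dot, 16 hex characters" token shape simply mirrors the tokens used in this section.

```shell
# Generate a token in the same shape as the tokens used in this section.
token="$(openssl rand -hex 3).$(openssl rand -hex 8)"

# One CSV record: token,user,uid,"group1,group2" (sample user, UID and groups).
record="${token},jack,998,\"kubeadmin,kubeusers\""

# The header a client sends so that kube-apiserver can look the token up.
auth_header="Authorization: Bearer ${token}"

printf '%s\n' "$record"
printf '%s\n' "$auth_header"
```

Note the colon after "Authorization"; without it the header is malformed and the request is treated as unauthenticated.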

1.2 Static token authentication configuration example

1.2.1 Generate tokens

root@master1:~# echo "$(openssl rand -hex 3).$(openssl rand -hex 8)"

cc0578.688a9946f8c14771

root@master1:~# echo "$(openssl rand -hex 3).$(openssl rand -hex 8)"

19eb3a.c1dc86da3fc6a458

root@master1:~# echo "$(openssl rand -hex 3).$(openssl rand -hex 8)"

377c53.9c0694de57246f94

1.2.2 Create the static token file

root@master1:~# cd /etc/kubernetes/

root@master1:/etc/kubernetes# mkdir auth

root@master1:/etc/kubernetes/auth# cat /etc/kubernetes/auth/token.csv

cc0578.688a9946f8c14771,tom01,1001,kubeusers

19eb3a.c1dc86da3fc6a458,tom02,1002,kubeusers

377c53.9c0694de57246f94,tom03,999,kubeadmin
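Before pointing kube-apiserver at the file, it can help to sanity-check it. The sketch below works on a copy of the three records in a temporary directory (an assumption made so that it runs anywhere) and checks that every line has at least the three mandatory fields and that no token is repeated.

```shell
workdir="$(mktemp -d)"

# Copy of the records shown above, written to a throwaway location.
cat > "$workdir/token.csv" <<'EOF'
cc0578.688a9946f8c14771,tom01,1001,kubeusers
19eb3a.c1dc86da3fc6a458,tom02,1002,kubeusers
377c53.9c0694de57246f94,tom03,999,kubeadmin
EOF
chmod 600 "$workdir/token.csv"   # tokens are credentials, so restrict access

# Line numbers of records with fewer than three comma-separated fields.
bad_lines="$(awk -F, 'NF < 3 { print NR }' "$workdir/token.csv")"

# Tokens that appear more than once.
dup_tokens="$(cut -d, -f1 "$workdir/token.csv" | sort | uniq -d)"

if [ -z "$bad_lines" ] && [ -z "$dup_tokens" ]; then
  echo "token.csv looks well-formed"
else
  echo "problems found: bad lines [$bad_lines], duplicate tokens [$dup_tokens]"
fi
```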

1.2.3 Configure kube-apiserver to load the static token file and enable this authentication method

- Three changes are needed:

- --token-auth-file=/etc/kubernetes/auth/token.csv

- mountPath: /etc/kubernetes/auth
  name: static-auth-token
  readOnly: true

- hostPath:
    path: /etc/kubernetes/auth
    type: DirectoryOrCreate
  name: static-auth-token

----------

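# Back up kube-apiserver.yaml. Keep the copy outside /etc/kubernetes/manifests, because the kubelet treats every file in that directory as a static Pod manifest

cp /etc/kubernetes/manifests/kube-apiserver.yaml /etc/kubernetes/kube-apiserver.yaml.back

# Add the three changes listed above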

cat > /etc/kubernetes/manifests/kube-apiserver.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 10.0.0.70:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=10.0.0.70
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --token-auth-file=/etc/kubernetes/auth/token.csv
    - --enable-admission-plugins=NodeRestriction
    - --enable-bootstrap-token-auth=true
    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
    - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
    - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
    - --etcd-servers=https://127.0.0.1:2379
    - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
    - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
    - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
    - --requestheader-allowed-names=front-proxy-client
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-username-headers=X-Remote-User
    - --secure-port=6443
    - --service-account-issuer=https://kubernetes.default.svc.cluster.local
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
    - --service-cluster-ip-range=10.96.0.0/12
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.25.0
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 10.0.0.70
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    name: kube-apiserver
    readinessProbe:
      failureThreshold: 3
      httpGet:
        host: 10.0.0.70
        path: /readyz
        port: 6443
        scheme: HTTPS
      periodSeconds: 1
      timeoutSeconds: 15
    resources:
      requests:
        cpu: 250m
    startupProbe:
      failureThreshold: 24
      httpGet:
        host: 10.0.0.70
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    volumeMounts:
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
    - mountPath: /etc/ca-certificates
      name: etc-ca-certificates
      readOnly: true
    - mountPath: /etc/pki
      name: etc-pki
      readOnly: true
    - mountPath: /etc/kubernetes/pki
      name: k8s-certs
      readOnly: true
    - mountPath: /usr/local/share/ca-certificates
      name: usr-local-share-ca-certificates
      readOnly: true
    - mountPath: /usr/share/ca-certificates
      name: usr-share-ca-certificates
      readOnly: true
    - mountPath: /etc/kubernetes/auth
      name: static-auth-token
      readOnly: true
  hostNetwork: true
  priorityClassName: system-node-critical
  securityContext:
    seccompProfile:
      type: RuntimeDefault
  volumes:
  - hostPath:
      path: /etc/ssl/certs
      type: DirectoryOrCreate
    name: ca-certs
  - hostPath:
      path: /etc/ca-certificates
      type: DirectoryOrCreate
    name: etc-ca-certificates
  - hostPath:
      path: /etc/pki
      type: DirectoryOrCreate
    name: etc-pki
  - hostPath:
      path: /etc/kubernetes/pki
      type: DirectoryOrCreate
    name: k8s-certs
  - hostPath:
      path: /usr/local/share/ca-certificates
      type: DirectoryOrCreate
    name: usr-local-share-ca-certificates
  - hostPath:
      path: /usr/share/ca-certificates
      type: DirectoryOrCreate
    name: usr-share-ca-certificates
  - hostPath:
      path: /etc/kubernetes/auth
      type: DirectoryOrCreate
    name: static-auth-token
status: {}
EOF
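Since a mistake in this manifest can keep the API server from starting, a quick check that all three changes are present may be worthwhile. The following is a minimal sketch; check_manifest is a hypothetical helper name, and the sample file is a throwaway stand-in for the real manifest.

```shell
# check_manifest FILE: report whether the three static-token changes are present.
check_manifest() {
  manifest="$1"
  missing=0
  for needle in \
    '--token-auth-file=/etc/kubernetes/auth/token.csv' \
    'mountPath: /etc/kubernetes/auth' \
    'path: /etc/kubernetes/auth'
  do
    # -F matches the text literally; -- stops option parsing at the leading dashes.
    if grep -qF -- "$needle" "$manifest"; then
      echo "found:   $needle"
    else
      echo "MISSING: $needle"
      missing=1
    fi
  done
  return "$missing"
}

# Demo on a small sample file containing only the three relevant lines.
workdir="$(mktemp -d)"
sample="$workdir/kube-apiserver.yaml"
cat > "$sample" <<'EOF'
    - --token-auth-file=/etc/kubernetes/auth/token.csv
    - mountPath: /etc/kubernetes/auth
      path: /etc/kubernetes/auth
EOF
check_manifest "$sample"
```

On a control-plane node the same function could be pointed at /etc/kubernetes/manifests/kube-apiserver.yaml.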

1.2.4 Test

# Command: curl -k -H "Authorization: Bearer TOKEN" https://API_SERVER:PORT/api/v1/namespaces/default/pods/

root@master1:/etc/kubernetes/auth# cat token.csv

cc0578.688a9946f8c14771,tom01,1001,kubeusers

19eb3a.c1dc86da3fc6a458,tom02,1002,kubeusers

377c53.9c0694de57246f94,tom03,999,kubeadmin

# From another node, using one of the tokens in token.csv

curl https://10.0.0.70:6443/api/v1/namespaces/default -H "Authorization: Bearer cc0578.688a9946f8c14771" -k

2. X.509 client certificate authentication

2.1 Certificate authentication configuration example

2.1.1 Generate the private key

cd /etc/kubernetes/pki

root@master1:/etc/kubernetes/pki# (umask 077; openssl genrsa -out mason.key 4096)

Generating RSA private key, 4096 bit long modulus (2 primes)

...........................................................................................................................++++

.........................................................................................................................................++++

e is 65537 (0x010001)

2.1.2 Create the certificate signing request

root@master1:/etc/kubernetes/pki# openssl req -new -key mason.key -out mason.csr -subj "/CN=mason/O=kubeadmin"

root@master1:/etc/kubernetes/pki# ls -rlt mason*

-rw------- 1 root root 3243 Feb 11 20:58 mason.key

-rw-r--r-- 1 root root 1606 Feb 11 20:59 mason.csr

2.1.3 Sign the certificate with the Kubernetes CA

root@master1:/etc/kubernetes/pki# openssl x509 -req -days 365 -CA ca.crt -CAkey ca.key -CAcreateserial -in mason.csr -out mason.crt

Signature ok

subject=CN = mason, O = kubeadmin

Getting CA Private Key

root@master1:/etc/kubernetes/pki# chmod +x mason.crt

root@master1:/etc/kubernetes/pki# chmod +x mason.key

root@master1:/etc/kubernetes/pki# ls -lrt mason*

-rwx--x--x 1 root root 3243 Feb 11 20:58 mason.key

-rw-r--r-- 1 root root 1606 Feb 11 20:59 mason.csr

-rwxr-xr-x 1 root root 1363 Feb 11 21:01 mason.crt

# Copy the certificate and key to another node that already has ca.crt

scp -p mason.crt mason.key 10.0.0.72:/etc/kubernetes/pki/
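Kubernetes takes the user name from the certificate's CN and the group from its O field, so it is worth inspecting a certificate before handing it out. The sketch below repeats the key, CSR, and signing steps against a throwaway CA in a temporary directory (an assumption, so that it does not touch /etc/kubernetes/pki), then prints the subject and verifies the chain.

```shell
workdir="$(mktemp -d)"
cd "$workdir"

# Throwaway CA standing in for the cluster's ca.crt/ca.key (demo assumption).
openssl req -x509 -newkey rsa:2048 -nodes -keyout ca.key -out ca.crt \
  -subj "/CN=demo-ca" -days 1 2>/dev/null

# Client key and CSR: CN becomes the user name, O becomes a group.
openssl genrsa -out mason.key 2048 2>/dev/null
openssl req -new -key mason.key -out mason.csr -subj "/CN=mason/O=kubeadmin"

# Sign the CSR with the CA.
openssl x509 -req -days 1 -CA ca.crt -CAkey ca.key -CAcreateserial \
  -in mason.csr -out mason.crt 2>/dev/null

# Inspect the identity and expiry date, then verify the chain against the CA.
subject="$(openssl x509 -in mason.crt -noout -subject)"
echo "$subject"
openssl x509 -in mason.crt -noout -enddate
verify_result="$(openssl verify -CAfile ca.crt mason.crt)"
echo "$verify_result"
```

The same two inspection commands can be run against the real mason.crt and the cluster's ca.crt.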

2.1.4 Verify from the client

# Three ways to verify from the client:

kubectl -s https://10.0.0.70:6443 --client-certificate=./mason.crt --client-key=./mason.key --insecure-skip-tls-verify=true get pods

kubectl -s https://10.0.0.70:6443 --client-certificate=./mason.crt --client-key=./mason.key --certificate-authority=./ca.crt get pods

curl --cert mason.crt --key mason.key --cacert ca.crt https://10.0.0.70:6443/api/v1/namespaces/default/pods

# On node2

root@node2:/etc/kubernetes/pki# ls -lrt

total 12

-rw-r--r-- 1 root root 1099 Feb 1 16:43 ca.crt

-rwx--x--x 1 root root 3243 Feb 11 20:58 mason.key

-rwxr-xr-x 1 root root 1363 Feb 11 21:01 mason.crt

# Command 1

root@node2:/etc/kubernetes/pki# kubectl -s https://10.0.0.70:6443 --client-certificate=./mason.crt --client-key=./mason.key --insecure-skip-tls-verify=true get pods

Error from server (Forbidden): pods is forbidden: User "mason" cannot list resource "pods" in API group "" in the namespace "default"

# Command 2

root@node2:/etc/kubernetes/pki# kubectl -s https://10.0.0.70:6443 --client-certificate=./mason.crt --client-key=./mason.key --certificate-authority=./ca.crt get pods

Error from server (Forbidden): pods is forbidden: User "mason" cannot list resource "pods" in API group "" in the namespace "default"

# Command 3

root@node2:/etc/kubernetes/pki# curl --cert mason.crt --key mason.key --cacert ca.crt https://10.0.0.70:6443/api/v1/namespaces/default/pods

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "pods is forbidden: User \"mason\" cannot list resource \"pods\" in API group \"\" in the namespace \"default\"",

"reason": "Forbidden",

"details": {

"kind": "pods"

},

"code": 403

}

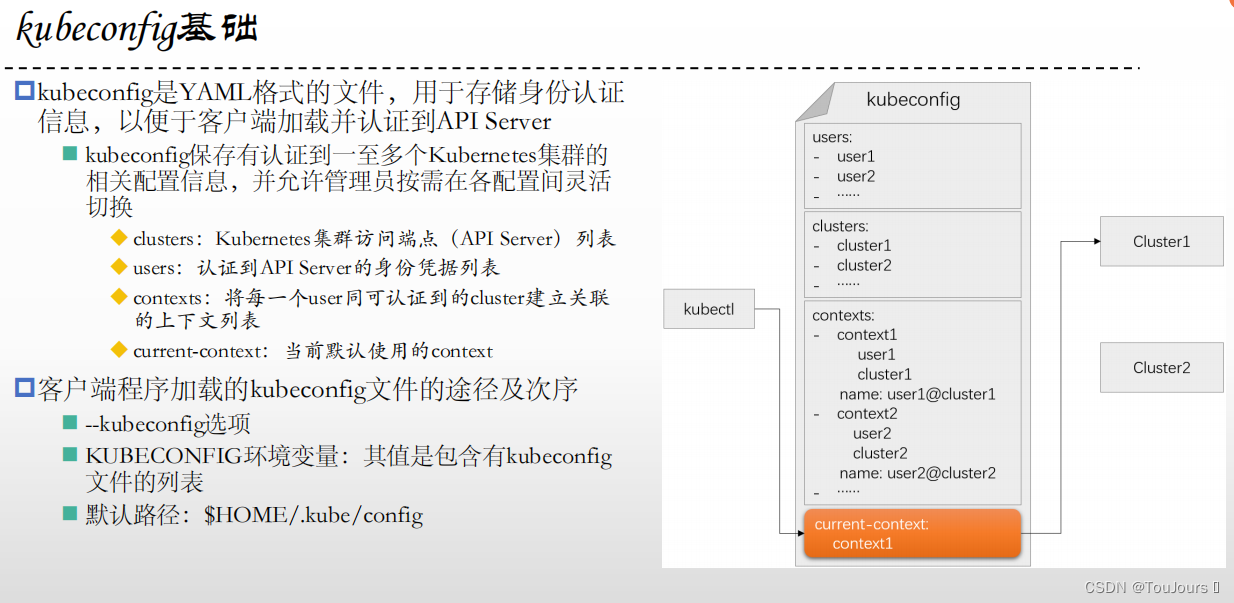

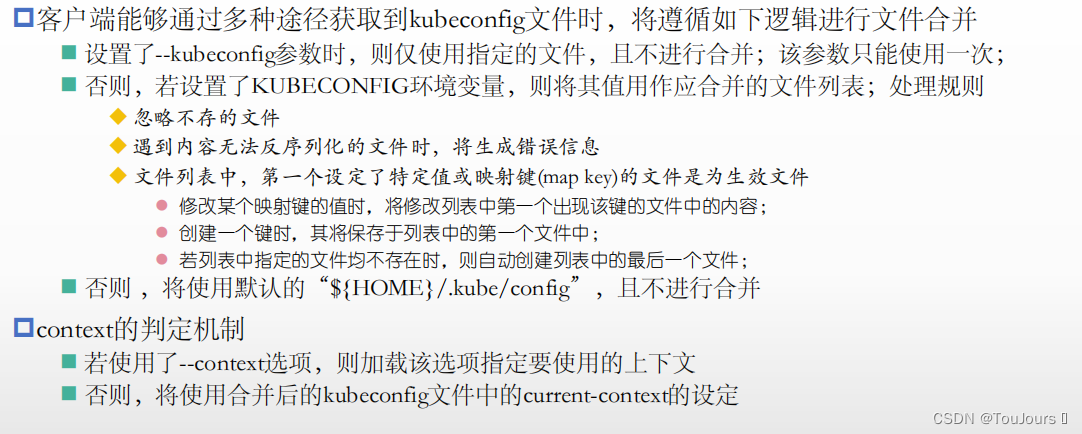

3. Kubeconfig

3.1 Kubeconfig basics

3.2 Setting up a kubeconfig file

3.3 Create a custom kubeconfig file for a user authenticated by static token

cd /etc/kubernetes/pki

# a. Define the cluster

kubectl config set-cluster mykube --embed-certs=true --certificate-authority=./ca.crt --server="https://10.0.0.70:6443" --kubeconfig=$HOME/.kube/mykube.conf

kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

----------------------------

root@master1:/etc/kubernetes/pki# kubectl config set-cluster mykube --embed-certs=true --certificate-authority=./ca.crt --server="https://10.0.0.70:6443" --kubeconfig=$HOME/.kube/mykube.conf

Cluster "mykube" set.

root@master1:/etc/kubernetes/pki# kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://10.0.0.70:6443

name: mykube

contexts: null

current-context: ""

kind: Config

preferences: {}

users: null

root@master1:/etc/kubernetes/pki#

----------------------------

# b. Define the user

kubectl config set-credentials tom --token="cc0578.688a9946f8c14771" --kubeconfig=$HOME/.kube/mykube.conf

kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

----------------------------

root@master1:/etc/kubernetes/pki# kubectl config set-credentials tom --token="cc0578.688a9946f8c14771" --kubeconfig=$HOME/.kube/mykube.conf

User "tom" set.

root@master1:/etc/kubernetes/pki# kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://10.0.0.70:6443

name: mykube

contexts: null

current-context: ""

kind: Config

preferences: {}

users:

- name: tom

user:

token: REDACTED

----------------------------

# c. Define the context

kubectl config set-context tom@mykube --cluster=mykube --user=tom --kubeconfig=$HOME/.kube/mykube.conf

kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

----------------------------

root@master1:/etc/kubernetes/pki# kubectl config set-context tom@mykube --cluster=mykube --user=tom --kubeconfig=$HOME/.kube/mykube.conf

Context "tom@mykube" created.

root@master1:/etc/kubernetes/pki# kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://10.0.0.70:6443

name: mykube

contexts:

- context:

cluster: mykube

user: tom

name: tom@mykube

current-context: ""

kind: Config

preferences: {}

users:

- name: tom

user:

token: REDACTED

----------------------------

# d. Set the current context

kubectl config use-context tom@mykube --kubeconfig=$HOME/.kube/mykube.conf

kubectl get pods --kubeconfig=$HOME/.kube/mykube.conf

----------------------------

root@master1:/etc/kubernetes/pki# kubectl config use-context tom@mykube --kubeconfig=$HOME/.kube/mykube.conf

Switched to context "tom@mykube".

root@master1:/etc/kubernetes/pki# kubectl get pods --kubeconfig=$HOME/.kube/mykube.conf

Error from server (Forbidden): pods is forbidden: User "tom" cannot list resource "pods" in API group "" in the namespace "default"

----------------------------

# e. Verify

kubectl get pods --context='tom@mykube' --kubeconfig=$HOME/.kube/mykube.conf

export KUBECONFIG="$HOME/.kube/mykube.conf"

kubectl get pods

----------------------------

root@master1:/etc/kubernetes/pki# kubectl get pods --context='tom@mykube' --kubeconfig=$HOME/.kube/mykube.conf

Error from server (Forbidden): pods is forbidden: User "tom" cannot list resource "pods" in API group "" in the namespace "default"

root@master1:/etc/kubernetes/pki# export KUBECONFIG="$HOME/.kube/mykube.conf"

root@master1:/etc/kubernetes/pki# kubectl get pods

Error from server (Forbidden): pods is forbidden: User "tom" cannot list resource "pods" in API group "" in the namespace "default"

----------------------------

3.4 Add the user mason, authenticated by X.509 client certificate, to the mykube.conf file

cd /etc/kubernetes/pki

# a. Define the cluster

kubectl config set-cluster mykube --embed-certs=true --certificate-authority=./ca.crt --server="https://10.0.0.70:6443" --kubeconfig=$HOME/.kube/mykube.conf

kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

----------------------------

root@master1:/etc/kubernetes/pki# kubectl config set-cluster mykube --embed-certs=true --certificate-authority=./ca.crt --server="https://10.0.0.70:6443" --kubeconfig=$HOME/.kube/mykube.conf

Cluster "mykube" set.

root@master1:/etc/kubernetes/pki# kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://10.0.0.70:6443

name: mykube

contexts: null

current-context: ""

kind: Config

preferences: {}

users: null

root@master1:/etc/kubernetes/pki#

----------------------------

# b. Define the user

kubectl config set-credentials mason --embed-certs=true --client-certificate=./mason.crt --client-key=./mason.key --kubeconfig=$HOME/.kube/mykube.conf

kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

----------------------------

root@master1:/etc/kubernetes/pki# kubectl config set-credentials mason --embed-certs=true --client-certificate=./mason.crt --client-key=./mason.key --kubeconfig=$HOME/.kube/mykube.conf

User "mason" set.

root@master1:/etc/kubernetes/pki# kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://10.0.0.70:6443

name: mykube

contexts:

- context:

cluster: mykube

user: tom

name: tom@mykube

current-context: tom@mykube

kind: Config

preferences: {}

users:

- name: mason

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

- name: tom

user:

token: REDACTED

----------------------------

# c. Define the context

kubectl config set-context mason@mykube --cluster=mykube --user=mason --kubeconfig=$HOME/.kube/mykube.conf

kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

----------------------------

root@master1:/etc/kubernetes/pki# kubectl config set-context mason@mykube --cluster=mykube --user=mason --kubeconfig=$HOME/.kube/mykube.conf

Context "mason@mykube" created.

root@master1:/etc/kubernetes/pki# kubectl config view --kubeconfig=$HOME/.kube/mykube.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://10.0.0.70:6443

name: mykube

contexts:

- context:

cluster: mykube

user: mason

name: mason@mykube

- context:

cluster: mykube

user: tom

name: tom@mykube

current-context: tom@mykube

kind: Config

preferences: {}

users:

- name: mason

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

- name: tom

user:

token: REDACTED

----------------------------

# d. Set the current context

kubectl config use-context mason@mykube --kubeconfig=$HOME/.kube/mykube.conf

kubectl --context='mason@mykube' get pods

kubectl get pods

----------------------------

root@master1:/etc/kubernetes/pki# kubectl config use-context mason@mykube --kubeconfig=$HOME/.kube/mykube.conf

Switched to context "mason@mykube".

root@master1:/etc/kubernetes/pki# kubectl --context='mason@mykube' get pods

Error from server (Forbidden): pods is forbidden: User "mason" cannot list resource "pods" in API group "" in the namespace "default"

root@master1:/etc/kubernetes/pki# kubectl get pods

Error from server (Forbidden): pods is forbidden: User "mason" cannot list resource "pods" in API group "" in the namespace "default"

----------------------------

# e. Run a command as another context's user

kubectl --context='tom@mykube' get pods

3.5 Merging kubeconfig files through the KUBECONFIG environment variable

3.5.1 Merging kubeconfig files

3.5.2 Example

echo $KUBECONFIG

export KUBECONFIG="/root/.kube/mykube.conf:/etc/kubernetes/admin.conf"

kubectl config view

kubectl get pods

kubectl --context="tom@mykube" get pods

kubectl --context="kubernetes-admin@kubernetes" get pods

----------------------------

root@master1:/etc/kubernetes/pki# echo $KUBECONFIG

/root/.kube/mykube.conf

root@master1:/etc/kubernetes/pki# export KUBECONFIG="/root/.kube/mykube.conf:/etc/kubernetes/admin.conf"

root@master1:/etc/kubernetes/pki# kubectl config view

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://kubeapi.lec.org:6443

name: kubernetes

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://10.0.0.70:6443

name: mykube

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

- context:

cluster: mykube

user: mason

name: mason@mykube

- context:

cluster: mykube

user: tom

name: tom@mykube

current-context: mason@mykube

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

- name: mason

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

- name: tom

user:

token: REDACTED

root@master1:/etc/kubernetes/pki# kubectl get pods

Error from server (Forbidden): pods is forbidden: User "mason" cannot list resource "pods" in API group "" in the namespace "default"

root@master1:/etc/kubernetes/pki# kubectl --context="tom@mykube" get pods

Error from server (Forbidden): pods is forbidden: User "tom" cannot list resource "pods" in API group "" in the namespace "default"

root@master1:/etc/kubernetes/pki# kubectl --context="kubernetes-admin@kubernetes" get pods

NAME READY STATUS RESTARTS AGE

mynginx 1/1 Running 1 (49m ago) 110m

----------------------------

# Switch back to kubernetes-admin@kubernetes

kubectl config use-context kubernetes-admin@kubernetes

----------------------------

root@master1:~# kubectl config use-context kubernetes-admin@kubernetes

Switched to context "kubernetes-admin@kubernetes".

----------------------------
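When KUBECONFIG lists several files, kubectl merges them in order and the first file to set a value wins, which is why the merged view above takes its current-context from mykube.conf. The sketch below only splits the variable and prints the merge order; list_kubeconfig_files is a hypothetical helper, and it does not call kubectl.

```shell
# list_kubeconfig_files VALUE: print the files of a KUBECONFIG-style value in merge order.
list_kubeconfig_files() {
  old_ifs="$IFS"
  IFS=':'                       # KUBECONFIG entries are colon-separated on Linux
  index=1
  for file in $1; do
    if [ -f "$file" ]; then state="present"; else state="missing"; fi
    echo "${index}: ${file} (${state})"
    index=$((index + 1))
  done
  IFS="$old_ifs"
}

# The value used in this section; entry 1 has the highest precedence.
list_kubeconfig_files "/root/.kube/mykube.conf:/etc/kubernetes/admin.conf"
```

To keep the merged result as a single file, kubectl config view --flatten can be redirected into a new kubeconfig file.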

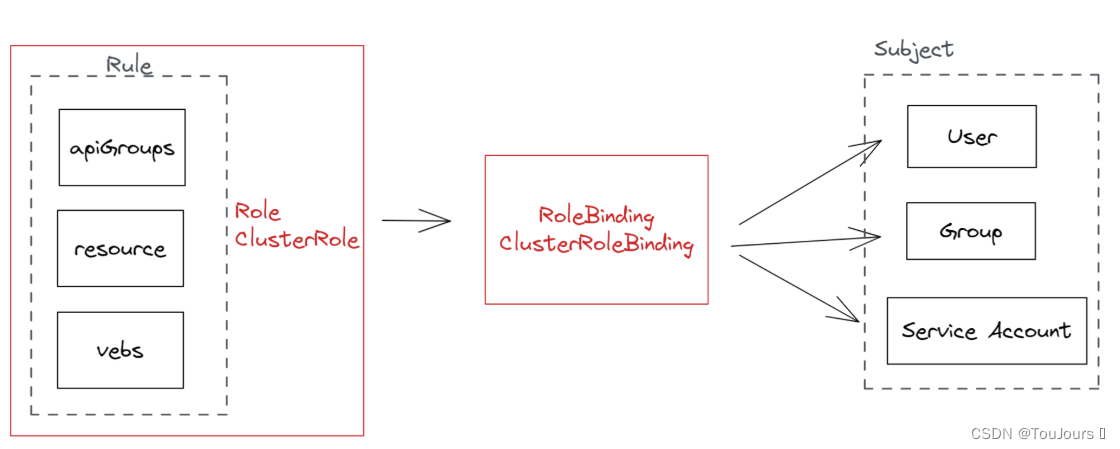

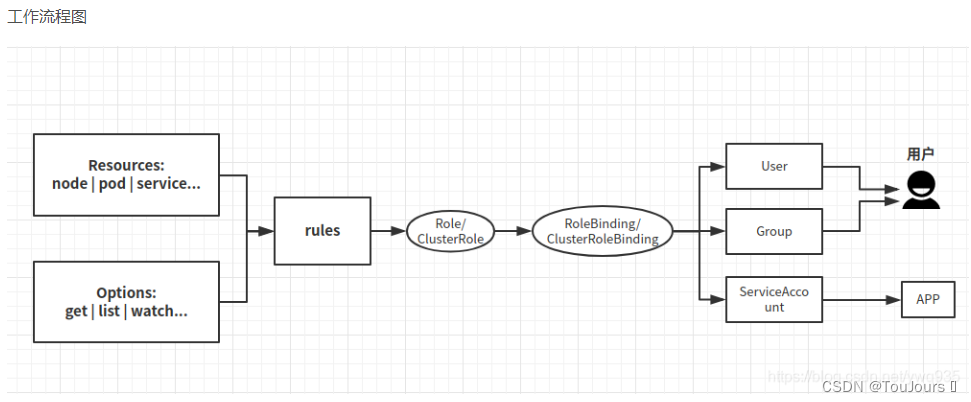

4. Access control with RBAC

4.1 Binding a Role with a RoleBinding and a ClusterRole with a ClusterRoleBinding

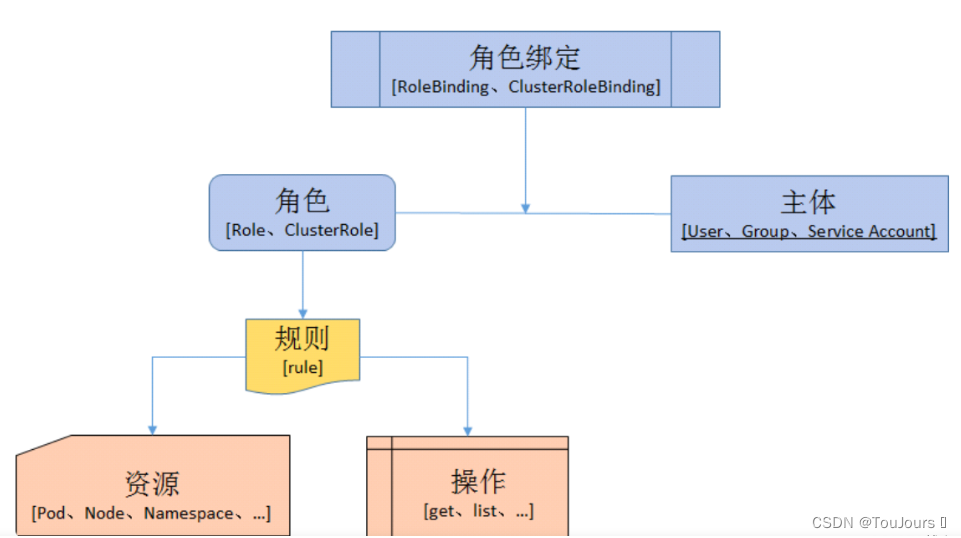

K8S的RBAC 主要由Role、ClusterRole、RoleBinding 和 ClusterRoleBinding 等资源实现。模型如下

1)授权介绍

在RABC API中,通过如下的步骤进行授权:

-

定义角色:在定义角色时会指定此角色对于资源的访问控制的规则。

-

绑定角色:将主体与角色进行绑定,对用户进行访问授权。

角色 -

Role:授权特定命名空间的访问权限

-

ClusterRole:授权所有命名空间的访问权限

角色绑定 -

RoleBinding:将角色绑定到主体(即subject)

-

ClusterRoleBinding:将集群角色绑定到主体

主体(subject) -

User:用户

-

Group:用户组

-

ServiceAccount:服务账号

4.1.1 Grant user mason permissions through a Role

----------------

# a. Create the rabc directory

root@master1:~# mkdir rabc

root@master1:~# cd rabc

# b. Write the YAML for the Role and the RoleBinding that binds it

root@master1:~/rabc# cat > role-admin-for-mason.yaml <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: role-admin
  namespace: default
rules:
- apiGroups: [""]       # core API group: read-only access to Pods
  resources: ["pods"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["apps"]   # Deployments belong to the apps API group
  resources: ["deployments"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: role-admin-for-mason
  namespace: default
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: mason
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: role-admin
EOF

# c. Create the resources

root@master1:~/rabc# kubectl apply -f role-admin-for-mason.yaml

role.rbac.authorization.k8s.io/role-admin created

rolebinding.rbac.authorization.k8s.io/role-admin-for-mason created

# d. List Pods as user mason

root@master1:~/rabc# kubectl --context=mason@mykube get pods -n default

NAME READY STATUS RESTARTS AGE

mynginx 1/1 Running 1 (128m ago) 3h8m
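Besides running real requests, the effect of a binding can be probed with kubectl auth can-i together with impersonation (--as). The following is a sketch under the assumption that the current context belongs to a cluster administrator who is allowed to impersonate users; check_access is a hypothetical helper name.

```shell
# check_access USER NAMESPACE: print what USER may do with pods and deployments.
check_access() {
  user="$1"
  namespace="$2"
  for verb in get list watch create delete; do
    for resource in pods deployments.apps; do
      # "kubectl auth can-i" prints yes or no; --as impersonates the given user.
      answer="$(kubectl auth can-i "$verb" "$resource" --as "$user" -n "$namespace" 2>/dev/null || true)"
      echo "${user} ${verb} ${resource}: ${answer:-unknown}"
    done
  done
}

# Only query a cluster when kubectl is actually installed.
if command -v kubectl >/dev/null 2>&1; then
  check_access mason default
fi
```

With a read-only Role bound to mason, the get, list, and watch rows would be expected to answer yes and the create and delete rows no.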

# e. Once mason's permissions have been verified, the Role and RoleBinding can be deleted

root@master1:~/rabc# kubectl delete -f role-admin-for-mason.yaml

role.rbac.authorization.k8s.io "role-admin" deleted

rolebinding.rbac.authorization.k8s.io "role-admin-for-mason" deleted

4.1.2 Grant user mason permissions through a ClusterRole

# a. Write the YAML for the ClusterRole and the ClusterRoleBinding that binds it

root@master1:~/rabc# cat > clusterrole-admin-for-mason.yaml <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: clusterrole-admin   # cluster-scoped resource, so no namespace is set
rules:
- apiGroups: [""]           # core API group: create, read, update, delete Pods
  resources: ["pods"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
- apiGroups: ["apps"]       # Deployments belong to the apps API group
  resources: ["deployments"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: clusterrole-admin-for-mason
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: mason
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: clusterrole-admin
EOF

# b. Create the resources

root@master1:~/rabc# kubectl apply -f clusterrole-admin-for-mason.yaml

clusterrole.rbac.authorization.k8s.io/clusterrole-admin created

clusterrolebinding.rbac.authorization.k8s.io/clusterrole-admin-for-mason created

# c. List Pods as user mason

root@master1:~/rabc# kubectl --context=mason@mykube get pods

NAME READY STATUS RESTARTS AGE

mynginx 1/1 Running 1 (140m ago) 3h21m

# d. Once mason's permissions have been verified, the ClusterRole and ClusterRoleBinding can be deleted

root@master1:~/rabc# kubectl delete -f clusterrole-admin-for-mason.yaml

clusterrole.rbac.authorization.k8s.io "clusterrole-admin" deleted

clusterrolebinding.rbac.authorization.k8s.io "clusterrole-admin-for-mason" deleted

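4.2 ServiceAccount authorization

- A ServiceAccount is normally used by applications running in a Pod when they access the API server. When a Pod is created, a volume is defined automatically and mounted into the container at /var/run/secrets/kubernetes.io/serviceaccount

- The mounted directory contains three files:

  - ca.crt is the cluster CA certificate, used to verify the API server's certificate when connecting to it

  - namespace is the namespace the Pod runs in

  - token is the token for accessing the API server; applications in the Pod send it with their requests to authenticate

4.2.1 Create a ServiceAccount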

cat > serviceaccount-for-mason.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: default
  name: sa-for-mason
EOF

# Create

root@master1:~/rabc# kubectl apply -f serviceaccount-for-mason.yaml

serviceaccount/sa-for-mason created

# Delete (only after the experiment is finished)

root@master1:~/rabc# kubectl delete -f serviceaccount-for-mason.yaml

serviceaccount "sa-for-mason" deleted

4.2.2 Define a role and bind it to the subject

cat > sa-role-admin-for-mason.yaml <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: role-admin-sa
  namespace: default
rules:
- apiGroups: [""]       # core API group: read-only access to Pods
  resources: ["pods"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["apps"]   # Deployments belong to the apps API group
  resources: ["deployments"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: role-admin-for-mason
  namespace: default
subjects:
- kind: ServiceAccount
  name: sa-for-mason
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: role-admin-sa
EOF

# Create

root@master1:~/rabc# kubectl apply -f sa-role-admin-for-mason.yaml

role.rbac.authorization.k8s.io/role-admin-sa created

rolebinding.rbac.authorization.k8s.io/role-admin-for-mason created

# Delete (only after the experiment is finished)

root@master1:~/rabc# kubectl delete -f sa-role-admin-for-mason.yaml

role.rbac.authorization.k8s.io "role-admin-sa" deleted

rolebinding.rbac.authorization.k8s.io "role-admin-for-mason" deleted

4.2.3 Create a Pod that references the ServiceAccount

cat > nginx-sa-role.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  namespace: default
  name: nginx-sa-role
spec:
  containers:
  - name: nginx
    image: nginx:1.20.0
  serviceAccountName: sa-for-mason
EOF

# Create

root@master1:~/rabc# kubectl apply -f nginx-sa-role.yaml

pod/nginx-sa-role created

# Inspect the Pod and note the mount path under Mounts

root@master1:~/rabc# kubectl describe pod nginx-sa-role -n default

Name: nginx-sa-role

Namespace: default

Priority: 0

Service Account: sa-for-mason

Node: node2.lec.org/10.0.0.72

Start Time: Sat, 11 Feb 2023 23:57:33 +0800

Labels: <none>

Annotations: <none>

Status: Running

IP: 10.244.2.26

IPs:

IP: 10.244.2.26

Containers:

nginx:

Container ID: docker://8b74c01d44cb9ab05b4ea87e68b2ceec78428b00b019c96412f550ed90b3f5c1

Image: nginx:1.20.0

Image ID: docker-pullable://nginx@sha256:ea4560b87ff03479670d15df426f7d02e30cb6340dcd3004cdfc048d6a1d54b4

Port: <none>

Host Port: <none>

State: Running

Started: Sat, 11 Feb 2023 23:57:34 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-8qgq8 (ro)

# Enter the nginx container and check that the files exist

root@master1:~/rabc# kubectl exec -it nginx-sa-role -- /bin/bash

root@nginx-sa-role:/# ls /var/run/secrets/kubernetes.io/serviceaccount

ca.crt namespace token
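With these three files an application inside the Pod can call the API server directly. The sketch below shows one way to do it with curl; list_pods_from_inside is a hypothetical helper, kubernetes.default.svc is the usual in-cluster DNS name of the API server, and the script assumes curl is available in the container image. Outside a Pod it only prints a notice.

```shell
SA_DIR="/var/run/secrets/kubernetes.io/serviceaccount"

# list_pods_from_inside DIR: call the API server using the files mounted in DIR.
list_pods_from_inside() {
  sa_dir="$1"
  token="$(cat "$sa_dir/token")"           # bearer token of the ServiceAccount
  namespace="$(cat "$sa_dir/namespace")"   # namespace the Pod runs in
  # ca.crt verifies the API server's certificate, so -k is not needed.
  curl --silent --cacert "$sa_dir/ca.crt" \
    -H "Authorization: Bearer ${token}" \
    "https://kubernetes.default.svc/api/v1/namespaces/${namespace}/pods"
}

if [ -d "$SA_DIR" ]; then
  list_pods_from_inside "$SA_DIR"
else
  echo "not running inside a Pod: $SA_DIR not found"
fi
```

Whether the request succeeds depends on the Role bound to the ServiceAccount; without a binding that allows listing Pods, the API server answers with a 403 Forbidden status.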

# Delete (only after the experiment is finished)

root@master1:~/rabc# kubectl delete -f nginx-sa-role.yaml

pod "nginx-sa-role" deleted

5. Publishing services with Ingress

5.1 Introduction to Ingress

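- Ingress removes the need to expose every Service externally: only the Ingress entry point itself is published, and no other Service has to be exposed directly. Ingress receives all external requests and forwards each one to the matching Service according to the configured domain name.

Ingress consists of three components

- Reverse-proxy load balancer: similar to Nginx or Apache. In a cluster it can be deployed with a controller such as a Deployment or a DaemonSet.

- Ingress controller: acts as a monitor that continuously talks to the API server and detects changes to backend Services and Pods in real time, for example additions and removals. With that information and the Ingress rules, the controller generates the configuration, updates the reverse-proxy load balancer, and reloads it, which provides service discovery.

- Ingress rules: define how requests are routed. If a domain name maps to a Service, or a sub-path under a domain name maps to a Service, requests for that domain are forwarded to the corresponding Service. The Ingress controller writes these rules dynamically into the load balancer's configuration, which gives service discovery and load balancing as a whole.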

5.2 Deploying an Ingress controller: Ingress-Nginx (v1.5.1)

- Reference: https://kubernetes.github.io/ingress-nginx/deploy/

5.2.1 Download the deployment YAML and modify parts of it

5.2.1.1 Download the deployment YAML

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.5.1/deploy/static/provider/cloud/deploy.yaml

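5.2.2 Modify the deployment YAML

5.2.2.1 Replace the upstream images with images from a mirror registry in China

# Only the following three image lines need to change

# Before: the official YAML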

image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343@sha256:39c5b2e3310dc4264d638ad28d9d1d96c4cbb2b2dcfb52368fe4e3c63f61e10f

image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343@sha256:39c5b2e3310dc4264d638ad28d9d1d96c4cbb2b2dcfb52368fe4e3c63f61e10f

image: registry.k8s.io/ingress-nginx/controller:v1.5.1@sha256:4ba73c697770664c1e00e9f968de14e08f606ff961c76e5d7033a4a9c593c629

# After: images from the mirror registry

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v20220916-gd32f8c343

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v20220916-gd32f8c343

image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.5.1
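The three replacements can also be scripted instead of edited by hand. This is a minimal sketch that runs sed over a small sample containing the image lines shown above; the temporary file names are assumptions, and the result is written to a new file so that the downloaded deploy.yaml stays untouched.

```shell
workdir="$(mktemp -d)"

# Sample with the upstream image lines from the official manifest.
cat > "$workdir/deploy.yaml" <<'EOF'
        image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343@sha256:39c5b2e3310dc4264d638ad28d9d1d96c4cbb2b2dcfb52368fe4e3c63f61e10f
        image: registry.k8s.io/ingress-nginx/controller:v1.5.1@sha256:4ba73c697770664c1e00e9f968de14e08f606ff961c76e5d7033a4a9c593c629
EOF

mirror="registry.cn-hangzhou.aliyuncs.com/google_containers"

# Swap the registry, rename the controller image, keep the tag, drop the digest.
sed -E \
  -e "s#registry\.k8s\.io/ingress-nginx/kube-webhook-certgen:([^@[:space:]]+)@sha256:[0-9a-f]+#${mirror}/kube-webhook-certgen:\1#" \
  -e "s#registry\.k8s\.io/ingress-nginx/controller:([^@[:space:]]+)@sha256:[0-9a-f]+#${mirror}/nginx-ingress-controller:\1#" \
  "$workdir/deploy.yaml" > "$workdir/tt.yaml"

grep 'image:' "$workdir/tt.yaml"
```

The digest has to be removed because it identifies the upstream image; a mirrored copy generally cannot be assumed to carry the same digest.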

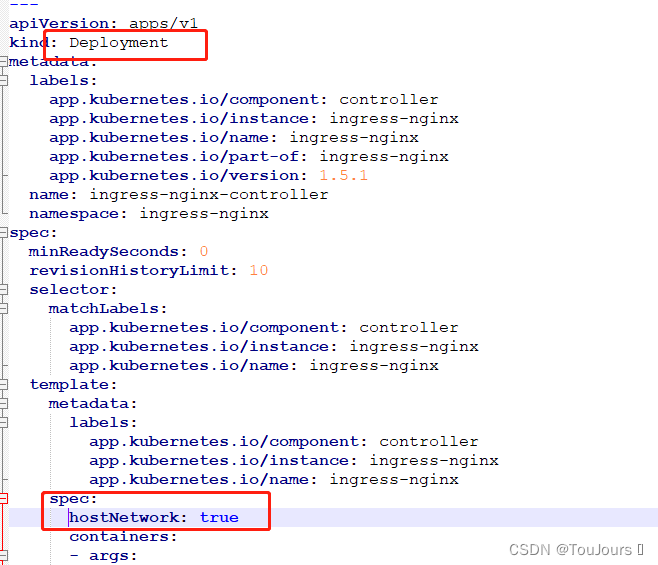

5.2.2.2 Use the host network so that the controller's ports are exposed on the node (the Pod then no longer relies on cluster-internal DNS resolution)

# Search for "kind: Deployment"; it occurs only once

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.5.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      hostNetwork: true # newly added line
      containers:
      - args:
        - /nginx-ingress-controller

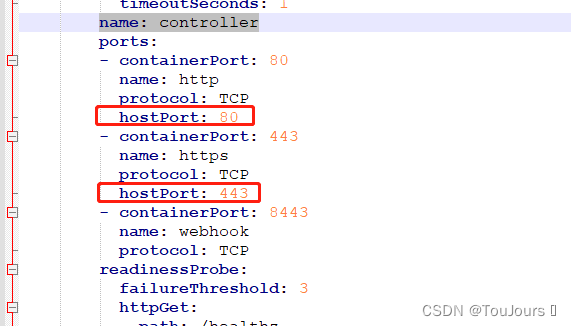

5.2.2.3 Add hostPort entries; without them the node running the ingress Pod cannot be reached

# Search for "name: controller"; it occurs only once

        name: controller
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
          hostPort: 80 # newly added line
        - containerPort: 443
          name: https
          protocol: TCP
          hostPort: 443 # newly added line

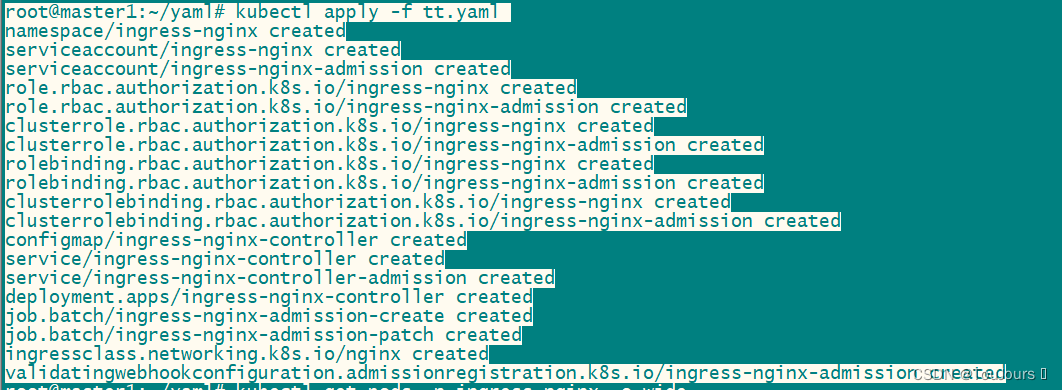

5.2.3 Create and inspect

# deploy.yaml was renamed to tt.yaml here

kubectl apply -f tt.yaml

# 查看是否启动成功

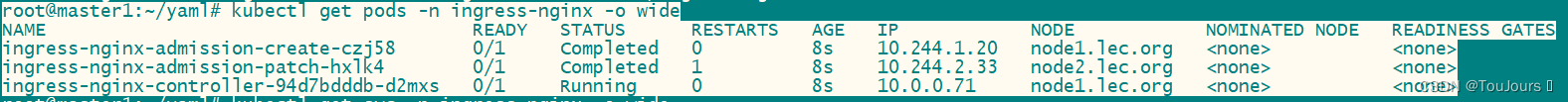

kubectl get pods -n ingress-nginx -o wide

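5.2.4 Create an example

# The steps below access the service by domain name, so edit /etc/hosts first

# The output above shows that ingress-nginx-controller-94d7bdddb-d2mxs was scheduled on node1 (IP 10.0.0.71)

# Run on node1 and master1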

echo "10.0.0.71 demo.test.nginx" >>/etc/hosts

# Create the Deployment

kubectl create deployment demo --image=httpd --port=80

# Expose it as a new Service

kubectl expose deployment demo

# Create the Ingress

kubectl create ingress demo-ingress-nginx --class=nginx --rule="demo.test.nginx/*=demo:80"

# Check the Pods, Services, and Ingress

root@master1:~/yaml# kubectl get pods,svc,ingress

NAME READY STATUS RESTARTS AGE

pod/demo-75ddddf99c-6l5lm 1/1 Running 0 29m

pod/mynginx 1/1 Running 2 (6h22m ago) 28h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/demo ClusterIP 10.98.25.252 <none> 80/TCP 28m

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 26d

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/demo-ingress-nginx nginx demo.test.nginx 80 28m

# Verify

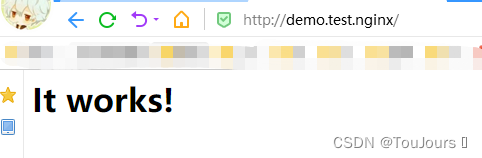

root@master1:~/yaml# curl demo.test.nginx

<html><body><h1>It works!</h1></body></html>

# Clean up after testing

# Delete the Ingress

root@master1:~/yaml# kubectl delete ingress demo-ingress-nginx

ingress.networking.k8s.io "demo-ingress-nginx" deleted

# Delete the Service

root@master1:~/yaml# kubectl delete svc demo

service "demo" deleted

# Delete the Deployment (deleting only the Pod would make the Deployment recreate it)

root@master1:~/yaml# kubectl delete deployment demo

deployment.apps "demo" deleted

Result of verifying in a browser

5.3 Example 2: exposing a Tomcat server through Ingress

# a. Deployment and Service for Tomcat

cat > tomcat-test-svc.yaml <<EOF
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-tomcat
  labels:
    app: app-tomcat
spec:
  selector:
    matchLabels:
      app: app-tomcat
  template:
    metadata:
      labels:
        app: app-tomcat
    spec:
      containers:
      - name: tomcat-test-ingress
        image: tomcat
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
spec:
  type: ClusterIP
  selector:
    app: app-tomcat
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
EOF

# Create

root@master1:~/yaml# kubectl apply -f tomcat-test-svc.yaml

deployment.apps/app-tomcat created

service/tomcat-service created

# b. Write the Ingress rules

cat > tomcat-ingress.yaml <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tomcat-test
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  defaultBackend:
    service:
      name: tomcat-service
      port:
        number: 80
  rules:
  - host: demo.test.nginx # the configured domain name
    http:
      paths:
      - path: "/"
        pathType: Prefix
        backend:
          service:
            name: tomcat-service
            port:
              number: 80
EOF

# Create

root@master1:~/yaml# kubectl apply -f tomcat-ingress.yaml

ingress.networking.k8s.io/ingress-tomcat-test created

# c. Test

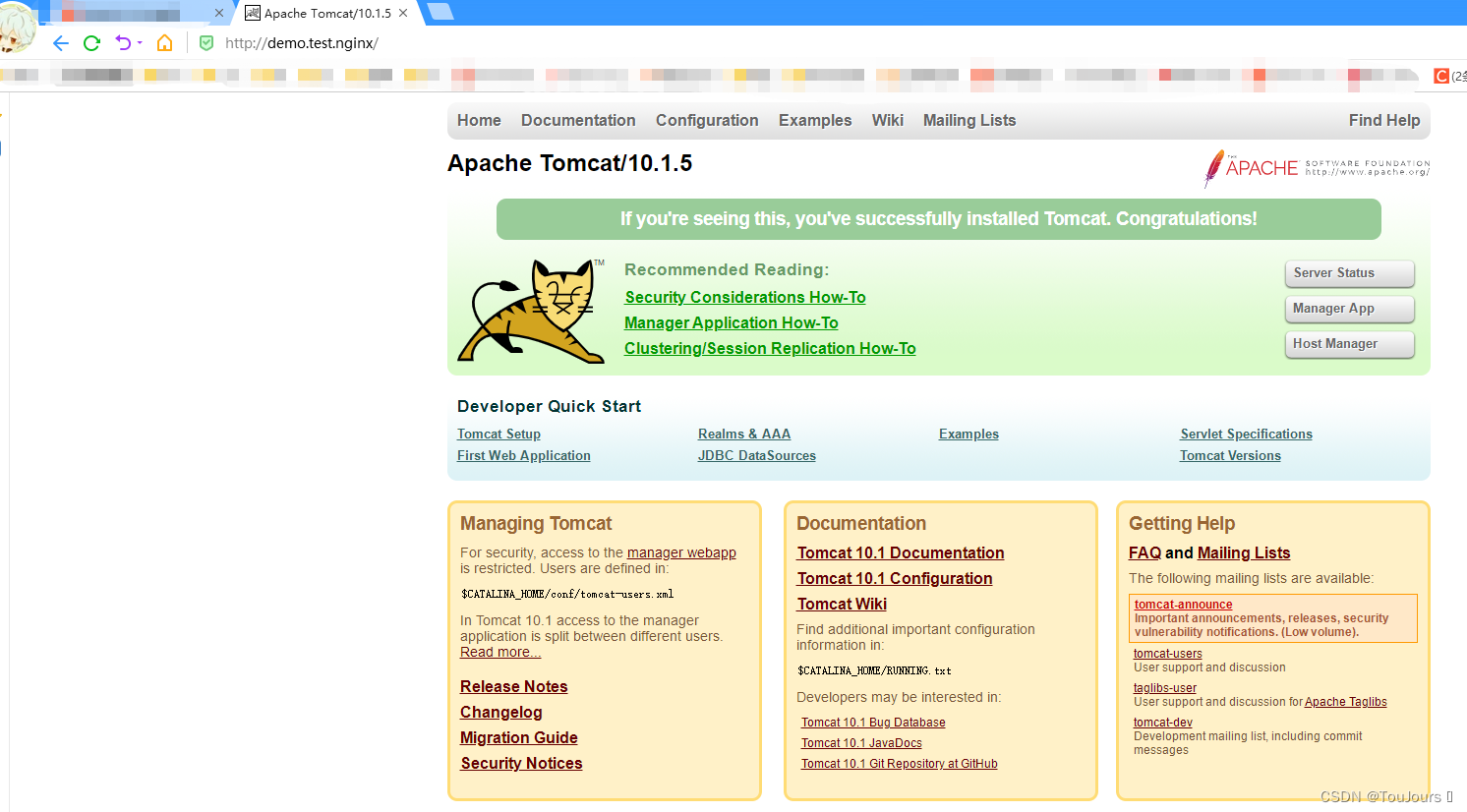

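The test returns 404: the webapps directory inside the container is empty and has to be replaced

# Enter the container and replace the empty webapps directory with webapps.dist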

root@master1:~/yaml# kubectl exec -it app-tomcat-7984487689-vbjsj -- /bin/sh

# rm -rf webapps

# mv webapps.dist webapps

# cd webapps

# ls

docs examples host-manager manager ROOT

# exit

# d. Test again

# e. Delete after testing

kubectl delete -f tomcat-ingress.yaml

kubectl delete -f tomcat-test-svc.yaml

5.4 Types of Ingress

5.4.1 Distributing traffic between applications by URI on the same host

# a. Deploy demoapp v1.0

kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --replicas=2

kubectl create service clusterip demoapp10 --tcp=80:80

# b. Deploy demoapp v1.1

kubectl create deployment demoapp11 --image=ikubernetes/demoapp:v1.1 --replicas=2

kubectl create service clusterip demoapp11 --tcp=80:80

# c. Inspect

kubectl get svc,pods,deployment

# d. Create the Ingress

kubectl create ingress demoapp --rule="demoapp.lec.com/v10=demoapp10:80" --rule="demoapp.lec.com/v11=demoapp11:80" --class=nginx --annotation nginx.ingress.kubernetes.io/rewrite-target="/"

# e. Test

curl demoapp.lec.com/v10

curl demoapp.lec.com/v11

# f. Delete after testing

kubectl delete ingress demoapp

kubectl delete deployment demoapp10 demoapp11

kubectl delete svc demoapp10 demoapp11

5.4.2 The same setup, but with URI prefix matching instead of exact matching

# a. Deploy demoapp v1.0

kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --replicas=2

kubectl create service clusterip demoapp10 --tcp=80:80

# b. Deploy demoapp v1.1

kubectl create deployment demoapp11 --image=ikubernetes/demoapp:v1.1 --replicas=2

kubectl create service clusterip demoapp11 --tcp=80:80

# c. Inspect

kubectl get svc,pods,deployment

# d. Create the Ingress (the rewrite target is single-quoted so that the shell does not expand $2)

kubectl create ingress demoapp --rule='demoapp.lec.com/v10(/|$)(.*)=demoapp10:80' --rule='demoapp.lec.com/v11(/|$)(.*)=demoapp11:80' --class=nginx --annotation nginx.ingress.kubernetes.io/rewrite-target='/$2'

# e. Test

curl demoapp.lec.com/v10/hostname
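The capture-group rewrite can be tried out locally. The sketch below emulates the rule path /v10(/|$)(.*) and the rewrite target /$2 with sed, which is only an approximation of what ingress-nginx does; rewrite is a hypothetical helper. It also shows why the annotation value needs single quotes on the command line.

```shell
# rewrite PATH: emulate rewrite-target /$2 for the rule path /v10(/|$)(.*)
rewrite() {
  printf '%s\n' "$1" | sed -E 's#^/v10(/|$)(.*)#/\2#'
}

rewrite /v10/hostname   # prints /hostname
rewrite /v10            # prints /
rewrite /v10/           # prints /

# Quoting matters when the annotation is passed to kubectl:
single='/$2'            # stays literal, which is what the annotation needs
set -- first            # only one positional parameter, so $2 is unset
double="/${2:-}"        # what a double-quoted "/$2" collapses to in this shell
echo "single-quoted: ${single}"
echo "double-quoted: ${double}"
```

In the double-quoted case the shell substitutes its own (empty) second positional parameter, so the annotation would silently become "/" and every request would be rewritten to the root path.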

# f. Delete after testing

kubectl delete ingress demoapp

kubectl delete deployment demoapp10 demoapp11

kubectl delete svc demoapp10 demoapp11
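
To see what the rule in 5.4.2 does to a request path, the rewrite can be approximated locally with sed. This is only a sketch of the regex substitution, assuming the path pattern `/v10(/|$)(.*)` and the rewrite target `/$2`; it does not involve the ingress controller, and `rewrite_path` is a hypothetical helper name.

```shell
# Approximate the effect of rewrite-target "/$2" on paths that match
# /v10(/|$)(.*): the second capture group becomes the new path.
rewrite_path() {
  printf '%s\n' "$1" | sed -E 's#^/v10(/|$)(.*)#/\2#'
}

rewrite_path /v10/hostname   # prints /hostname
rewrite_path /v10            # prints /
rewrite_path /v10/a/b        # prints /a/b
```

The backend therefore never sees the `/v10` prefix, which is why `curl demoapp.lec.com/v10/hostname` reaches the application's `/hostname` endpoint.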

5.4.3 Routing traffic to different applications by dedicated host name

# a. Deploy demoapp v1.0

kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --replicas=2

kubectl create service clusterip demoapp10 --tcp=80:80

# b. Deploy demoapp v1.1

kubectl create deployment demoapp11 --image=ikubernetes/demoapp:v1.1 --replicas=2

kubectl create service clusterip demoapp11 --tcp=80:80

# c. Inspect the resources

kubectl get svc,pods,deployment

# d. Create the Ingress

kubectl create ingress demoapp --rule="demoapp10.lec.com/*=demoapp10:80" --rule="demoapp11.lec.com/*=demoapp11:80" --class=nginx

# e. Test

curl demoapp10.lec.com

curl demoapp11.lec.com

# f. Clean up after testing

kubectl delete ingress demoapp

kubectl delete deployment demoapp10 demoapp11

kubectl delete svc demoapp10 demoapp11

5.4.4 TLS

- TLS is served on port 443/TCP only

# a. Deploy demoapp v1.0

kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --replicas=2

kubectl create service clusterip demoapp10 --tcp=80:80

# b. Create a self-signed certificate

# Generate the private key

~# (umask 077; openssl genrsa -out lec.key 2048)

# Generate the self-signed certificate

~# openssl req -new -x509 -key lec.key -out lec.crt -subj /C=CN/ST=Beijing/L=Beijing/O=DevOps/CN=demoapp.lec.com

# c. Store the certificate and key as a TLS Secret

~# kubectl create secret tls tls-lec --cert=./lec.crt --key=./lec.key

kubectl get secret

# d. Create the Ingress rule and mark the host as TLS-enabled

~# kubectl create ingress tls-demo --rule='demoapp.lec.com/*=demoapp10:80,tls=tls-lec' --class=nginx

# e. Name resolution: point the host name at whichever node runs the ingress controller, for example node1 (IP 10.0.0.71)

vi /etc/hosts

10.0.0.71 demoapp.lec.com

# f. Test

curl -I demoapp.lec.com

curl -k https://demoapp.lec.com

# g. Clean up after testing

kubectl delete ingress tls-demo

kubectl delete deployment demoapp10

kubectl delete svc demoapp10
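
Before loading a certificate into a Secret, it can help to inspect what was generated. The sketch below repeats the key and certificate steps from 5.4.4 in a temporary directory and prints the subject and validity window. Two options are additions that the steps above do not use: `-days 365` (without it, `openssl req -x509` issues a certificate valid for 30 days) and `-addext` for a subjectAltName, which assumes OpenSSL 1.1.1 or newer.

```shell
# Generate a throwaway key and self-signed certificate, then inspect it.
# -days and -addext are illustrative additions, not part of the original steps.
workdir=$(mktemp -d)
(umask 077; openssl genrsa -out "$workdir/lec.key" 2048 2>/dev/null)
openssl req -new -x509 -key "$workdir/lec.key" -out "$workdir/lec.crt" \
  -days 365 \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=DevOps/CN=demoapp.lec.com" \
  -addext "subjectAltName=DNS:demoapp.lec.com"

# Show the subject and the notBefore/notAfter dates.
openssl x509 -in "$workdir/lec.crt" -noout -subject -dates

# Show the subjectAltName entry that clients compare against the host name.
openssl x509 -in "$workdir/lec.crt" -noout -text | grep -A1 'Subject Alternative Name'
```

With a self-signed certificate the client still has to skip verification (`curl -k`) or trust the certificate explicitly.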

5.5 Canary (grayscale) releases with Ingress Nginx

5.5.1 Traffic release mechanisms in Ingress Nginx

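Blue-green:

production: 100%, canary: 0%

production: 0%, canary: 100% --> the canary then becomes the new production

Canary:

Proportional traffic splitting: the canary's share is adjusted gradually

Traffic identification, sending specific traffic to the canary:

By-Header: identified by a specific request header

Built-in header values: always/never

Custom header value (canary-by-header-value)

Header value matched against a regular-expression pattern (canary-by-header-pattern)

By-Cookie: identified by a cookie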
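
The canary annotations do not work in isolation: a canary Ingress is an alternative to a regular (non-canary) Ingress for the same host and path, which has to exist first. The examples in this section only show the canary side, so the following is a minimal sketch of a baseline Ingress for the stable version. The resource name `demoapp-production` and the file name are hypothetical; the host and Service name follow the later examples.

```shell
# Write a baseline (non-canary) Ingress manifest that sends all traffic for
# demoapp.lec.com to the stable Service demoapp10.
# "demoapp-production" and the file name are illustrative assumptions.
cat > ingress-production.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demoapp-production
spec:
  ingressClassName: nginx
  rules:
  - host: demoapp.lec.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: demoapp10
            port:
              number: 80
EOF

# Apply it before creating any canary Ingress (needs cluster access):
#   kubectl apply -f ingress-production.yaml
```

Once this baseline exists, each canary Ingress diverts only the share of traffic its annotations select, and everything else continues to reach demoapp10.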

5.5.2 Weight-based canary release

# a. Deploy demoapp v1.0

kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --replicas=2

kubectl create service clusterip demoapp10 --tcp=80:80

# b. Deploy demoapp v1.1

kubectl create deployment demoapp11 --image=ikubernetes/demoapp:v1.1 --replicas=2

kubectl create service clusterip demoapp11 --tcp=80:80

# c. Deploy the canary Ingress (it takes effect only alongside a regular, non-canary Ingress for the same host)

vi ingress-test-01.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "10"
  name: demoapp-canary-by-weight
spec:
  rules:
  - host: demoapp.lec.com
    http:
      paths:
      - backend:
          service:
            name: demoapp11
            port:
              number: 80
        path: /
        pathType: Prefix

# d. Apply

kubectl apply -f ingress-test-01.yaml

# e. Test (no special header is needed; repeat the request and roughly 10% of the responses should come from demoapp11)

curl demoapp.lec.com

# f. Clean up after testing

kubectl delete -f ingress-test-01.yaml

kubectl delete deployment demoapp10 demoapp11

kubectl delete svc demoapp10 demoapp11
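
A single request says little about a 10% weight, so it is easier to send many requests and count which version answered. The helper below is a sketch that tallies version strings from response lines on stdin. It assumes each demoapp response contains a token such as `v1.0` or `v1.1`; `tally_versions` is a hypothetical name.

```shell
# Count how many responses came from each version.
# Reads response lines on stdin and assumes each one contains a token
# like "v1.0" or "v1.1" (an assumption about the demoapp output).
tally_versions() {
  grep -oE 'v[0-9]+\.[0-9]+' | sort | uniq -c
}

# Local demonstration with canned lines instead of real responses.
printf 'demoapp v1.0\ndemoapp v1.1\ndemoapp v1.0\n' | tally_versions

# Against the cluster (not run here):
#   for i in $(seq 1 100); do curl -s demoapp.lec.com; done | tally_versions
```

With canary-weight set to "10", the v1.1 count over 100 requests should land near 10, with some random variation.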

5.5.3 Header-based canary release, part 1 (header value matched by pattern)

# a. Deploy demoapp v1.0

kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --replicas=2

kubectl create service clusterip demoapp10 --tcp=80:80

# b. Deploy demoapp v1.1

kubectl create deployment demoapp11 --image=ikubernetes/demoapp:v1.1 --replicas=2

kubectl create service clusterip demoapp11 --tcp=80:80

# c. Deploy the canary Ingress

vi ingress-test-02.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "Username"
    nginx.ingress.kubernetes.io/canary-by-header-pattern: "(vip|VIP)_.*"
  name: demoapp-canary-by-header-pattern
spec:
  rules:
  - host: demoapp.lec.com
    http:
      paths:
      - backend:
          service:
            name: demoapp11
            port:
              number: 80
        path: /
        pathType: Prefix

# d. Apply

kubectl apply -f ingress-test-02.yaml

# e. Test (a Username header matching the pattern is routed to demoapp11)

curl -H "Username: vip_123" demoapp.lec.com

# f. Clean up after testing

kubectl delete -f ingress-test-02.yaml

kubectl delete deployment demoapp10 demoapp11

kubectl delete svc demoapp10 demoapp11
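
To check which header values the pattern in 5.5.3 would select, the regular expression can be tried locally with grep. This is a sketch only: it assumes an unanchored search, which may differ from how the controller evaluates the pattern, and `matches_canary_pattern` is a hypothetical helper.

```shell
# Test candidate Username values against the pattern from the
# canary-by-header-pattern annotation, using an unanchored search.
matches_canary_pattern() {
  printf '%s\n' "$1" | grep -Eq '(vip|VIP)_.*'
}

for user in vip_123 VIP_gold guest_7; do
  if matches_canary_pattern "$user"; then
    echo "$user -> demoapp11 (canary)"
  else
    echo "$user -> demoapp10 (production)"
  fi
done
```

Only values containing `vip_` or `VIP_` are selected, so `guest_7` stays on the production backend.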

5.5.4 Header-based canary release, part 2 (built-in always/never values)

# a. Deploy demoapp v1.0

kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --replicas=2

kubectl create service clusterip demoapp10 --tcp=80:80

# b. Deploy demoapp v1.1

kubectl create deployment demoapp11 --image=ikubernetes/demoapp:v1.1 --replicas=2

kubectl create service clusterip demoapp11 --tcp=80:80

# c. Deploy the canary Ingress

vi ingress-test-03.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "X-Canary"
  name: demoapp-canary-by-header
spec:
  rules:
  - host: demoapp.lec.com
    http:
      paths:
      - backend:
          service:
            name: demoapp11
            port:
              number: 80
        path: /
        pathType: Prefix

# d. Apply

kubectl apply -f ingress-test-03.yaml

# e. Test (X-Canary: always is routed to demoapp11)

curl -H "X-Canary: always" demoapp.lec.com

# f. Clean up after testing

kubectl delete -f ingress-test-03.yaml

kubectl delete deployment demoapp10 demoapp11

kubectl delete svc demoapp10 demoapp11
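
With only `canary-by-header` set, ingress-nginx looks at the header's value: `always` routes to the canary, `never` keeps the request on production, and any other value is ignored so that the remaining canary rules (cookie, then weight) decide. The cookie variant uses the same `always`/`never` values. The function below is a hypothetical local model of that decision, not controller code.

```shell
# Model the decision made for a canary-by-header (or canary-by-cookie) value.
# canary_decision is an illustrative helper, not part of ingress-nginx.
canary_decision() {
  case "$1" in
    always) echo canary ;;
    never)  echo production ;;
    *)      echo fall-through ;;   # ignored; later rules (cookie, weight) apply
  esac
}

canary_decision always    # prints canary
canary_decision never     # prints production
canary_decision maybe     # prints fall-through
```

In the example above, a request without the X-Canary header falls through, and since no weight is configured it stays on the production backend.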

5.5.5 Cookie-based canary release

# a. Deploy demoapp v1.0

kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --replicas=2

kubectl create service clusterip demoapp10 --tcp=80:80

# b. Deploy demoapp v1.1

kubectl create deployment demoapp11 --image=ikubernetes/demoapp:v1.1 --replicas=2

kubectl create service clusterip demoapp11 --tcp=80:80

# c. Deploy the canary Ingress

vi ingress-test-04.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-cookie: "vip_user"
  name: demoapp-canary-by-cookie
spec:
  rules:
  - host: demoapp.lec.com
    http:
      paths:
      - backend:
          service:
            name: demoapp11
            port:
              number: 80
        path: /
        pathType: Prefix

# d. Apply

kubectl apply -f ingress-test-04.yaml

# e. Test (the cookie vip_user=always is routed to demoapp11)

curl -b "vip_user=always" demoapp.lec.com

# f. Clean up after testing

kubectl delete -f ingress-test-04.yaml

kubectl delete deployment demoapp10 demoapp11

kubectl delete svc demoapp10 demoapp11
