Adversarial Training

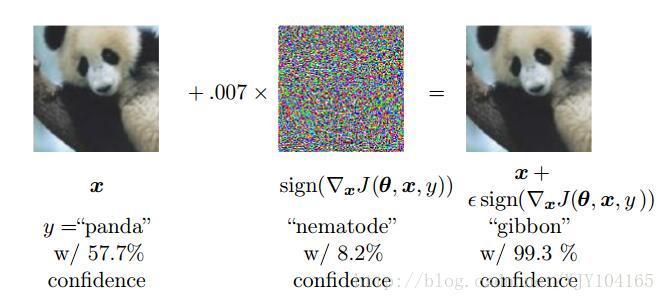

To probe how well a network understands the underlying task, we can search for examples that the model misclassifies. Szegedy et al. (2014b) found that even neural networks that perform at human-level accuracy have a nearly 100% error rate on examples that are intentionally constructed by using an optimization procedure to search for an input x′ near a data point x such that the model output is very different at x′. In many cases, x′ can be so similar to x that a human observer cannot tell the difference between the original example and the adversarial example, but the network can make highly different predictions.
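The search for x′ can be illustrated with a minimal sketch. It is not the optimization procedure used by Szegedy et al.; as a simpler stand-in, it takes a single step of size `eps` along the sign of the loss gradient with respect to the input (the fast gradient sign method). The logistic-regression model, the random weights `w`, the data point `x`, and the value of `eps` are all hypothetical choices made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_perturb(x, y, w, b, eps):
    """Return x' = x + eps * sign(d loss / d x) for logistic regression.

    With p = sigmoid(w.x + b) and cross-entropy loss, d loss / d x = (p - y) * w.
    """
    p = sigmoid(x @ w + b)
    grad_x = (p - y) * w
    return x + eps * np.sign(grad_x)

# Hypothetical toy model and data point (assumptions, not from the text).
rng = np.random.default_rng(0)
w = rng.normal(size=100)              # stand-in for trained weights
x = rng.uniform(0.0, 1.0, size=100)   # stand-in for a 100-pixel input
b = 2.0 - x @ w                       # bias chosen so the clean logit is exactly 2
y = 1.0                               # true label of x
eps = 0.05                            # maximum change allowed per input feature

x_adv = fgsm_perturb(x, y, w, b, eps)

p_clean = sigmoid(x @ w + b)          # model confidence in class 1 at x
p_adv = sigmoid(x_adv @ w + b)        # model confidence in class 1 at x'
max_change = float(np.max(np.abs(x_adv - x)))

print(f"p(class 1 | x)  = {p_clean:.3f}")
print(f"p(class 1 | x') = {p_adv:.3f}")
print(f"largest per-feature change = {max_change:.3f}")
```

No feature moves by more than `eps`, yet the predicted class flips in this toy setup because the small changes all push the logit in the same direction.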

Adversarial examples have many implications, for example in computer security, that are beyond the scope of this chapter. However, they are interesting in the context of regularization because one can reduce the error rate on the original i.i.d. test set via adversarial training, that is, training on adversarially perturbed examples from the training set.
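As a rough sketch of what adversarial training can look like in code, the example below trains a small one-hidden-layer network on each batch together with perturbed copies of that batch. Several things here are assumptions for illustration only: the perturbation is a single sign-of-gradient step (one common choice, not necessarily the one used in the cited work), and the toy two-blob dataset, the network size, `eps`, the learning rate, and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_params(n_in, n_hidden):
    return {
        "W1": rng.normal(scale=0.5, size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "w2": rng.normal(scale=0.5, size=n_hidden),
        "b2": 0.0,
    }

def predict(params, X):
    """Return hidden activations and the predicted probability of class 1."""
    H = np.tanh(X @ params["W1"] + params["b1"])
    return H, 1.0 / (1.0 + np.exp(-(H @ params["w2"] + params["b2"])))

def gradients(params, X, y):
    """Gradients of mean cross-entropy w.r.t. parameters, plus per-example input gradients."""
    H, p = predict(params, X)
    d_logit = p - y                                        # d loss_i / d logit_i
    d_A = np.outer(d_logit, params["w2"]) * (1.0 - H**2)   # back through tanh
    n = len(X)
    grads = {
        "W1": X.T @ d_A / n,
        "b1": d_A.mean(axis=0),
        "w2": H.T @ d_logit / n,
        "b2": d_logit.mean(),
    }
    return grads, d_A @ params["W1"].T                     # d loss_i / d x_i

def perturb(params, X, y, eps):
    """One sign-of-gradient step of size eps on every example (an assumed attack)."""
    _, d_X = gradients(params, X, y)
    return X + eps * np.sign(d_X)

def adversarial_train(X, y, eps, steps=500, lr=0.2, n_hidden=16):
    params = init_params(X.shape[1], n_hidden)
    for _ in range(steps):
        X_adv = perturb(params, X, y, eps)      # perturb with the current model
        X_batch = np.vstack([X, X_adv])         # clean plus adversarial copies
        y_batch = np.concatenate([y, y])        # perturbed copies keep their labels
        grads, _ = gradients(params, X_batch, y_batch)
        params = {k: params[k] - lr * grads[k] for k in params}
    return params

def accuracy(params, X, y):
    _, p = predict(params, X)
    return float(np.mean((p >= 0.5) == (y == 1.0)))

# Hypothetical toy data: two Gaussian blobs in 2-D.
n = 200
X = np.vstack([rng.normal(loc=-1.5, scale=0.5, size=(n, 2)),
               rng.normal(loc=+1.5, scale=0.5, size=(n, 2))])
y = np.concatenate([np.zeros(n), np.ones(n)])

eps = 0.3
params = adversarial_train(X, y, eps)
X_adv = perturb(params, X, y, eps)
clean_acc = accuracy(params, X, y)
adv_acc = accuracy(params, X_adv, y)
print("accuracy on clean inputs:    ", clean_acc)
print("accuracy on perturbed inputs:", adv_acc)
```

The perturbed copies are regenerated at every step, so the network is always trained against perturbations computed from its current parameters rather than from a fixed, precomputed set.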

Adversarial training helps to illustrate the power of using a large function family in combination with aggressive regularization.

Purely linear models, like logistic regression, are not able to resist adversarial examples because they are forced to be linear. Neural networks are able to represent functions that can range from nearly linear to nearly locally constant and thus have the flexibility to capture linear trends in the training data while still learning to resist local perturbation.
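One way to see why linearity is a liability: if every input feature changes by at most ε, a linear function with weights w can change by as much as ε‖w‖₁, which grows with the input dimension. The short check below illustrates this numerically. The random weights, the random inputs, and the value of `eps` are hypothetical; the perturbation `eps * sign(w)` is simply the worst case for a linear function.

```python
import numpy as np

rng = np.random.default_rng(0)
eps = 0.01                            # per-feature perturbation size (assumed)

shifts = {}
for n in (10, 1_000, 100_000):
    w = rng.normal(size=n)            # hypothetical weights of a linear model
    x = rng.normal(size=n)            # hypothetical input
    x_adv = x + eps * np.sign(w)      # worst-case perturbation with max-norm eps
    shifts[n] = float(w @ x_adv - w @ x)
    # The change in w.x equals eps times the L1 norm of w.
    print(n, shifts[n], eps * np.abs(w).sum())
```

Each feature moves by only 0.01, but the change in the linear output scales with the number of features, so a high-dimensional linear model can be pushed across its decision boundary by a perturbation that is tiny per feature.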

Adversarial examples also provide a means of accomplishing semi-supervised learning.

- At a point x that is not associated with a label in the dataset, the model itself assigns some label ŷ.

- The model’s label ŷ may not be the true label, but if the model is high quality, then ŷ has a high probability of providing the true label.

- We can seek an adversarial example x′ that causes the classifier to output a label y′ with y′ ≠ ŷ.

- Adversarial examples generated using not the true label but a label provided by a trained model are called virtual adversarial examples.

- The classifier may then be trained to assign the same label to x and x′.
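The steps in the list above can be sketched as follows. This is a simplified illustration under stated assumptions, not a published algorithm: the model is a hypothetical logistic-regression classifier with weights `w` and bias `b`, the model-assigned label ŷ is taken as a hard 0/1 label, x′ is found with a single sign-of-gradient step of size `eps`, and "assign the same label" is implemented as one gradient step on the cross-entropy between the prediction at x′ and ŷ.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cross_entropy(p, y):
    p = np.clip(p, 1e-12, 1.0 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))

def virtual_adversarial_pairs(X_unlabeled, w, b, eps):
    """Label each unlabeled point with the model's own prediction, then perturb it."""
    p = sigmoid(X_unlabeled @ w + b)
    y_hat = (p >= 0.5).astype(float)              # model-assigned labels
    grad_X = (p - y_hat)[:, None] * w[None, :]    # d loss(x, y_hat) / d x
    X_adv = X_unlabeled + eps * np.sign(grad_X)   # push away from y_hat
    return X_adv, y_hat

def consistency_step(X_adv, y_hat, w, b, lr):
    """One gradient step that pulls the prediction at x' back toward y_hat."""
    err = sigmoid(X_adv @ w + b) - y_hat          # y_hat is treated as a constant
    w_new = w - lr * (X_adv.T @ err) / len(X_adv)
    b_new = b - lr * err.mean()
    return w_new, b_new

# Hypothetical model and unlabeled data (assumptions for illustration).
rng = np.random.default_rng(0)
w = rng.normal(size=5)
b = 0.0
X_unlabeled = rng.normal(size=(200, 5))
eps, lr = 0.1, 0.1

X_adv, y_hat = virtual_adversarial_pairs(X_unlabeled, w, b, eps)
loss_before = cross_entropy(sigmoid(X_adv @ w + b), y_hat)
w_new, b_new = consistency_step(X_adv, y_hat, w, b, lr)
loss_after = cross_entropy(sigmoid(X_adv @ w_new + b_new), y_hat)
print("disagreement loss before:", loss_before)
print("disagreement loss after: ", loss_after)
```

No true labels appear anywhere in this sketch, which is what makes the approach usable on unlabeled data. In practice such a step would be combined with ordinary supervised training on the labeled examples.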

This encourages the classifier to learn a function that is robust to small changes anywhere along the manifold where the unlabeled data lies.
