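Many blog posts on this topic do not go into much depth, so this post records what I learned along with my own understanding, mainly for my own future reference.

The role of ASPP in DeeplabV3+ and how it differs from DenseASPP

Preface

As the Deeplab series has evolved, DeeplabV3+ has come to be widely regarded as a new high point in semantic segmentation. This post looks at how to modify ASPP, the enhanced feature extraction network of DeeplabV3+.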

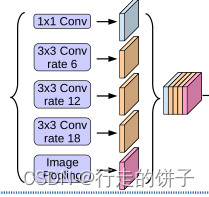

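I. Principle and advantages of ASPP

The authors of DeepLabV3+ made the following optimizations to the ASPP module:

- 1. Tuned the atrous (dilation) rates

- 2. Added a global pooling layer to extract global context

- 3. Introduced BatchNormalization

- 4. Replaced the ASPP convolutions with depth-wise (separable) convolutions to reduce the parameter count and speed up computation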
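Item 4 in the list above is easier to judge with numbers. The snippet below is a minimal sketch, not the author's code: `SeparableAtrousConv` and `count_conv_weights` are hypothetical names introduced only for illustration, and the channel sizes (320 in, 256 out) are assumed to match the MobileNetV2 setup used later in this post. It compares the weight count of one standard 3x3 atrous convolution with a depth-wise separable version.

```python
import torch
import torch.nn as nn


class SeparableAtrousConv(nn.Module):
    """Hypothetical helper: 3x3 depth-wise atrous conv followed by a 1x1 point-wise conv."""

    def __init__(self, dim_in, dim_out, dilation=1, bn_mom=0.1):
        super().__init__()
        self.block = nn.Sequential(
            # Depth-wise: groups=dim_in gives every input channel its own 3x3 filter.
            nn.Conv2d(dim_in, dim_in, 3, 1, padding=dilation, dilation=dilation,
                      groups=dim_in, bias=False),
            nn.BatchNorm2d(dim_in, momentum=bn_mom),
            nn.ReLU(inplace=True),
            # Point-wise: the 1x1 conv mixes channels and sets the output width.
            nn.Conv2d(dim_in, dim_out, 1, bias=False),
            nn.BatchNorm2d(dim_out, momentum=bn_mom),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        return self.block(x)


def count_conv_weights(module):
    """Count convolution weights only, ignoring BatchNorm parameters."""
    return sum(m.weight.numel() for m in module.modules() if isinstance(m, nn.Conv2d))


standard = nn.Conv2d(320, 256, 3, padding=6, dilation=6, bias=False)
separable = SeparableAtrousConv(320, 256, dilation=6)

standard_weights = count_conv_weights(standard)    # 320 * 256 * 3 * 3
separable_weights = count_conv_weights(separable)  # 320 * 3 * 3 + 320 * 256

# padding == dilation keeps the spatial size unchanged for a 3x3 kernel.
x = torch.randn(2, 320, 30, 30)
separable.eval()
with torch.no_grad():
    y = separable(x)

print(standard_weights, separable_weights, tuple(y.shape))
```

With these assumed sizes the separable version needs roughly one ninth of the convolution weights. Note that the ASPP class in this post uses plain `nn.Conv2d` layers, so this saving only applies if the branches are swapped for a block like the one sketched here.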

import torch
import torch.nn as nn
import torch.nn.functional as F


# ASPP feature extraction module (extracts features with atrous convolutions at several dilation rates).
# Note: this implementation uses standard nn.Conv2d layers, not depth-wise separable convolutions.
class ASPP(nn.Module):
    def __init__(self, dim_in, dim_out, rate=1, bn_mom=0.1):
        super(ASPP, self).__init__()
        # Branch 1: 1x1 convolution (dilation has no effect on a 1x1 kernel)
        self.branch1 = nn.Sequential(
            nn.Conv2d(dim_in, dim_out, 1, 1, padding=0, dilation=rate, bias=True),
            # Batch normalization after the convolution: keeps activations from growing
            # large enough to destabilize training, and speeds up convergence
            nn.BatchNorm2d(dim_out, momentum=bn_mom),
            nn.ReLU(inplace=True),
        )
        # Branch 2: 3x3 convolution, dilation 6*rate, padding 6*rate, with bias
        self.branch2 = nn.Sequential(
            nn.Conv2d(dim_in, dim_out, 3, 1, padding=6*rate, dilation=6*rate, bias=True),
            nn.BatchNorm2d(dim_out, momentum=bn_mom),
            nn.ReLU(inplace=True),
        )
        # Branch 3: 3x3 convolution, dilation 12*rate, padding 12*rate, with bias
        self.branch3 = nn.Sequential(
            nn.Conv2d(dim_in, dim_out, 3, 1, padding=12*rate, dilation=12*rate, bias=True),
            nn.BatchNorm2d(dim_out, momentum=bn_mom),
            nn.ReLU(inplace=True),
        )
        # Branch 4: 3x3 convolution, dilation 18*rate, padding 18*rate, with bias
        self.branch4 = nn.Sequential(
            nn.Conv2d(dim_in, dim_out, 3, 1, padding=18*rate, dilation=18*rate, bias=True),
            nn.BatchNorm2d(dim_out, momentum=bn_mom),
            nn.ReLU(inplace=True),
        )
        # Branch 5: plain (non-atrous) 1x1 convolution applied to the globally pooled features
        self.branch5_conv = nn.Conv2d(dim_in, dim_out, 1, 1, 0, bias=True)
        self.branch5_bn = nn.BatchNorm2d(dim_out, momentum=bn_mom)
        self.branch5_relu = nn.ReLU(inplace=True)
        # 1x1 convolution that reduces the concatenated features back to dim_out channels
        self.conv_cat = nn.Sequential(
            nn.Conv2d(dim_out*5, dim_out, 1, 1, padding=0, bias=True),
            nn.BatchNorm2d(dim_out, momentum=bn_mom),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        b, c, row, col = x.size()
        #-----------------------------------------#
        #   Five branches in total
        #-----------------------------------------#
        conv1x1 = self.branch1(x)
        conv3x3_1 = self.branch2(x)
        conv3x3_2 = self.branch3(x)
        conv3x3_3 = self.branch4(x)
        #-----------------------------------------#
        #   Branch 5: global average pooling + convolution
        #-----------------------------------------#
        global_feature = torch.mean(x, 2, True)
        global_feature = torch.mean(global_feature, 3, True)  # average pooling over H and W
        global_feature = self.branch5_conv(global_feature)    # 1x1 convolution
        global_feature = self.branch5_bn(global_feature)
        global_feature = self.branch5_relu(global_feature)
        # Upsample the 1x1 global feature back to the input spatial size
        global_feature = F.interpolate(global_feature, size=(row, col), mode='bilinear', align_corners=True)
        #-----------------------------------------#
        #   Concatenate the five branches,
        #   then fuse them with a 1x1 convolution.
        #-----------------------------------------#
        feature_cat = torch.cat([conv1x1, conv3x3_1, conv3x3_2, conv3x3_3, global_feature], dim=1)  # stack along channels
        result = self.conv_cat(feature_cat)  # fuse features with the 1x1 convolution
        return result

II. Differences between DenseASPP and ASPP

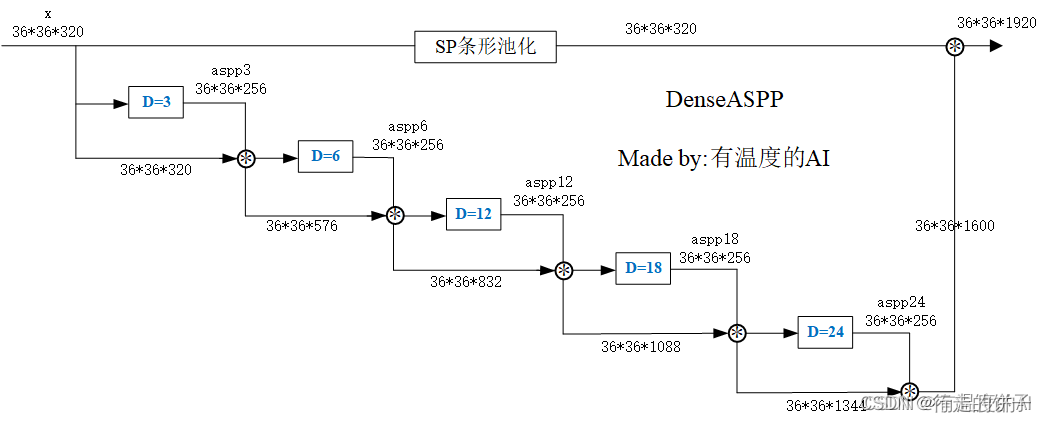

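1. Advantages

A larger receptive field

Denser multi-scale pyramid features

2. Disadvantages

Slower computation, because each atrous layer takes the concatenation of all previous outputs as its input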
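The "larger receptive field" claim can be checked with simple arithmetic. A 3x3 convolution with dilation d covers (3 - 1) * d + 1 pixels per side, and stacking stride-1 layers adds each layer's coverage minus one. The sketch below assumes the rates used in this post (6, 12, 18 in parallel for ASPP with `rate=1`; 3, 6, 12, 18, 24 chained for DenseASPP); the function names are made up for illustration.

```python
def effective_kernel(kernel_size, dilation):
    """Side length covered by one dilated convolution kernel."""
    return (kernel_size - 1) * dilation + 1


def stacked_receptive_field(kernel_size, dilations):
    """Receptive field of stride-1 dilated convolutions applied one after another."""
    rf = 1
    for d in dilations:
        rf += effective_kernel(kernel_size, d) - 1
    return rf


aspp_rates = [6, 12, 18]           # parallel branches: the largest branch sets the maximum
dense_rates = [3, 6, 12, 18, 24]   # chained layers: the longest path goes through all of them

aspp_max = max(effective_kernel(3, d) for d in aspp_rates)
dense_max = stacked_receptive_field(3, dense_rates)

print(aspp_max, dense_max)  # 37 127
```

Because the DenseASPP layers are also concatenated at every step, the output mixes every intermediate receptive field between the smallest and the largest, which is where the "denser" multi-scale pyramid comes from.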

III. How to modify Deeplabv3 to support a DenseASPP network

1. How DenseASPP works

import torch
import torch.nn as nn


# DenseASPP block
# Note: _DenseASPPConv and StripPooling are defined elsewhere in the project and are not shown in this post.
class _DenseASPPBlock(nn.Module):
    def __init__(self, in_channels, inter_channels1, inter_channels2,
                 norm_layer=nn.BatchNorm2d, norm_kwargs=None):
        super(_DenseASPPBlock, self).__init__()
        # Each layer receives the original input plus the outputs of all previous layers,
        # so its input width grows by inter_channels2 per layer.
        self.aspp_3 = _DenseASPPConv(in_channels, inter_channels1, inter_channels2, 3, 0.1,
                                     norm_layer, norm_kwargs)
        self.aspp_6 = _DenseASPPConv(in_channels + inter_channels2 * 1, inter_channels1, inter_channels2, 6, 0.1,
                                     norm_layer, norm_kwargs)
        self.aspp_12 = _DenseASPPConv(in_channels + inter_channels2 * 2, inter_channels1, inter_channels2, 12, 0.1,
                                      norm_layer, norm_kwargs)
        self.aspp_18 = _DenseASPPConv(in_channels + inter_channels2 * 3, inter_channels1, inter_channels2, 18, 0.1,
                                      norm_layer, norm_kwargs)
        self.aspp_24 = _DenseASPPConv(in_channels + inter_channels2 * 4, inter_channels1, inter_channels2, 24, 0.1,
                                      norm_layer, norm_kwargs)
        # Originally hard-coded to 320 (the MobileNetV2 output width); in_channels keeps
        # the strip pooling branch consistent with whatever input width is passed in.
        self.SP = StripPooling(in_channels, up_kwargs={'mode': 'bilinear', 'align_corners': True})

    def forward(self, x):
        # Strip pooling branch on the original input
        x1 = self.SP(x)

        # Dense connections: concatenate each new output in front of everything so far
        aspp3 = self.aspp_3(x)
        x = torch.cat([aspp3, x], dim=1)

        aspp6 = self.aspp_6(x)
        x = torch.cat([aspp6, x], dim=1)

        aspp12 = self.aspp_12(x)
        x = torch.cat([aspp12, x], dim=1)

        aspp18 = self.aspp_18(x)
        x = torch.cat([aspp18, x], dim=1)

        aspp24 = self.aspp_24(x)
        x = torch.cat([aspp24, x], dim=1)

        # Append the strip pooling features
        x = torch.cat([x, x1], dim=1)
        return x


# Print the DenseASPP network
_DenseASPPBlockShow = _DenseASPPBlock(120, 512, 256, norm_layer=nn.BatchNorm2d, norm_kwargs=None)
print(_DenseASPPBlockShow)
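`_DenseASPPConv` is used by the block above, but its definition is not included in this post. The sketch below is an assumption about what such a layer might look like (1x1 bottleneck, then a 3x3 atrous convolution, then dropout, with the `0.1` argument read as a dropout rate); `DenseASPPConvSketch` is a hypothetical name, and the strip pooling branch is left out. It mainly shows how the channel count grows through the dense concatenations.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DenseASPPConvSketch(nn.Module):
    """Assumed layer layout: 1x1 conv -> BN -> ReLU -> 3x3 atrous conv -> BN -> ReLU -> dropout."""

    def __init__(self, in_channels, inter_channels, out_channels, atrous_rate, drop_rate=0.1):
        super().__init__()
        self.layers = nn.Sequential(
            # The 1x1 bottleneck keeps the cost bounded as the concatenated input grows.
            nn.Conv2d(in_channels, inter_channels, 1, bias=False),
            nn.BatchNorm2d(inter_channels),
            nn.ReLU(inplace=True),
            # padding == dilation preserves the spatial size for a 3x3 kernel.
            nn.Conv2d(inter_channels, out_channels, 3, padding=atrous_rate,
                      dilation=atrous_rate, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
        )
        self.drop_rate = drop_rate

    def forward(self, x):
        out = self.layers(x)
        if self.drop_rate > 0:
            out = F.dropout(out, p=self.drop_rate, training=self.training)
        return out


in_channels, inter1, inter2 = 320, 512, 256
rates = [3, 6, 12, 18, 24]

# Layer i sees the original input plus i earlier outputs of width inter2.
layers = nn.ModuleList([
    DenseASPPConvSketch(in_channels + inter2 * i, inter1, inter2, rate)
    for i, rate in enumerate(rates)
])
layers.eval()

x = torch.randn(1, in_channels, 30, 30)
with torch.no_grad():
    for layer in layers:
        x = torch.cat([layer(x), x], dim=1)

out_channels = x.shape[1]  # 320 + 5 * 256
print(out_channels)
```

Under these assumptions the block outputs 1600 channels before the strip pooling features are appended, compared with the 256 channels produced by the ASPP class. A module that is meant to be a drop-in replacement for ASPP would therefore likely need a final 1x1 convolution to project back down to the width the decoder expects.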

2. Modifying the Deeplabv3+ network design to support DenseASPP (the modules can be switched to suit the needs of a given project)

import torch.nn as nn
import torch.nn.functional as F


# DeepLab: network design
# Note: xception, MobileNetV2, ASPP, DenseASPP, Decoderv3 and Decoder_GCN_BR are defined elsewhere in the project.
class DeepLab(nn.Module):
    def __init__(self, num_classes, backbone="Mobilenetv2", encoderaspp="Aspp", decoder="V3", pretrained=True,
                 downsample_factor=16):
        super(DeepLab, self).__init__()
        # -----------------------------------#
        #   Encoder backbone feature extractor (DCNN)
        #   Currently supports Xception and MobileNetV2
        # -----------------------------------#
        if backbone == "Xception":
            # ----------------------------------#
            #   Produces two feature maps
            #   Shallow features  [128,128,256]
            #   Backbone output   [30,30,2048]
            # ----------------------------------#
            self.backbone = xception(downsample_factor=downsample_factor, pretrained=pretrained)
            in_channels = 2048
            two_level_channels = 16
            low_level_channels = 256
        elif backbone == "Mobilenetv2":
            # ----------------------------------#
            #   Produces two feature maps
            #   Shallow features  [128,128,24]
            #   Backbone output   [30,30,320]
            # ----------------------------------#
            self.backbone = MobileNetV2(downsample_factor=downsample_factor, pretrained=pretrained)
            in_channels = 320
            two_level_channels = 16
            low_level_channels = 24
        else:
            raise ValueError('Unsupported backbone - `{}`. Use Mobilenetv2 or Xception.'.format(backbone))

        # -----------------------------------------#
        #   Encoder enhanced feature extraction module (ASPP)
        #   Extracts features with atrous convolutions at several dilation rates
        # -----------------------------------------#
        if encoderaspp == "Aspp":
            # Use ASPP
            self.aspp = ASPP(dim_in=in_channels, dim_out=256, rate=16 // downsample_factor)
        elif encoderaspp == "Denseaspp":
            # Use DenseASPP
            self.aspp = DenseASPP(dim_in=in_channels, dim_mid1=512, dim_mid2=256)
        else:
            # Fixed: the message previously said "Unsupported backbone"
            raise ValueError('Unsupported encoderaspp - `{}`. Use Aspp or Denseaspp.'.format(encoderaspp))

        # ---------------------------------------------------#
        #   Decoder module
        #   The decoder recovers boundary information; this project
        #   refactors it into the following interchangeable decoders
        # ---------------------------------------------------#
        if decoder == "V3":
            # Use the Deeplabv3 decoder
            self.decoder = Decoderv3(low_level_channels=low_level_channels, num_classes=num_classes)
        elif decoder == "V3_GNC_BR":
            # Use my own decoder (v3 + GCN + BR); in theory it extracts boundary information
            # better and gives higher localization and classification accuracy
            self.decoder = Decoder_GCN_BR(low_level_channels=low_level_channels, two_level_channels=two_level_channels,
                                          num_classes=num_classes)
        else:
            # Fixed: the message previously formatted encoderaspp instead of decoder
            raise ValueError('Unsupported decoder - `{}`. Use V3 or V3_GNC_BR.'.format(decoder))

    def forward(self, x):
        H, W = x.size(2), x.size(3)
        # -----------------------------------------#
        #   Get the feature maps
        #   low_level_features: shallow features, processed by convolution in the decoder
        #   x: backbone output, passed to ASPP for enhanced feature extraction
        # -----------------------------------------#
        # Backbone DCNN (MobileNetV2 / Xception). MobileNetV2 relies on depth-wise convolutions
        # and is lighter; choose according to the available hardware.
        the_two_features, low_level_features, the_three_features, the_four_features, x = self.backbone(x)
        # Enhanced feature extraction (ASPP / DenseASPP); DenseASPP extracts from more feature layers
        x = self.aspp(x)
        # The decoder recovers boundary information
        x = self.decoder(x, low_level_features, the_two_features)
        # Upsample so that H and W match the input image size
        x = F.interpolate(x, size=(H, W), mode='bilinear', align_corners=True)
        return x

Summary

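This post mainly covered a simple way to redesign the Deeplab network so that it supports different enhanced feature extraction networks. The next post will cover how to make my own algorithm platform support annotation, training, and inference for Deeplab. The link will go here:

The author is a bit lazy, so please be patient.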

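This post describes the improvements to the ASPP module in DeeplabV3+, including tuned atrous rates, the introduction of BN, and depth-wise convolutions, and contrasts them with the larger receptive field of DenseASPP. It also shows how to design support for a DenseASPP network on top of Deeplabv3.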
