Improvise a Jazz Solo with an LSTM Network

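In this assignment you will implement a model that uses an LSTM to generate music, and at the end you will be able to listen to your own music. You will learn to: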

- Apply an LSTM to music generation.
- Generate your own jazz music with deep learning.

Now load the libraries. music21 may not be installed in your environment; if it isn't, run pip install msgpack and pip install music21 from the command line.

from __future__ import print_function

import IPython

import sys

from music21 import *

import numpy as np

from grammar import *

from qa import *

from preprocess import *

from music_utils import *

from data_utils import *

from keras.models import load_model, Model

from keras.layers import Dense, Activation, Dropout, Input, LSTM, Reshape, Lambda, RepeatVector

from keras.initializers import glorot_uniform

from keras.utils import to_categorical

from keras.optimizers import Adam

from keras import backend as K

1. Problem statement

Use an LSTM to generate jazz music.

1.1 Dataset

IPython.display.Audio('./data/30s_seq.mp3')

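We have already preprocessed the musical data to render it in terms of musical "values". You can informally think of each "value" as a note, which comprises a pitch and a duration.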

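- For example, if you press down a specific piano key for 0.5 seconds, you have just played a note. In music theory, a "value" is actually more complicated than this: specifically, it also captures the information needed to play multiple notes at the same time.
- For example, when playing a piece you might press down two piano keys at once (playing multiple notes at the same time produces what is called a "chord"). But we don't need to worry about the details of music theory here.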

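For the purpose of this assignment, all you need to know is that we will obtain a dataset of values and train an RNN model to generate sequences of values.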

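Our music generation system will use 78 unique values. Run the following code to load the raw music data and preprocess it into values. This might take a few minutes.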

X, Y, n_values, indices_values = load_music_utils()

print('shape of X:', X.shape)

print('number of training examples:', X.shape[0])

print('Tx (length of sequence):', X.shape[1])

print('total # of unique values:', n_values)

print('Shape of Y:', Y.shape)

shape of X: (60, 30, 78)

number of training examples: 60

Tx (length of sequence): 30

total # of unique values: 78

Shape of Y: (30, 60, 78)

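A quick explanation of the dataset:

- X: an array of shape (m, \(T_x\), 78). We have m training examples, each of which is a snippet of \(T_x = 30\) musical values. At each time step, the input is one of 78 possible values, represented as a one-hot vector. Thus X[i,t,:] is the one-hot vector representing the value of the i-th example at time t.
- Y: essentially the same as X, but shifted one step to the left. As in the dinosaur-name generation assignment, we want a network that uses the previous values to predict the next value, so our sequence model will try to predict \(y^{\langle t \rangle}\) given \(x^{\langle 1\rangle}, \ldots, x^{\langle t \rangle}\). However, the data in Y is reordered to have shape \((T_y, m, 78)\), where \(T_y = T_x\). This format makes it more convenient to feed the data to the LSTM later.
- n_values: the number of unique values in this dataset. This should be 78.
- indices_values: a dictionary mapping the integers 0-77 to musical values.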
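The relationship between X and Y described above can be sketched in NumPy. This is a hedged illustration on random data, not the course's load_music_utils(): the array name `indices` is hypothetical, and zero-padding the final target step is an assumption (it is consistent with dense_1_loss_30 being 0 in the training log).

```python
import numpy as np

n_values = 78   # number of unique musical values
m, Tx = 60, 30  # training examples and sequence length (from the output above)

# Hypothetical index data: indices[i, t] is the value id (0..77) at step t of example i.
rng = np.random.default_rng(0)
indices = rng.integers(0, n_values, size=(m, Tx))

# One-hot encode: X[i, t, :] has a single 1 at position indices[i, t].
X = np.zeros((m, Tx, n_values))
X[np.arange(m)[:, None], np.arange(Tx)[None, :], indices] = 1.0

# Shift left by one step: the target at step t is the input at step t+1.
# Assumption: the final step has no "next value", so it is left as all zeros.
Y_shifted = np.zeros_like(X)
Y_shifted[:, :-1, :] = X[:, 1:, :]

# Reorder to (Ty, m, n_values) so that Y[t] holds all targets for time step t.
Y = np.transpose(Y_shifted, (1, 0, 2))

# A model with Tx separate outputs takes one target array per output.
Y_list = list(Y)
print(X.shape, Y.shape, len(Y_list), Y_list[0].shape)
# (60, 30, 78) (30, 60, 78) 30 (60, 78)
```

The last line also shows why model.fit later receives list(Y): each of the 30 softmax outputs is matched with one (60, 78) target array.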

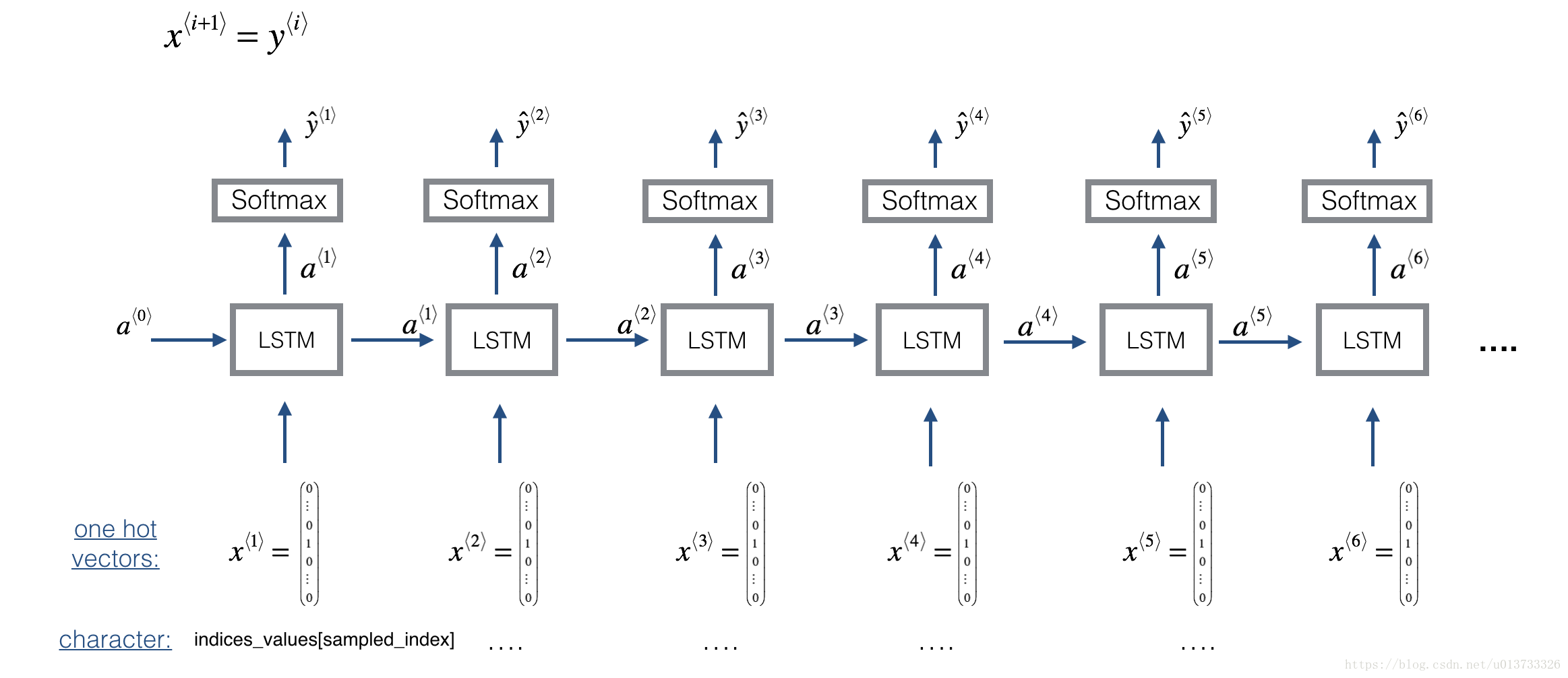

1.2 Overview of our model

Here is the architecture of the model we will use:

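We will train the model on random snippets of 30 values taken from a much longer piece of music. Thus, we won't bother to set the first input to \(x^{\langle 1 \rangle} = \vec{0}\), since most of these audio snippets start somewhere in the middle of a piece of music. We give every snippet the same length \(T_x = 30\) to make vectorization easier.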
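A minimal sketch of drawing fixed-length training snippets, assuming a piece is already encoded as a plain list of value indices (the function name sample_windows and the stand-in data are hypothetical, not part of the course code):

```python
import random

Tx = 30  # length of every training snippet

def sample_windows(piece, m, Tx=Tx, seed=None):
    """Draw m random contiguous windows of Tx values from one long piece.

    `piece` is a list of value indices (hypothetical input format).
    Windows usually start mid-piece, so there is no "start of song" input.
    """
    if len(piece) < Tx:
        raise ValueError("piece is shorter than Tx")
    rng = random.Random(seed)
    windows = []
    for _ in range(m):
        start = rng.randrange(0, len(piece) - Tx + 1)  # any valid start position
        windows.append(piece[start:start + Tx])
    return windows

piece = [i % 78 for i in range(500)]  # stand-in for a long encoded solo
snippets = sample_windows(piece, m=60, seed=1)
print(len(snippets), {len(s) for s in snippets})  # 60 {30}
```

Because every window has exactly Tx values, the snippets stack directly into one (m, Tx) array before one-hot encoding.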

2. Building the model

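In this part you will build and train a model that learns musical patterns. The model takes X of shape \((m, T_x, 78)\) as input and is trained against targets Y of shape \((T_y, m, 78)\). We use an LSTM with a 64-dimensional hidden state, so n_a = 64.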

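Here's how to create a Keras model with multiple inputs and outputs. The approach depends on whether the entire input sequence \(x^{\langle 1 \rangle}, x^{\langle 2 \rangle}, \ldots, x^{\langle T_x \rangle}\) is given in advance, even at test time:

- If it is (for example, the inputs are words and the output is a label), Keras has simple built-in functions for building the model.
- For sequence generation, however, we don't know all the values of \(x^{\langle t\rangle}\) in advance at test time. Instead, we generate them one at a time using \(x^{\langle t\rangle} = y^{\langle t-1 \rangle}\), so you need to implement your own for-loop to iterate over the time steps.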
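To make \(x^{\langle t\rangle} = y^{\langle t-1 \rangle}\) concrete, here is a toy generation loop. A stateless, untrained random "predictor" (hypothetical; the names W, predict_next and generate are illustrative) stands in for the network; a real LSTM would also carry its hidden state a and cell state c from step to step. Picking the argmax is a simplifying choice; sampling from the predicted distribution is a common alternative.

```python
import numpy as np

n_values = 78
rng = np.random.default_rng(0)

# Hypothetical stand-in for a trained network: maps the previous one-hot value
# to a probability distribution over the next value (softmax of a random matrix row).
W = rng.normal(size=(n_values, n_values))

def predict_next(x_prev):
    logits = x_prev @ W
    p = np.exp(logits - logits.max())
    return p / p.sum()

def generate(first_index, Ty):
    """Greedy generation: the prediction at step t becomes the input at step t+1."""
    x = np.zeros(n_values)
    x[first_index] = 1.0
    generated = [first_index]
    for _ in range(Ty - 1):
        y = predict_next(x)        # y<t>: distribution over the next value
        idx = int(np.argmax(y))    # pick the most likely value
        x = np.zeros(n_values)
        x[idx] = 1.0               # x<t+1> = y<t>, re-encoded as one-hot
        generated.append(idx)
    return generated

print(generate(first_index=0, Ty=10))
```

This loop cannot be replaced by a single call on a precomputed sequence, because each input only exists after the previous prediction has been made.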

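The function djmodel() will call the LSTM layer \(T_x\) times using a for-loop, and it is important that all \(T_x\) copies share the same weights. That is, it should not re-initialize the weights every time: the \(T_x\) steps should use one shared set of weights. The key steps for implementing layers with shareable weights in Keras are:

- Define the layer objects (we will use global variables for this).
- Call these objects when propagating the input.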
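The weight-sharing idea can be shown without Keras. In this NumPy sketch, the hypothetical class SharedDense creates its weights once when the object is defined, and every call reuses them. This is the same pattern as defining reshapor, LSTM_cell and densor once and calling them inside the loop.

```python
import numpy as np

class SharedDense:
    """Minimal layer object: weights are created once, in __init__ (hypothetical class)."""
    def __init__(self, n_in, n_out, seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(scale=0.1, size=(n_in, n_out))
        self.b = np.zeros(n_out)

    def __call__(self, x):
        # Every call reuses the same self.W and self.b.
        return x @ self.W + self.b

    def n_params(self):
        return self.W.size + self.b.size

Tx, n_in, n_out = 30, 78, 78
layer = SharedDense(n_in, n_out)               # 1) define the layer object once
x = np.ones((4, Tx, n_in))
outs = [layer(x[:, t, :]) for t in range(Tx)]  # 2) call it at every time step

print(len(outs), layer.n_params())  # 30 6162 (one set of weights)
# Creating SharedDense(...) inside the loop would instead allocate Tx * 6162 parameters.
```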

We define the layer objects we need as global variables. Run the code below and make sure you understand what each layer does: Reshape(), LSTM(), Dense().

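n_a = 64  # number of dimensions of the LSTM hidden state

reshapor = Reshape((1, 78))                     # Used in Step 2.B of djmodel(), below
LSTM_cell = LSTM(n_a, return_state = True)      # Used in Step 2.C
densor = Dense(n_values, activation='softmax')  # Used in Step 2.D

Each of reshapor, LSTM_cell and densor is a layer object that you can use to implement djmodel(). To propagate a Keras tensor object X through one of these layers, use layer_object(X), or layer_object([X, Y]) if the layer requires multiple inputs. For example, reshapor(X) propagates X through the Reshape((1, 78)) layer defined above.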
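A NumPy sketch of the shapes involved. It relies on the fact that the batch dimension is left out of Reshape's target shape (as in Keras), so Reshape((1, 78)) turns a batch of time-step vectors of shape (m, 78) into length-1 sequences of shape (m, 1, 78). The random data and weights are placeholders.

```python
import numpy as np

m, n_values, n_a = 60, 78, 64
rng = np.random.default_rng(0)

# Step 2.A output: one time step for every example, shape (m, n_values).
x_t = rng.random((m, n_values))

# Reshape((1, 78)) leaves the batch axis alone, so (m, 78) -> (m, 1, 78):
# a length-1 sequence in the 3-D (batch, timesteps, features) layout an LSTM expects.
x_seq = x_t.reshape(m, 1, n_values)

# Dense(n_values, activation='softmax') applied to a hidden state of shape (m, n_a).
a = rng.normal(size=(m, n_a))
W, b = rng.normal(size=(n_a, n_values)), np.zeros(n_values)
logits = a @ W + b
probs = np.exp(logits - logits.max(axis=1, keepdims=True))
probs /= probs.sum(axis=1, keepdims=True)

print(x_seq.shape, probs.shape)  # (60, 1, 78) (60, 78)
```

Each row of probs is a probability distribution over the 78 values, which is what categorical cross-entropy compares against a one-hot target.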

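Exercise: Implement djmodel(). You will need to carry out two steps:

- Step 1: Create an empty list "outputs" to save the outputs of the LSTM cell at every time step.
- Step 2: Loop for \(t \in 1, \ldots, T_x\):

A. Select the "t"th time-step vector from X. The shape of this selection should be (78,). To do so, create a custom Lambda layer in Keras:

x = Lambda(lambda x: X[:,t,:])(X)

Look over the Keras documentation to see what this does. It creates a "temporary" or "unnamed" function (that is what Lambda functions are) that extracts the appropriate one-hot vector, and turns this function into a Keras Layer object to apply to X.

B. Reshape x to be (1, 78). You may find the reshapor() layer helpful.

C. Run x through one step of LSTM_cell. Remember to initialize LSTM_cell with the previous step's hidden state \(a\) and cell state \(c\):

a, _, c = LSTM_cell(input_x, initial_state=[previous hidden state, previous cell state])

D. Propagate the LSTM's output activation through a dense+softmax layer using densor.

E. Append the predicted value to the "outputs" list.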
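For intuition, the following NumPy sketch mirrors steps A to E with a hand-written LSTM cell. It is not Keras' implementation: the class LSTMCell, the gate ordering and the random initialization are assumptions. Step B is unnecessary here because this cell accepts (m, 78) inputs directly. The parameter count matches the standard LSTM formula \(4\,((n_x + n_a)\,n_a + n_a)\) for \(n_x = 78\), \(n_a = 64\).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class LSTMCell:
    """Single-step LSTM with one shared set of weights (illustrative, not Keras' code)."""
    def __init__(self, n_x, n_a, seed=0):
        rng = np.random.default_rng(seed)
        # Stacked weights for the input, forget, candidate and output gates.
        self.W = rng.normal(scale=0.1, size=(n_x + n_a, 4 * n_a))
        self.b = np.zeros(4 * n_a)

    def __call__(self, x, a_prev, c_prev):
        z = np.concatenate([x, a_prev], axis=1) @ self.W + self.b
        i, f, g, o = np.split(z, 4, axis=1)
        c = sigmoid(f) * c_prev + sigmoid(i) * np.tanh(g)  # new cell state
        a = sigmoid(o) * np.tanh(c)                        # new hidden state
        return a, c

def softmax(z):
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def dj_forward(X, a0, c0, cell, W_y, b_y):
    """Mirror of djmodel's loop: the same cell and dense weights are used at every t."""
    a, c = a0, c0
    outputs = []                                # Step 1
    for t in range(X.shape[1]):                 # Step 2
        x = X[:, t, :]                          # A: shape (m, n_values)
        a, c = cell(x, a, c)                    # C: one LSTM step from the previous (a, c)
        outputs.append(softmax(a @ W_y + b_y))  # D + E
    return outputs

m, Tx, n_values, n_a = 60, 30, 78, 64
rng = np.random.default_rng(1)
X = np.eye(n_values)[rng.integers(0, n_values, size=(m, Tx))]  # one-hot inputs
cell = LSTMCell(n_values, n_a)
W_y, b_y = rng.normal(scale=0.1, size=(n_a, n_values)), np.zeros(n_values)

outs = dj_forward(X, np.zeros((m, n_a)), np.zeros((m, n_a)), cell, W_y, b_y)
print(len(outs), outs[0].shape)   # 30 (60, 78)
print(cell.W.size + cell.b.size)  # 36608 = 4 * ((78 + 64) * 64 + 64)
```

Because cell, W_y and b_y are created once outside the loop, all 30 time steps share them, which is what djmodel() achieves with the global layer objects.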

# GRADED FUNCTION: djmodel

def djmodel(Tx, n_a, n_values):
    """
    Implement the model

    Arguments:
    Tx -- length of the sequence in a corpus
    n_a -- the number of activations used in our model
    n_values -- number of unique values in the music data (78)

    Returns:
    model -- a Keras model with inputs [X, a0, c0] and a list of Tx softmax outputs
    """

    # Define the input of your model with a shape
    X = Input(shape=(Tx, n_values))

    # Define s0, initial hidden state for the decoder LSTM
    a0 = Input(shape=(n_a,), name='a0')
    c0 = Input(shape=(n_a,), name='c0')
    a = a0
    c = c0

    ### START CODE HERE ###
    # Step 1: Create empty list to append the outputs while you iterate (≈1 line)
    outputs = []

    # Step 2: Loop
    for t in range(Tx):

        # Step 2.A: select the "t"th time step vector from X.
        # Binding t as a default argument (t=t) fixes its value for this layer,
        # so the Lambda does not depend on the loop variable's final value.
        x = Lambda(lambda z, t=t: z[:, t, :])(X)

        # Step 2.B: Use reshapor to reshape x to be (1, n_values) (≈1 line)
        x = reshapor(x)

        # Step 2.C: Perform one step of the LSTM_cell
        a, _, c = LSTM_cell(x, initial_state=[a, c])

        # Step 2.D: Apply densor to the hidden state output of LSTM_Cell
        out = densor(a)

        # Step 2.E: add the output to "outputs"
        outputs.append(out)

    # Step 3: Create model instance
    model = Model(inputs=[X, a0, c0], outputs=outputs)

    ### END CODE HERE ###

    return model

Define the model with Tx = 30, n_a = 64 (the dimension of the LSTM activations) and n_values = 78:

model = djmodel(Tx = 30 , n_a = 64, n_values = 78)

Compile the model for training, using the Adam optimizer and categorical cross-entropy loss:

opt = Adam(lr=0.01, beta_1=0.9, beta_2=0.999, decay=0.01)

model.compile(optimizer=opt, loss='categorical_crossentropy', metrics=['accuracy'])

Initialize the LSTM's initial states a0 and c0 to zero:

m = 60

a0 = np.zeros((m, n_a))

c0 = np.zeros((m, n_a))

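# print(X.shape) # (60, 30, 78)

Now fit the model. Y is converted to a list first, because the cost function expects Y in this format (one list item per time step). list(Y) is a list of 30 items, each a matrix of shape (60, 78). Let's train for 100 epochs: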

# print(Y.shape, len(list(Y))) # (30, 60, 78) 30

model.fit([X, a0, c0], list(Y), epochs=100)

(30, 60, 78) 30

Epoch 1/100

60/60 [==============================] - 19s 314ms/step - loss: 125.7977 - dense_1_loss_1: 4.3546 - dense_1_loss_2: 4.3526 - dense_1_loss_3: 4.3441 - dense_1_loss_4: 4.3390 - dense_1_loss_5: 4.3357 - dense_1_loss_6: 4.3407 - dense_1_loss_7: 4.3445 - dense_1_loss_8: 4.3307 - dense_1_loss_9: 4.3382 - dense_1_loss_10: 4.3404 - dense_1_loss_11: 4.3329 - dense_1_loss_12: 4.3320 - dense_1_loss_13: 4.3400 - dense_1_loss_14: 4.3372 - dense_1_loss_15: 4.3397 - dense_1_loss_16: 4.3303 - dense_1_loss_17: 4.3366 - dense_1_loss_18: 4.3466 - dense_1_loss_19: 4.3298 - dense_1_loss_20: 4.3378 - dense_1_loss_21: 4.3367 - dense_1_loss_22: 4.3380 - dense_1_loss_23: 4.3339 - dense_1_loss_24: 4.3301 - dense_1_loss_25: 4.3423 - dense_1_loss_26: 4.3403 - dense_1_loss_27: 4.3303 - dense_1_loss_28: 4.3267 - dense_1_loss_29: 4.3359 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.0667 - dense_1_acc_2: 0.0667 - dense_1_acc_3: 0.0500 - dense_1_acc_4: 0.0833 - dense_1_acc_5: 0.1333 - dense_1_acc_6: 0.0667 - dense_1_acc_7: 0.0167 - dense_1_acc_8: 0.0667 - dense_1_acc_9: 0.0500 - dense_1_acc_10: 0.0167 - dense_1_acc_11: 0.0500 - dense_1_acc_12: 0.0667 - dense_1_acc_13: 0.0333 - dense_1_acc_14: 0.0833 - dense_1_acc_15: 0.0833 - dense_1_acc_16: 0.1000 - dense_1_acc_17: 0.0500 - dense_1_acc_18: 0.0333 - dense_1_acc_19: 0.0667 - dense_1_acc_20: 0.0667 - dense_1_acc_21: 0.0333 - dense_1_acc_22: 0.0333 - dense_1_acc_23: 0.0833 - dense_1_acc_24: 0.1000 - dense_1_acc_25: 0.0667 - dense_1_acc_26: 0.0833 - dense_1_acc_27: 0.1500 - dense_1_acc_28: 0.1333 - dense_1_acc_29: 0.1000 - dense_1_acc_30: 0.0167

Epoch 2/100

60/60 [==============================] - 0s 3ms/step - loss: 122.6379 - dense_1_loss_1: 4.3326 - dense_1_loss_2: 4.3086 - dense_1_loss_3: 4.2778 - dense_1_loss_4: 4.2739 - dense_1_loss_5: 4.2504 - dense_1_loss_6: 4.2621 - dense_1_loss_7: 4.2517 - dense_1_loss_8: 4.2252 - dense_1_loss_9: 4.2435 - dense_1_loss_10: 4.2262 - dense_1_loss_11: 4.2214 - dense_1_loss_12: 4.2263 - dense_1_loss_13: 4.2185 - dense_1_loss_14: 4.2061 - dense_1_loss_15: 4.1950 - dense_1_loss_16: 4.2028 - dense_1_loss_17: 4.2081 - dense_1_loss_18: 4.2314 - dense_1_loss_19: 4.1873 - dense_1_loss_20: 4.2280 - dense_1_loss_21: 4.2331 - dense_1_loss_22: 4.2040 - dense_1_loss_23: 4.2199 - dense_1_loss_24: 4.2204 - dense_1_loss_25: 4.2235 - dense_1_loss_26: 4.1923 - dense_1_loss_27: 4.1657 - dense_1_loss_28: 4.1849 - dense_1_loss_29: 4.2171 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.0667 - dense_1_acc_2: 0.1500 - dense_1_acc_3: 0.1500 - dense_1_acc_4: 0.1500 - dense_1_acc_5: 0.2667 - dense_1_acc_6: 0.1333 - dense_1_acc_7: 0.1333 - dense_1_acc_8: 0.2167 - dense_1_acc_9: 0.1500 - dense_1_acc_10: 0.1833 - dense_1_acc_11: 0.1333 - dense_1_acc_12: 0.1500 - dense_1_acc_13: 0.1833 - dense_1_acc_14: 0.1667 - dense_1_acc_15: 0.2000 - dense_1_acc_16: 0.2000 - dense_1_acc_17: 0.1833 - dense_1_acc_18: 0.1500 - dense_1_acc_19: 0.1833 - dense_1_acc_20: 0.1167 - dense_1_acc_21: 0.1500 - dense_1_acc_22: 0.1333 - dense_1_acc_23: 0.1333 - dense_1_acc_24: 0.2000 - dense_1_acc_25: 0.1833 - dense_1_acc_26: 0.1833 - dense_1_acc_27: 0.2000 - dense_1_acc_28: 0.2000 - dense_1_acc_29: 0.1500 - dense_1_acc_30: 0.0000e+00

Epoch 3/100

60/60 [==============================] - 0s 3ms/step - loss: 116.5064 - dense_1_loss_1: 4.3092 - dense_1_loss_2: 4.2572 - dense_1_loss_3: 4.1908 - dense_1_loss_4: 4.1687 - dense_1_loss_5: 4.1198 - dense_1_loss_6: 4.1413 - dense_1_loss_7: 4.0898 - dense_1_loss_8: 4.0162 - dense_1_loss_9: 4.0055 - dense_1_loss_10: 3.9203 - dense_1_loss_11: 3.8927 - dense_1_loss_12: 4.0545 - dense_1_loss_13: 3.9829 - dense_1_loss_14: 3.8713 - dense_1_loss_15: 3.8982 - dense_1_loss_16: 3.9528 - dense_1_loss_17: 4.0107 - dense_1_loss_18: 4.1170 - dense_1_loss_19: 3.8229 - dense_1_loss_20: 4.0493 - dense_1_loss_21: 4.1200 - dense_1_loss_22: 3.9718 - dense_1_loss_23: 4.0150 - dense_1_loss_24: 3.9912 - dense_1_loss_25: 4.0960 - dense_1_loss_26: 3.7427 - dense_1_loss_27: 3.7657 - dense_1_loss_28: 3.8336 - dense_1_loss_29: 4.0994 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.1000 - dense_1_acc_2: 0.1667 - dense_1_acc_3: 0.1500 - dense_1_acc_4: 0.1333 - dense_1_acc_5: 0.2333 - dense_1_acc_6: 0.0500 - dense_1_acc_7: 0.1333 - dense_1_acc_8: 0.1833 - dense_1_acc_9: 0.1000 - dense_1_acc_10: 0.1333 - dense_1_acc_11: 0.0833 - dense_1_acc_12: 0.0833 - dense_1_acc_13: 0.0833 - dense_1_acc_14: 0.1167 - dense_1_acc_15: 0.1000 - dense_1_acc_16: 0.0500 - dense_1_acc_17: 0.1333 - dense_1_acc_18: 0.0667 - dense_1_acc_19: 0.1000 - dense_1_acc_20: 0.0333 - dense_1_acc_21: 0.0667 - dense_1_acc_22: 0.0667 - dense_1_acc_23: 0.0500 - dense_1_acc_24: 0.0667 - dense_1_acc_25: 0.0333 - dense_1_acc_26: 0.1167 - dense_1_acc_27: 0.1333 - dense_1_acc_28: 0.0833 - dense_1_acc_29: 0.0833 - dense_1_acc_30: 0.0000e+00

Epoch 4/100

60/60 [==============================] - 0s 3ms/step - loss: 112.5397 - dense_1_loss_1: 4.2875 - dense_1_loss_2: 4.2067 - dense_1_loss_3: 4.0999 - dense_1_loss_4: 4.0628 - dense_1_loss_5: 3.9631 - dense_1_loss_6: 3.9754 - dense_1_loss_7: 3.9326 - dense_1_loss_8: 3.7577 - dense_1_loss_9: 3.7947 - dense_1_loss_10: 3.6684 - dense_1_loss_11: 3.7238 - dense_1_loss_12: 3.9494 - dense_1_loss_13: 3.7782 - dense_1_loss_14: 3.6621 - dense_1_loss_15: 3.7522 - dense_1_loss_16: 3.7304 - dense_1_loss_17: 3.9046 - dense_1_loss_18: 3.9039 - dense_1_loss_19: 3.6987 - dense_1_loss_20: 3.9690 - dense_1_loss_21: 4.0111 - dense_1_loss_22: 3.8870 - dense_1_loss_23: 3.8095 - dense_1_loss_24: 3.7872 - dense_1_loss_25: 3.9636 - dense_1_loss_26: 3.6922 - dense_1_loss_27: 3.7426 - dense_1_loss_28: 3.7778 - dense_1_loss_29: 4.0477 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.1000 - dense_1_acc_2: 0.1500 - dense_1_acc_3: 0.2000 - dense_1_acc_4: 0.1667 - dense_1_acc_5: 0.2167 - dense_1_acc_6: 0.1333 - dense_1_acc_7: 0.1333 - dense_1_acc_8: 0.1667 - dense_1_acc_9: 0.1500 - dense_1_acc_10: 0.1167 - dense_1_acc_11: 0.1833 - dense_1_acc_12: 0.0833 - dense_1_acc_13: 0.1500 - dense_1_acc_14: 0.1667 - dense_1_acc_15: 0.0833 - dense_1_acc_16: 0.1333 - dense_1_acc_17: 0.0833 - dense_1_acc_18: 0.1000 - dense_1_acc_19: 0.0833 - dense_1_acc_20: 0.0000e+00 - dense_1_acc_21: 0.0500 - dense_1_acc_22: 0.1000 - dense_1_acc_23: 0.0833 - dense_1_acc_24: 0.0333 - dense_1_acc_25: 0.0667 - dense_1_acc_26: 0.1167 - dense_1_acc_27: 0.0833 - dense_1_acc_28: 0.1333 - dense_1_acc_29: 0.0333 - dense_1_acc_30: 0.0000e+00

Epoch 5/100

60/60 [==============================] - 0s 2ms/step - loss: 109.3722 - dense_1_loss_1: 4.2683 - dense_1_loss_2: 4.1588 - dense_1_loss_3: 4.0308 - dense_1_loss_4: 3.9839 - dense_1_loss_5: 3.8596 - dense_1_loss_6: 3.8590 - dense_1_loss_7: 3.8689 - dense_1_loss_8: 3.6674 - dense_1_loss_9: 3.6728 - dense_1_loss_10: 3.5623 - dense_1_loss_11: 3.6424 - dense_1_loss_12: 3.8932 - dense_1_loss_13: 3.6310 - dense_1_loss_14: 3.5967 - dense_1_loss_15: 3.7081 - dense_1_loss_16: 3.6147 - dense_1_loss_17: 3.7010 - dense_1_loss_18: 3.7351 - dense_1_loss_19: 3.5656 - dense_1_loss_20: 3.7975 - dense_1_loss_21: 3.7712 - dense_1_loss_22: 3.7415 - dense_1_loss_23: 3.6773 - dense_1_loss_24: 3.6429 - dense_1_loss_25: 3.8775 - dense_1_loss_26: 3.5570 - dense_1_loss_27: 3.6268 - dense_1_loss_28: 3.7597 - dense_1_loss_29: 3.9010 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.1000 - dense_1_acc_2: 0.1000 - dense_1_acc_3: 0.1500 - dense_1_acc_4: 0.1500 - dense_1_acc_5: 0.1500 - dense_1_acc_6: 0.0500 - dense_1_acc_7: 0.1000 - dense_1_acc_8: 0.1000 - dense_1_acc_9: 0.1500 - dense_1_acc_10: 0.1000 - dense_1_acc_11: 0.1167 - dense_1_acc_12: 0.0500 - dense_1_acc_13: 0.1167 - dense_1_acc_14: 0.1333 - dense_1_acc_15: 0.1333 - dense_1_acc_16: 0.1667 - dense_1_acc_17: 0.2000 - dense_1_acc_18: 0.1333 - dense_1_acc_19: 0.1500 - dense_1_acc_20: 0.1167 - dense_1_acc_21: 0.1000 - dense_1_acc_22: 0.1333 - dense_1_acc_23: 0.1167 - dense_1_acc_24: 0.0667 - dense_1_acc_25: 0.1167 - dense_1_acc_26: 0.1667 - dense_1_acc_27: 0.0833 - dense_1_acc_28: 0.1000 - dense_1_acc_29: 0.0833 - dense_1_acc_30: 0.0000e+00

Epoch 6/100

60/60 [==============================] - 0s 2ms/step - loss: 108.0867 - dense_1_loss_1: 4.2509 - dense_1_loss_2: 4.1168 - dense_1_loss_3: 3.9615 - dense_1_loss_4: 3.9062 - dense_1_loss_5: 3.7630 - dense_1_loss_6: 3.7685 - dense_1_loss_7: 3.7983 - dense_1_loss_8: 3.5817 - dense_1_loss_9: 3.5890 - dense_1_loss_10: 3.5109 - dense_1_loss_11: 3.5797 - dense_1_loss_12: 3.8280 - dense_1_loss_13: 3.6232 - dense_1_loss_14: 3.6211 - dense_1_loss_15: 3.6698 - dense_1_loss_16: 3.6198 - dense_1_loss_17: 3.6469 - dense_1_loss_18: 3.6950 - dense_1_loss_19: 3.6232 - dense_1_loss_20: 3.7699 - dense_1_loss_21: 3.7520 - dense_1_loss_22: 3.6760 - dense_1_loss_23: 3.6543 - dense_1_loss_24: 3.5639 - dense_1_loss_25: 3.8296 - dense_1_loss_26: 3.4468 - dense_1_loss_27: 3.6168 - dense_1_loss_28: 3.8022 - dense_1_loss_29: 3.8218 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.1000 - dense_1_acc_2: 0.0833 - dense_1_acc_3: 0.1500 - dense_1_acc_4: 0.1500 - dense_1_acc_5: 0.1333 - dense_1_acc_6: 0.0500 - dense_1_acc_7: 0.1000 - dense_1_acc_8: 0.1000 - dense_1_acc_9: 0.1333 - dense_1_acc_10: 0.1167 - dense_1_acc_11: 0.0833 - dense_1_acc_12: 0.0500 - dense_1_acc_13: 0.1000 - dense_1_acc_14: 0.1167 - dense_1_acc_15: 0.0667 - dense_1_acc_16: 0.1500 - dense_1_acc_17: 0.2333 - dense_1_acc_18: 0.0333 - dense_1_acc_19: 0.1500 - dense_1_acc_20: 0.1333 - dense_1_acc_21: 0.1333 - dense_1_acc_22: 0.0667 - dense_1_acc_23: 0.1167 - dense_1_acc_24: 0.0833 - dense_1_acc_25: 0.0833 - dense_1_acc_26: 0.1500 - dense_1_acc_27: 0.1000 - dense_1_acc_28: 0.1000 - dense_1_acc_29: 0.1167 - dense_1_acc_30: 0.0000e+00

Epoch 7/100

60/60 [==============================] - 0s 2ms/step - loss: 103.8930 - dense_1_loss_1: 4.2382 - dense_1_loss_2: 4.0857 - dense_1_loss_3: 3.9053 - dense_1_loss_4: 3.8487 - dense_1_loss_5: 3.6737 - dense_1_loss_6: 3.7210 - dense_1_loss_7: 3.7256 - dense_1_loss_8: 3.4510 - dense_1_loss_9: 3.5242 - dense_1_loss_10: 3.4234 - dense_1_loss_11: 3.4343 - dense_1_loss_12: 3.6753 - dense_1_loss_13: 3.4377 - dense_1_loss_14: 3.3464 - dense_1_loss_15: 3.5030 - dense_1_loss_16: 3.4224 - dense_1_loss_17: 3.4194 - dense_1_loss_18: 3.5219 - dense_1_loss_19: 3.3465 - dense_1_loss_20: 3.5958 - dense_1_loss_21: 3.5271 - dense_1_loss_22: 3.4739 - dense_1_loss_23: 3.4422 - dense_1_loss_24: 3.4390 - dense_1_loss_25: 3.6897 - dense_1_loss_26: 3.3513 - dense_1_loss_27: 3.4474 - dense_1_loss_28: 3.6011 - dense_1_loss_29: 3.6216 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.1000 - dense_1_acc_2: 0.1667 - dense_1_acc_3: 0.2000 - dense_1_acc_4: 0.1833 - dense_1_acc_5: 0.2833 - dense_1_acc_6: 0.0833 - dense_1_acc_7: 0.1333 - dense_1_acc_8: 0.1833 - dense_1_acc_9: 0.1667 - dense_1_acc_10: 0.1333 - dense_1_acc_11: 0.1667 - dense_1_acc_12: 0.1000 - dense_1_acc_13: 0.1833 - dense_1_acc_14: 0.2000 - dense_1_acc_15: 0.1833 - dense_1_acc_16: 0.2000 - dense_1_acc_17: 0.2500 - dense_1_acc_18: 0.1333 - dense_1_acc_19: 0.1833 - dense_1_acc_20: 0.2167 - dense_1_acc_21: 0.1167 - dense_1_acc_22: 0.1333 - dense_1_acc_23: 0.1500 - dense_1_acc_24: 0.0667 - dense_1_acc_25: 0.1333 - dense_1_acc_26: 0.2333 - dense_1_acc_27: 0.1167 - dense_1_acc_28: 0.1000 - dense_1_acc_29: 0.1167 - dense_1_acc_30: 0.0000e+00

Epoch 8/100

60/60 [==============================] - 0s 2ms/step - loss: 100.5637 - dense_1_loss_1: 4.2247 - dense_1_loss_2: 4.0484 - dense_1_loss_3: 3.8486 - dense_1_loss_4: 3.7777 - dense_1_loss_5: 3.5875 - dense_1_loss_6: 3.6638 - dense_1_loss_7: 3.6380 - dense_1_loss_8: 3.3351 - dense_1_loss_9: 3.4310 - dense_1_loss_10: 3.3314 - dense_1_loss_11: 3.3658 - dense_1_loss_12: 3.5451 - dense_1_loss_13: 3.3172 - dense_1_loss_14: 3.2619 - dense_1_loss_15: 3.3357 - dense_1_loss_16: 3.2681 - dense_1_loss_17: 3.2598 - dense_1_loss_18: 3.3700 - dense_1_loss_19: 3.2892 - dense_1_loss_20: 3.5225 - dense_1_loss_21: 3.3918 - dense_1_loss_22: 3.3632 - dense_1_loss_23: 3.3773 - dense_1_loss_24: 3.2751 - dense_1_loss_25: 3.5087 - dense_1_loss_26: 3.1853 - dense_1_loss_27: 3.2134 - dense_1_loss_28: 3.4509 - dense_1_loss_29: 3.3767 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.0667 - dense_1_acc_2: 0.2000 - dense_1_acc_3: 0.2167 - dense_1_acc_4: 0.2333 - dense_1_acc_5: 0.2667 - dense_1_acc_6: 0.0833 - dense_1_acc_7: 0.1167 - dense_1_acc_8: 0.1833 - dense_1_acc_9: 0.1500 - dense_1_acc_10: 0.1000 - dense_1_acc_11: 0.1833 - dense_1_acc_12: 0.1333 - dense_1_acc_13: 0.1167 - dense_1_acc_14: 0.1833 - dense_1_acc_15: 0.1500 - dense_1_acc_16: 0.2000 - dense_1_acc_17: 0.2667 - dense_1_acc_18: 0.1333 - dense_1_acc_19: 0.1500 - dense_1_acc_20: 0.2000 - dense_1_acc_21: 0.1333 - dense_1_acc_22: 0.1500 - dense_1_acc_23: 0.1167 - dense_1_acc_24: 0.0833 - dense_1_acc_25: 0.1500 - dense_1_acc_26: 0.1833 - dense_1_acc_27: 0.1500 - dense_1_acc_28: 0.1167 - dense_1_acc_29: 0.2000 - dense_1_acc_30: 0.0000e+00

Epoch 9/100

60/60 [==============================] - 0s 2ms/step - loss: 97.6538 - dense_1_loss_1: 4.2129 - dense_1_loss_2: 4.0140 - dense_1_loss_3: 3.7859 - dense_1_loss_4: 3.7113 - dense_1_loss_5: 3.4915 - dense_1_loss_6: 3.5985 - dense_1_loss_7: 3.5351 - dense_1_loss_8: 3.2549 - dense_1_loss_9: 3.3474 - dense_1_loss_10: 3.2364 - dense_1_loss_11: 3.2461 - dense_1_loss_12: 3.4407 - dense_1_loss_13: 3.2169 - dense_1_loss_14: 3.1409 - dense_1_loss_15: 3.1597 - dense_1_loss_16: 3.2155 - dense_1_loss_17: 3.1377 - dense_1_loss_18: 3.2473 - dense_1_loss_19: 3.1737 - dense_1_loss_20: 3.3686 - dense_1_loss_21: 3.3245 - dense_1_loss_22: 3.1831 - dense_1_loss_23: 3.2586 - dense_1_loss_24: 3.1811 - dense_1_loss_25: 3.4273 - dense_1_loss_26: 2.9959 - dense_1_loss_27: 3.1855 - dense_1_loss_28: 3.2751 - dense_1_loss_29: 3.2877 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.0333 - dense_1_acc_2: 0.1500 - dense_1_acc_3: 0.2167 - dense_1_acc_4: 0.2000 - dense_1_acc_5: 0.2333 - dense_1_acc_6: 0.0833 - dense_1_acc_7: 0.1167 - dense_1_acc_8: 0.1833 - dense_1_acc_9: 0.1500 - dense_1_acc_10: 0.1500 - dense_1_acc_11: 0.1500 - dense_1_acc_12: 0.1000 - dense_1_acc_13: 0.1333 - dense_1_acc_14: 0.1833 - dense_1_acc_15: 0.1500 - dense_1_acc_16: 0.2167 - dense_1_acc_17: 0.2500 - dense_1_acc_18: 0.1667 - dense_1_acc_19: 0.1833 - dense_1_acc_20: 0.2167 - dense_1_acc_21: 0.1667 - dense_1_acc_22: 0.1500 - dense_1_acc_23: 0.1833 - dense_1_acc_24: 0.0833 - dense_1_acc_25: 0.1333 - dense_1_acc_26: 0.2500 - dense_1_acc_27: 0.0667 - dense_1_acc_28: 0.1667 - dense_1_acc_29: 0.1667 - dense_1_acc_30: 0.0000e+00

Epoch 10/100

60/60 [==============================] - 0s 2ms/step - loss: 94.5077 - dense_1_loss_1: 4.2015 - dense_1_loss_2: 3.9753 - dense_1_loss_3: 3.7196 - dense_1_loss_4: 3.6295 - dense_1_loss_5: 3.3847 - dense_1_loss_6: 3.5107 - dense_1_loss_7: 3.4479 - dense_1_loss_8: 3.1222 - dense_1_loss_9: 3.2076 - dense_1_loss_10: 3.0990 - dense_1_loss_11: 3.1676 - dense_1_loss_12: 3.2743 - dense_1_loss_13: 3.0354 - dense_1_loss_14: 2.9962 - dense_1_loss_15: 3.0603 - dense_1_loss_16: 3.0755 - dense_1_loss_17: 2.9575 - dense_1_loss_18: 3.1052 - dense_1_loss_19: 2.9998 - dense_1_loss_20: 3.3360 - dense_1_loss_21: 3.1840 - dense_1_loss_22: 3.1216 - dense_1_loss_23: 3.2225 - dense_1_loss_24: 3.0645 - dense_1_loss_25: 3.2708 - dense_1_loss_26: 2.9672 - dense_1_loss_27: 2.9512 - dense_1_loss_28: 3.2526 - dense_1_loss_29: 3.1675 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.0333 - dense_1_acc_2: 0.1167 - dense_1_acc_3: 0.2167 - dense_1_acc_4: 0.1500 - dense_1_acc_5: 0.2833 - dense_1_acc_6: 0.0833 - dense_1_acc_7: 0.1000 - dense_1_acc_8: 0.1833 - dense_1_acc_9: 0.2000 - dense_1_acc_10: 0.1500 - dense_1_acc_11: 0.1833 - dense_1_acc_12: 0.1500 - dense_1_acc_13: 0.1500 - dense_1_acc_14: 0.1667 - dense_1_acc_15: 0.1500 - dense_1_acc_16: 0.1833 - dense_1_acc_17: 0.2500 - dense_1_acc_18: 0.1167 - dense_1_acc_19: 0.2000 - dense_1_acc_20: 0.1667 - dense_1_acc_21: 0.1167 - dense_1_acc_22: 0.1500 - dense_1_acc_23: 0.1333 - dense_1_acc_24: 0.0667 - dense_1_acc_25: 0.1833 - dense_1_acc_26: 0.1833 - dense_1_acc_27: 0.1833 - dense_1_acc_28: 0.1333 - dense_1_acc_29: 0.2000 - dense_1_acc_30: 0.0000e+00

Epoch 11/100

60/60 [==============================] - 0s 2ms/step - loss: 90.5518 - dense_1_loss_1: 4.1910 - dense_1_loss_2: 3.9385 - dense_1_loss_3: 3.6594 - dense_1_loss_4: 3.5486 - dense_1_loss_5: 3.2944 - dense_1_loss_6: 3.4155 - dense_1_loss_7: 3.3324 - dense_1_loss_8: 3.0174 - dense_1_loss_9: 3.0827 - dense_1_loss_10: 2.9771 - dense_1_loss_11: 3.0266 - dense_1_loss_12: 3.1418 - dense_1_loss_13: 2.8959 - dense_1_loss_14: 2.8912 - dense_1_loss_15: 2.9084 - dense_1_loss_16: 3.0064 - dense_1_loss_17: 2.8210 - dense_1_loss_18: 2.9839 - dense_1_loss_19: 2.8749 - dense_1_loss_20: 3.1386 - dense_1_loss_21: 3.0156 - dense_1_loss_22: 2.9231 - dense_1_loss_23: 2.9384 - dense_1_loss_24: 2.8513 - dense_1_loss_25: 3.1007 - dense_1_loss_26: 2.7463 - dense_1_loss_27: 2.8255 - dense_1_loss_28: 3.0031 - dense_1_loss_29: 3.0021 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.0333 - dense_1_acc_2: 0.1500 - dense_1_acc_3: 0.2500 - dense_1_acc_4: 0.2500 - dense_1_acc_5: 0.2667 - dense_1_acc_6: 0.0833 - dense_1_acc_7: 0.1333 - dense_1_acc_8: 0.2000 - dense_1_acc_9: 0.1667 - dense_1_acc_10: 0.1833 - dense_1_acc_11: 0.1667 - dense_1_acc_12: 0.1500 - dense_1_acc_13: 0.2667 - dense_1_acc_14: 0.2167 - dense_1_acc_15: 0.1833 - dense_1_acc_16: 0.2000 - dense_1_acc_17: 0.3000 - dense_1_acc_18: 0.1667 - dense_1_acc_19: 0.2333 - dense_1_acc_20: 0.2333 - dense_1_acc_21: 0.1833 - dense_1_acc_22: 0.1833 - dense_1_acc_23: 0.2500 - dense_1_acc_24: 0.1333 - dense_1_acc_25: 0.1667 - dense_1_acc_26: 0.3167 - dense_1_acc_27: 0.2167 - dense_1_acc_28: 0.2000 - dense_1_acc_29: 0.1833 - dense_1_acc_30: 0.0000e+00

Epoch 12/100

60/60 [==============================] - 0s 3ms/step - loss: 87.1859 - dense_1_loss_1: 4.1808 - dense_1_loss_2: 3.8989 - dense_1_loss_3: 3.5895 - dense_1_loss_4: 3.4616 - dense_1_loss_5: 3.1889 - dense_1_loss_6: 3.2854 - dense_1_loss_7: 3.2092 - dense_1_loss_8: 2.9227 - dense_1_loss_9: 2.9535 - dense_1_loss_10: 2.8391 - dense_1_loss_11: 2.9101 - dense_1_loss_12: 2.9845 - dense_1_loss_13: 2.7465 - dense_1_loss_14: 2.7550 - dense_1_loss_15: 2.7654 - dense_1_loss_16: 2.9037 - dense_1_loss_17: 2.6879 - dense_1_loss_18: 2.8540 - dense_1_loss_19: 2.7448 - dense_1_loss_20: 2.9551 - dense_1_loss_21: 2.9016 - dense_1_loss_22: 2.8120 - dense_1_loss_23: 2.8517 - dense_1_loss_24: 2.7128 - dense_1_loss_25: 2.9469 - dense_1_loss_26: 2.6279 - dense_1_loss_27: 2.7803 - dense_1_loss_28: 2.8488 - dense_1_loss_29: 2.8670 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.1000 - dense_1_acc_2: 0.2000 - dense_1_acc_3: 0.2500 - dense_1_acc_4: 0.2833 - dense_1_acc_5: 0.3167 - dense_1_acc_6: 0.1333 - dense_1_acc_7: 0.2000 - dense_1_acc_8: 0.2667 - dense_1_acc_9: 0.2000 - dense_1_acc_10: 0.2500 - dense_1_acc_11: 0.1667 - dense_1_acc_12: 0.1333 - dense_1_acc_13: 0.3000 - dense_1_acc_14: 0.2500 - dense_1_acc_15: 0.2500 - dense_1_acc_16: 0.2667 - dense_1_acc_17: 0.3333 - dense_1_acc_18: 0.1833 - dense_1_acc_19: 0.2167 - dense_1_acc_20: 0.2500 - dense_1_acc_21: 0.2333 - dense_1_acc_22: 0.2000 - dense_1_acc_23: 0.2667 - dense_1_acc_24: 0.1500 - dense_1_acc_25: 0.1833 - dense_1_acc_26: 0.3000 - dense_1_acc_27: 0.2167 - dense_1_acc_28: 0.2500 - dense_1_acc_29: 0.1667 - dense_1_acc_30: 0.0000e+00

Epoch 13/100

60/60 [==============================] - 0s 3ms/step - loss: 83.5233 - dense_1_loss_1: 4.1701 - dense_1_loss_2: 3.8576 - dense_1_loss_3: 3.5164 - dense_1_loss_4: 3.3676 - dense_1_loss_5: 3.0747 - dense_1_loss_6: 3.1431 - dense_1_loss_7: 3.1010 - dense_1_loss_8: 2.7814 - dense_1_loss_9: 2.8180 - dense_1_loss_10: 2.6978 - dense_1_loss_11: 2.8173 - dense_1_loss_12: 2.8441 - dense_1_loss_13: 2.5778 - dense_1_loss_14: 2.5753 - dense_1_loss_15: 2.6941 - dense_1_loss_16: 2.7487 - dense_1_loss_17: 2.4578 - dense_1_loss_18: 2.7103 - dense_1_loss_19: 2.5794 - dense_1_loss_20: 2.8230 - dense_1_loss_21: 2.7426 - dense_1_loss_22: 2.7192 - dense_1_loss_23: 2.7729 - dense_1_loss_24: 2.5449 - dense_1_loss_25: 2.8469 - dense_1_loss_26: 2.5397 - dense_1_loss_27: 2.6301 - dense_1_loss_28: 2.6810 - dense_1_loss_29: 2.6906 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.1000 - dense_1_acc_2: 0.2000 - dense_1_acc_3: 0.3000 - dense_1_acc_4: 0.2833 - dense_1_acc_5: 0.3500 - dense_1_acc_6: 0.1333 - dense_1_acc_7: 0.2333 - dense_1_acc_8: 0.3000 - dense_1_acc_9: 0.2500 - dense_1_acc_10: 0.2667 - dense_1_acc_11: 0.1833 - dense_1_acc_12: 0.1333 - dense_1_acc_13: 0.2667 - dense_1_acc_14: 0.2500 - dense_1_acc_15: 0.2667 - dense_1_acc_16: 0.2000 - dense_1_acc_17: 0.3333 - dense_1_acc_18: 0.1667 - dense_1_acc_19: 0.2000 - dense_1_acc_20: 0.2500 - dense_1_acc_21: 0.2500 - dense_1_acc_22: 0.1667 - dense_1_acc_23: 0.1833 - dense_1_acc_24: 0.1833 - dense_1_acc_25: 0.1333 - dense_1_acc_26: 0.3000 - dense_1_acc_27: 0.1833 - dense_1_acc_28: 0.2167 - dense_1_acc_29: 0.2333 - dense_1_acc_30: 0.0000e+00

Epoch 14/100

60/60 [==============================] - 0s 2ms/step - loss: 79.5880 - dense_1_loss_1: 4.1616 - dense_1_loss_2: 3.8145 - dense_1_loss_3: 3.4330 - dense_1_loss_4: 3.2622 - dense_1_loss_5: 2.9531 - dense_1_loss_6: 2.9997 - dense_1_loss_7: 2.9812 - dense_1_loss_8: 2.6205 - dense_1_loss_9: 2.6600 - dense_1_loss_10: 2.5606 - dense_1_loss_11: 2.6819 - dense_1_loss_12: 2.7294 - dense_1_loss_13: 2.4227 - dense_1_loss_14: 2.4622 - dense_1_loss_15: 2.6156 - dense_1_loss_16: 2.5417 - dense_1_loss_17: 2.3775 - dense_1_loss_18: 2.5938 - dense_1_loss_19: 2.4494 - dense_1_loss_20: 2.6307 - dense_1_loss_21: 2.5532 - dense_1_loss_22: 2.5047 - dense_1_loss_23: 2.5582 - dense_1_loss_24: 2.3443 - dense_1_loss_25: 2.7281 - dense_1_loss_26: 2.3558 - dense_1_loss_27: 2.5472 - dense_1_loss_28: 2.5273 - dense_1_loss_29: 2.5178 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.1000 - dense_1_acc_2: 0.1833 - dense_1_acc_3: 0.3000 - dense_1_acc_4: 0.3000 - dense_1_acc_5: 0.3667 - dense_1_acc_6: 0.1833 - dense_1_acc_7: 0.2833 - dense_1_acc_8: 0.3167 - dense_1_acc_9: 0.2833 - dense_1_acc_10: 0.2833 - dense_1_acc_11: 0.2333 - dense_1_acc_12: 0.1333 - dense_1_acc_13: 0.3667 - dense_1_acc_14: 0.2833 - dense_1_acc_15: 0.2000 - dense_1_acc_16: 0.2500 - dense_1_acc_17: 0.3333 - dense_1_acc_18: 0.2500 - dense_1_acc_19: 0.3000 - dense_1_acc_20: 0.2833 - dense_1_acc_21: 0.3000 - dense_1_acc_22: 0.2667 - dense_1_acc_23: 0.2833 - dense_1_acc_24: 0.2500 - dense_1_acc_25: 0.2000 - dense_1_acc_26: 0.4000 - dense_1_acc_27: 0.2833 - dense_1_acc_28: 0.2667 - dense_1_acc_29: 0.3333 - dense_1_acc_30: 0.0000e+00

Epoch 15/100

60/60 [==============================] - 0s 4ms/step - loss: 75.7343 - dense_1_loss_1: 4.1531 - dense_1_loss_2: 3.7718 - dense_1_loss_3: 3.3510 - dense_1_loss_4: 3.1671 - dense_1_loss_5: 2.8436 - dense_1_loss_6: 2.8611 - dense_1_loss_7: 2.8422 - dense_1_loss_8: 2.4536 - dense_1_loss_9: 2.5180 - dense_1_loss_10: 2.4452 - dense_1_loss_11: 2.5421 - dense_1_loss_12: 2.5206 - dense_1_loss_13: 2.2906 - dense_1_loss_14: 2.3022 - dense_1_loss_15: 2.4022 - dense_1_loss_16: 2.3899 - dense_1_loss_17: 2.2665 - dense_1_loss_18: 2.4165 - dense_1_loss_19: 2.2795 - dense_1_loss_20: 2.4354 - dense_1_loss_21: 2.4304 - dense_1_loss_22: 2.3353 - dense_1_loss_23: 2.4410 - dense_1_loss_24: 2.2275 - dense_1_loss_25: 2.5568 - dense_1_loss_26: 2.2404 - dense_1_loss_27: 2.4561 - dense_1_loss_28: 2.3955 - dense_1_loss_29: 2.3989 - dense_1_loss_30: 0.0000e+00 - dense_1_acc_1: 0.1000 - dense_1_acc_2: 0.2167 - dense_1_acc_3: 0.3500 - dense_1_acc_4: 0.3000 - dense_1_acc_5: 0.3500 - dense_1_acc_6: 0.2167 - dense_1_acc_7: 0.3000 - dense_1_acc_8: 0.3667 - dense_1_acc_9: 0.4333 - dense_1_acc_10: 0.3833 - dense_1_acc_11: 0.3000 - dense_1_acc_12: 0.2833 - dense_1_acc_13: 0.4667 - dense_1_acc_14: 0.4000 - dense_1_acc_15: 0.3833 - dense_1_acc_16: 0.3167 - dense_1_acc_17: 0.3667 - dense_1_acc_18: 0.3000 - dense_1_acc_19: 0.4333 - dense_1_acc_20: 0.3500 - dense_1_acc_21: 0.3167 - dense_1_acc_22: 0.3167 - dense_1_acc_23: 0.3000 - dense_1_acc_24: 0.2333 - dense_1_acc_25: 0.1667 - dense_1_acc_26: 0.4167 - dense_1_acc_27: 0.3167 - dense_1_acc_28: 0.3167 - dense_1_acc_29: 0.3000 - dense_1_acc_30: 0.0000e+00

Epoch 16/100

60/60 [==============================] - 0s 4ms/step - loss: 71.7748 - dense_1_loss_1: 4.1451 - dense_1_loss_2: 3.7296 - dense_1_loss_3: 3.2631 - dense_1_loss_4: 3.0666 - dense_1_loss_5: 2.7274 - dense_1_loss_6: 2.7193 - dense_1_loss_7: 2.6975 - dense_1_loss_8: 2.2796 - dense_1_loss_9: 2.3815 - dense_1_loss_10: 2.3257 - dense_1_loss_11: 2.3607 - dense_1_loss_12: 2.3665 - dense_1_loss_13: 2.1351 - dense_1_loss_14: 2.1532 - dense_1_loss_15: 2.2803 - dense_1_loss_16: 2.1855 - dense_1_loss_17: 2.0913 - dense_1_loss_18: 2.3243 - dense_1_loss_19: 2.1770 - dense_1_loss_20: 2.2572 - dense_1_loss_21: 2.2738 - dense_1_loss_22: 2.1593 - dense_1_loss_23: 2.2638 - dense_1_loss_24: 2.0977 - dense_1_loss_25: 2.4014 - dense_1_loss_26: 2.1162 - dense_1_loss_27: 2.3181 - dense_1_loss_28: 2.2100 - dense_1_loss_2
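The first epoch's numbers in the log can be checked with a short calculation. Assuming an untrained network predicts roughly uniformly over the 78 values, the categorical cross-entropy of one output is \(-\ln(1/78) = \ln 78 \approx 4.357\), close to the per-output losses of about 4.33 to 4.35 reported in epoch 1. An all-zero target contributes 0, matching dense_1_loss_30. Keras sums the per-output losses (each with a default weight of 1), so 29 nonzero outputs give about 126.3, close to the reported 125.7977. A pure-Python sketch (the helper categorical_crossentropy here is hand-written, not the Keras function):

```python
import math

def categorical_crossentropy(y_true, y_pred):
    """Mean over examples of -sum_k y_true[k] * log(y_pred[k])."""
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        total += -sum(t * math.log(p) for t, p in zip(t_row, p_row))
    return total / len(y_true)

n_values = 78
uniform = [1.0 / n_values] * n_values    # an untrained net predicting ~uniformly
one_hot = [1.0] + [0.0] * (n_values - 1)  # any one-hot target
zero_target = [0.0] * n_values            # an all-zero target

per_step = categorical_crossentropy([one_hot], [uniform])
print(round(per_step, 4))                                  # 4.3567, i.e. ln(78)
print(categorical_crossentropy([zero_target], [uniform]))  # 0.0
print(round(29 * per_step, 2))                             # 126.34
```

As training proceeds, the per-output losses in the log fall below ln 78, meaning the model is doing better than guessing uniformly.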
