本文主要是对其传感器SDK进行学习

相关环境配置请看Azure Kinect sdk 入门,简单使用深度相机_Aaronder的博客-CSDN博客

目录:

1.了解Azure Kinect功能。

2.打开其三个摄像头,并交互保存每一帧的Depth,ir,RGB图像。

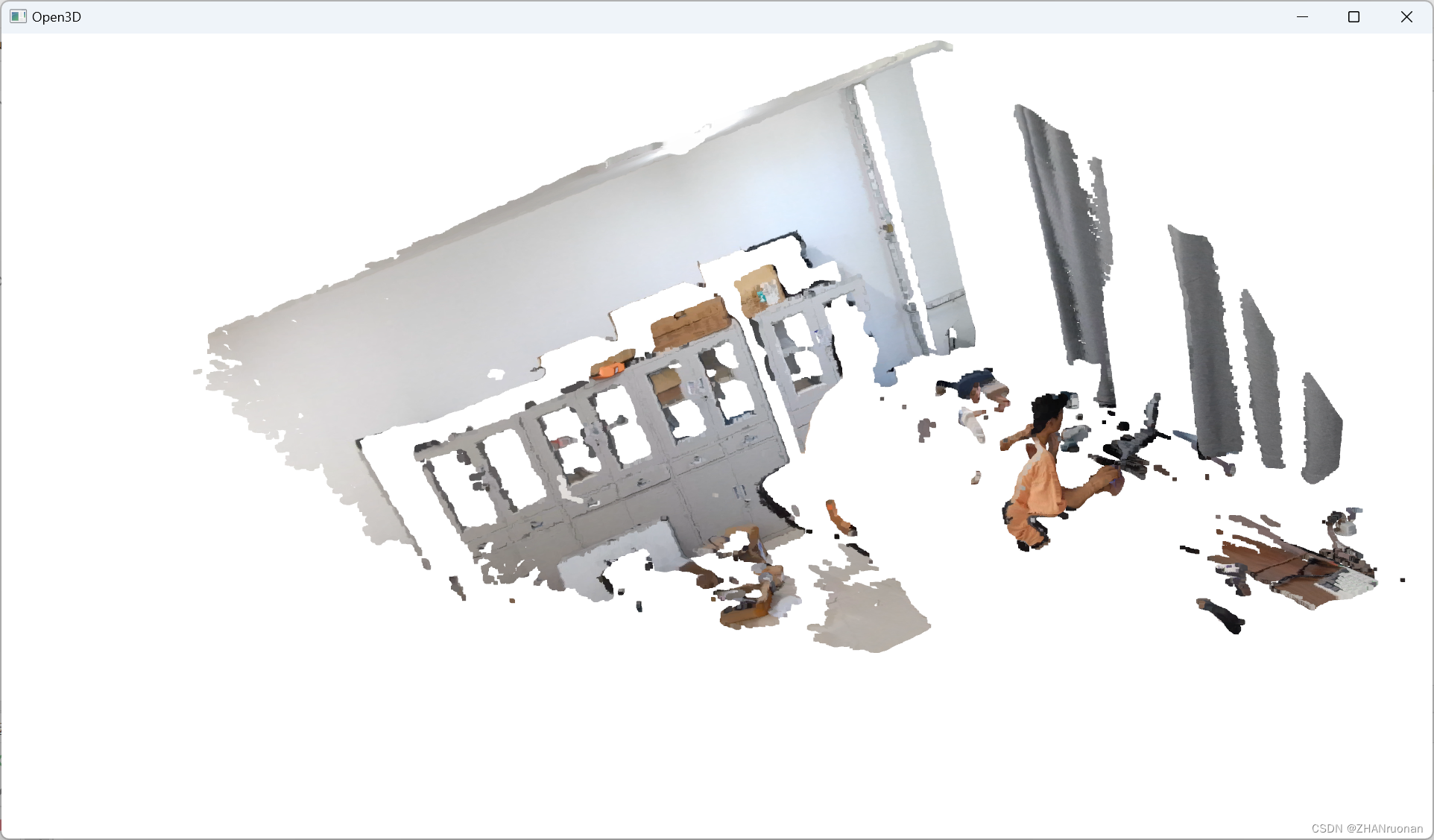

3.拍摄一帧图像,并将其保存为云点图。

4.根据拍摄一段视频,匹配视频每一帧的彩色图像和深度图像,构成云点图。

1.参考官方文档Azure Kinect DK 文档 | Microsoft Learn

对其人体跟踪功能未学习

根据文档内容快速入门实现''生成你的第一个应用程序''和''生成第一个人体跟踪应用程序''以了解该相机的基本用法。

2.打开其三个摄像头,并交互保存每一帧的Depth,ir,RGB图像。

按下空格采集图片,按q退出采集:

rgb图像:

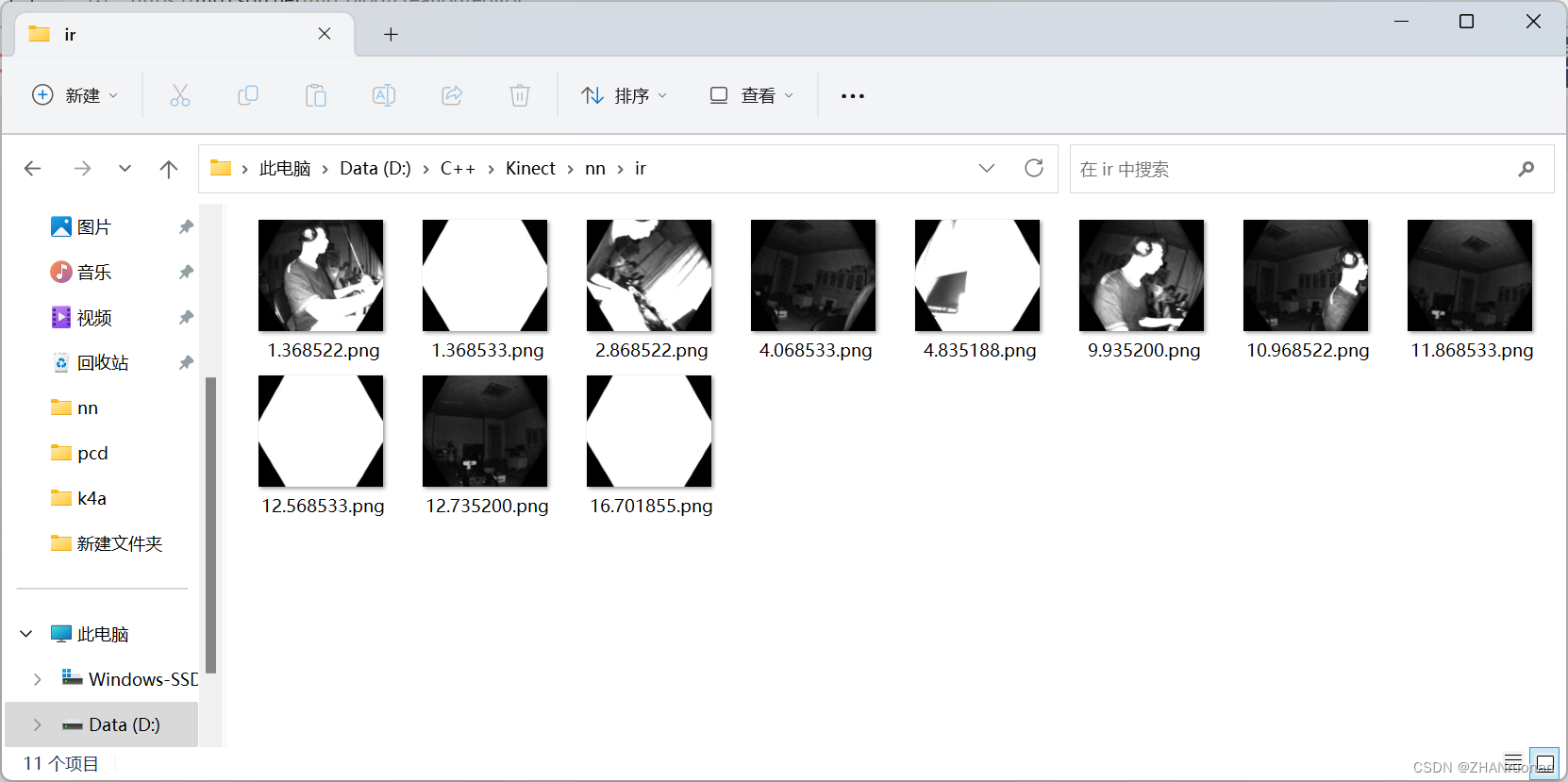

ir图像: depth图像:

depth图像:

源代码:

// C++

#include <iostream>

#include <chrono>

#include <string>

// OpenCV

#include <opencv2/opencv.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

// Kinect DK

#include <k4a/k4a.hpp>

#include<fstream>

using namespace cv;

using namespace std;

// 宏

// 方便控制是否 std::cout 信息

#define DEBUG_std_cout 0

int main(int argc, char* argv[]) {

/*

找到并打开 Azure Kinect 设备

*/

// 发现已连接的设备数

const uint32_t device_count = k4a::device::get_installed_count();

if (0 == device_count) {

std::cout << "Error: no K4A devices found. " << std::endl;

return -1;

}

else {

std::cout << "Found " << device_count << " connected devices. " << std::endl;

if (1 != device_count)// 超过1个设备,也输出错误信息。

{

std::cout << "Error: more than one K4A devices found. " << std::endl;

return -1;

}

else// 该示例代码仅限对1个设备操作

{

std::cout << "Done: found 1 K4A device. " << std::endl;

}

}

// 打开(默认)设备

k4a::device device = k4a::device::open(K4A_DEVICE_DEFAULT);

std::cout << "Done: open device. " << std::endl;

/*

检索并保存 Azure Kinect 图像数据

*/

// 配置并启动设备

k4a_device_configuration_t config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

config.camera_fps = K4A_FRAMES_PER_SECOND_30;

config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;

config.color_resolution = K4A_COLOR_RESOLUTION_1080P;

config.depth_mode = K4A_DEPTH_MODE_NFOV_UNBINNED;

//config.depth_mode = K4A_DEPTH_MODE_WFOV_2X2BINNED;

config.synchronized_images_only = true;// ensures that depth and color images are both available in the capture

device.start_cameras(&config);

std::cout << "Done: start camera." << std::endl;

//写入txt文件流

ofstream rgb_out;

ofstream d_out;

ofstream ir_out;

rgb_out.open("./rgb.txt");

d_out.open("./depth.txt");

ir_out.open("./ir.txt");

rgb_out << "# color images" << endl;

rgb_out << "# file: rgbd_dataset" << endl;

rgb_out << "# timestamp" << " " << "filename" << endl;

d_out << "# depth images" << endl;

d_out << "# file: rgbd_dataset" << endl;

d_out << "# timestamp" << " " << "filename" << endl;

ir_out << "# ir images" << endl;

ir_out << "# file: rgbd_dataset" << endl;

ir_out << "# timestamp" << " " << "filename" << endl;

rgb_out << flush;

d_out << flush;

// 稳定化

k4a::capture capture;

int iAuto = 0;//用来稳定,类似自动曝光

int iAutoError = 0;// 统计自动曝光的失败次数

while (true) {

if (device.get_capture(&capture)) {

std::cout << iAuto << ". Capture several frames to give auto-exposure" << std::endl;

// 跳过前 n 个(成功的数据采集)循环,用来稳定

if (iAuto != 30) {

iAuto++;

continue;

}

else {

std::cout << "Done: auto-exposure" << std::endl;

break;// 跳出该循环,完成相机的稳定过程

}

}

else {

std::cout << iAutoError << ". K4A_WAIT_RESULT_TIMEOUT." << std::endl;

if (iAutoError != 30) {

iAutoError++;

continue;

}

else {

std::cout << "Error: failed to give auto-exposure. " << std::endl;

return -1;

}

}

}

std::cout << "-----------------------------------" << std::endl;

std::cout << "----- Have Started Kinect DK. -----" << std::endl;

std::cout << "-----------------------------------" << std::endl;

// 从设备获取捕获

k4a::image rgbImage;

k4a::image depthImage;

k4a::image irImage;

k4a::image transformed_depthImage;

cv::Mat cv_rgbImage_with_alpha;

cv::Mat cv_rgbImage_no_alpha;

cv::Mat cv_depth;

cv::Mat cv_depth_8U;

cv::Mat cv_irImage;

cv::Mat cv_irImage_8U;

while (true)

// for (size_t i = 0; i < 100; i++)

{

// if (device.get_capture(&capture, std::chrono::milliseconds(0)))

if (device.get_capture(&capture)) {

// rgb

// * Each pixel of BGRA32 data is four bytes. The first three bytes represent Blue, Green,

// * and Red data. The fourth byte is the alpha channel and is unused in the Azure Kinect APIs.

rgbImage = capture.get_color_image();

#if DEBUG_std_cout == 1

std::cout << "[rgb] " << "\n"

<< "format: " << rgbImage.get_format() << "\n"

<< "device_timestamp: " << rgbImage.get_device_timestamp().count() << "\n"

<< "system_timestamp: " << rgbImage.get_system_timestamp().count() << "\n"

<< "height*width: " << rgbImage.get_height_pixels() << ", " << rgbImage.get_width_pixels()

<< std::endl;

#endif

// depth

// * Each pixel of DEPTH16 data is two bytes of little endian unsigned depth data. The unit of the data is in

// * millimeters from the origin of the camera.

depthImage = capture.get_depth_image();

#if DEBUG_std_cout == 1

std::cout << "[depth] " << "\n"

<< "format: " << depthImage.get_format() << "\n"

<< "device_timestamp: " << depthImage.get_device_timestamp().count() << "\n"

<< "system_timestamp: " << depthImage.get_system_timestamp().count() << "\n"

<< "height*width: " << depthImage.get_height_pixels() << ", " << depthImage.get_width_pixels()

<< std::endl;

#endif

// ir

// * Each pixel of IR16 data is two bytes of little endian unsigned depth data. The value of the data represents

// * brightness.

irImage = capture.get_ir_image();

#if DEBUG_std_cout == 1

std::cout << "[ir] " << "\n"

<< "format: " << irImage.get_format() << "\n"

<< "device_timestamp: " << irImage.get_device_timestamp().count() << "\n"

<< "system_timestamp: " << irImage.get_system_timestamp().count() << "\n"

<< "height*width: " << irImage.get_height_pixels() << ", " << irImage.get_width_pixels()

<< std::endl;

#endif

//深度图和RGB图配准

k4a::calibration k4aCalibration = device.get_calibration(config.depth_mode, config.color_resolution);// Get the camera calibration for the entire K4A device, which is used for all transformation functions.

k4a::transformation k4aTransformation = k4a::transformation(k4aCalibration);

transformed_depthImage = k4aTransformation.depth_image_to_color_camera(depthImage);

cv_rgbImage_with_alpha = cv::Mat(rgbImage.get_height_pixels(), rgbImage.get_width_pixels(), CV_8UC4,

(void*)rgbImage.get_buffer());

cv::cvtColor(cv_rgbImage_with_alpha, cv_rgbImage_no_alpha, cv::COLOR_BGRA2BGR);

cv_depth = cv::Mat(transformed_depthImage.get_height_pixels(), transformed_depthImage.get_width_pixels(), CV_16U,

(void*)transformed_depthImage.get_buffer(), static_cast<size_t>(transformed_depthImage.get_stride_bytes()));

cv_depth.convertTo(cv_depth_8U, CV_8U, 1);

cv_irImage = cv::Mat(irImage.get_height_pixels(), irImage.get_width_pixels(), CV_16U,

(void*)irImage.get_buffer(), static_cast<size_t>(irImage.get_stride_bytes()));

cv_irImage.convertTo(cv_irImage_8U, CV_8U, 1);

// show image

cv::imshow("color", cv_rgbImage_no_alpha);

cv::imshow("depth", cv_depth_8U);

cv::imshow("ir", cv_irImage_8U);

// save image

double time_rgb = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

rgbImage.get_device_timestamp()).count());

std::string filename_rgb = std::to_string(time_rgb / 1000000) + ".png";

double time_d = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

depthImage.get_device_timestamp()).count());

std::string filename_d = std::to_string(time_d / 1000000) + ".png";

double time_ir = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

irImage.get_device_timestamp()).count());

std::string filename_ir = std::to_string(time_ir / 1000000) + ".png";

imwrite("./rgb/" + filename_rgb, cv_rgbImage_no_alpha);

imwrite("./depth/" + filename_d, cv_depth_8U);

imwrite("./ir/" + filename_ir, cv_irImage_8U);

std::cout << "Acquiring!" << endl;

//写入depth.txt,rgb.txt文件

rgb_out << std::to_string(time_rgb / 1000000) << " " << "rgb/" << filename_rgb << endl;

d_out << std::to_string(time_d / 1000000) << " " << "depth/" << filename_d << endl;

ir_out << std::to_string(time_ir / 1000000) << " " << "ir/" << filename_ir << endl;

rgb_out << flush;

d_out << flush;

ir_out << flush;

cv_rgbImage_with_alpha.release();

cv_rgbImage_no_alpha.release();

cv_depth.release();

cv_depth_8U.release();

cv_irImage.release();

cv_irImage_8U.release();

capture.reset();

if (cv::waitKey(0) == 'q') {//按键采集,用户按下'q',跳出循环,结束采集

std::cout << "----------------------------------" << std::endl;

std::cout << "------------- closed -------------" << std::endl;

std::cout << "----------------------------------" << std::endl;

break;

}

}

else {

std::cout << "false: K4A_WAIT_RESULT_TIMEOUT." << std::endl;

}

}

cv::destroyAllWindows();

rgb_out << flush;

d_out << flush;

ir_out << flush;

rgb_out.close();

d_out.close();

ir_out.close();

// 释放,关闭设备

rgbImage.reset();

depthImage.reset();

irImage.reset();

capture.reset();

device.close();

return 1;

}

3.拍摄一帧图像,并将其保存为云点图,用open3d查看效果。

源代码:

slamBase.h

# pragma once //保证头文件只被编译一次

#include <iostream>

// opencv

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

// pcl

#include <pcl/point_types.h>

#include <pcl/io/pcd_io.h>

#include <pcl/io/ply_io.h>

#include <pcl/common/transforms.h>

using namespace std;

using namespace cv;

typedef pcl::PointXYZRGB PointT;

typedef pcl::PointCloud<PointT> PointCloud;

// camera instrinsic parameters

struct CAMERA_INTRINSIC_PARAMETERS

{

double fx, fy, cx, cy, scale;

};

struct FRAME

{

cv::Mat rgb, depth;

};

PointCloud::Ptr image2PointCloud(Mat rgb, Mat depth, CAMERA_INTRINSIC_PARAMETERS camera);

PointCloud::Ptr pointCloudFusion(PointCloud::Ptr& original, FRAME& newFrame, CAMERA_INTRINSIC_PARAMETERS camera);

void readCameraTrajectory(string camTransFile, vector<Eigen::Isometry3d>& poses);

pointCloudFusion.cpp

#include <iostream>

#include <chrono>

#include <string>

#include <vector>

// OpenCV

#include <opencv2/opencv.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

// Kinect DK

#include <k4a/k4a.hpp>

// PCL 库

#include <pcl/io/pcd_io.h>

#include <pcl/io/ply_io.h>

#include <pcl/point_types.h>

#include "slamBase.h"

// 定义点云类型

typedef pcl::PointXYZRGB PointT;

typedef pcl::PointCloud<PointT> PointCloud;

using namespace cv;

using namespace std;

// 方便控制是否 std::cout 信息

#define DEBUG_std_cout 1

int main(int argc, char* argv[]) {

/*

找到并打开 Azure Kinect 设备

*/

// 发现已连接的设备数

const uint32_t device_count = k4a::device::get_installed_count();

if (0 == device_count) {

std::cout << "Error: no K4A devices found. " << std::endl;

return -1;

}

else {

std::cout << "Found " << device_count << " connected devices. " << std::endl;

if (1 != device_count)// 超过1个设备,也输出错误信息。

{

std::cout << "Error: more than one K4A devices found. " << std::endl;

return -1;

}

else// 该示例代码仅限对1个设备操作

{

std::cout << "Done: found 1 K4A device. " << std::endl;

}

}

// 打开(默认)设备

k4a::device device = k4a::device::open(K4A_DEVICE_DEFAULT);

std::cout << "Done: open device. " << std::endl;

// 配置并启动设备

k4a_device_configuration_t config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

config.camera_fps = K4A_FRAMES_PER_SECOND_30;

//config.camera_fps = K4A_FRAMES_PER_SECOND_15;

config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;

config.color_resolution = K4A_COLOR_RESOLUTION_1080P;

config.depth_mode = K4A_DEPTH_MODE_NFOV_UNBINNED;

//config.depth_mode = K4A_DEPTH_MODE_WFOV_2X2BINNED;

config.synchronized_images_only = true;// ensures that depth and color images are both available in the capture

device.start_cameras(&config);

std::cout << "Done: start camera." << std::endl;

// 稳定化

k4a::capture capture;

int iAuto = 0;//用来稳定,类似自动曝光

int iAutoError = 0;// 统计自动曝光的失败次数

while (true) {

if (device.get_capture(&capture)) {

std::cout << iAuto << ". Capture several frames to give auto-exposure" << std::endl;

// 跳过前 n 个(成功的数据采集)循环,用来稳定

if (iAuto != 30) {

iAuto++;

continue;

}

else {

std::cout << "Done: auto-exposure" << std::endl;

break;// 跳出该循环,完成相机的稳定过程

}

}

else {

std::cout << iAutoError << ". K4A_WAIT_RESULT_TIMEOUT." << std::endl;

if (iAutoError != 30) {

iAutoError++;

continue;

}

else {

std::cout << "Error: failed to give auto-exposure. " << std::endl;

return -1;

}

}

}

std::cout << "-----------------------------------" << std::endl;

std::cout << "----- Have Started Kinect DK. -----" << std::endl;

std::cout << "-----------------------------------" << std::endl;

// 从设备获取捕获

k4a::image rgbImage;

k4a::image depthImage;

k4a::image transformed_depthImage;

cv::Mat cv_rgbImage_with_alpha;

cv::Mat cv_rgbImage_no_alpha;

cv::Mat cv_depth;

cv::Mat cv_depth_8U;

// for (size_t i = 0; i < 100; i++)

while (true) {

// if (device.get_capture(&capture, std::chrono::milliseconds(0)))

if (device.get_capture(&capture)) {

// rgb

// * Each pixel of BGRA32 data is four bytes. The first three bytes represent Blue, Green,

// * and Red data. The fourth byte is the alpha channel and is unused in the Azure Kinect APIs.

rgbImage = capture.get_color_image();

#if DEBUG_std_cout == 1

std::cout << "[rgb] " << "\n"

<< "format: " << rgbImage.get_format() << "\n"

<< "device_timestamp: " << rgbImage.get_device_timestamp().count() << "\n"

<< "system_timestamp: " << rgbImage.get_system_timestamp().count() << "\n"

<< "height*width: " << rgbImage.get_height_pixels() << ", " << rgbImage.get_width_pixels()

<< std::endl;

#endif

// depth

// * Each pixel of DEPTH16 data is two bytes of little endian unsigned depth data. The unit of the data is in

// * millimeters from the origin of the camera.

depthImage = capture.get_depth_image();

#if DEBUG_std_cout == 1

std::cout << "[depth] " << "\n"

<< "format: " << depthImage.get_format() << "\n"

<< "device_timestamp: " << depthImage.get_device_timestamp().count() << "\n"

<< "system_timestamp: " << depthImage.get_system_timestamp().count() << "\n"

<< "height*width: " << depthImage.get_height_pixels() << ", " << depthImage.get_width_pixels()

<< std::endl;

#endif

//获取彩色点云

k4a::calibration k4aCalibration = device.get_calibration(config.depth_mode, config.color_resolution);

k4a::transformation k4aTransformation = k4a::transformation(k4aCalibration);

//PointCloud::Ptr cloud(new PointCloud);

int color_image_width_pixels = rgbImage.get_width_pixels();

int color_image_height_pixels = rgbImage.get_height_pixels();

transformed_depthImage = NULL;

transformed_depthImage = k4a::image::create(K4A_IMAGE_FORMAT_DEPTH16,

color_image_width_pixels,

color_image_height_pixels,

color_image_width_pixels * (int)sizeof(uint16_t));

k4a::image point_cloud_image = NULL;

point_cloud_image = k4a::image::create(K4A_IMAGE_FORMAT_CUSTOM,

color_image_width_pixels,

color_image_height_pixels,

color_image_width_pixels * 3 * (int)sizeof(int16_t));

if (depthImage.get_width_pixels() == rgbImage.get_width_pixels() && depthImage.get_height_pixels() == rgbImage.get_height_pixels()) {

std::copy(depthImage.get_buffer(), depthImage.get_buffer() + depthImage.get_height_pixels() * depthImage.get_width_pixels() * (int)sizeof(uint16_t), transformed_depthImage.get_buffer());

cout << "if" << endl;

}

else {

cout << "else" << endl;

k4aTransformation.depth_image_to_color_camera(depthImage, &transformed_depthImage);

}

k4aTransformation.depth_image_to_point_cloud(transformed_depthImage, K4A_CALIBRATION_TYPE_COLOR, &point_cloud_image);

pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZRGB>);

cloud->width = color_image_width_pixels;

cloud->height = color_image_height_pixels;

cloud->is_dense = false;

cloud->resize(static_cast<size_t>(color_image_width_pixels) * color_image_height_pixels);

const int16_t* point_cloud_image_data = reinterpret_cast<const int16_t*>(point_cloud_image.get_buffer());

const uint8_t* color_image_data = rgbImage.get_buffer();

for (int i = 0; i < color_image_width_pixels * color_image_height_pixels; i++) {

PointT point;

point.x = point_cloud_image_data[3 * i + 0] / 1000.0f;

point.y = point_cloud_image_data[3 * i + 1] / 1000.0f;

point.z = point_cloud_image_data[3 * i + 2] / 1000.0f;

point.b = color_image_data[4 * i + 0];

point.g = color_image_data[4 * i + 1];

point.r = color_image_data[4 * i + 2];

uint8_t alpha = color_image_data[4 * i + 3];

if (point.x == 0 && point.y == 0 && point.z == 0 && alpha == 0)

continue;

cloud->points[i] = point;

}

double time_d = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

depthImage.get_device_timestamp()).count());

std::string filename_pc = std::to_string(time_d / 1000000);

pcl::io::savePLYFile("ply/" + filename_pc + ".ply", *cloud); //将点云数据保存为ply文件

pcl::io::savePCDFile("pcd/" + filename_pc + ".pcd", *cloud); //将点云数据保存为pcd文件

}

else {

std::cout << "false: K4A_WAIT_RESULT_TIMEOUT." << std::endl;

}

}

cv::destroyAllWindows();

// 释放,关闭设备

rgbImage.reset();

depthImage.reset();

capture.reset();

device.close();

return 1;

}open3d读取代码

import open3d as o3d

pcd = o3d.io.read_point_cloud(r"path")

o3d.visualization.draw_geometries([pcd])这只是单帧,要想实现三维重建,需要对多帧点云图进行匹配配准和融合。目前还不会TuT~

4.根据拍摄一段视频,匹配视频每一帧的彩色图像和深度图像,构成云点图。

参考Azure Kinect DK 实现三维重建 (PC非实时版)_lucky li的博客-CSDN博客

他的例子是Ubuntu18.04笔记本+DK相机上进行,我进行windows版

首先

利用开源框架open3d的Reconstruction system实现Azure Kinect DK相机的三维重建,已上传代码:

https://github.com/luckyluckydadada/Azure-Kinect-DK-3D-reconstruction.git

然后进入Azure-Kinect-DK-3D-reconstruction/open3d_reconstruction目录

打开cmd

输入录制数据,空格开始录制,esc退出录制并保存:

python sensors/azure_kinect_recorder.py --output dataset/name.mkv

再输入

提取rgb和depth图像,以及相机config和相机内参config:

python sensors/azure_kinect_mkv_reader.py --input dataset/name.mkv --output dataset/name

最后输入

python run_system.py dataset/name/config.json --make --register --refine --integrate

然后在新生成的dataset文件夹找到生成的ply文件再打开

效果跟参考文章一样

建议open3d版本要0.14.1

这个适用对一个物体进行重建,不能移动拍摄整个屋子。

本文介绍了如何使用AzureKinectSDK进行设备配置、捕获RGB、深度和红外图像,并将它们保存为图像文件。此外,还展示了如何将图像转换为点云图,并使用Open3D库进行查看。文章提供了C++代码示例,包括稳定化相机、生成第一帧点云图以及从视频中提取帧进行三维重建的过程。

本文介绍了如何使用AzureKinectSDK进行设备配置、捕获RGB、深度和红外图像,并将它们保存为图像文件。此外,还展示了如何将图像转换为点云图,并使用Open3D库进行查看。文章提供了C++代码示例,包括稳定化相机、生成第一帧点云图以及从视频中提取帧进行三维重建的过程。

1143

1143

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?