I. VGG16 Overview

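- VGG16 is a network architecture that extracts image features with stacked convolutional layers and classifies them with fully connected layers. It placed second in classification and first in localization at ILSVRC 2014.

- Its structure consists of 13 convolutional layers plus 3 fully connected layers, 16 weight layers in total, hence the name VGG16. The green part of the figure below shows how the network is composed: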

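- Notes on the convolution configuration:

- input (224×224 RGB image): the input image is 224×224 with 3 channels

- conv3_XXX: a convolutional layer with a 3×3 kernel and XXX output channels (every convolution below uses a 3×3 kernel, so the kernel size is omitted from here on)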
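The configuration notation above can be written down as a plain list, which makes the "13 + 3 = 16" count easy to check. This is only a sketch: `VGG16_CONFIG`, `FC_UNITS`, and `count_weight_layers` are names made up for illustration, with numbers standing for `conv3_XXX` output channels and `"M"` standing for a max-pooling layer.

```python
# Illustrative names, not taken from the article's code.
# Numbers are conv3_XXX output channels, "M" marks a max-pooling layer.
VGG16_CONFIG = [64, 64, "M",
                128, 128, "M",
                256, 256, 256, "M",
                512, 512, 512, "M",
                512, 512, 512, "M"]

# Output sizes of the three fully connected layers (the last one is the 1000-way classifier).
FC_UNITS = [4096, 4096, 1000]


def count_weight_layers(config, fc_units):
    """Count the layers that carry weights: pooling layers are skipped."""
    conv_layers = sum(1 for item in config if item != "M")
    return conv_layers, len(fc_units)


conv_count, fc_count = count_weight_layers(VGG16_CONFIG, FC_UNITS)
print(conv_count, fc_count, conv_count + fc_count)
```

Pooling layers have no trainable parameters, which is why they do not contribute to the 16 in the name.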

- VGG16 characteristics (adapted from [韩鼎の个人网站]):

- Every convolution uses a 3×3 kernel, and all of them use strides=[1,1,1,1] and padding="SAME".

- Every pooling layer uses max pooling with a 2×2 window, a stride of 2 (strides=[1,2,2,1]), and padding="SAME".
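To see what these settings imply for tensor sizes, here is a small sketch that traces the spatial size through the five blocks. It assumes the TensorFlow rule that `SAME` padding yields `ceil(size / stride)` outputs per spatial dimension; `same_padding_output` and `trace_vgg16_shapes` are hypothetical helper names.

```python
import math


def same_padding_output(size, stride):
    """Output length of one spatial dimension under SAME padding."""
    return math.ceil(size / stride)


def trace_vgg16_shapes(input_size=224):
    """Return the (height, width, channels) shape after each block's pooling layer."""
    # (number of conv layers, output channels) for each of the five blocks
    blocks = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
    size = input_size
    shapes = []
    for conv_count, channels in blocks:
        for _ in range(conv_count):
            size = same_padding_output(size, 1)  # stride-1 conv keeps the size
        size = same_padding_output(size, 2)      # stride-2 pooling halves it
        shapes.append((size, size, channels))
    return shapes


shapes = trace_vgg16_shapes()
height, width, channels = shapes[-1]
flattened = height * width * channels
print(shapes)
print(flattened)
```

The last shape is 7×7×512, so the first fully connected layer receives 25088 values per image.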

II. Code Implementation

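import h5py
import json
import tensorflow as tf
import cv2
import numpy as np
import matplotlib.pyplot as plt

# This file includes the final three fully connected layers.
# The h5 file here and the json file used below were downloaded from Kaggle: https://www.kaggle.com/keras/vgg16
model_path = './kreas_vgg16_model_para/vgg16_weights_tf_dim_ordering_tf_kernels.h5'

# This file does not include the final three fully connected layers.
# model_path = './kreas_vgg16_model_para/vgg16_weights_tf_dim_ordering_tf_kernels_notop.h5'

# 1. Read each layer's parameters from the model with h5py. This is where the "clumsy" in this clumsy approach comes from, but it is easy to follow.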

with h5py.File(model_path, 'r') as f:
    # # Names at the first level of the h5 file
    # layer1 = [i for i in f]
    # print(layer1)
    # # Names at the second level of the h5 file
    # layer2 = {'layer2_'+str(i): [j for j in f[layer1[i]]] for i in range(len(layer1))}
    # print(layer2)

    # 13 convolutional layers in 5 blocks, all with 3*3 kernels
    # block1
    block1_conv1_W_1 = f['/block1_conv1/block1_conv1_W_1:0'][:]
    # print(block1_conv1_W_1.shape)
    block1_conv2_W_1 = f['/block1_conv2/block1_conv2_W_1:0'][:]
    # print(block1_conv2_W_1.shape)
    block1_conv1_b_1 = f['/block1_conv1/block1_conv1_b_1:0'][:]
    block1_conv2_b_1 = f['/block1_conv2/block1_conv2_b_1:0'][:]

    # block2
    block2_conv1_W_1 = f['/block2_conv1/block2_conv1_W_1:0'][:]
    # print(block2_conv1_W_1.shape)
    block2_conv2_W_1 = f['/block2_conv2/block2_conv2_W_1:0'][:]
    # print(block2_conv2_W_1.shape)
    block2_conv1_b_1 = f['/block2_conv1/block2_conv1_b_1:0'][:]
    block2_conv2_b_1 = f['/block2_conv2/block2_conv2_b_1:0'][:]

    # block3
    block3_conv1_W_1 = f['/block3_conv1/block3_conv1_W_1:0'][:]
    # print(block3_conv1_W_1.shape)
    block3_conv2_W_1 = f['/block3_conv2/block3_conv2_W_1:0'][:]
    # print(block3_conv2_W_1.shape)
    block3_conv3_W_1 = f['/block3_conv3/block3_conv3_W_1:0'][:]
    # print(block3_conv3_W_1.shape)
    block3_conv1_b_1 = f['/block3_conv1/block3_conv1_b_1:0'][:]
    block3_conv2_b_1 = f['/block3_conv2/block3_conv2_b_1:0'][:]
    block3_conv3_b_1 = f['/block3_conv3/block3_conv3_b_1:0'][:]

    # block4
    block4_conv1_W_1 = f['/block4_conv1/block4_conv1_W_1:0'][:]
    block4_conv2_W_1 = f['/block4_conv2/block4_conv2_W_1:0'][:]
    block4_conv3_W_1 = f['/block4_conv3/block4_conv3_W_1:0'][:]
    block4_conv1_b_1 = f['/block4_conv1/block4_conv1_b_1:0'][:]
    block4_conv2_b_1 = f['/block4_conv2/block4_conv2_b_1:0'][:]
    block4_conv3_b_1 = f['/block4_conv3/block4_conv3_b_1:0'][:]

    # block5
    block5_conv1_W_1 = f['/block5_conv1/block5_conv1_W_1:0'][:]
    block5_conv2_W_1 = f['/block5_conv2/block5_conv2_W_1:0'][:]
    block5_conv3_W_1 = f['/block5_conv3/block5_conv3_W_1:0'][:]
    block5_conv1_b_1 = f['/block5_conv1/block5_conv1_b_1:0'][:]
    block5_conv2_b_1 = f['/block5_conv2/block5_conv2_b_1:0'][:]
    block5_conv3_b_1 = f['/block5_conv3/block5_conv3_b_1:0'][:]

    # The three fully connected layers
    fc1_W_1 = f['/fc1/fc1_W_1:0'][:]
    fc1_b_1 = f['/fc1/fc1_b_1:0'][:]
    # print(fc1_W_1.shape)
    fc2_W_1 = f['/fc2/fc2_W_1:0'][:]
    fc2_b_1 = f['/fc2/fc2_b_1:0'][:]
    # print(fc2_W_1.shape)
    predictions_W_1 = f['/predictions/predictions_W_1:0'][:]
    predictions_b_1 = f['/predictions/predictions_b_1:0'][:]
    # print(predictions_W_1.shape)

# 2. Feed the loaded parameters into the network structure to make a prediction: the output is a softmax over the 1000 classes.
def prediction(image):
    # 13 convolutional layers
    # block 1
    conv1 = conv2d(image, block1_conv1_W_1)
    relu1 = tf.nn.relu(tf.nn.bias_add(conv1, block1_conv1_b_1))
    conv2 = conv2d(relu1, block1_conv2_W_1)
    relu2 = tf.nn.relu(tf.nn.bias_add(conv2, block1_conv2_b_1))
    pool1 = max_pool_2x2(relu2)

    # block 2
    conv3 = conv2d(pool1, block2_conv1_W_1)
    relu3 = tf.nn.relu(tf.nn.bias_add(conv3, block2_conv1_b_1))
    conv4 = conv2d(relu3, block2_conv2_W_1)
    relu4 = tf.nn.relu(tf.nn.bias_add(conv4, block2_conv2_b_1))
    pool2 = max_pool_2x2(relu4)

    # block 3
    conv5 = conv2d(pool2, block3_conv1_W_1)
    relu5 = tf.nn.relu(tf.nn.bias_add(conv5, block3_conv1_b_1))
    conv6 = conv2d(relu5, block3_conv2_W_1)
    relu6 = tf.nn.relu(tf.nn.bias_add(conv6, block3_conv2_b_1))
    conv7 = conv2d(relu6, block3_conv3_W_1)
    relu7 = tf.nn.relu(tf.nn.bias_add(conv7, block3_conv3_b_1))
    pool3 = max_pool_2x2(relu7)

    # block 4
    conv8 = conv2d(pool3, block4_conv1_W_1)
    relu8 = tf.nn.relu(tf.nn.bias_add(conv8, block4_conv1_b_1))
    conv9 = conv2d(relu8, block4_conv2_W_1)
    relu9 = tf.nn.relu(tf.nn.bias_add(conv9, block4_conv2_b_1))
    conv10 = conv2d(relu9, block4_conv3_W_1)
    relu10 = tf.nn.relu(tf.nn.bias_add(conv10, block4_conv3_b_1))
    pool4 = max_pool_2x2(relu10)

    # block 5
    conv11 = conv2d(pool4, block5_conv1_W_1)
    relu11 = tf.nn.relu(tf.nn.bias_add(conv11, block5_conv1_b_1))
    conv12 = conv2d(relu11, block5_conv2_W_1)
    relu12 = tf.nn.relu(tf.nn.bias_add(conv12, block5_conv2_b_1))
    conv13 = conv2d(relu12, block5_conv3_W_1)
    relu13 = tf.nn.relu(tf.nn.bias_add(conv13, block5_conv3_b_1))
    pool5 = max_pool_2x2(relu13)
    # print(pool5.shape) # (1, 7, 7, 512)

    # Flatten the feature map so it can be fed to the fully connected layers
    pool_shape = pool5.get_shape().as_list()
    nodes = pool_shape[1]*pool_shape[2]*pool_shape[3]
    reshaped = tf.reshape(pool5, [pool_shape[0], nodes])
    # print(reshaped.shape) # (1, 25088)

    # 3 fully connected layers
    fc1 = tf.nn.relu(tf.nn.bias_add(tf.matmul(reshaped, fc1_W_1), fc1_b_1))
    fc2 = tf.nn.relu(tf.nn.bias_add(tf.matmul(fc1, fc2_W_1), fc2_b_1))
    y = tf.nn.softmax(tf.nn.bias_add(tf.matmul(fc2, predictions_W_1), predictions_b_1))
    # print(y.shape) # (1, 1000)
    y = tf.squeeze(y)
    index = tf.argmax(y, 0)
    labels_data = reafLabels()

    # tf.Session is the TensorFlow 1.x API
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        y_value = sess.run(y)

    # Visualize the five most likely classes with matplotlib
    getTop5(y_value)
    return None

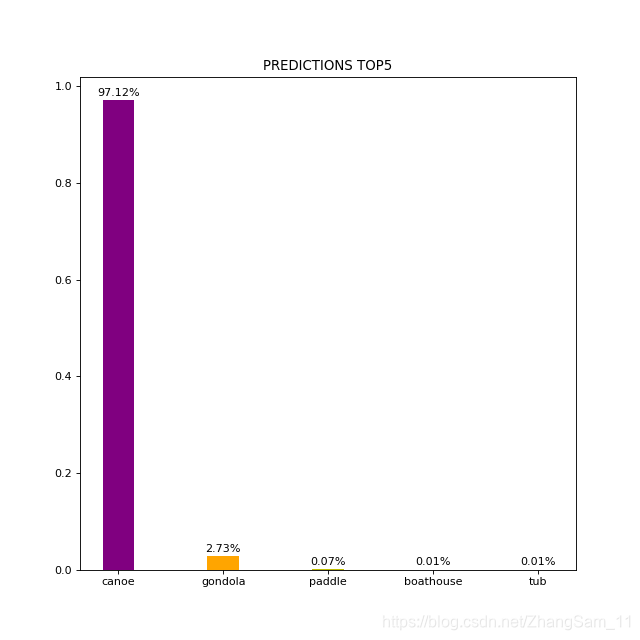
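The script keeps all 32 weight and bias arrays in separately named variables. As one possible alternative, the dataset paths follow a regular pattern (`/<layer>/<layer>_W_1:0` and `/<layer>/<layer>_b_1:0`), so they could be generated in a loop. The sketch below is an assumption-laden illustration: `CONV_LAYERS`, `FC_LAYERS`, `load_params`, and `fake_store` are hypothetical names, and a plain dict stands in for the opened h5 file so that the example runs without the weights.

```python
# Layer names generated from the block structure: 2, 2, 3, 3, 3 conv layers per block.
CONV_LAYERS = [f"block{block}_conv{i}"
               for block, conv_count in enumerate([2, 2, 3, 3, 3], start=1)
               for i in range(1, conv_count + 1)]
FC_LAYERS = ["fc1", "fc2", "predictions"]


def load_params(store, layer_names):
    """Return {layer_name: (weights, biases)} from an h5py.File-like mapping.

    `store` only needs to support store[path][:], which h5py datasets do.
    """
    params = {}
    for name in layer_names:
        weights = store[f"/{name}/{name}_W_1:0"][:]
        biases = store[f"/{name}/{name}_b_1:0"][:]
        params[name] = (weights, biases)
    return params


# Stand-in for the real file (hypothetical data, one dummy value per dataset).
fake_store = {f"/{name}/{name}_{kind}_1:0": [0.0]
              for name in CONV_LAYERS + FC_LAYERS
              for kind in ("W", "b")}

params = load_params(fake_store, CONV_LAYERS + FC_LAYERS)
print(len(params))
print(CONV_LAYERS[0], CONV_LAYERS[-1])
```

With the real weights, `store` would be the object returned by `h5py.File(model_path, 'r')`, and the call has to happen while the file is still open, because `[:]` is what copies each dataset into memory.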

# 2.1 Top-5 visualization
def getTop5(y_value):
    # y_value is the ndarray produced by the softmax:
    # entry i holds the predicted probability that the image belongs to class index i.
    # Example: y_value = [0.02, 0.01, 0.4, ...] means probability 0.02 for index 0, 0.01 for index 1, 0.4 for index 2, ...

    # Indices of the five largest probabilities; the labels are looked up from these indices below
    top5_index = np.argsort(-y_value)[:5]

    # Load the downloaded json file, which maps each class index to its label
    labels_data = reafLabels()

    # Probability of each of the top five classes
    top5_accuracy = [y_value[index] for index in top5_index]

    # Label of each of the top five classes
    top5_prediction = [labels_data[str(index)][1] for index in top5_index]

    # Plot
    plt.figure(figsize=(8, 8), dpi=80)
    x_ticks = range(len(top5_prediction))

    # Bar chart
    plt.bar(x_ticks, top5_accuracy, width=0.3, color=["purple", "orange", "y", "g", "c"])

    # Annotate each bar with its percentage
    for x, y in zip(x_ticks, top5_accuracy):
        plt.text(x, y + 0.005, '%s' % percent(y), ha='center', va='bottom')

    # Use the labels as tick values
    plt.xticks(x_ticks, top5_prediction)

    # Title
    plt.title("PREDICTIONS TOP5")
    plt.show()
    return None

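def reafLabels():
    # Load the mapping from class index to label
    with open('./kreas_vgg16_model_para/imagenet_class_index.json', 'r', encoding='utf8') as fp:
        labels_data = json.load(fp)
    return labels_data

def dealImage(image_path="./image/frog.jpg"):
    image = cv2.imread(image_path)
    # cv2.imread returns None instead of raising when the file cannot be read
    if image is None:
        raise FileNotFoundError(image_path)
    resized_image = cv2.resize(image, (224, 224))
    # astype returns a new array and leaves the original untouched; assigning to dtype directly is error-prone
    retyped_image = resized_image.astype(np.float32)
    # Add the batch dimension expected by the network
    final_image = np.reshape(retyped_image, [1, 224, 224, 3])
    # cv2.imshow('IMAGE',image)
    # cv2.waitKey(0)
    return final_image

def conv2d(x, w):
    # 3*3 convolution with stride 1 and SAME padding
    return tf.nn.conv2d(x, w, [1, 1, 1, 1], padding="SAME")

def max_pool_2x2(x):
    # 2*2 max pooling with stride 2 and SAME padding
    return tf.nn.max_pool(x, [1, 2, 2, 1], [1, 2, 2, 1], padding="SAME")

def percent(value):
    # Format a probability as a percentage with two decimals
    return "%.2f%%" % (value*100)

if __name__ == '__main__':
    image = dealImage()
    prediction(image)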
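One thing worth checking in `dealImage` is input normalization. To my knowledge, the preprocessing that Keras ships for VGG16 (`preprocess_input` in `keras.applications.vgg16`) works on BGR images and subtracts a per-channel ImageNet mean, while `dealImage` only resizes and casts. Since `cv2.imread` already returns BGR, the missing step would be the mean subtraction. The sketch below shows that step in isolation; `IMAGENET_BGR_MEAN` and `subtract_imagenet_mean` are hypothetical names, and whether it improves the predictions of this script is an assumption to verify, not a measured result.

```python
import numpy as np

# Per-channel ImageNet means in B, G, R order, as used by Keras' "caffe"-style preprocessing.
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def subtract_imagenet_mean(bgr_batch):
    """Zero-center a batch of BGR images shaped (N, H, W, 3), without rescaling."""
    batch = np.asarray(bgr_batch, dtype=np.float32)
    # Broadcasting applies one mean per channel along the last axis.
    return batch - IMAGENET_BGR_MEAN


# Dummy input with every pixel set to 128, standing in for the output of dealImage().
dummy_batch = np.full((1, 224, 224, 3), 128.0, dtype=np.float32)
centered = subtract_imagenet_mean(dummy_batch)
print(centered.shape)
print(centered[0, 0, 0])
```

In the script this would be applied to the array returned by `dealImage()` before it is passed to `prediction`.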

III. Results
