I Out with the old, in with the new

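- Consecutive 3x3 convolution kernels replace the larger kernels (11x11, 7x7, 5x5) used in earlier networks such as AlexNet. Two stacked 3x3 kernels have the same receptive field as one 5x5 kernel, and three stacked 3x3 kernels have the same receptive field as one 7x7 kernel. Stacking 3x3 kernels therefore makes the network deeper while reducing the number of parameters.

- 1x1 convolution kernels are introduced. They keep the feature map size unchanged (no loss of resolution) while adding nonlinearity and depth, and they can also reduce or increase the number of channels. Note that in the original paper the 1x1 kernels appear in configuration C; the 16-layer network walked through in this post uses only 3x3 kernels.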
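The receptive-field and parameter claims in the first bullet can be checked with a little arithmetic. The sketch below is only an illustration: it assumes stride-1 convolutions with the same number of input and output channels and ignores biases, and the helper names `stacked_receptive_field` and `conv_weights` are made up for this example.

```python
def stacked_receptive_field(kernel_size, num_layers):
    """Receptive field of num_layers stacked stride-1 convolutions."""
    rf = 1
    for _ in range(num_layers):
        rf += kernel_size - 1  # each extra layer widens the field by k - 1
    return rf


def conv_weights(kernel_size, channels, num_layers=1):
    """Weight count (no bias) of num_layers conv layers with `channels` in and out."""
    return num_layers * kernel_size * kernel_size * channels * channels


C = 64  # illustrative channel count (assumption)
print(stacked_receptive_field(3, 2), stacked_receptive_field(3, 3))
print(conv_weights(3, C, 2), "vs", conv_weights(5, C))  # two 3x3 layers vs one 5x5
print(conv_weights(3, C, 3), "vs", conv_weights(7, C))  # three 3x3 layers vs one 7x7
```

In terms of the channel count C, the comparison is 18C² against 25C² for the 5x5 case and 27C² against 49C² for the 7x7 case.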

II Getting into it

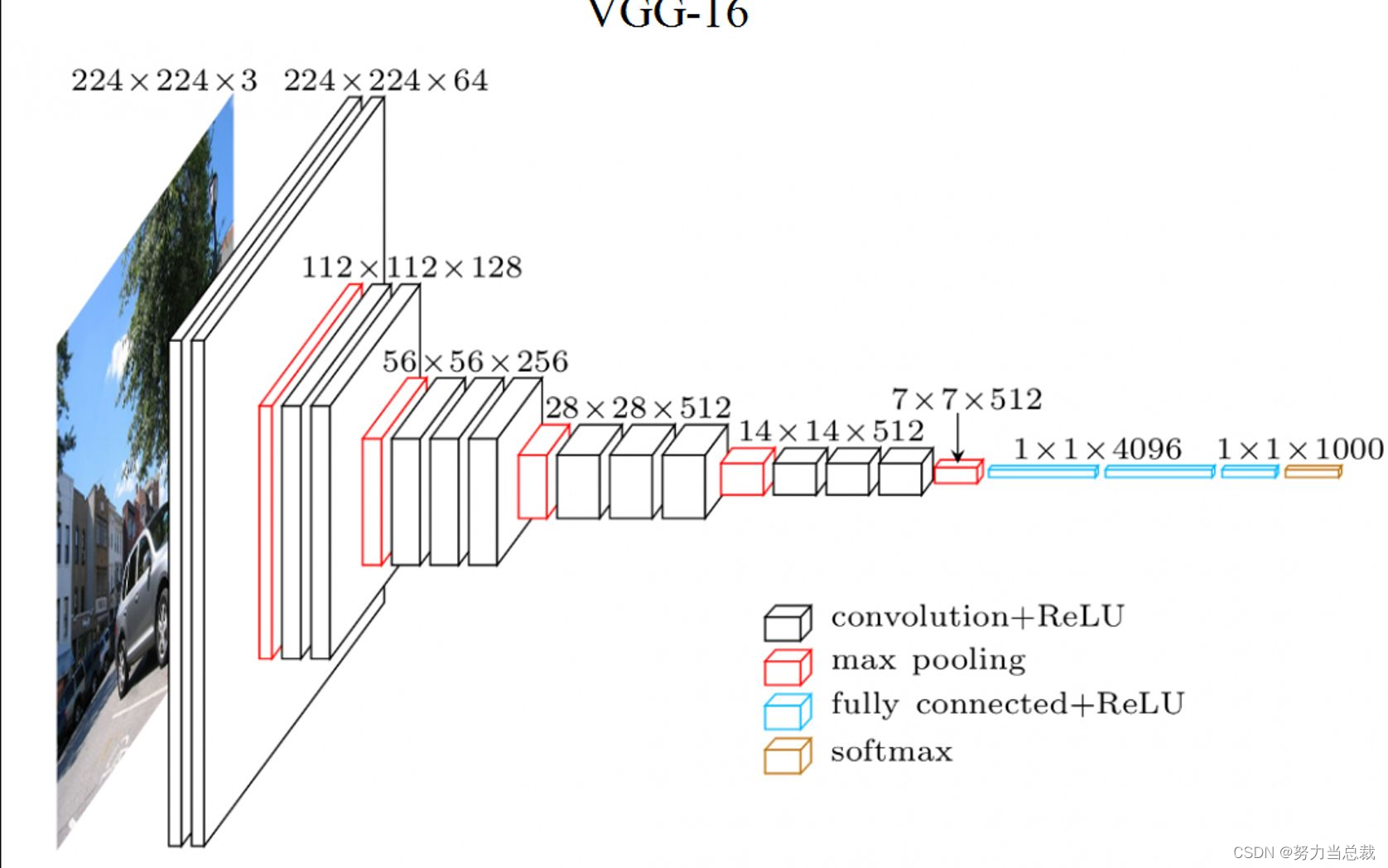

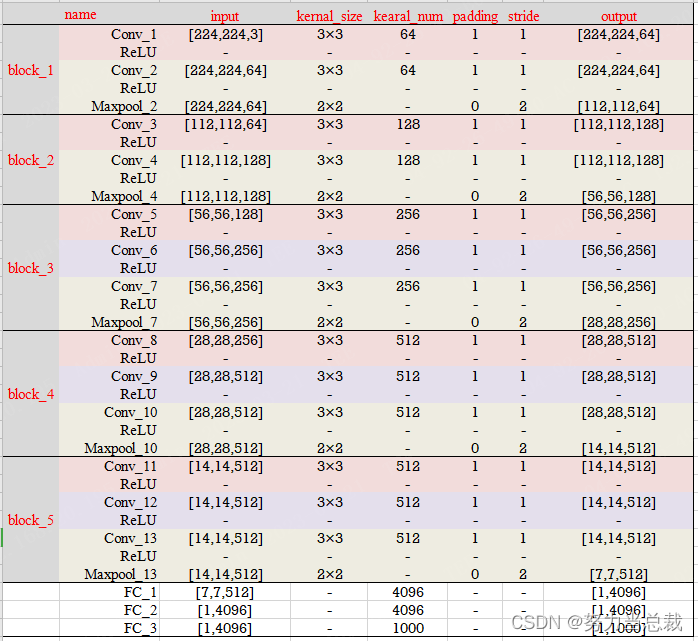

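First, note that the network has 16 weight layers in total (13 convolutional layers + 3 fully connected layers), as shown in the figure.

[Note] The figure was taken from Baidu Images. We always stand on the shoulders of giants, which leaves more time for dating. Thanks to the original author!

Next, let's go through each layer:

[Note] I'm still in the red... so I've given up fighting it. The parameters in the table above were checked several times, but I can't guarantee they are error-free. Sorry!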

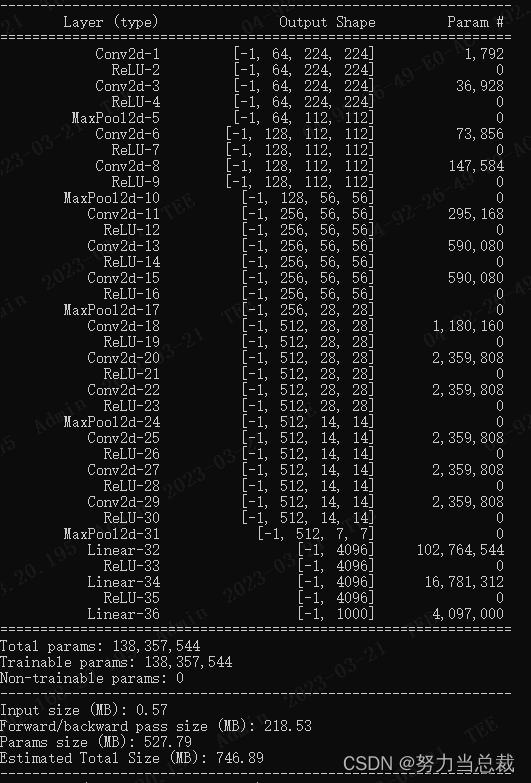

Printing the network structure and parameters with the summary() function

block-1

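1-0 Convolutional layer

Input image: 224*224*3

[height * width * number of channels of the image]

Kernel:

Size: 3*3*3

[height * width * number of channels of the sliding window/kernel; the channel count matches the number of channels of the layer's input]

Number: 64

[usually increased in multiples of 16 to suit the GPU hardware]

Stride: stride = 1

Padding: padding = 1

Output feature map: 224*224*64

[Feature map size formula: (input size - kernel size + 2*padding)/stride + 1]

[i.e. (224-3 + 2*1)/1+1 = 224]

[64 is the number of channels, determined by the number of kernels in this convolutional layer]

Parameters: (3x3x3+1)x64=1792

[3x3x3 is one kernel, 1 is its bias, 64 is the number of kernels]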
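The two formulas used for this layer (output size and parameter count) are applied to every convolutional layer that follows, so it may help to see them as code. This is a minimal sketch; the function names `conv_output_size` and `conv_param_count` are illustrative and not part of any library.

```python
def conv_output_size(in_size, kernel_size, stride=1, padding=0):
    """(in_size - kernel_size + 2*padding) / stride + 1, rounded down."""
    return (in_size - kernel_size + 2 * padding) // stride + 1


def conv_param_count(kernel_size, in_channels, out_channels):
    """(k*k*in_channels weights + 1 bias) per kernel, times out_channels kernels."""
    return (kernel_size * kernel_size * in_channels + 1) * out_channels


# Layer 1-0: 224*224*3 input, 64 kernels of size 3*3*3, stride 1, padding 1
print(conv_output_size(224, 3, stride=1, padding=1))  # 224
print(conv_param_count(3, 3, 64))                     # 1792
```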

1-1 Activation function

ReLU

———————————————————————————————————————————

2-0 Convolutional layer

Input feature map: 224*224*64

Kernel:

Size: 3*3*64

Number: 64

Stride: stride = 1

Padding: padding = 1

Output feature map: 224*224*64

[i.e. (224-3 + 2*1)/1+1 = 224]

Parameters: (3x3x64+1)x64=36928

2-1 Activation function

ReLU

2-2 Pooling layer

Input feature map: 224*224*64

Pooling kernel: 2*2

Stride: stride = 2

Padding: padding = 0

Output feature map: 112*112*64

[i.e. (224-2 + 2*0)/2+1 = 112]

———————————————————————————————————————————

block-2

3-0 Convolutional layer

Input feature map: 112*112*64

Kernel:

Size: 3*3*64

Number: 128

Stride: stride = 1

Padding: padding = 1

Output feature map: 112*112*128

[i.e. (112-3 + 2*1)/1+1 = 112]

Parameters: (3x3x64+1)x128=73856

3-1 Activation function

ReLU

———————————————————————————————————————————

4-0 Convolutional layer

Input feature map: 112*112*128

Kernel:

Size: 3*3*128

Number: 128

Stride: stride = 1

Padding: padding = 1

Output feature map: 112*112*128

[i.e. (112-3 + 2*1)/1+1 = 112]

Parameters: (3x3x128+1)x128=147584

4-1 Activation function

ReLU

4-2 Pooling layer

Input feature map: 112*112*128

Pooling kernel: 2*2

Stride: stride = 2

Padding: padding = 0

Output feature map: 56*56*128

[i.e. (112-2 + 2*0)/2+1 = 56]

———————————————————————————————————————————

block-3

5-0 Convolutional layer

Input feature map: 56*56*128

Kernel:

Size: 3*3*128

Number: 256

Stride: stride = 1

Padding: padding = 1

Output feature map: 56*56*256

[i.e. (56-3 + 2*1)/1+1 = 56]

Parameters: (3x3x128+1)x256=295168

5-1 Activation function

ReLU

———————————————————————————————————————————

6-0 Convolutional layer

Input feature map: 56*56*256

Kernel:

Size: 3*3*256

Number: 256

Stride: stride = 1

Padding: padding = 1

Output feature map: 56*56*256

[i.e. (56-3 + 2*1)/1+1 = 56]

Parameters: (3x3x256+1)x256=590080

6-1 Activation function

ReLU

———————————————————————————————————————————

7-0 Convolutional layer

Input feature map: 56*56*256

Kernel:

Size: 3*3*256

Number: 256

Stride: stride = 1

Padding: padding = 1

Output feature map: 56*56*256

[i.e. (56-3 + 2*1)/1+1 = 56]

Parameters: (3x3x256+1)x256=590080

7-1 Activation function

ReLU

7-2 Pooling layer

Input feature map: 56*56*256

Pooling kernel: 2*2

Stride: stride = 2

Padding: padding = 0

Output feature map: 28*28*256

[i.e. (56-2 + 2*0)/2+1 = 28]

———————————————————————————————————————————

block-4

8-0 Convolutional layer

Input feature map: 28*28*256

Kernel:

Size: 3*3*256

Number: 512

Stride: stride = 1

Padding: padding = 1

Output feature map: 28*28*512

[i.e. (28-3 + 2*1)/1+1 = 28]

Parameters: (3x3x256+1)x512=1180160

8-1 Activation function

ReLU

———————————————————————————————————————————

9-0 Convolutional layer

Input feature map: 28*28*512

Kernel:

Size: 3*3*512

Number: 512

Stride: stride = 1

Padding: padding = 1

Output feature map: 28*28*512

[i.e. (28-3 + 2*1)/1+1 = 28]

Parameters: (3x3x512+1)x512=2359808

9-1 Activation function

ReLU

———————————————————————————————————————————

10-0 Convolutional layer

Input feature map: 28*28*512

Kernel:

Size: 3*3*512

Number: 512

Stride: stride = 1

Padding: padding = 1

Output feature map: 28*28*512

[i.e. (28-3 + 2*1)/1+1 = 28]

Parameters: (3x3x512+1)x512=2359808

10-1 Activation function

ReLU

10-2 Pooling layer

Input feature map: 28*28*512

Pooling kernel: 2*2

Stride: stride = 2

Padding: padding = 0

Output feature map: 14*14*512

[i.e. (28-2 + 2*0)/2+1 = 14]

———————————————————————————————————————————

block-5

11-0 Convolutional layer

Input feature map: 14*14*512

Kernel:

Size: 3*3*512

Number: 512

Stride: stride = 1

Padding: padding = 1

Output feature map: 14*14*512

[i.e. (14-3 + 2*1)/1+1 = 14]

Parameters: (3x3x512+1)x512=2359808

11-1 Activation function

ReLU

———————————————————————————————————————————

12-0 Convolutional layer

Input feature map: 14*14*512

Kernel:

Size: 3*3*512

Number: 512

Stride: stride = 1

Padding: padding = 1

Output feature map: 14*14*512

[i.e. (14-3 + 2*1)/1+1 = 14]

Parameters: (3x3x512+1)x512=2359808

12-1 Activation function

ReLU

———————————————————————————————————————————

13-0 Convolutional layer

Input feature map: 14*14*512

Kernel:

Size: 3*3*512

Number: 512

Stride: stride = 1

Padding: padding = 1

Output feature map: 14*14*512

[i.e. (14-3 + 2*1)/1+1 = 14]

Parameters: (3x3x512+1)x512=2359808

13-1 Activation function

ReLU

13-2 Pooling layer

Input feature map: 14*14*512

Pooling kernel: 2*2

Stride: stride = 2

Padding: padding = 0

Output feature map: 7*7*512

[i.e. (14-2 + 2*0)/2+1 = 7]
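The whole feature extractor can be traced in a few lines by applying the same size formula to each entry of the VGG16 configuration list (the list that also appears in the advanced code later in this post). This is a rough sketch that assumes 3x3 convolutions with stride 1 and padding 1, and 2x2 max pooling with stride 2 and no padding; `trace_shapes` is a name invented for this example.

```python
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def trace_shapes(cfg, size=224, channels=3):
    """Return (spatial size, channels) after every entry of cfg."""
    shapes = []
    for v in cfg:
        if v == "M":
            size = (size - 2 + 2 * 0) // 2 + 1  # 2x2 pooling, stride 2, padding 0
        else:
            size = (size - 3 + 2 * 1) // 1 + 1  # 3x3 conv, stride 1, padding 1
            channels = v                        # output channels = number of kernels
        shapes.append((size, channels))
    return shapes


shapes = trace_shapes(VGG16_CFG)
for entry, (size, channels) in zip(VGG16_CFG, shapes):
    print(f"{entry!s:>4} -> {size}*{size}*{channels}")
```

The last line printed is the 7*7*512 feature map that feeds the fully connected layers.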

———————————————————————————————————————————

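14-0 Fully connected layer

Input feature map: 7*7*512

Kernel:

Size: 7*7*512

[the fully connected layer is treated here as a convolution whose kernel covers the whole 7*7 input]

Number: 4096

Stride: stride = 1

Padding: padding = 0

Output feature map: 1*1*4096

[i.e. (7-7 + 2*0)/1+1 = 1]

Parameters: (7x7x512+1)x4096=102764544

14-1 Activation function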

ReLU

———————————————————————————————————————————

15-0 Fully connected layer

Input feature map: 1*1*4096

Parameters: (4096+1)x4096=16781312

15-1 Activation function

ReLU

———————————————————————————————————————————

16-0 Output layer

Input feature map: 1*1*4096

Parameters: (4096+1)x1000=4097000

[4096 weights plus 1 bias for each of the 1000 classes]
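As a cross-check, the per-layer formula can be summed over the whole network. The sketch below assumes one bias per output unit in every layer (including the output layer) and the 1000-class ImageNet head; the helper names are illustrative only.

```python
def conv_params(k, c_in, c_out):
    """(k*k*c_in weights + 1 bias) per kernel, times c_out kernels."""
    return (k * k * c_in + 1) * c_out


def fc_params(n_in, n_out):
    """(n_in weights + 1 bias) per output unit."""
    return (n_in + 1) * n_out


# (input channels, output channels) of the 13 convolutional layers
conv_channels = [(3, 64), (64, 64), (64, 128), (128, 128),
                 (128, 256), (256, 256), (256, 256),
                 (256, 512), (512, 512), (512, 512),
                 (512, 512), (512, 512), (512, 512)]
# (input units, output units) of the 3 fully connected layers
fc_sizes = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]

conv_total = sum(conv_params(3, c_in, c_out) for c_in, c_out in conv_channels)
fc_total = sum(fc_params(n_in, n_out) for n_in, n_out in fc_sizes)
print(conv_total, fc_total, conv_total + fc_total)
```

Most of the roughly 138 million parameters sit in the three fully connected layers, not in the convolutions.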

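16-1 Classification mapping

Softmax: maps the inputs to real numbers between 0 and 1 and normalizes them so that they sum to 1, so the probabilities over all classes add up to exactly 1. In other words, classification is an either/or setting: a sample is A, or B, or C. But we want more information than that, so the softmax function outputs the probability of A (say 0.88), of B (say 0.08), and of C (say 0.04).

[Note] The figure was taken from Baidu Images; thanks to the original author!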
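To make the softmax description concrete, here is a small pure-Python sketch. The three input scores are made up for illustration, and the function is a plain re-implementation, not the PyTorch one.

```python
import math


def softmax(logits):
    """Exponentiate and normalize; subtracting the max keeps exp() stable."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([3.0, 0.6, -0.1])    # made-up scores for classes A, B, C
print([round(p, 2) for p in probs])  # [0.88, 0.08, 0.04]
print(sum(probs))                    # 1 up to floating-point rounding
```

The class with the largest score keeps the largest probability, so taking the argmax of the scores or of the probabilities gives the same prediction.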

———————————————————————————————————————————

III A journey of a thousand miles begins with hands-on code

1 Loading the CIFAR10 dataset

import torch
import torchvision
import torchvision.transforms as transforms

batchSize = 128  # adjust to what your machine can handle

# Normalize pixel values from [0, 1] to [-1, 1]
normalize = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

# Compose chains several transforms together
data_transform = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    normalize])  # pass the transform object itself; do not call it here

trainset = torchvision.datasets.CIFAR10(root='./Cifar-10',
                                        train=True, download=True, transform=data_transform)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=batchSize, shuffle=True)

testset = torchvision.datasets.CIFAR10(root='./Cifar-10',
                                       train=False, download=True, transform=data_transform)
testloader = torch.utils.data.DataLoader(testset, batch_size=batchSize, shuffle=False)
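Why mean 0.5 and standard deviation 0.5 give the range [-1, 1]: `ToTensor()` scales pixels to [0, 1], and `Normalize` then computes (x - mean) / std per channel. The snippet below reproduces only that arithmetic in plain Python; `normalize_value` is a name made up for this illustration.

```python
def normalize_value(x, mean=0.5, std=0.5):
    """Per-channel formula applied by Normalize: (x - mean) / std."""
    return (x - mean) / std


for pixel in (0.0, 0.5, 1.0):  # values after ToTensor() lie in [0, 1]
    print(pixel, "->", normalize_value(pixel))
```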

2 Building the model

- Basic version

import torch
import torch.nn as nn
import torch.nn.functional as F
from torchsummary import summary

class VGG16(nn.Module):
    def __init__(self, in_channels=1, num_classes=1000):
        super(VGG16, self).__init__()
        # block_1
        self.c1 = nn.Conv2d(in_channels=in_channels, out_channels=64, kernel_size=3, stride=1, padding=1)
        self.a1 = nn.ReLU(inplace=True)
        self.c2 = nn.Conv2d(64, 64, 3, stride=1, padding=1)
        self.a2 = nn.ReLU(inplace=True)
        self.p2 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # block_2
        self.c3 = nn.Conv2d(64, 128, 3, stride=1, padding=1)
        self.a3 = nn.ReLU(inplace=True)
        self.c4 = nn.Conv2d(128, 128, 3, stride=1, padding=1)
        self.a4 = nn.ReLU(inplace=True)
        self.p4 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # block_3
        self.c5 = nn.Conv2d(128, 256, 3, stride=1, padding=1)
        self.a5 = nn.ReLU(inplace=True)
        self.c6 = nn.Conv2d(256, 256, 3, stride=1, padding=1)
        self.a6 = nn.ReLU(inplace=True)
        self.c7 = nn.Conv2d(256, 256, 3, stride=1, padding=1)
        self.a7 = nn.ReLU(inplace=True)
        self.p7 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # block_4
        self.c8 = nn.Conv2d(256, 512, 3, stride=1, padding=1)
        self.a8 = nn.ReLU(inplace=True)
        self.c9 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a9 = nn.ReLU(inplace=True)
        self.c10 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a10 = nn.ReLU(inplace=True)
        self.p10 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # block_5
        self.c11 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a11 = nn.ReLU(inplace=True)
        self.c12 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a12 = nn.ReLU(inplace=True)
        self.c13 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a13 = nn.ReLU(inplace=True)
        self.p13 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # classifier: Dropout + fully connected layers
        self.fc1_d = nn.Dropout(p=0.5)
        self.fc1 = nn.Linear(512*7*7, 4096)
        self.fc1_a = nn.ReLU(inplace=True)
        self.fc2_d = nn.Dropout(p=0.5)
        self.fc2 = nn.Linear(4096, 4096)
        self.fc2_a = nn.ReLU(inplace=True)
        self.fc3 = nn.Linear(4096, num_classes)

    def forward(self, x):
        x = self.c1(x)
        x = self.a1(x)
        x = self.c2(x)
        x = self.a2(x)
        x = self.p2(x)
        x = self.c3(x)
        x = self.a3(x)
        x = self.c4(x)
        x = self.a4(x)
        x = self.p4(x)
        x = self.c5(x)
        x = self.a5(x)
        x = self.c6(x)
        x = self.a6(x)
        x = self.c7(x)
        x = self.a7(x)
        x = self.p7(x)
        x = self.c8(x)
        x = self.a8(x)
        x = self.c9(x)
        x = self.a9(x)
        x = self.c10(x)
        x = self.a10(x)
        x = self.p10(x)
        x = self.c11(x)
        x = self.a11(x)
        x = self.c12(x)
        x = self.a12(x)
        x = self.c13(x)
        x = self.a13(x)
        x = self.p13(x)
        # flatten 512*7*7 feature maps into one vector per sample
        x = torch.flatten(x, start_dim=1)
        x = self.fc1_d(x)
        x = self.fc1(x)
        x = self.fc1_a(x)
        x = self.fc2_d(x)
        x = self.fc2(x)
        x = self.fc2_a(x)
        x = self.fc3(x)
        return x

# Print the model structure
model = VGG16(in_channels=3, num_classes=10)
summary(model, input_size=(3, 224, 224))

- Advanced version

import torch.nn as nn
import torch

# VGG comes in four depths: VGG11/13/16/19.
# The goal here is to capture what the variants have in common, so that the shared
# structure is built with as few lines as possible (and we get to show off a little).
# VGG has two parts: a feature extraction network and a classification network.

# Define the VGG class
class VGG(nn.Module):
    # Two parameters: features and num_classes
    def __init__(self, features, num_classes=1000):
        super(VGG, self).__init__()
        # Feature extraction network: convolutions + activations + pooling
        self.features = features
        # Classification network: Dropout + fully connected layers + activations
        # It has few layers, so they are listed directly.
        # Modules placed in nn.Sequential() run in the given order and the container
        # provides its own forward(), so none has to be written for it.
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(512*7*7, 4096),
            nn.ReLU(True),
            nn.Dropout(p=0.5),
            nn.Linear(4096, 4096),
            nn.ReLU(True),
            nn.Linear(4096, num_classes)
        )

    # Define the order in which data flows through the network
    def forward(self, x):
        x = self.features(x)
        x = torch.flatten(x, start_dim=1)
        x = self.classifier(x)
        return x

# Configurations of the VGG variants, stored in a dict; each list holds the key parameters in layer order
# The variants differ only in how many convolutional layers each block contains
# In order: a number is the number of kernels (the layer's output channels, i.e. the next layer's input channels), 'M' is a max pooling layer
model_names = {
    'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}

# Build the feature extraction network
# Two arguments: in_channels (int) and model_name (a configuration list)
def make_features(in_channels, model_name):
    # List that collects the layers of the feature extraction network
    layers = []
    # Walk through the configuration list in order
    for v in model_name:
        # 'M' in the configuration adds a pooling layer
        if v == "M":
            layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
        # Anything else adds a convolution + activation and updates the channel
        # count, because this layer's output channels are the next layer's input channels
        else:
            conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)
            layers += [conv2d, nn.ReLU(True)]
            in_channels = v
    # Wrap the collected layers in an nn.Sequential() container
    return nn.Sequential(*layers)

# That's it: showing off accomplished. Compared with the basic version we typed about 2/3 less code!
if __name__ == '__main__':
    in_channels = 3
    model_name = model_names["vgg16"]
    model = VGG(make_features(in_channels, model_name), num_classes=10)
    print(model)
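The numbers in the names VGG11/13/16/19 can be recovered from the configuration lists: count the integer entries (convolutional layers) and add the three fully connected layers. The sketch below repeats the `model_names` dict so that it runs on its own; `count_weight_layers` is a helper invented for this check.

```python
model_names = {
    'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}


def count_weight_layers(cfg, num_fc_layers=3):
    """Integer entries are conv layers; 'M' (pooling) has no weights."""
    num_conv = sum(1 for v in cfg if v != 'M')
    return num_conv + num_fc_layers


depths = {name: count_weight_layers(cfg) for name, cfg in model_names.items()}
print(depths)
```

Every list also contains exactly five 'M' entries, which is why all variants end with a 7*7 feature map for a 224*224 input.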

3 Training the model

import torch
from torch.optim import lr_scheduler
from tqdm import tqdm

# Prefer the GPU when one is available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Hyperparameters
n_epochs = 100
in_channels = 3
num_classes = 10
learning_rate = 0.0001  # learning rate: the step size of the optimizer
momentum = 0.9  # momentum factor; only needed if you switch to torch.optim.SGD (Adam does not accept it)

# Instantiate the model
model = VGG16(in_channels=in_channels, num_classes=num_classes)
model = model.to(device)

# Loss function (cross-entropy)
criterion = torch.nn.CrossEntropyLoss()

# Optimizer (Adam)
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

# Adjust the learning rate dynamically: halve it every 10 epochs.
# StepLR lowers the learning rate as the epochs go by, which usually helps training.
# A separate name is used so that the lr_scheduler module is not shadowed.
scheduler = lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.5)

# Start training
for epoch in range(n_epochs):
    running_loss = 0.0
    running_correct = 0
    print("Epoch {}/{}".format(epoch + 1, n_epochs))
    print("-" * 10)
    model.train()  # enable Dropout while training
    # Read the data
    # for data in tqdm(trainloader):  # shows a progress bar
    for data in trainloader:
        X_train, y_train = data
        X_train, y_train = X_train.to(device), y_train.to(device)
        # Forward pass
        outputs = model(X_train)
        # torch.max(input, dim) returns two tensors: the maximum of each row and the index of that maximum
        _, pred = torch.max(outputs, 1)
        # Loss between the labels and the predictions
        loss = criterion(outputs, y_train)
        # Backward pass
        # Reset the gradients
        optimizer.zero_grad()
        # Backpropagate to compute the current gradients
        loss.backward()
        # Update the network parameters from the gradients
        optimizer.step()
        # item() extracts the Python number from a one-element tensor
        running_loss += loss.item()
        running_correct += (pred == y_train).sum().item()
    # Step the scheduler once per epoch so that the learning rate actually decays
    scheduler.step()

    # Evaluate the model
    # eval() switches Batch Normalization and Dropout to inference behaviour
    model.eval()
    testing_correct = 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for data in testloader:
            X_test, y_test = data
            X_test, y_test = X_test.to(device), y_test.to(device)
            # Predict
            outputs = model(X_test)
            _, pred = torch.max(outputs, 1)
            testing_correct += (pred == y_test).sum().item()
    # running_loss sums per-batch mean losses, so it is averaged over the number of batches
    print("Loss is:{:.4f}, Train Accuracy is:{:.4f}%, Test Accuracy is:{:.4f}%".format(
        running_loss / len(trainloader),
        100 * running_correct / len(trainset),
        100 * testing_correct / len(testset)))

torch.save(model.state_dict(), "model_parameter.pkl")
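To see what "halve the learning rate every 10 epochs" means for the values used above, the step-decay rule can be written out by hand. This sketch assumes the scheduler's `step()` is called once at the end of every epoch; `step_lr` is an illustrative function, not the PyTorch class.

```python
def step_lr(initial_lr, epoch, step_size=10, gamma=0.5):
    """Learning rate in effect during the given 0-based epoch under step decay."""
    return initial_lr * gamma ** (epoch // step_size)


for epoch in (0, 9, 10, 20, 30):
    print(epoch, step_lr(0.0001, epoch))
```

With 100 epochs the rate is halved nine times, so the final epochs run at about 1/512 of the initial value; a larger `step_size` or `gamma` may be worth trying if training stalls.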

4 Model prediction

5 Complete code

- Basic version

import torch
import torch.nn as nn
import torchvision
import torchvision.transforms as transforms
from torch.optim import lr_scheduler

class VGG16(nn.Module):
    def __init__(self, in_channels=1, num_classes=1000):
        super(VGG16, self).__init__()
        # block_1
        self.c1 = nn.Conv2d(in_channels=in_channels, out_channels=64, kernel_size=3, stride=1, padding=1)
        self.a1 = nn.ReLU(inplace=True)
        self.c2 = nn.Conv2d(64, 64, 3, stride=1, padding=1)
        self.a2 = nn.ReLU(inplace=True)
        self.p2 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # block_2
        self.c3 = nn.Conv2d(64, 128, 3, stride=1, padding=1)
        self.a3 = nn.ReLU(inplace=True)
        self.c4 = nn.Conv2d(128, 128, 3, stride=1, padding=1)
        self.a4 = nn.ReLU(inplace=True)
        self.p4 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # block_3
        self.c5 = nn.Conv2d(128, 256, 3, stride=1, padding=1)
        self.a5 = nn.ReLU(inplace=True)
        self.c6 = nn.Conv2d(256, 256, 3, stride=1, padding=1)
        self.a6 = nn.ReLU(inplace=True)
        self.c7 = nn.Conv2d(256, 256, 3, stride=1, padding=1)
        self.a7 = nn.ReLU(inplace=True)
        self.p7 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # block_4
        self.c8 = nn.Conv2d(256, 512, 3, stride=1, padding=1)
        self.a8 = nn.ReLU(inplace=True)
        self.c9 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a9 = nn.ReLU(inplace=True)
        self.c10 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a10 = nn.ReLU(inplace=True)
        self.p10 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # block_5
        self.c11 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a11 = nn.ReLU(inplace=True)
        self.c12 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a12 = nn.ReLU(inplace=True)
        self.c13 = nn.Conv2d(512, 512, 3, stride=1, padding=1)
        self.a13 = nn.ReLU(inplace=True)
        self.p13 = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        # classifier (Dropout is left disabled in this version)
        # self.fc1_d = nn.Dropout(p=0.5)
        self.fc1 = nn.Linear(512*7*7, 4096)
        self.fc1_a = nn.ReLU(inplace=True)
        # self.fc2_d = nn.Dropout(p=0.5)
        self.fc2 = nn.Linear(4096, 4096)
        self.fc2_a = nn.ReLU(inplace=True)
        self.fc3 = nn.Linear(4096, num_classes)

    def forward(self, x):
        x = self.c1(x)
        x = self.a1(x)
        x = self.c2(x)
        x = self.a2(x)
        x = self.p2(x)
        x = self.c3(x)
        x = self.a3(x)
        x = self.c4(x)
        x = self.a4(x)
        x = self.p4(x)
        x = self.c5(x)
        x = self.a5(x)
        x = self.c6(x)
        x = self.a6(x)
        x = self.c7(x)
        x = self.a7(x)
        x = self.p7(x)
        x = self.c8(x)
        x = self.a8(x)
        x = self.c9(x)
        x = self.a9(x)
        x = self.c10(x)
        x = self.a10(x)
        x = self.p10(x)
        x = self.c11(x)
        x = self.a11(x)
        x = self.c12(x)
        x = self.a12(x)
        x = self.c13(x)
        x = self.a13(x)
        x = self.p13(x)
        x = torch.flatten(x, start_dim=1)
        x = self.fc1(x)
        x = self.fc1_a(x)
        x = self.fc2(x)
        x = self.fc2_a(x)
        x = self.fc3(x)
        return x

if __name__ == '__main__':
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    batchSize = 128
    normalize = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
    data_transform = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        normalize])

    trainset = torchvision.datasets.CIFAR10(root='./Cifar-10',
                                            train=True, download=True, transform=data_transform)
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=batchSize, shuffle=True)
    testset = torchvision.datasets.CIFAR10(root='./Cifar-10',
                                           train=False, download=True, transform=data_transform)
    testloader = torch.utils.data.DataLoader(testset, batch_size=batchSize, shuffle=False)

    model = VGG16(in_channels=3, num_classes=10).to(device)

    n_epochs = 40
    num_classes = 10
    learning_rate = 0.0001
    momentum = 0.9  # unused with Adam; kept for switching to SGD

    criterion = torch.nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)
    scheduler = lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.5)

    for epoch in range(n_epochs):
        print("Epoch {}/{}".format(epoch + 1, n_epochs))
        print("-" * 10)
        running_loss = 0.0
        running_correct = 0
        model.train()
        for data in trainloader:
            X_train, y_train = data
            X_train, y_train = X_train.to(device), y_train.to(device)
            outputs = model(X_train)
            loss = criterion(outputs, y_train)
            _, pred = torch.max(outputs, 1)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
            running_correct += (pred == y_train).sum().item()
        scheduler.step()  # decay the learning rate once per epoch

        model.eval()
        testing_correct = 0
        with torch.no_grad():
            for data in testloader:
                X_test, y_test = data
                X_test, y_test = X_test.to(device), y_test.to(device)
                outputs = model(X_test)
                _, pred = torch.max(outputs, 1)
                testing_correct += (pred == y_test).sum().item()
        print("Loss is:{:.4f}, Train Accuracy is:{:.4f}%, Test Accuracy is:{:.4f}%".format(
            running_loss / len(trainloader),
            100 * running_correct / len(trainset),
            100 * testing_correct / len(testset)))

    torch.save(model.state_dict(), "model_parameter.pkl")

- Advanced version

import torch
import torch.nn as nn
import torchvision
import torchvision.transforms as transforms
from torch.optim import lr_scheduler

class VGG(nn.Module):
    def __init__(self, features, num_classes=1000):
        super(VGG, self).__init__()
        self.features = features
        # This version uses 2048 hidden units instead of 4096 to lighten the classifier
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(512*7*7, 2048),
            nn.ReLU(True),
            nn.Dropout(p=0.5),
            nn.Linear(2048, 2048),
            nn.ReLU(True),
            nn.Linear(2048, num_classes)
        )

    def forward(self, x):
        x = self.features(x)
        x = torch.flatten(x, start_dim=1)
        x = self.classifier(x)
        return x

model_names = {
    'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}

def make_features(in_channels, model_name):
    layers = []
    for v in model_name:
        if v == "M":
            layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
        else:
            conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)
            layers += [conv2d, nn.ReLU(True)]
            in_channels = v
    return nn.Sequential(*layers)

if __name__ == '__main__':
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    batchSize = 64
    normalize = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
    data_transform = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        normalize])

    trainset = torchvision.datasets.CIFAR10(root='./Cifar-10',
                                            train=True, download=True, transform=data_transform)
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=batchSize, shuffle=True)
    testset = torchvision.datasets.CIFAR10(root='./Cifar-10',
                                           train=False, download=True, transform=data_transform)
    testloader = torch.utils.data.DataLoader(testset, batch_size=batchSize, shuffle=False)

    in_channels = 3
    model_name = model_names["vgg16"]
    # Move the model to the same device as the data
    model = VGG(make_features(in_channels, model_name), num_classes=10).to(device)

    n_epochs = 40
    num_classes = 10
    learning_rate = 0.0001
    momentum = 0.9  # unused with Adam; kept for switching to SGD

    criterion = torch.nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)
    scheduler = lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.5)

    for epoch in range(n_epochs):
        print("Epoch {}/{}".format(epoch + 1, n_epochs))
        print("-" * 10)
        running_loss = 0.0
        running_correct = 0
        model.train()  # enable Dropout while training
        for data in trainloader:
            X_train, y_train = data
            X_train, y_train = X_train.to(device), y_train.to(device)
            outputs = model(X_train)
            loss = criterion(outputs, y_train)
            _, pred = torch.max(outputs, 1)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
            running_correct += (pred == y_train).sum().item()
        scheduler.step()  # decay the learning rate once per epoch

        model.eval()  # disable Dropout for evaluation
        testing_correct = 0
        with torch.no_grad():
            for data in testloader:
                X_test, y_test = data
                X_test, y_test = X_test.to(device), y_test.to(device)
                outputs = model(X_test)
                _, pred = torch.max(outputs, 1)
                testing_correct += (pred == y_test).sum().item()
        print("Loss is:{:.4f}, Train Accuracy is:{:.4f}%, Test Accuracy is:{:.4f}%".format(
            running_loss / len(trainloader),
            100 * running_correct / len(trainset),
            100 * testing_correct / len(testset)))

    torch.save(model.state_dict(), "model_parameter.pkl")
