Preface:

Notes taken while studying the book <AWS Certified Solutions Architect Associate All-in-One Exam Guide (Exam SAA-C01)>.

Because the book is written entirely in English, most of these notes are in English as well.


Auto Scaling

1. Benefits of Auto Scaling

(1) Main benefits

Dynamic scaling

Provision in real time

Best user experience

Never run out of resources.

You can create various rules to provide the best user experience.

Health check and fleet management

Fleet: if you host your application on a group of EC2 servers, the collection of those servers is called a fleet. Auto Scaling checks the health of the instances in the fleet and replaces any that become unhealthy.

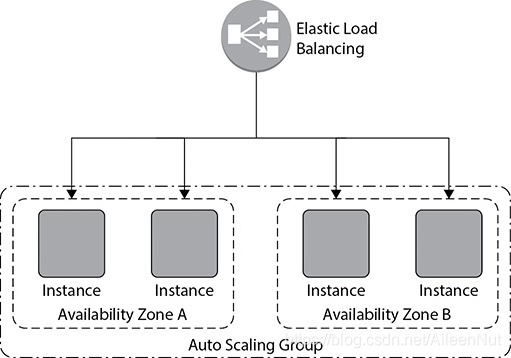

Load balancing

When you use Auto Scaling together with ELB, it can balance the workload across multiple EC2 instances.

Auto Scaling also automatically balances the EC2 instances across multiple AZs when multiple AZs are configured.

Target tracking

You can have Auto Scaling track a target value for a particular metric, and it will adjust the number of EC2 instances to meet that target.

(2) Other services that can use Auto Scaling

EC2 spot instances

EC2 Container Service (ECS)

Elastic MapReduce (EMR) clusters

AppStream 2.0 instances

DynamoDB

(3) How Auto Scaling works in real life

When you use Auto Scaling, you simply add the EC2 instances to an Auto Scaling group, define the minimum and maximum number of servers, and then define the scaling policy.

2. Launch Configuration

You can define what kind of server to use by creating a launch configuration.

A launch configuration is a template that stores all the information about the instance, such as the AMI details, instance type, key pair, security group, IAM instance profile, user data, attached storage, and so on.

One launch configuration can be attached to multiple Auto Scaling groups.

An Auto Scaling group can have only one launch configuration at a time.

Once a launch configuration is created, you can't edit it, including the one tied to an Auto Scaling group. The only way to change it is to create a new launch configuration and associate the Auto Scaling group with the new one. Switching to the new launch configuration has no impact on running servers; only newly launched servers reflect the change.
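To make the "can't edit, only replace" rule concrete, here is a minimal Python sketch. `LaunchConfiguration` and `AutoScalingGroup` are hypothetical classes, not the boto3 API, and the AMI IDs are placeholders. A frozen dataclass stands in for a launch configuration that cannot be edited, and swapping the configuration affects only instances launched afterwards.

```python
from dataclasses import FrozenInstanceError, dataclass, field


@dataclass(frozen=True)
class LaunchConfiguration:
    # Hypothetical template fields; a real launch configuration holds many more.
    name: str
    ami_id: str
    instance_type: str


@dataclass
class AutoScalingGroup:
    launch_config: LaunchConfiguration
    instances: list = field(default_factory=list)

    def launch_instance(self) -> dict:
        # New instances are always built from the group's current launch configuration.
        instance = {"ami": self.launch_config.ami_id, "type": self.launch_config.instance_type}
        self.instances.append(instance)
        return instance

    def replace_launch_config(self, new_config: LaunchConfiguration) -> None:
        # Running instances are left untouched; only future launches use new_config.
        self.launch_config = new_config


v1 = LaunchConfiguration("web-v1", "ami-11111111", "t3.micro")
asg = AutoScalingGroup(v1)
asg.launch_instance()

try:
    v1.instance_type = "t3.large"  # editing in place is rejected
    edited = True
except FrozenInstanceError:
    edited = False

asg.replace_launch_config(LaunchConfiguration("web-v2", "ami-22222222", "t3.micro"))
asg.launch_instance()
print([i["ami"] for i in asg.instances])  # ['ami-11111111', 'ami-22222222']
```

The first instance keeps the old AMI even after the group switches to `web-v2`, which mirrors "only the new servers reflect the change".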

3. Auto Scaling Groups

An Auto Scaling group has all the rules and policies that govern how the EC2 instances will be terminated or started.

When you create an Auto Scaling group, first you need to provide the launch configuration that has the details of the instance type, and then you need to choose the scaling plan or scaling policy.

To create an Auto Scaling group, you provide the minimum number of instances that must be running at any time and the maximum number of instances to which the group can scale. Optionally, you can set a desired capacity, the optimal number of instances the group should run; it must lie between the minimum and the maximum.
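A small sketch of how the three sizes relate. The helper names are hypothetical, and the fallback to the minimum size when no desired capacity is given is an assumption made for this example.

```python
from typing import Optional


def initial_capacity(min_size: int, max_size: int, desired: Optional[int] = None) -> int:
    """Validate the group limits and return how many instances the group should run.

    Assumption for this sketch: without a desired capacity, the group starts at min_size.
    """
    if not 0 <= min_size <= max_size:
        raise ValueError("expected 0 <= min_size <= max_size")
    if desired is None:
        return min_size
    if not min_size <= desired <= max_size:
        raise ValueError("desired capacity must lie between min_size and max_size")
    return desired


def clamp_capacity(requested: int, min_size: int, max_size: int) -> int:
    """Any later scaling request is kept within [min_size, max_size]."""
    return max(min_size, min(requested, max_size))


print(initial_capacity(2, 10))     # 2
print(initial_capacity(2, 10, 4))  # 4
print(clamp_capacity(15, 2, 10))   # 10
```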

You can configure Amazon Simple Notification Service (SNS) to send notifications whenever your Auto Scaling group scales up or down. Amazon SNS can deliver notifications as HTTP or HTTPS POST, as an e-mail, or as a message posted to an Amazon SQS queue.

An Auto Scaling group can span multiple AZs within a region, but cannot span regions.

It is recommended that you use the same instance type throughout an Auto Scaling group for effective load distribution.

(1) Four ways to scale

Maintaining the instance level

Default scaling plan

Define the minimum or the specified number of servers that will be running all the time.

Manual scaling

Scale up or down manually through the console, the API, or the CLI.

Scaling as per the demand

Scale to meet the demand.

Can scale according to various CloudWatch metrics such as an increase in CPU, disk reads, disk writes, network in, network out and so on.

You must define two policies, one for scaling up and one for scaling down.

Scaling as per schedule

Use this when your traffic is predictable and you know that traffic will increase during certain hours.

You need to create a scheduled action that tells the Auto Scaling group to perform the scaling action at the specified time.
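The idea of a scheduled action can be sketched as a list of time-of-day settings. This is a simplified daily model, not the Auto Scaling API: `ScheduledAction` and `settings_at` are hypothetical names, and the business-hours values are made up.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class ScheduledAction:
    # Hypothetical daily action: from `start` onwards, the group uses these limits.
    start: time
    min_size: int
    max_size: int
    desired: int


def settings_at(now: datetime, actions: list[ScheduledAction],
                overnight: ScheduledAction) -> ScheduledAction:
    """Return the action in effect at `now`: the latest one that has already fired today.

    Simplification: before the first action of the day, the `overnight` settings apply.
    """
    fired = [a for a in actions if a.start <= now.time()]
    return max(fired, key=lambda a: a.start) if fired else overnight


business_hours = ScheduledAction(time(9, 0), min_size=6, max_size=12, desired=8)
evening = ScheduledAction(time(18, 0), min_size=2, max_size=4, desired=2)
actions = [business_hours, evening]

print(settings_at(datetime(2024, 1, 8, 10, 30), actions, overnight=evening).desired)  # 8
print(settings_at(datetime(2024, 1, 8, 7, 0), actions, overnight=evening).desired)    # 2
```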

(2) Three types of scaling policies

Simple Scaling

Scale up or down on the basis of only one scaling adjustment.

In this mechanism, you select an alarm based on a metric such as CPU utilization, disk reads, disk writes, network in, or network out.

Cooldown period: how long Auto Scaling waits after a scaling activity before it starts or stops another instance, giving newly launched instances time to take on load.
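Below is a toy model of simple scaling with the two policies described above (one to scale up, one to scale down) sharing a cooldown. `SimpleScaler`, the 70/30 percent thresholds, the one-instance step, and the 300-second cooldown are all illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SimpleScaler:
    """Hypothetical model: add `step` instances when CPU > high, remove `step` when CPU < low."""
    capacity: int
    min_size: int
    max_size: int
    high: float = 70.0
    low: float = 30.0
    step: int = 1
    cooldown: int = 300                 # seconds to wait after a scaling activity
    last_activity: float | None = None  # time of the last scaling activity

    def evaluate(self, now: float, cpu: float) -> int:
        # During the cooldown, alarms are ignored so new instances can absorb load.
        if self.last_activity is not None and now - self.last_activity < self.cooldown:
            return self.capacity
        if cpu > self.high:
            new = min(self.capacity + self.step, self.max_size)
        elif cpu < self.low:
            new = max(self.capacity - self.step, self.min_size)
        else:
            return self.capacity
        if new != self.capacity:
            self.capacity = new
            self.last_activity = now
        return self.capacity


scaler = SimpleScaler(capacity=2, min_size=1, max_size=4)
history = [scaler.evaluate(t, cpu) for t, cpu in [(0, 85), (60, 90), (400, 90), (1200, 10)]]
print(history)  # [3, 3, 4, 3]
```

The alarm at t=60 is ignored because it falls inside the cooldown started at t=0.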

Simple Scaling with Steps

Like Simple Scaling, but you add a few more steps and have finer-grained control.

Use case: when CPU utilization is between 50 and 60 percent, add two instances; when CPU utilization is 60 percent or more, add four instances.

There are three ways to change the capacity:

Exact capacity: increase or decrease to an exact capacity.

Change in capacity: increase or decrease by a specific number of instances.

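Percentage change in capacity: increase or decrease by a certain percentage of the current capacity. The result is rounded to a whole number of instances; per the AWS documentation, fractional results are rounded toward zero, except that a change smaller than one instance is rounded to one instance.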
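The step-scaling use case and the three adjustment types can be sketched together. The step table encodes the 50 to 60 percent and 60-percent-plus example; the adjustment type strings mirror the AWS names, but the functions are hypothetical, and the percentage rounding is our reading of the documented rule.

```python
from __future__ import annotations

import math

# Step adjustments from the use case (illustrative values):
# (lower bound in %, upper bound in % or None for "no upper bound", instances to add)
STEPS = [(50, 60, 2), (60, None, 4)]


def step_adjustment(cpu: float) -> int:
    """Return how many instances to add for the step whose range contains `cpu`."""
    for lower, upper, add in STEPS:
        if cpu >= lower and (upper is None or cpu < upper):
            return add
    return 0  # below every step: no change


def apply_adjustment(current: int, adjustment_type: str, value: float) -> int:
    """Apply ExactCapacity, ChangeInCapacity or PercentChangeInCapacity to `current`.

    Percentage results are truncated toward zero, except that a change smaller than
    one instance becomes +1 or -1 (assumed rounding rule, see the notes above).
    """
    if adjustment_type == "ExactCapacity":
        return int(value)
    if adjustment_type == "ChangeInCapacity":
        return current + int(value)
    if adjustment_type == "PercentChangeInCapacity":
        change = current * value / 100
        if 0 < abs(change) < 1:
            delta = 1 if change > 0 else -1
        else:
            delta = math.trunc(change)
        return current + delta
    raise ValueError(f"unknown adjustment type: {adjustment_type}")


print(apply_adjustment(10, "ChangeInCapacity", step_adjustment(55)))  # 12
print(apply_adjustment(10, "ChangeInCapacity", step_adjustment(75)))  # 14
print(apply_adjustment(10, "PercentChangeInCapacity", 25))           # 12
print(apply_adjustment(3, "PercentChangeInCapacity", 10))            # 4
```

With 10 instances, +25 percent is 2.5 instances, which is truncated to 2; with 3 instances, +10 percent is 0.3 instances, which is rounded up to 1.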

Target-tracking Scaling Policies

You either select a predefined metric or choose your own metric, and then set a target value for it.

Once the alarm is triggered, Auto Scaling calculates how many instances it needs to add or remove to bring the metric back to the target value, and adjusts the group automatically.
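As a rough illustration of that calculation, here is a proportional estimate for a metric that scales with load, such as average CPU utilization. This is a simplified model we assume for teaching purposes; AWS does not document its exact target-tracking calculation in these notes, and the function name is hypothetical.

```python
import math


def target_tracking_capacity(current: int, metric: float, target: float,
                             min_size: int, max_size: int) -> int:
    """Estimate the instance count that brings a load-proportional metric back to target.

    If average CPU is `metric` on `current` instances, total load is current * metric,
    so roughly ceil(current * metric / target) instances are needed, clamped to the limits.
    """
    if target <= 0:
        raise ValueError("target must be positive")
    needed = math.ceil(current * metric / target)
    return max(min_size, min(needed, max_size))


print(target_tracking_capacity(4, 80, 50, 1, 12))   # 7  (320 / 50 = 6.4, rounded up)
print(target_tracking_capacity(4, 20, 50, 1, 12))   # 2  (80 / 50 = 1.6, rounded up)
print(target_tracking_capacity(10, 90, 50, 1, 12))  # 12 (18 needed, capped at max_size)
```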

4. Termination Policy

The termination policy determines which EC2 instances are terminated first when the group scales down.

When an instance is terminated, it first deregisters from the load balancer, if there is one, and then waits for the connection-draining (deregistration delay) period, if one is configured, so that connections already open to that instance can complete.

There are several ways to define a termination policy:

· Terminate the longest running server in your fleet

The server that has been running the longest may not have been patched, or may have accumulated memory leaks, and so on.

· Terminate the servers that are closest to the next billing hour

More value for money, because you have already paid for the current hour.

· Terminate the servers with the oldest launch configuration

Useful when you are running servers with an older version of an AMI and are planning to replace them.
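The three policies above can be compared on a tiny fleet. The policy strings match the AWS termination policy names `OldestInstance`, `ClosestToNextInstanceHour`, and `OldestLaunchConfiguration`, but the selection logic is a simplified sketch (the real default policy also balances instances across AZs first), and the `Instance` class and timings are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    instance_id: str
    launch_time: int            # seconds since some reference time
    launch_config_created: int  # creation time of the instance's launch configuration


def pick_for_termination(instances: list[Instance], policy: str, now: int) -> Instance:
    """Choose one instance to terminate under a simplified version of each policy."""
    if policy == "OldestInstance":
        return min(instances, key=lambda i: i.launch_time)
    if policy == "ClosestToNextInstanceHour":
        # Seconds left until each instance completes its current billing hour.
        return min(instances, key=lambda i: 3600 - (now - i.launch_time) % 3600)
    if policy == "OldestLaunchConfiguration":
        return min(instances, key=lambda i: i.launch_config_created)
    raise ValueError(f"unknown policy: {policy}")


fleet = [
    Instance("i-a", launch_time=0, launch_config_created=0),
    Instance("i-b", launch_time=5_000, launch_config_created=4_000),
    Instance("i-c", launch_time=6_500, launch_config_created=4_000),
]
for policy in ("OldestInstance", "ClosestToNextInstanceHour", "OldestLaunchConfiguration"):
    print(policy, pick_for_termination(fleet, policy, now=10_000).instance_id)
# prints: OldestInstance i-a, then ClosestToNextInstanceHour i-c, then OldestLaunchConfiguration i-a
```

At `now=10_000`, i-c has run 3,500 seconds and is only 100 seconds from completing a full hour, so it is the cheapest to give up.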

5. Elastic Load Balancing

Elastic Load Balancing automatically distributes incoming application traffic across multiple targets, such as applications, microservices, and containers hosted on Amazon EC2 instances.

You can configure a load balancer to be either external facing or internal facing.

External load balancer: accessible from the Internet.

Internal load balancer: not accessible from the Internet; it balances load across instances running in a private subnet.

Load balancers in EC2-Classic are always Internet-facing load balancers.

A load balancer within a VPC can be either external facing or internal facing.

(1) Advantages of ELB

· Elastic

Scales automatically, with no manual intervention at all.

· Integrated

Can integrate with various AWS services.

Integrate with Auto Scaling: scale EC2 instances and workload distribution

Integrate with CloudWatch: ELB publishes its metrics to CloudWatch, which are used to decide whether to scale instances up or down or take some other action

Integrate with Route 53: for DNS failover

· Secured

Provides security features such as integrated certificate management, SSL decryption, and port forwarding.

You can terminate HTTPS/SSL traffic at the load balancer to avoid running the CPU-intensive decryption process on EC2 instances. This can also help mitigate DDoS attacks.

You can configure security groups for the load balancer to control incoming and outgoing traffic.

· Highly available

Can distribute the traffic across Amazon EC2 instances, containers and IP addresses.

Deploy applications across multiple AZs and have ELB distribute the traffic across the multiple AZs. By doing this, if one of the AZs goes down, your application continues to run.

· Cheap

(2) How ELB works

Offers high availability: even if you do not deploy your application or workload across multiple AZs (which is always recommended), the load balancer itself is always deployed across multiple AZs.

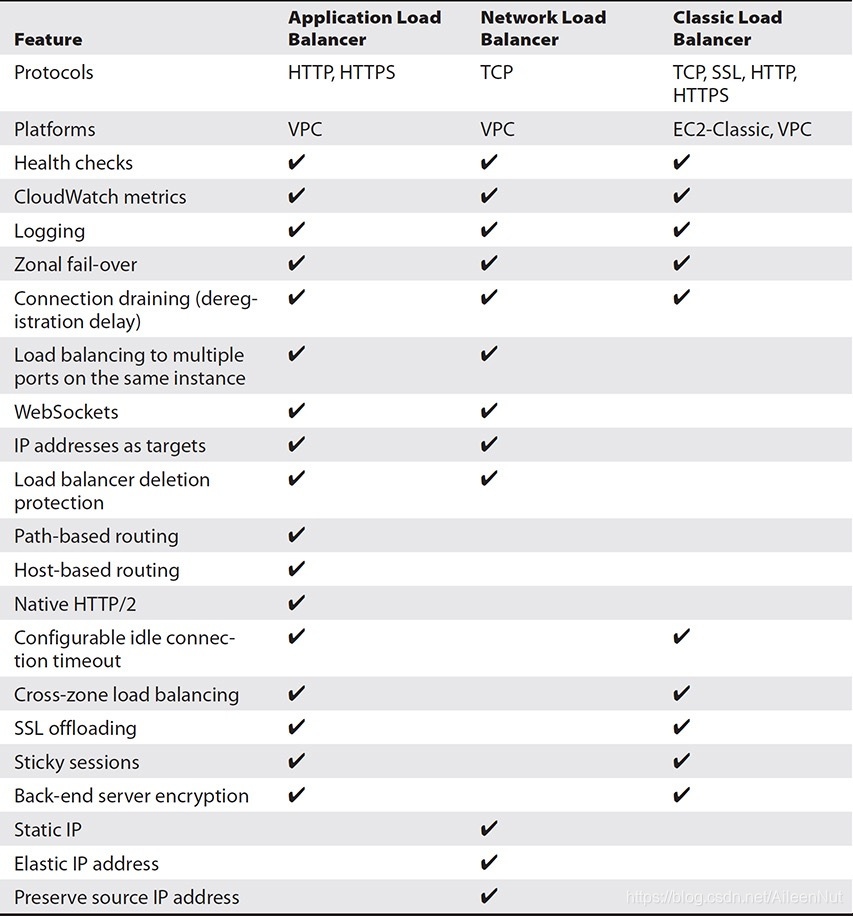

(3) Types of Load Balancers

· Network Load Balancer

The network load balancer (NLB), or the TCP load balancer, acts in layer 4 of the OSI model.

It can handle connections across EC2 instances, containers and IP addresses based on IP data.

In all cases, all the requests flow through the load balancer, then the load balancer handles those packets and forwards them to the back end as they are received.

It supports both TCP and SSL.

It preserves the client-side source IP address, allowing the back end to see the IP address of the client.

It does not modify the packet: it adds no X-Forwarded-For headers or proxy protocol prepends, and it does not change the source or destination IP addresses or ports of the request.

· Application Load Balancer

The application load balancer (ALB) works on layer 7 of the OSI model.

It supports HTTP and HTTPS.

Whenever a request comes in, the ALB inspects its HTTP headers and then decides the course of action. The headers might be modified (for example, the ALB adds an X-Forwarded-For header).

The ALB supports the following kinds of routing:

Content-based routing: If your application consists of multiple services, it can route to a specific service as per the content of the request.

Host-based routing: You route a client request based on the Host field of the HTTP header.

Path-based routing: You route a client request based on the URL path of the HTTP request.

· Classic Load Balancer

It supports EC2-Classic instances. If you are not using EC2-Classic, you should use either an application or a network load balancer.

It supports both network and application load balancing, so it operates at layer 4 as well as layer 7 of the OSI model.

6. Load Balancer Key Concepts and Terminology

Because the application load balancer supports content-based routing, its biggest benefit is that it allows multiple applications to be hosted behind a single load balancer.

Using path-based routing, you can have up to ten different sets of rules, which means you can host up to ten applications using one load balancer.

The application load balancer provides native support for microservice and container-based architectures.

With the application load balancer, you can register the same instance multiple times on different ports. This is helpful for container-based applications, because containers are often assigned dynamic ports. If you use Amazon ECS, it registers tasks with the load balancer automatically using dynamic port mapping.

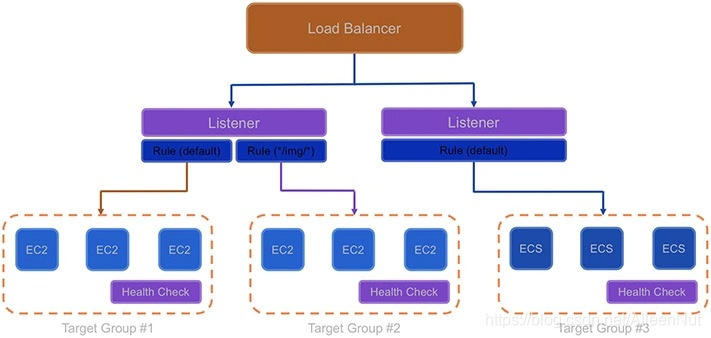

(1) Core Components of Load Balancer

· Listeners

Listeners define the protocol and port on which the load balancer listens for incoming connections.

A load balancer needs at least one listener to accept incoming traffic and can support up to ten listeners.

Routing rules are defined on the listener.

For ALB, the listener supports the HTTP and HTTPS protocols, and also provides native support for HTTP/2 with HTTPS listeners.

For NLB, the listener supports the TCP protocol.

· Target Groups and Targets

The target groups are logical groupings of targets behind a load balancer.

Target groups can exist independently of a load balancer. You can create a target group, keep it ready, and keep adding resources to it without immediately associating it with a load balancer. You can associate it with a load balancer when needed, and you can also associate it with an Auto Scaling group.

The target is a logical load-balancing target: an EC2 instance, microservice, or container-based application for an ALB, and an instance or IP address for an NLB.

The same EC2 instance can be registered with a target group multiple times using different ports.

A single target can be registered with multiple target groups.
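A minimal in-memory model of these registration rules, assuming a target is identified by an (instance ID, port) pair. `TargetRegistry` and the instance ID are hypothetical; the ports stand in for dynamically assigned container ports.

```python
from __future__ import annotations

from collections import defaultdict


class TargetRegistry:
    """Hypothetical model of target groups keyed by (instance_id, port).

    Because the port is part of the key, one instance can be registered several times
    in the same group, and the same instance can also belong to several groups.
    """

    def __init__(self) -> None:
        self.groups: dict[str, set[tuple[str, int]]] = defaultdict(set)

    def register(self, group: str, instance_id: str, port: int) -> None:
        self.groups[group].add((instance_id, port))

    def groups_for(self, instance_id: str) -> list[str]:
        # Every group that has this instance registered on at least one port.
        return sorted(g for g, targets in self.groups.items()
                      if any(i == instance_id for i, _ in targets))


registry = TargetRegistry()
registry.register("web-tg", "i-0abc", 32768)  # two containers on one instance,
registry.register("web-tg", "i-0abc", 32769)  # each on its own dynamic port
registry.register("api-tg", "i-0abc", 8080)   # the same instance in a second group
print(len(registry.groups["web-tg"]))         # 2
print(registry.groups_for("i-0abc"))          # ['api-tg', 'web-tg']
```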

· Rules

Rules provide the link between listeners and target groups and consist of conditions and actions.

When a request meets the condition of the rule, the associated action is taken.

Rules can forward requests to a specified target group.

When you create a listener, it gets a default rule. The default rule has the lowest priority, so it is evaluated last.

Currently, rules support only the “forward” action.

There are two types of rule conditions: host and path.

Currently, a load balancer can support up to 10 rules; support for 100 rules is on the roadmap.
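Rule evaluation can be sketched as "sort by priority, first match wins, otherwise fall through to the default rule". The `Rule` class, the wildcard matching via `fnmatchcase`, and the case-handling choices are assumptions for illustration, not the ALB implementation; only the forward action is modelled.

```python
from __future__ import annotations

from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Rule:
    priority: int      # lower number = higher priority = evaluated earlier
    condition: str     # "host" or "path", the two condition types in these notes
    pattern: str       # wildcard pattern, e.g. "/api/*"
    target_group: str  # forward action: send the request to this target group


def evaluate_listener(rules: list[Rule], default_target_group: str, host: str, path: str) -> str:
    """Return the target group a request is forwarded to (first matching rule wins)."""
    for rule in sorted(rules, key=lambda r: r.priority):
        # Assumption: host names compared case-insensitively, paths case-sensitively.
        value = host.lower() if rule.condition == "host" else path
        if fnmatchcase(value, rule.pattern):
            return rule.target_group
    return default_target_group  # default rule: lowest priority, evaluated last


rules = [
    Rule(20, "path", "/api/*", "api-v1"),
    Rule(10, "path", "/api/v2/*", "api-v2"),
    Rule(30, "host", "admin.example.com", "admin"),
]
print(evaluate_listener(rules, "web", "www.example.com", "/api/v2/users"))  # api-v2
print(evaluate_listener(rules, "web", "www.example.com", "/api/users"))     # api-v1
print(evaluate_listener(rules, "web", "ADMIN.example.com", "/"))            # admin
print(evaluate_listener(rules, "web", "www.example.com", "/index.html"))    # web
```

The first call shows why priority matters: `/api/v2/users` also matches `/api/*`, but the priority-10 rule is checked first.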

(2) Health Check

A health check tests the target or target group at an interval you define to make sure it is working correctly.

If any of the targets have issues, then health checks allow for traffic to be shifted away from the impaired or failed instances.

HealthCheckIntervalSeconds: the interval, in seconds, at which the load balancer sends a health check request to each target.

You specify the port, protocol, and ping path for the health check request.

The ALB supports HTTP and HTTPS health checks.

The NLB supports TCP health checks.
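Health checks usually flip a target's state only after several consecutive results, which ELB target groups expose as `HealthyThresholdCount` and `UnhealthyThresholdCount`. The sketch below replays check results under that rule; the threshold values are illustrative, not AWS defaults. With one check every 30 seconds and an unhealthy threshold of 2, a failed target would be marked unhealthy after roughly 60 seconds.

```python
from __future__ import annotations


def health_states(results: list[bool], healthy_threshold: int = 3,
                  unhealthy_threshold: int = 2, initial: str = "healthy") -> list[str]:
    """Replay consecutive health check results and return the target state after each check.

    A target becomes unhealthy after `unhealthy_threshold` consecutive failures and
    healthy again after `healthy_threshold` consecutive successes.
    """
    state, streak, last = initial, 0, None
    states = []
    for ok in results:
        streak = streak + 1 if ok == last else 1  # length of the current run of results
        last = ok
        if ok and state == "unhealthy" and streak >= healthy_threshold:
            state = "healthy"
        elif not ok and state == "healthy" and streak >= unhealthy_threshold:
            state = "unhealthy"
        states.append(state)
    return states


checks = [True, False, False, True, True, True]
print(health_states(checks))
# ['healthy', 'healthy', 'unhealthy', 'unhealthy', 'unhealthy', 'healthy']
```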

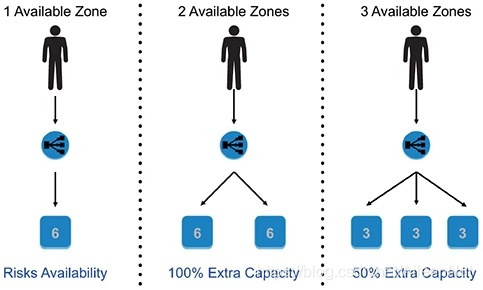

(3) Using Multiple AZs

When you scale up or down dynamically, it is important to preserve the session state. It is recommended that you store session state outside the EC2 servers; DynamoDB is a great way to do this.

Sometimes clients cache the IP address resolved from DNS, and as a result they keep sending traffic to the same load balancer node, and therefore to the same instances, every time. This causes an imbalance in instance load because proper load distribution does not happen. Cross-zone load balancing solves this problem.

Cross-zone load balancing distributes requests evenly across the registered targets in all enabled Availability Zones.

For an ALB, cross-zone load balancing is enabled by default.

For a Classic Load Balancer created through the API or CLI, cross-zone load balancing is disabled by default and you need to enable it manually. If you create it through the console, it is enabled by default.

By default, for an NLB, each load balancer node distributes traffic across the registered targets in its own Availability Zone only.

Cross-zone load balancing happens across the targets, not at the AZ level.
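A worked example of why this matters, assuming clients are spread evenly over one load balancer node per AZ and all instances are healthy. With 2 instances in one AZ and 8 in another, each node receives 50 percent of the traffic. Without cross-zone load balancing, the two instances in the first AZ each get 25 percent while the eight in the second get 6.25 percent; with it, all ten get 10 percent. The function name is hypothetical.

```python
from __future__ import annotations


def per_instance_share(instances_per_az: dict[str, int], cross_zone: bool) -> dict[str, float]:
    """Percentage of total traffic that each single instance in an AZ receives.

    Assumes DNS spreads clients evenly over the load balancer nodes (one per AZ).
    """
    total = sum(instances_per_az.values())
    if cross_zone:
        # Every node spreads its traffic over all registered instances in all AZs.
        return {az: 100 / total for az in instances_per_az}
    # Each node keeps its share of the traffic inside its own AZ.
    node_share = 100 / len(instances_per_az)
    return {az: node_share / n for az, n in instances_per_az.items()}


azs = {"us-east-1a": 2, "us-east-1b": 8}
print(per_instance_share(azs, cross_zone=False))  # {'us-east-1a': 25.0, 'us-east-1b': 6.25}
print(per_instance_share(azs, cross_zone=True))   # {'us-east-1a': 10.0, 'us-east-1b': 10.0}
```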

In the case of the ALB, when the load balancer receives a request, it evaluates the listener rules in priority order to determine which rule to apply, selects a target from the rule's target group, and applies the rule's action. Routing is performed independently for each target group, even when a target is registered with multiple target groups.

In the case of the NLB, the load balancer receives a request and selects a target from the target group for the default rule using a flow hash algorithm based on the protocol, source IP address, source port, destination IP address, and destination port. This provides session stickiness: the load balancer routes repeated requests from the same flow to the same EC2 instance whenever possible. The advantage is that the EC2 instance can cache user data locally for better performance.
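The stickiness property follows from hashing the 5-tuple: the same flow always produces the same hash and therefore the same target. The sketch below uses SHA-256 purely as a stable stand-in (Python's built-in `hash()` is randomized per process for strings); the NLB's real hash function is not described here, and the instance IDs and addresses are placeholders.

```python
from __future__ import annotations

import hashlib


def select_target(targets: list[str], protocol: str, src_ip: str, src_port: int,
                  dst_ip: str, dst_port: int) -> str:
    """Pick a target by hashing the flow's 5-tuple (stand-in for the NLB flow hash)."""
    key = f"{protocol}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return targets[digest % len(targets)]


targets = ["i-aaa", "i-bbb", "i-ccc"]
first = select_target(targets, "TCP", "203.0.113.10", 51514, "10.0.1.5", 443)
again = select_target(targets, "TCP", "203.0.113.10", 51514, "10.0.1.5", 443)
print(first == again)  # True: the same flow always lands on the same target
```

A new connection from the same client usually uses a different source port, so it may hash to a different target.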

In the case of the Classic Load Balancer, when a load balancer node receives a request, it selects a registered instance using the least outstanding requests routing algorithm for HTTP and HTTPS listeners, and the round-robin routing algorithm for TCP listeners.
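Both algorithms are easy to sketch. These classes are hypothetical illustrations of the two strategies named above, not the load balancer's code; ties in the least-outstanding picker go to the instance registered first.

```python
from __future__ import annotations

from itertools import cycle


class RoundRobin:
    """TCP listeners: hand out registered instances in a fixed rotation."""

    def __init__(self, instances: list[str]) -> None:
        self._rotation = cycle(instances)

    def pick(self) -> str:
        return next(self._rotation)


class LeastOutstanding:
    """HTTP/HTTPS listeners: send each request to the instance with the fewest in-flight requests."""

    def __init__(self, instances: list[str]) -> None:
        self.outstanding = {i: 0 for i in instances}

    def pick(self) -> str:
        # min() returns the first key on ties, i.e. registration order.
        instance = min(self.outstanding, key=self.outstanding.get)
        self.outstanding[instance] += 1
        return instance

    def complete(self, instance: str) -> None:
        self.outstanding[instance] -= 1


rr = RoundRobin(["i-a", "i-b", "i-c"])
print([rr.pick() for _ in range(4)])  # ['i-a', 'i-b', 'i-c', 'i-a']

lor = LeastOutstanding(["i-a", "i-b"])
first = lor.pick()   # both idle: i-a
second = lor.pick()  # i-a is busy: i-b
lor.complete(first)  # i-a finishes its request
third = lor.pick()   # i-a has 0 in flight, i-b has 1: i-a
print(first, second, third)  # i-a i-b i-a
```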
