PyTorch Example: LogisticRegressionModel

Version info

- PyTorch: 1.12.1
- Python: 3.7.13

Imports

```python
import torch
import matplotlib.pyplot as plt
```

Raw data

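```python
# 6 samples with 2 features each; labels are float tensors of shape (6, 1), as BCELoss expects
X_data = torch.Tensor([[1.0, 2.9], [2.0, 6.1], [3.0, 9.2], [4.0, 12.3], [5.0, 14.9], [6.0, 18.1]])
y_data = torch.Tensor([[0], [0], [0], [1], [1], [1]])
```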

Build the model

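```python
class MyLogisticRegressionModel(torch.nn.Module):
    def __init__(self):
        super(MyLogisticRegressionModel, self).__init__()
        # Linear layer: 2 input features -> 1 output (w1*x1 + w2*x2 + b)
        self.linear = torch.nn.Linear(2, 1)
        # Sigmoid maps the linear output to a probability in (0, 1)
        self.logistic = torch.nn.Sigmoid()

    def forward(self, x):
        output = self.linear(x)
        output = self.logistic(output)
        return output
```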
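For intuition, the model's forward pass computes p = σ(w1·x1 + w2·x2 + b), where σ(z) = 1 / (1 + e^(-z)) is the sigmoid. Below is a minimal plain-Python sketch of that computation for a single two-feature sample, using only the standard library; `sigmoid` and `forward` are illustrative names, not PyTorch APIs.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Logistic function, the element-wise mapping torch.nn.Sigmoid applies."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # Equivalent form for negative z so that math.exp never overflows
    e = math.exp(z)
    return e / (1.0 + e)


def forward(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear layer (dot product plus bias) followed by the sigmoid."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)


# With all-zero parameters every input maps to probability 0.5
print(forward([0.0, 0.0], 0.0, [4.0, 12.3]))  # 0.5
```

The branch on the sign of z only guards against overflow for large negative inputs. Both branches compute the same function.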

Training

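```python
learning_rate = 0.005
epoch_num = 100

my_model = MyLogisticRegressionModel()
# Binary cross-entropy, summed (not averaged) over the batch
criterion = torch.nn.BCELoss(reduction='sum')
optimizer = torch.optim.SGD(my_model.parameters(), lr=learning_rate)

loss_list = []
for epoch in range(epoch_num):
    y_pred = my_model(X_data)             # forward pass on the full dataset
    loss_val = criterion(y_pred, y_data)

    optimizer.zero_grad()                 # clear gradients from the previous step
    loss_val.backward()                   # backpropagate
    optimizer.step()                      # update the weight and bias

    print(f"Train... ===> epoch = {epoch}, loss = {loss_val.item()}")
    loss_list.append(loss_val.item())
```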
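`BCELoss(reduction='sum')` adds up the per-sample binary cross-entropy -[y·log(p) + (1 - y)·log(1 - p)] over the batch instead of averaging it. Here is a minimal plain-Python sketch of that formula. The name `bce_sum` is illustrative, and the sketch omits PyTorch's clamping of the log terms to at least -100.

```python
import math
from typing import Sequence


def bce_sum(p: Sequence[float], y: Sequence[float]) -> float:
    """Summed binary cross-entropy, the formula behind BCELoss(reduction='sum').

    Assumes every probability lies strictly between 0 and 1 (no clamping).
    """
    return -sum(
        yi * math.log(pi) + (1.0 - yi) * math.log(1.0 - pi)
        for pi, yi in zip(p, y)
    )


labels = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]  # same labels as y_data
always_half = [0.5] * 6                  # a model that always outputs 0.5
print(bce_sum(always_half, labels))      # 6 * ln(2), about 4.1589
```

This gives a reference point for the training log. A constant 0.5 prediction scores 6·ln 2 ≈ 4.16 on these six samples, so the logged loss of about 3.75 at epoch 99 is only somewhat below that baseline.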

```
Train... ===> epoch = 0, loss = 6.798981189727783
Train... ===> epoch = 1, loss = 4.380733966827393
Train... ===> epoch = 2, loss = 4.372033596038818
Train... ===> epoch = 3, loss = 4.36480188369751
Train... ===> epoch = 4, loss = 4.357597351074219
Train... ===> epoch = 5, loss = 4.350410461425781
Train... ===> epoch = 6, loss = 4.343241214752197
Train... ===> epoch = 7, loss = 4.336090087890625
Train... ===> epoch = 8, loss = 4.328956604003906
Train... ===> epoch = 9, loss = 4.321841239929199
......
Train... ===> epoch = 90, loss = 3.8016786575317383
Train... ===> epoch = 91, loss = 3.7959144115448
Train... ===> epoch = 92, loss = 3.7901651859283447
Train... ===> epoch = 93, loss = 3.7844314575195312
Train... ===> epoch = 94, loss = 3.778712272644043
Train... ===> epoch = 95, loss = 3.773008346557617
Train... ===> epoch = 96, loss = 3.7673189640045166
Train... ===> epoch = 97, loss = 3.761645555496216
Train... ===> epoch = 98, loss = 3.755985736846924
Train... ===> epoch = 99, loss = 3.7503416538238525
```
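Each pass through the training loop is one full-batch gradient step. For a sigmoid output paired with summed BCE, the gradients reduce to dL/dw_j = sum_i (p_i - y_i)·x_ij and dL/db = sum_i (p_i - y_i). Plain SGD (momentum 0, the `torch.optim.SGD` default) then applies w <- w - lr·dL/dw. The sketch below performs one such step by hand on the same six samples with lr = 0.005. It starts from zero parameters purely for illustration. PyTorch initializes `nn.Linear` randomly, so these numbers will not match the log above. The helper names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

X = [[1.0, 2.9], [2.0, 6.1], [3.0, 9.2], [4.0, 12.3], [5.0, 14.9], [6.0, 18.1]]
Y = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]


def sigmoid(z: float) -> float:
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def gradients(w: Sequence[float], b: float, xs: Sequence[Sequence[float]],
              ys: Sequence[float]) -> Tuple[List[float], float]:
    """Gradients of the summed BCE loss with respect to the weights and bias."""
    grad_w = [0.0] * len(w)
    grad_b = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
        err = p - y  # dL/dz for sigmoid followed by BCE
        for j, xj in enumerate(x):
            grad_w[j] += err * xj
        grad_b += err
    return grad_w, grad_b


def sgd_step(w: Sequence[float], b: float, xs, ys, lr: float) -> Tuple[List[float], float]:
    """One plain SGD update (no momentum, no weight decay)."""
    grad_w, grad_b = gradients(w, b, xs, ys)
    return [wj - lr * gj for wj, gj in zip(w, grad_w)], b - lr * grad_b


gw, gb = gradients([0.0, 0.0], 0.0, X, Y)
print(gw, gb)  # roughly [-4.5, -13.55] 0.0
w, b = sgd_step([0.0, 0.0], 0.0, X, Y, lr=0.005)
print(w, b)    # roughly [0.0225, 0.06775] 0.0
```

In the PyTorch loop, autograd computes the same gradients generically through `backward()`, and `optimizer.step()` performs the update.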

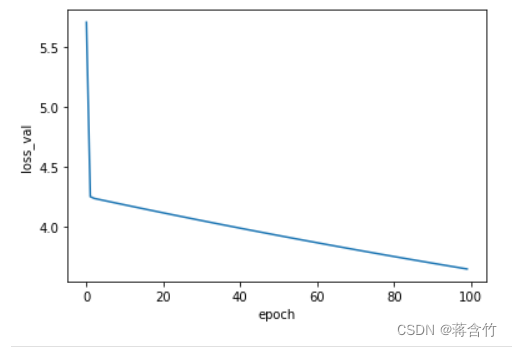

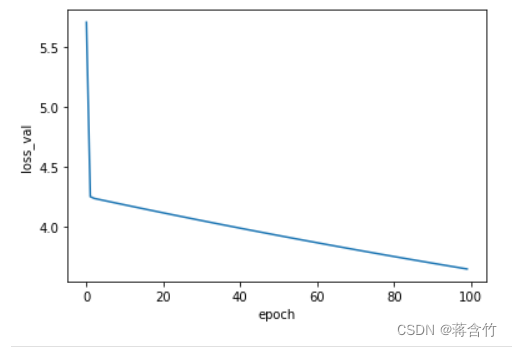

Plot the curve: epoch vs. loss

```python
epochs = range(epoch_num)  # x-axis: one point per training epoch
plt.plot(epochs, loss_list)
plt.xlabel("epoch")
plt.ylabel("loss_val")
plt.show()
```

Inspect the weight and bias

```python
print(f"w = {my_model.linear.weight}")
print(f"b = {my_model.linear.bias}")
```

```
w = Parameter containing:
tensor([[0.1506, 0.0253]], requires_grad=True)
b = Parameter containing:
tensor([-0.0544], requires_grad=True)
```
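The weights are easier to interpret through the decision boundary. The model outputs p = 0.5 exactly where w1·x1 + w2·x2 + b = 0, that is, x2 = -(b + w1·x1) / w2 (assuming w2 ≠ 0). The sketch below plugs in the rounded values printed above, so its results are approximate. The function name `boundary_x2` is illustrative.

```python
W = (0.1506, 0.0253)  # rounded weights printed above
B = -0.0544           # rounded bias printed above


def boundary_x2(x1: float) -> float:
    """x2 on the line w1*x1 + w2*x2 + b = 0, where the predicted probability is 0.5."""
    return -(B + W[0] * x1) / W[1]


for x1 in (0.0, 1.0, 2.0):
    print(f"x1 = {x1}: boundary at x2 = {boundary_x2(x1):.3f}")
```

Because w2 is positive, a point whose x2 lies above the boundary value for its x1 gets p > 0.5. With these parameters the line passes near (0, 2.15) and slopes steeply downward.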

Use the model to make predictions

```python
X_test = torch.Tensor([[4.0, 12.0]])
y_pred = my_model(X_test)
print(f"y_pred = {y_pred.data}")
```

```
y_pred = tensor([[0.7010]])
```
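As a sanity check, you can reproduce the printed prediction by hand from the rounded parameters. The computation is z = 0.1506·4.0 + 0.0253·12.0 - 0.0544 = 0.8516 and σ(0.8516) ≈ 0.7009, which matches the printed 0.7010 up to the rounding of the weights. Turning the probability into a class label needs a threshold. The original code stops at the probability, so the 0.5 threshold below is an assumption, although it is the usual choice.

```python
import math

w = (0.1506, 0.0253)  # rounded weights printed above
b = -0.0544           # rounded bias printed above
x_test = (4.0, 12.0)

z = w[0] * x_test[0] + w[1] * x_test[1] + b
p = 1.0 / (1.0 + math.exp(-z))
label = 1 if p >= 0.5 else 0  # assumed 0.5 decision threshold

print(f"z = {z:.4f}, p = {p:.4f}, label = {label}")  # z = 0.8516, p = 0.7009, label = 1
```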

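```python
import numpy as np

# 40 evenly spaced values in [0, 10], regrouped into 20 two-feature samples
x = np.linspace(0, 10, 40)
x_t = torch.Tensor(x).view((20, 2))

y_t = my_model(x_t)
y = y_t.data.numpy()  # predictions as a NumPy array (not used below)

for xe, ye in zip(x_t, y_t):
    print(xe.data, '\t', ye.data)
```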

```
tensor([0.0000, 0.2564])     tensor([0.4880])
tensor([0.5128, 0.7692])     tensor([0.5106])
tensor([1.0256, 1.2821])     tensor([0.5331])
tensor([1.5385, 1.7949])     tensor([0.5554])
tensor([2.0513, 2.3077])     tensor([0.5776])
tensor([2.5641, 2.8205])     tensor([0.5994])
tensor([3.0769, 3.3333])     tensor([0.6209])
tensor([3.5897, 3.8462])     tensor([0.6419])
tensor([4.1026, 4.3590])     tensor([0.6623])
tensor([4.6154, 4.8718])     tensor([0.6822])
tensor([5.1282, 5.3846])     tensor([0.7014])
tensor([5.6410, 5.8974])     tensor([0.7200])
tensor([6.1538, 6.4103])     tensor([0.7378])
tensor([6.6667, 6.9231])     tensor([0.7549])
tensor([7.1795, 7.4359])     tensor([0.7712])
tensor([7.6923, 7.9487])     tensor([0.7867])
tensor([8.2051, 8.4615])     tensor([0.8015])
tensor([8.7179, 8.9744])     tensor([0.8154])
tensor([9.2308, 9.4872])     tensor([0.8286])
tensor([ 9.7436, 10.0000])     tensor([0.8410])
```
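The grid output rises slowly from about 0.49 to 0.84, so almost every input is scored above 0.5. One way to quantify this is to score the six training samples. The sketch below does that with the rounded parameters printed earlier and the same assumed 0.5 threshold. With these parameters, all three class-0 samples also come out above 0.5, which suggests the model is still undertrained after 100 epochs at lr = 0.005.

```python
import math

X = [[1.0, 2.9], [2.0, 6.1], [3.0, 9.2], [4.0, 12.3], [5.0, 14.9], [6.0, 18.1]]
Y = [0, 0, 0, 1, 1, 1]
W = (0.1506, 0.0253)  # rounded weights printed above
B = -0.0544           # rounded bias printed above


def predict_proba(x):
    """Sigmoid of the linear score for one two-feature sample."""
    return 1.0 / (1.0 + math.exp(-(W[0] * x[0] + W[1] * x[1] + B)))


preds = [1 if predict_proba(x) >= 0.5 else 0 for x in X]  # assumed 0.5 threshold
accuracy = sum(p == y for p, y in zip(preds, Y)) / len(Y)
print(preds, accuracy)  # [1, 1, 1, 1, 1, 1] 0.5
```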

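This article shows how to define a custom LogisticRegressionModel in PyTorch. It covers building the model, choosing the loss function (BCELoss) and the optimizer (SGD), running the training loop, plotting the training loss against the epoch, and finally making predictions. The model is trained on the given dataset, and its weight and bias are updated during training.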
