Single-threaded vs. multi-threaded

- thread

import blog_spider
import threading
import time


def single_thread():
    # Crawl every URL one after another in the main thread.
    print('single thread begin')
    for url in blog_spider.urls:
        blog_spider.craw(url)
    print('single thread end')


def multi_thread():
    # Create one thread per URL, start them all, then wait for all of them.
    print('multi thread begin')
    threads = []
    for url in blog_spider.urls:
        threads.append(
            threading.Thread(target=blog_spider.craw, args=(url,))
        )
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    print('multi thread end')


if __name__ == '__main__':
    start = time.time()
    single_thread()
    end = time.time()
    print('single cost :', end - start)

    start = time.time()
    multi_thread()
    end = time.time()
    print('multi cost :', end - start)

Producer and consumer

import queue
import spider.blog_spider as blog_spider
import time
import random
import threading


def do_craw(url_queue: queue.Queue, html_queue: queue.Queue):
    # Producer: take a URL, download the page, hand the HTML to the parsers.
    while True:
        url = url_queue.get()
        html = blog_spider.craw(url)
        html_queue.put(html)
        print(threading.current_thread().name, f"craw {url}", "url_queue.size=", url_queue.qsize())
        time.sleep(random.randint(1, 2))


def do_parse(html_queue: queue.Queue, fout):
    # Consumer: take a page, parse it, write each result on its own line.
    while True:
        html = html_queue.get()
        results = blog_spider.parse(html)
        for result in results:
            fout.write(str(result) + '\n')
        print(threading.current_thread().name, "results.size", len(results), "html_queue.size=", html_queue.qsize())
        time.sleep(random.randint(1, 2))


if __name__ == '__main__':
    url_queue = queue.Queue()
    html_queue = queue.Queue()
    for url in blog_spider.urls:
        url_queue.put(url)

    # Three crawler threads feed two parser threads through html_queue.
    for idx in range(3):
        t = threading.Thread(target=do_craw, args=(url_queue, html_queue), name=f"craw{idx}")
        t.start()

    # The workers loop forever, so this script does not exit on its own.
    fout = open("data.txt", "w")
    for idx in range(2):
        t = threading.Thread(target=do_parse, args=(html_queue, fout), name=f"parse{idx}")
        t.start()
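The worker loops in the producer/consumer script run `while True`, so the threads never finish. One common way to let them stop is a sentinel value pushed onto the queue. The sketch below is not part of the original script: `STOP`, `producer`, `consumer` and `run` are hypothetical names, and doubling each item stands in for the real crawl and parse work.

```python
import queue
import threading

STOP = object()  # hypothetical sentinel: tells a consumer to exit its loop


def producer(items, out_q, n_consumers):
    # Push all work items, then one sentinel per consumer.
    for item in items:
        out_q.put(item)
    for _ in range(n_consumers):
        out_q.put(STOP)


def consumer(in_q, results):
    # Process items until the sentinel arrives.
    while True:
        item = in_q.get()
        if item is STOP:
            break
        results.append(item * 2)  # stand-in for the real work


def run(items, n_consumers=2):
    q = queue.Queue()
    results = []
    consumers = [
        threading.Thread(target=consumer, args=(q, results))
        for _ in range(n_consumers)
    ]
    for t in consumers:
        t.start()
    producer(items, q, n_consumers)
    for t in consumers:
        t.join()  # returns because every consumer receives a sentinel
    return sorted(results)


if __name__ == "__main__":
    print(run([1, 2, 3]))
```

Because each consumer takes exactly one sentinel before exiting, the number of sentinels has to match the number of consumers.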

# CPU-bound comparison: single thread vs. thread pool vs. process pool
import math
import time
from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor

PRIMES = [112272535095293] * 100


def is_prime(n):
    # Trial division by odd numbers up to sqrt(n); deliberately CPU-heavy.
    if n < 2:
        return False
    if n == 2:
        return True
    if n % 2 == 0:
        return False
    sqrt_n = int(math.floor(math.sqrt(n)))
    for i in range(3, sqrt_n + 1, 2):
        if n % i == 0:
            return False
    return True


def single_thread():
    for number in PRIMES:
        is_prime(number)


def multi_thread():
    with ThreadPoolExecutor() as pool:
        pool.map(is_prime, PRIMES)


def multi_process():
    with ProcessPoolExecutor() as pool:
        pool.map(is_prime, PRIMES)


if __name__ == '__main__':
    start = time.time()
    single_thread()
    end = time.time()
    print("single_thread, cost:", end - start, "seconds")

    start = time.time()
    multi_thread()
    end = time.time()
    print("multi_thread, cost:", end - start, "seconds")

    start = time.time()
    multi_process()
    end = time.time()
    print("multi_process, cost:", end - start, "seconds")

# single_thread, cost: 40.538614988327026 seconds
# multi_thread, cost: 40.512372970581055 seconds
# multi_process, cost: 11.588581800460815 seconds

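Thread pool (process pool)

- Pooling avoids the performance cost of creating and destroying a thread or process for every task
- Two ways to run tasks on a pool
  - pool.map
    - results come back in input order
    - the inputs must all be prepared in advance
  - pool.submit
    - future.result() returns the result
    - iterating over the futures in submission order also yields results in order
    - concurrent.futures.as_completed yields whichever future finishes first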

import concurrent.futures
import spider.blog_spider as blog_spider

# Thread pool
# pool.map is ordered; it suits a fixed set of inputs prepared in advance (blog_spider.urls)
# future = pool.submit() is also ordered when read in submission order; future.result() returns the result
# concurrent.futures.as_completed is unordered: whichever finishes first is returned first

# craw
with concurrent.futures.ThreadPoolExecutor() as pool:
    htmls = pool.map(blog_spider.craw, blog_spider.urls)
    htmls = list(zip(blog_spider.urls, htmls))
    for url, html in htmls:
        print(url, len(html))

print('craw over')

# parse
with concurrent.futures.ThreadPoolExecutor() as pool:
    futures = {}
    for url, html in htmls:
        future = pool.submit(blog_spider.parse, html)
        futures[future] = url

    # Iteration option 1: submission order
    # for future, url in futures.items():
    #     print(url, future.result())

    # Iteration option 2: as_completed, completion order
    for future in concurrent.futures.as_completed(futures):
        url = futures[future]
        print(url, future.result())
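The ordering behaviour described in this section can be checked without network access. The following is a minimal sketch, assuming a made-up task `slow_square` (not from the original notes) whose larger inputs finish sooner, so that completion order tends to differ from submission order.

```python
import concurrent.futures
import time


def slow_square(n):
    # Hypothetical task: larger inputs sleep less and therefore finish sooner.
    time.sleep((4 - n) * 0.05)
    return n * n


def run_demo(inputs=(1, 2, 3)):
    with concurrent.futures.ThreadPoolExecutor(max_workers=3) as pool:
        # pool.map: results always follow the order of the inputs.
        mapped = list(pool.map(slow_square, inputs))

        # pool.submit: calling result() in submission order is also ordered.
        futures = [pool.submit(slow_square, n) for n in inputs]
        submitted = [f.result() for f in futures]

        # as_completed: futures are yielded as they finish.
        futures = [pool.submit(slow_square, n) for n in inputs]
        completed = [
            f.result() for f in concurrent.futures.as_completed(futures)
        ]
    return mapped, submitted, completed


if __name__ == "__main__":
    mapped, submitted, completed = run_demo()
    print("map:         ", mapped)
    print("submit:      ", submitted)
    print("as_completed:", completed)
```

The first two lists always match the input order; the third contains the same values but its order depends on which task happens to finish first.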

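Coroutines with asyncio

- loop = asyncio.get_event_loop() obtains the event loop
- async marks a function as a coroutine
- await marks an I/O operation: instead of blocking, the coroutine yields control so the event loop can move on to the next task

What follows await must be a coroutine object, a Future object, or a Task object!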
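To make the three kinds of awaitable concrete, here is a small sketch that awaits one of each. `fetch_number` is a hypothetical coroutine standing in for real I/O, and the example uses `asyncio.run` (Python 3.7+) as the entry point rather than a manually managed loop.

```python
import asyncio


async def fetch_number(n):
    # Hypothetical coroutine standing in for a real I/O call.
    await asyncio.sleep(0.01)
    return n


async def main():
    loop = asyncio.get_running_loop()

    # 1. A coroutine object: created by calling an async function.
    from_coroutine = await fetch_number(1)

    # 2. A Task: wraps a coroutine and schedules it on the event loop.
    task = asyncio.create_task(fetch_number(2))
    from_task = await task

    # 3. A Future: a low-level placeholder that some other code completes.
    future = loop.create_future()
    loop.call_later(0.01, future.set_result, 3)
    from_future = await future

    return from_coroutine, from_task, from_future


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Awaiting anything else, for example a plain integer, raises a `TypeError`.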

import asyncio
import aiohttp
import time
from spider import blog_spider


async def async_craw(url):
    print("craw url: ", url)
    async with aiohttp.ClientSession() as session:
        async with session.get(url) as resp:
            # At await, control returns to the event loop so other coroutines can run; nothing blocks
            result = await resp.text()
            print(f"craw url: {url}, {len(result)}")


# On recent Python versions, asyncio.run() is the preferred entry point
loop = asyncio.get_event_loop()

# Build the list of tasks
tasks = [
    loop.create_task(async_craw(url))
    for url in blog_spider.urls]

start = time.time()
# Run all crawl tasks and wait for them to finish
loop.run_until_complete(asyncio.wait(tasks))
end = time.time()
print("use time seconds: ", end - start)

Limiting coroutine concurrency with a semaphore

import asyncio
import aiohttp
import time
from spider import blog_spider

# At most 10 coroutines may be inside the guarded block at the same time
semaphore = asyncio.Semaphore(10)


async def async_craw(url):
    async with semaphore:
        print("craw url: ", url)
        async with aiohttp.ClientSession() as session:
            async with session.get(url) as resp:
                result = await resp.text()
                await asyncio.sleep(5)
                print(f"craw url: {url}, {len(result)}")


loop = asyncio.get_event_loop()

tasks = [
    loop.create_task(async_craw(url))
    for url in blog_spider.urls]

start = time.time()
loop.run_until_complete(asyncio.wait(tasks))
end = time.time()
print("use time seconds: ", end - start)

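Thread safety

- Some operations in Python are already protected by an internal lock, so they are thread-safe when used from multiple threads
- See the official documentation for which operations are thread-safe
  - L.append(x)  append a value to a list
  - L.extend(L2)
  - x = L.pop()
  - L[x:y] = L2
  - D[x] = y
  - D.update(D2)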
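As an illustration of the list above, the sketch below has several threads call `list.append` with no lock, while a read-modify-write update (`+= 1`), which is not on the list, is guarded by a `Lock`. The function name `run` and the sizes are made up for the example, and the claim about `append` is specific to CPython.

```python
import threading


def run(n_threads=8, n_items=1000):
    shared_list = []
    state = {"counter": 0}
    lock = threading.Lock()

    def worker():
        for i in range(n_items):
            # A single list.append call is atomic in CPython: no lock needed.
            shared_list.append(i)
            # `+= 1` reads, adds, then writes back, so it is guarded by a lock.
            with lock:
                state["counter"] += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return len(shared_list), state["counter"]


if __name__ == "__main__":
    print(run())
```

Both numbers equal `n_threads * n_items`. Without the lock the counter could, in principle, end up lower, because two threads may read the same old value before either writes back.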

import threading

lock = threading.Lock()


class Account:
    def __init__(self, balance):
        self.balance = balance


def drawWithLock(account, amount):
    """
    Withdraw with the lock managed automatically by a context manager.
    :param account: account to withdraw from
    :param amount: amount to withdraw
    :return: None
    """
    with lock:
        if account.balance >= amount:
            print(threading.current_thread().name, 'withdrawal succeeded')
            account.balance -= amount
            print(threading.current_thread().name, 'balance', account.balance)
        else:
            print(threading.current_thread().name, 'withdrawal failed, insufficient balance')


def drawWithLockManual(account, amount):
    """
    Withdraw with the lock acquired and released by hand.
    :param account: account to withdraw from
    :param amount: amount to withdraw
    :return: None
    """
    lock.acquire()  # acquire the lock
    try:
        if account.balance >= amount:
            print(threading.current_thread().name, 'withdrawal succeeded')
            account.balance -= amount
            print(threading.current_thread().name, 'balance', account.balance)
        else:
            print(threading.current_thread().name, 'withdrawal failed, insufficient balance')
    finally:
        lock.release()  # always release, even if an exception is raised


def draw(account, amount):
    # No lock: two threads can both pass the balance check before either subtracts.
    if account.balance >= amount:
        print(threading.current_thread().name, 'withdrawal succeeded')
        account.balance -= amount
        print(threading.current_thread().name, 'balance', account.balance)
    else:
        print(threading.current_thread().name, 'withdrawal failed, insufficient balance')


if __name__ == '__main__':
    account = Account(1000)
    # ta = threading.Thread(target=draw, args=(account, 800))
    # tb = threading.Thread(target=draw, args=(account, 800))
    ta = threading.Thread(target=drawWithLockManual, args=(account, 800))
    tb = threading.Thread(target=drawWithLockManual, args=(account, 800))
    ta.start()
    tb.start()

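Thread locks

Lock (mutual-exclusion lock) and RLock (re-entrant lock)

- RLock supports nested acquisition by the same thread; in real projects it tends to be used more often than Lock
- Lock is acquired once and released once, and is cheaper than a re-entrant lock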
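The difference between the two lock types shows up when a thread tries to take a lock it already holds. The helper below, `can_reacquire` (a hypothetical name), does this with a non-blocking second `acquire` so that the plain `Lock` case reports failure instead of deadlocking.

```python
import threading


def can_reacquire(lock):
    # Take the lock, then try to take it again from the same thread
    # without blocking. Returns True only if the second attempt succeeds.
    lock.acquire()
    try:
        got_it_again = lock.acquire(blocking=False)
        if got_it_again:
            lock.release()  # undo the nested acquisition
        return got_it_again
    finally:
        lock.release()  # undo the first acquisition


if __name__ == "__main__":
    print("Lock :", can_reacquire(threading.Lock()))
    print("RLock:", can_reacquire(threading.RLock()))
```

With a blocking `acquire`, the plain `Lock` case would hang forever, which is why nested locking requires `RLock`.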

import threading

Rlock = threading.RLock()


class Account:
    def __init__(self, balance):
        self.balance = balance


def drawWithLockManual(account, amount):
    """
    Withdraw with the lock acquired and released by hand.
    :param account: account to withdraw from
    :param amount: amount to withdraw
    :return: None
    """
    Rlock.acquire()  # acquire the lock
    if account.balance >= amount:
        print(threading.current_thread().name, 'withdrawal succeeded')
        Rlock.acquire()  # nested acquire by the same thread: allowed with RLock
        account.balance -= amount
        Rlock.release()
        print(threading.current_thread().name, 'balance', account.balance)
    else:
        print(threading.current_thread().name, 'withdrawal failed, insufficient balance')
    Rlock.release()


if __name__ == '__main__':
    account = Account(1000)
    ta = threading.Thread(target=drawWithLockManual, args=(account, 800))
    tb = threading.Thread(target=drawWithLockManual, args=(account, 800))
    ta.start()
    tb.start()

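Thread pool

- Create a thread pool: pool = ThreadPoolExecutor(10)
- Add a task to the pool: future = pool.submit(task, url)
- The main thread can wait for the pool to finish with pool.shutdown(True); without it, the main thread continues immediately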

import time
import random
from concurrent.futures import ThreadPoolExecutor


# Thread pool
def task(video_url):
    print('start', video_url)
    time.sleep(2)
    return random.randint(0, 10)


def done(response):
    # Called when a task finishes; response is the completed future.
    print('done', response.result())


pool = ThreadPoolExecutor(10)
url_list = ["www-{}.com".format(i) for i in range(15)]
for url in url_list:
    future = pool.submit(task, url)
    future.add_done_callback(done)

# Make the main thread wait until the pool has finished
# pool.shutdown(True)
print('main thread finished')

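Multiprocessing

1. Three start methods for child processes: fork, spawn, forkserver

- fork
  - copies almost all of the parent's resources
  - [file objects and thread locks can be passed as arguments]
  - resources can be used through the implicit copy or passed explicitly as arguments
  - available only on [Unix]; [Windows] does not have it
- spawn
  - passes only the essential resources; internally it can be thought of as starting a fresh Python interpreter to run the code
  - [does not copy the main process's resources, and file objects / thread locks cannot be passed as arguments]; a process lock can be passed as an argument and is then shared
  - available on both [Unix and Windows]
  - the default on Windows
  - also the default on macOS since Python 3.8
  - in this mode the main process must wait for the child to finish before exiting, otherwise an error is raised; call p.join() on the child
- forkserver
  - similar to spawn
  - available only on some Unix platforms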
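Which start methods exist on the current machine can be queried at runtime. The sketch below uses two hypothetical helper names, `available_start_methods` and `make_context`; the `multiprocessing` calls they wrap are standard. A context object selects a start method for the processes created through it without changing the global default.

```python
import multiprocessing


def available_start_methods():
    # Start methods supported on this platform; the first entry is the default.
    return multiprocessing.get_all_start_methods()


def make_context(method="spawn"):
    # A context is bound to one start method and leaves the global default
    # untouched; create processes with ctx.Process(...).
    return multiprocessing.get_context(method)


if __name__ == "__main__":
    print("supported:", available_start_methods())
    ctx = make_context("spawn")
    print("context uses:", ctx.get_start_method())
```

`multiprocessing.set_start_method(...)` changes the default for the whole program instead and is meant to be called at most once, inside the `if __name__ == '__main__':` block.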

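import concurrent.futures


# The task each worker process runs
def worker(index):
    print(index)
    # name is defined only in the parent's __main__ block: a forked child
    # inherits a copy, while a spawned child does not have it (NameError).
    print(name)


if __name__ == '__main__':
    print('start')
    name = []
    # Run the tasks in parallel with ProcessPoolExecutor
    with concurrent.futures.ProcessPoolExecutor(max_workers=4) as executor:
        futures = [executor.submit(worker, i) for i in range(10)]
        name.append(222)

    # Print the resource as the main process sees it
    print('main process', id(name), name)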

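- Under fork, a lock is copied together with its state, and in the child it belongs to the child's main thread

"""
Under the fork start method, locks are copied into the child process as well.
The copy is owned by the main thread of the child process.
"""

import multiprocessing
import threading
import time


def func():
    print('arrived')
    with lock:
        print(666)
        time.sleep(1)


def task():
    # The lock is copied in its already-acquired state,
    # held by the main thread of the child process.
    for i in range(10):
        # These threads start inside the child. The child's main thread already
        # holds the copied lock, so until it is released they all block on it.
        t = threading.Thread(target=func)
        t.start()
    # lock.release()


if __name__ == '__main__':
    multiprocessing.set_start_method('fork')
    name = []
    lock = threading.RLock()
    lock.acquire()
    p1 = multiprocessing.Process(target=task)
    p1.start()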

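2. Commonly used process methods

- p.start()  start the child process
- p.join()  block the main process until the child finishes, then run the code that follows
- p.daemon = True  make the child a daemon process: when the main process finishes, the child is shut down automatically
- p.daemon = False  the child is not a daemon: the main process ends only after the child has finished
- p.name = xxxx  set the process name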
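The methods and attributes listed above fit together roughly as in the following sketch. `work` and `build_process` are hypothetical names; the point is that `name` and `daemon` are configured before `start()`, and `join()` is what makes the parent wait.

```python
import multiprocessing
import time


def work(seconds):
    # Hypothetical workload for the child process.
    time.sleep(seconds)


def build_process(name, daemon):
    # Configure the process before starting it.
    p = multiprocessing.Process(target=work, args=(0.1,), name=name)
    p.daemon = daemon  # must be set before start()
    return p


if __name__ == "__main__":
    p = build_process("worker-1", daemon=False)
    p.start()   # launch the child
    p.join()    # block until the child has finished
    print(p.name, p.daemon, p.exitcode)
```

Setting `daemon` after `start()` raises an error, so the configuration step comes first.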

3. Sharing data between processes

- Manager: create a dict or list, pass it into the child process, and it is shared
  - d = manager.dict()

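import multiprocessing
import time


def task(d):
    # Runs in the child; the write is visible to the parent through the manager.
    d[2] = 2


if __name__ == '__main__':
    with multiprocessing.Manager() as manager:
        d = manager.dict()
        d[1] = 0
        p = multiprocessing.Process(target=task, args=(d,))
        p.start()
        p.join()  # wait for the child before exiting, otherwise spawn mode raises an error
        print(d)
        # time.sleep(5)  alternatively, let the main process wait a while, otherwise an error is raised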

- Queue

- Pipes

import multiprocessing
import time


def task(conn):
    time.sleep(1)
    conn.send([111, 222])
    data = conn.recv()  # blocks until the parent sends something
    print('child process', data)
    time.sleep(1)


if __name__ == '__main__':
    # Pipe() returns two connected ends; by default both can send and receive
    parent_conn, child_conn = multiprocessing.Pipe()
    p = multiprocessing.Process(target=task, args=(child_conn,))
    p.start()
    info = parent_conn.recv()
    print('main process', info)
    parent_conn.send(666)
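Queue is listed above as a sharing mechanism without an example, so here is a minimal sketch using `multiprocessing.Queue`. The names `producer` and `drain` and the `None` end marker are assumptions made for the illustration.

```python
import multiprocessing


def producer(q, items):
    # Runs in the child: push every item, then None to signal the end.
    for item in items:
        q.put(item)
    q.put(None)


def drain(q):
    # Read from the queue until the end marker arrives.
    received = []
    while True:
        item = q.get()  # blocks until something is available
        if item is None:
            break
        received.append(item)
    return received


if __name__ == "__main__":
    q = multiprocessing.Queue()
    p = multiprocessing.Process(target=producer, args=(q, [111, 222, 333]))
    p.start()
    print("main process received", drain(q))
    p.join()
```

The parent drains the queue before calling `join()`, since a child that still has buffered items on a `multiprocessing.Queue` may not exit until they are consumed.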

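4. Process pool

- For a lock shared with process-pool workers, multiprocessing.Lock() cannot be used; use multiprocessing.Manager().RLock() instead
- To make the main process wait for the workers to finish, call pool.shutdown(True)
- Each task in a process pool can have a completion callback: future.add_done_callback(…)
  - The callback is invoked by the main process, not by the worker process. Note the difference from the thread pool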
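For the thread-pool side of that comparison, the sketch below records which thread runs a done-callback. It assumes current CPython behaviour, where a callback registered before the task completes is invoked by the worker thread that finishes it; the `gate` event only keeps the task from finishing before the callback is attached. `callback_thread_name` and the `pool` prefix are made-up names.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def callback_thread_name():
    gate = threading.Event()      # holds the task until the callback is attached
    finished = threading.Event()  # set once the callback has run
    seen = {}

    def task():
        gate.wait()
        return threading.current_thread().name

    def done(future):
        # Record which thread runs the callback and which ran the task.
        seen["callback"] = threading.current_thread().name
        seen["task"] = future.result()
        finished.set()

    with ThreadPoolExecutor(max_workers=1, thread_name_prefix="pool") as pool:
        future = pool.submit(task)
        future.add_done_callback(done)
        gate.set()
        finished.wait()
    return seen


if __name__ == "__main__":
    print(callback_thread_name())
```

In a process pool the same experiment, comparing `os.getpid()` inside the task and inside the callback, would show the callback running in the parent process.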

题外话

感兴趣的小伙伴,赠送全套Python学习资料,包含面试题、简历资料等具体看下方。

👉CSDN大礼包🎁:全网最全《Python学习资料》免费赠送🆓!(安全链接,放心点击)

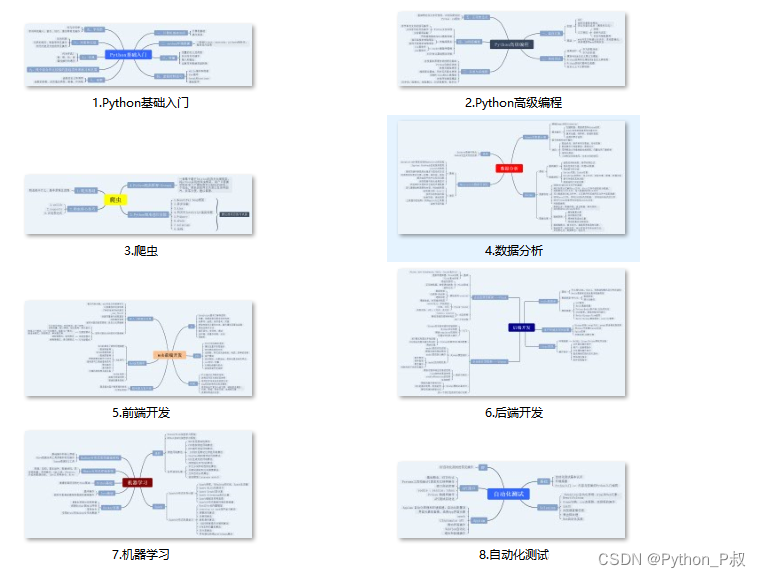

一、Python所有方向的学习路线

Python所有方向的技术点做的整理,形成各个领域的知识点汇总,它的用处就在于,你可以按照下面的知识点去找对应的学习资源,保证自己学得较为全面。

二、Python兼职渠道推荐*

学的同时助你创收,每天花1-2小时兼职,轻松稿定生活费.

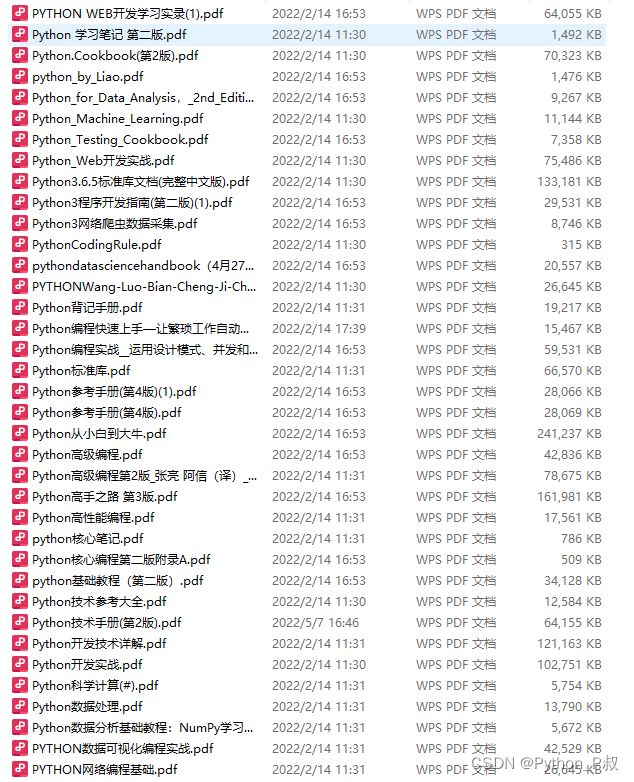

三、最新Python学习笔记

当我学到一定基础,有自己的理解能力的时候,会去阅读一些前辈整理的书籍或者手写的笔记资料,这些笔记详细记载了他们对一些技术点的理解,这些理解是比较独到,可以学到不一样的思路。

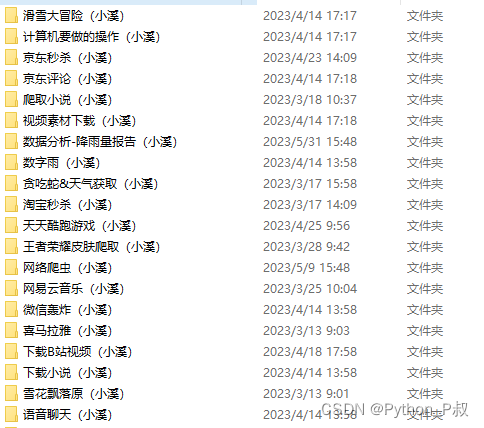

四、实战案例

纸上得来终觉浅,要学会跟着视频一起敲,要动手实操,才能将自己的所学运用到实际当中去,这时候可以搞点实战案例来学习。

👉CSDN大礼包🎁:全网最全《Python学习资料》免费赠送🆓!(安全链接,放心点击)

若有侵权,请联系删除

72

72

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?