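Below is the YAML configuration of the YOLOv7 network structure. Each layer's output shape is added as a comment in the form [N, C, H, W] (N = batch size), for a 640x640 input.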

# parameters
nc: 80  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple

# anchors
anchors:
  - [12,16, 19,36, 40,28]  # P3/8
  - [36,75, 76,55, 72,146]  # P4/16
  - [142,110, 192,243, 459,401]  # P5/32

# yolov7 backbone
backbone:
  # [from, number, module, args]  input: [N,3,640,640]
  [[-1, 1, Conv, [32, 3, 1]],  # 0 [N,32,640,640]
   [-1, 1, Conv, [64, 3, 2]],  # 1-P1/2 [N,64,320,320]
   [-1, 1, Conv, [64, 3, 1]],  # [N,64,320,320]
   [-1, 1, Conv, [128, 3, 2]],  # 3-P2/4 [N,128,160,160]
   [-1, 1, Conv, [64, 1, 1]],  # -6 [N,64,160,160]
   [-2, 1, Conv, [64, 1, 1]],  # -5 [N,64,160,160]
   [-1, 1, Conv, [64, 3, 1]],  # [N,64,160,160]
   [-1, 1, Conv, [64, 3, 1]],  # -3 [N,64,160,160]
   [-1, 1, Conv, [64, 3, 1]],  # [N,64,160,160]
   [-1, 1, Conv, [64, 3, 1]],  # -1 [N,64,160,160]
   [[-1, -3, -5, -6], 1, Concat, [1]],  # [N,256,160,160]
   [-1, 1, Conv, [256, 1, 1]],  # 11 [N,256,160,160]
   [-1, 1, MP, []],  # [N,256,80,80]
   [-1, 1, Conv, [128, 1, 1]],  # [N,128,80,80]
   [-3, 1, Conv, [128, 1, 1]],  # [N,128,160,160]
   [-1, 1, Conv, [128, 3, 2]],  # [N,128,80,80]
   [[-1, -3], 1, Concat, [1]],  # 16-P3/8 [N,256,80,80]
   [-1, 1, Conv, [128, 1, 1]],  # [N,128,80,80]
   [-2, 1, Conv, [128, 1, 1]],  # [N,128,80,80]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,80,80]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,80,80]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,80,80]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,80,80]
   [[-1, -3, -5, -6], 1, Concat, [1]],  # [N,512,80,80]
   [-1, 1, Conv, [512, 1, 1]],  # 24 [N,512,80,80]
   [-1, 1, MP, []],  # [N,512,40,40]
   [-1, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
   [-3, 1, Conv, [256, 1, 1]],  # [N,256,80,80]
   [-1, 1, Conv, [256, 3, 2]],  # [N,256,40,40]
   [[-1, -3], 1, Concat, [1]],  # 29-P4/16 [N,512,40,40]
   [-1, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
   [-2, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,40,40]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,40,40]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,40,40]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,40,40]
   [[-1, -3, -5, -6], 1, Concat, [1]],  # [N,1024,40,40]
   [-1, 1, Conv, [1024, 1, 1]],  # 37 [N,1024,40,40]
   [-1, 1, MP, []],  # [N,1024,20,20]
   [-1, 1, Conv, [512, 1, 1]],  # [N,512,20,20]
   [-3, 1, Conv, [512, 1, 1]],  # [N,512,40,40]
   [-1, 1, Conv, [512, 3, 2]],  # [N,512,20,20]
   [[-1, -3], 1, Concat, [1]],  # 42-P5/32 [N,1024,20,20]
   [-1, 1, Conv, [256, 1, 1]],  # [N,256,20,20]
   [-2, 1, Conv, [256, 1, 1]],  # [N,256,20,20]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,20,20]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,20,20]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,20,20]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,20,20]
   [[-1, -3, -5, -6], 1, Concat, [1]],  # [N,1024,20,20]
   [-1, 1, Conv, [1024, 1, 1]],  # 50 [N,1024,20,20]
  ]

# yolov7 head
head:
  [[-1, 1, SPPCSPC, [512]],  # 51 [N,512,20,20]
   [-1, 1, Conv, [256, 1, 1]],  # [N,256,20,20]
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],  # [N,256,40,40]
   [37, 1, Conv, [256, 1, 1]],  # route backbone P4 [N,256,40,40]
   [[-1, -2], 1, Concat, [1]],  # [N,512,40,40]
   [-1, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
   [-2, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
   [[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],  # [N,1024,40,40]
   [-1, 1, Conv, [256, 1, 1]],  # 63 [N,256,40,40]
   [-1, 1, Conv, [128, 1, 1]],  # [N,128,40,40]
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],  # [N,128,80,80]
   [24, 1, Conv, [128, 1, 1]],  # route backbone P3 [N,128,80,80]
   [[-1, -2], 1, Concat, [1]],  # [N,256,80,80]
   [-1, 1, Conv, [128, 1, 1]],  # [N,128,80,80]
   [-2, 1, Conv, [128, 1, 1]],  # [N,128,80,80]
   [-1, 1, Conv, [64, 3, 1]],  # [N,64,80,80]
   [-1, 1, Conv, [64, 3, 1]],  # [N,64,80,80]
   [-1, 1, Conv, [64, 3, 1]],  # [N,64,80,80]
   [-1, 1, Conv, [64, 3, 1]],  # [N,64,80,80]
   [[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],  # [N,512,80,80]
   [-1, 1, Conv, [128, 1, 1]],  # 75 [N,128,80,80]
   [-1, 1, MP, []],  # [N,128,40,40]
   [-1, 1, Conv, [128, 1, 1]],  # [N,128,40,40]
   [-3, 1, Conv, [128, 1, 1]],  # [N,128,80,80]
   [-1, 1, Conv, [128, 3, 2]],  # [N,128,40,40]
   [[-1, -3, 63], 1, Concat, [1]],  # [N,512,40,40]
   [-1, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
   [-2, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
   [-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
   [[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],  # [N,1024,40,40]
   [-1, 1, Conv, [256, 1, 1]],  # 88 [N,256,40,40]
   [-1, 1, MP, []],  # [N,256,20,20]
   [-1, 1, Conv, [256, 1, 1]],  # [N,256,20,20]
   [-3, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
   [-1, 1, Conv, [256, 3, 2]],  # [N,256,20,20]
   [[-1, -3, 51], 1, Concat, [1]],  # [N,1024,20,20]
   [-1, 1, Conv, [512, 1, 1]],  # [N,512,20,20]
   [-2, 1, Conv, [512, 1, 1]],  # [N,512,20,20]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,20,20]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,20,20]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,20,20]
   [-1, 1, Conv, [256, 3, 1]],  # [N,256,20,20]
   [[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],  # [N,2048,20,20]
   [-1, 1, Conv, [512, 1, 1]],  # 101 [N,512,20,20]
   [75, 1, RepConv, [256, 3, 1]],  # [N,256,80,80]
   [88, 1, RepConv, [512, 3, 1]],  # [N,512,40,40]
   [101, 1, RepConv, [1024, 3, 1]],  # [N,1024,20,20]
   [[102,103,104], 1, IDetect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]

Network structure diagram
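The shape comments in the config above follow mechanically from the `from` column: a negative value counts back from the current row (-1 is the previous layer), a non-negative value is an absolute layer index (as in `[37, 1, Conv, ...]`), and Concat adds up the channels of its inputs. The sketch below is a minimal, hypothetical helper (`trace` and `LAYERS_0_TO_16` are not part of the YOLOv7 code) that re-derives the comments for backbone rows 0-16. It assumes Conv pads by k // 2, that MP halves H and W, and it ignores the `number` column.

```python
from typing import List, Sequence, Tuple

Shape = Tuple[int, int, int]  # (C, H, W); the batch dimension N is omitted


def conv_out(size: int, k: int, s: int) -> int:
    # Output size of a conv with padding k // 2: floor((size + 2p - k) / s) + 1
    return (size + 2 * (k // 2) - k) // s + 1


def trace(layers: Sequence[list], input_shape: Shape) -> List[Shape]:
    """Propagate (C, H, W) through [from, number, module, args] rows.

    Hypothetical sketch: only Conv, MP and Concat are handled; `number` is ignored.
    """
    shapes: List[Shape] = []
    for i, (frm, _number, module, args) in enumerate(layers):

        def src(j: int) -> Shape:
            # Negative: relative to the current row (-1 = previous layer); else absolute index.
            idx = i + j if j < 0 else j
            return input_shape if idx < 0 else shapes[idx]

        if module == "Conv":
            c_out, k, s = args
            _, h, w = src(frm)
            shapes.append((c_out, conv_out(h, k, s), conv_out(w, k, s)))
        elif module == "MP":  # MaxPool2d(kernel_size=2, stride=2)
            c, h, w = src(frm)
            shapes.append((c, h // 2, w // 2))
        elif module == "Concat":  # concatenation along the channel dimension
            parts = [src(j) for j in frm]
            assert len({p[1:] for p in parts}) == 1, "Concat inputs must share H and W"
            shapes.append((sum(p[0] for p in parts),) + parts[0][1:])
        else:
            raise ValueError(f"unsupported module in this sketch: {module}")
    return shapes


# Rows 0-16 of the backbone, copied from the config (module names as strings).
LAYERS_0_TO_16 = [
    [-1, 1, "Conv", [32, 3, 1]],           # 0
    [-1, 1, "Conv", [64, 3, 2]],           # 1-P1/2
    [-1, 1, "Conv", [64, 3, 1]],           # 2
    [-1, 1, "Conv", [128, 3, 2]],          # 3-P2/4
    [-1, 1, "Conv", [64, 1, 1]],           # 4
    [-2, 1, "Conv", [64, 1, 1]],           # 5
    [-1, 1, "Conv", [64, 3, 1]],           # 6
    [-1, 1, "Conv", [64, 3, 1]],           # 7
    [-1, 1, "Conv", [64, 3, 1]],           # 8
    [-1, 1, "Conv", [64, 3, 1]],           # 9
    [[-1, -3, -5, -6], 1, "Concat", [1]],  # 10
    [-1, 1, "Conv", [256, 1, 1]],          # 11
    [-1, 1, "MP", []],                     # 12
    [-1, 1, "Conv", [128, 1, 1]],          # 13
    [-3, 1, "Conv", [128, 1, 1]],          # 14
    [-1, 1, "Conv", [128, 3, 2]],          # 15
    [[-1, -3], 1, "Concat", [1]],          # 16-P3/8
]

if __name__ == "__main__":
    for idx, shape in enumerate(trace(LAYERS_0_TO_16, (3, 640, 640))):
        print(idx, shape)
```

Adding rules for Upsample (double H and W) and SPPCSPC would let the same loop cover the head.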

Network structure, explained block by block

1. Basic module

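The basic blocks are mainly 1x1 and 3x3 convolutions. The 3x3 convolutions differ mainly in their stride: stride 1 keeps the feature-map size, stride 2 halves it. Every convolution is followed by batch normalization and a SiLU activation.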

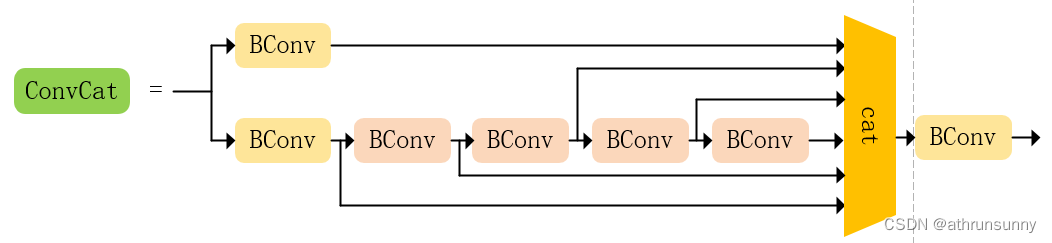
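Why stride alone decides whether a 3x3 Conv keeps or halves the feature map can be seen from the usual output-size formula, using the k // 2 padding that YOLOv7's `autopad` chooses when no padding is given. The helpers below (`conv_out`, `silu`) are hypothetical illustrations, not code from the repository.

```python
import math


def autopad(k: int) -> int:
    # 'same'-style padding for odd kernels: 1x1 -> 0, 3x3 -> 1
    return k // 2


def conv_out(size: int, k: int, s: int) -> int:
    # Standard conv output size: floor((size + 2p - k) / s) + 1
    return (size + 2 * autopad(k) - k) // s + 1


def silu(x: float) -> float:
    # SiLU(x) = x * sigmoid(x) = x / (1 + exp(-x))
    return x / (1.0 + math.exp(-x))


if __name__ == "__main__":
    print(conv_out(640, 3, 1), conv_out(640, 3, 2), conv_out(160, 1, 1))
```

With padding k // 2, a stride-1 conv keeps the size for any odd k, so only the stride-2 3x3 convs (layers 1 and 3, and the downsampling branch of the MP blocks) change H and W.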

2. Multi-branch convolution module

[-1, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
[-2, 1, Conv, [256, 1, 1]],  # [N,256,40,40]
[-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
[-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
[-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
[-1, 1, Conv, [128, 3, 1]],  # [N,128,40,40]
[[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],  # [N,1024,40,40]
[-1, 1, Conv, [256, 1, 1]],  # 63 [N,256,40,40]

or

[-1, 1, Conv, [64, 1, 1]],  # -6 [N,64,160,160]
[-2, 1, Conv, [64, 1, 1]],  # -5 [N,64,160,160]
[-1, 1, Conv, [64, 3, 1]],  # [N,64,160,160]
[-1, 1, Conv, [64, 3, 1]],  # -3 [N,64,160,160]
[-1, 1, Conv, [64, 3, 1]],  # [N,64,160,160]
[-1, 1, Conv, [64, 3, 1]],  # -1 [N,64,160,160]
[[-1, -3, -5, -6], 1, Concat, [1]],  # [N,256,160,160]
[-1, 1, Conv, [256, 1, 1]],  # 11 [N,256,160,160]

Corresponding module structure:

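The module consists mainly of 1x1 and 3x3 convolutions. Two 1x1 convolutions take the same input; after that, each 3x3 convolution feeds the next one, and several of these outputs are concatenated along the channel dimension (all six in the head variant, four of them, selected by `[-1, -3, -5, -6]`, in the backbone variant). This structure improves the network's accuracy, but the extra branches and the wide concatenation also add some inference time.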

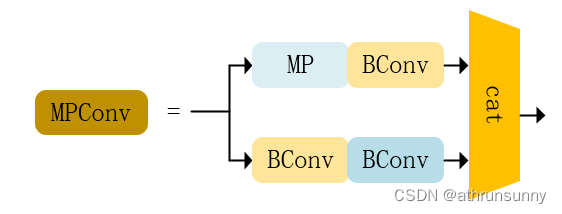
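The concatenated channel counts in both variants can be checked with a few lines of arithmetic. In this hypothetical helper (`elan_concat_channels` is not a YOLOv7 function), the branch outputs are listed in YAML order, so the Concat's relative indices map directly onto Python's negative list indexing.

```python
from typing import Sequence


def elan_concat_channels(c_1x1: int, c_3x3: int, picks: Sequence[int], n_3x3: int = 4) -> int:
    """Channels after the Concat of a multi-branch block.

    Branch outputs in YAML order: two parallel 1x1 convs, then a chain of n_3x3 3x3 convs.
    `picks` are the Concat's relative indices (-1 = the row just before the Concat).
    """
    outputs = [c_1x1, c_1x1] + [c_3x3] * n_3x3
    return sum(outputs[j] for j in picks)


BACKBONE_PICKS = [-1, -3, -5, -6]      # both 1x1 outputs plus the 2nd and 4th 3x3 outputs
HEAD_PICKS = [-1, -2, -3, -4, -5, -6]  # all six outputs

if __name__ == "__main__":
    print(elan_concat_channels(64, 64, BACKBONE_PICKS))  # backbone layer 10
    print(elan_concat_channels(256, 128, HEAD_PICKS))    # head layer 62
```

The same helper reproduces the other Concat comments in the config, for example 512 for head layer 74 (128 and 64 channels) and 2048 for head layer 100 (512 and 256 channels).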

3. MP module

[-1, 1, MP, []],  # [N,256,80,80]
[-1, 1, Conv, [128, 1, 1]],  # [N,128,80,80]
[-3, 1, Conv, [128, 1, 1]],  # [N,128,160,160]
[-1, 1, Conv, [128, 3, 2]],  # [N,128,80,80]
[[-1, -3], 1, Concat, [1]],  # 16-P3/8 [N,256,80,80]

The MP code is as follows:

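import torch.nn as nn


class MP(nn.Module):
    # Downsampling by max pooling: kernel_size = stride = k (2 by default)
    def __init__(self, k=2):
        super(MP, self).__init__()
        self.m = nn.MaxPool2d(kernel_size=k, stride=k)

    def forward(self, x):
        return self.m(x)

The structure, drawn as a diagram: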

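MP itself is just a MaxPool2d with kernel_size and stride both set to 2. The MP block built around it has two branches: one applies this MaxPool2d followed by a 1x1 convolution, the other a 1x1 convolution followed by a 3x3 convolution with stride 2. The outputs of the two branches are then concatenated, which halves H and W; in the backbone the channel count stays the same (in the head, a third input from an earlier layer is concatenated as well).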

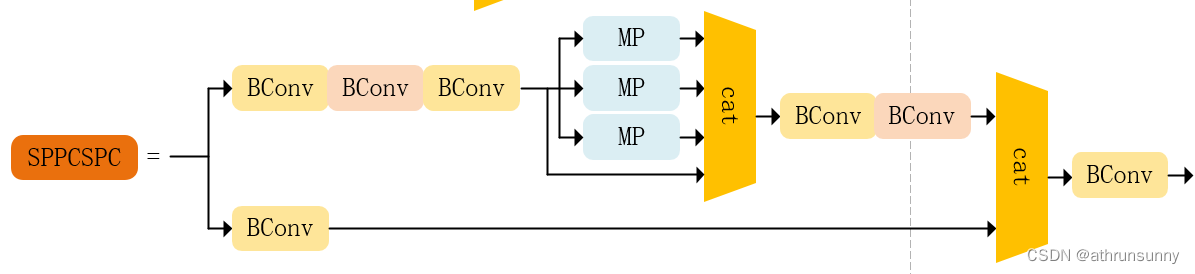
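The two branches only line up because both halve the spatial size. The sketch below (hypothetical helper names, assuming MaxPool2d's default padding of 0 and conv padding k // 2) computes each branch's H and W and checks that they match before concatenating.

```python
def maxpool_out(size: int, k: int = 2, s: int = 2) -> int:
    # nn.MaxPool2d with padding=0 (the default): floor((size - k) / s) + 1
    return (size - k) // s + 1


def conv_out(size: int, k: int, s: int) -> int:
    # Conv with padding k // 2: floor((size + 2p - k) / s) + 1
    return (size + 2 * (k // 2) - k) // s + 1


def mp_block(h: int, w: int, c_branch: int) -> tuple:
    """Output (C, H, W) of the two-branch MP block; c_branch is each branch's conv width."""
    # Branch 1: MP (2x2, stride 2) -> 1x1 conv (the 1x1 conv keeps H and W)
    b1 = (maxpool_out(h), maxpool_out(w))
    # Branch 2: 1x1 conv -> 3x3 conv with stride 2
    b2 = (conv_out(conv_out(h, 1, 1), 3, 2), conv_out(conv_out(w, 1, 1), 3, 2))
    # Concat needs matching H and W; this holds for even sizes
    assert b1 == b2, f"branch sizes differ: {b1} vs {b2}"
    return (2 * c_branch, b1[0], b1[1])


if __name__ == "__main__":
    print(mp_block(160, 160, 128))  # backbone layers 12-16
```

For an odd size the branches disagree (41 pools to 20 but convolves to 21), which is one reason input sizes are kept at multiples of 32. In the head, the Concat also takes layer 63 or 51, so channels come out as 128 + 128 + 256 = 512 and 256 + 256 + 512 = 1024.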

4. SPPCSPC module

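This module combines spatial pyramid pooling (SPP) with a CSP (Cross Stage Partial) structure, and it also uses several branches. The code is as follows: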

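import torch
import torch.nn as nn
# Conv (Conv2d + BN + SiLU) is defined in the same file, models/common.py, in the YOLOv7 repo


class SPPCSPC(nn.Module):
    # CSP https://github.com/WongKinYiu/CrossStagePartialNetworks
    def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5, k=(5, 9, 13)):
        super(SPPCSPC, self).__init__()
        c_ = int(2 * c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c1, c_, 1, 1)
        self.cv3 = Conv(c_, c_, 3, 1)
        self.cv4 = Conv(c_, c_, 1, 1)
        # stride-1 max pools with padding k // 2 keep H and W unchanged
        self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])
        self.cv5 = Conv(4 * c_, c_, 1, 1)  # 4 = x1 plus three pooled copies
        self.cv6 = Conv(c_, c_, 3, 1)
        self.cv7 = Conv(2 * c_, c2, 1, 1)

    def forward(self, x):
        x1 = self.cv4(self.cv3(self.cv1(x)))
        # branch 1: SPP on x1 (x1 concatenated with its three pooled versions)
        y1 = self.cv6(self.cv5(torch.cat([x1] + [m(x1) for m in self.m], 1)))
        # branch 2: a single 1x1 conv on the input (the CSP shortcut)
        y2 = self.cv2(x)
        return self.cv7(torch.cat((y1, y2), dim=1))

Drawn as a diagram, it gives the figure below: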

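Here, MP stands for the pyramid pooling (not the MP module from section 3): x1 and its outputs from three max-pooling layers (kernel sizes 5, 9 and 13, stride 1, padding k // 2, so H and W are unchanged) are concatenated. Finally, the outputs of the two branches, y1 and y2, are concatenated and fused by cv7.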
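The channel bookkeeping of SPPCSPC.forward can be traced without PyTorch. This hypothetical helper (`sppcspc_trace` is not in the repository) mirrors the layer definitions in the code above and checks that the stride-1 pools keep the spatial size; for layer 51 the input comes from layer 50 with 1024 channels and c2 = 512.

```python
def pool_same(size: int, k: int) -> int:
    # nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2): size + 2p - k + 1
    return size + 2 * (k // 2) - k + 1


def sppcspc_trace(c1: int, c2: int, size: int, e: float = 0.5, k=(5, 9, 13)) -> dict:
    """Channel and size bookkeeping for SPPCSPC.forward, mirroring its layer definitions."""
    c_ = int(2 * c2 * e)  # hidden channels, as in the code
    assert all(pool_same(size, kk) == size for kk in k)  # pooling keeps H and W
    return {
        "in": c1,
        "x1": c_,                          # cv1 -> cv3 -> cv4
        "pool_concat": c_ * (1 + len(k)),  # x1 plus one pooled copy per kernel -> cv5 input
        "y1": c_,                          # cv5 -> cv6
        "y2": c_,                          # cv2 applied to the raw input
        "branch_concat": 2 * c_,           # cat(y1, y2) -> cv7 input
        "out": c2,                         # cv7
        "size": size,
    }


if __name__ == "__main__":
    print(sppcspc_trace(1024, 512, 20))
```

Note that the code hard-codes `4 * c_` for cv5, which matches `1 + len(k)` only for the default three kernels.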

5. RepConv module

The code is as follows:

import torch.nn as nn
# autopad is defined in the same file (models/common.py in the YOLOv7 repo)


class RepConv(nn.Module):
    # Represented convolution
    # https://arxiv.org/abs/2101.03697
    def __init__(self, c1, c2, k=3, s=1, p=None, g=1, act=True, deploy=False):
        super(RepConv, self).__init__()
        self.deploy = deploy
        self.groups = g
        self.in_channels = c1
        self.out_channels = c2

        assert k == 3
        assert autopad(k, p) == 1

        padding_11 = autopad(k, p) - k // 2  # padding of the 1x1 branch (0)

        self.act = nn.SiLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity())

        if deploy:
            # inference: a single fused 3x3 conv with bias
            self.rbr_reparam = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=True)
        else:
            # training: BN-only identity branch, present only when c1 == c2 and s == 1
            self.rbr_identity = (nn.BatchNorm2d(num_features=c1) if c2 == c1 and s == 1 else None)
            # 3x3 conv + BN branch
            self.rbr_dense = nn.Sequential(
                nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False),
                nn.BatchNorm2d(num_features=c2),
            )
            # 1x1 conv + BN branch
            self.rbr_1x1 = nn.Sequential(
                nn.Conv2d(c1, c2, 1, s, padding_11, groups=g, bias=False),
                nn.BatchNorm2d(num_features=c2),
            )

    def forward(self, inputs):
        if hasattr(self, "rbr_reparam"):
            return self.act(self.rbr_reparam(inputs))

        if self.rbr_identity is None:
            id_out = 0
        else:
            id_out = self.rbr_identity(inputs)

        return self.act(self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out)

Drawn as a diagram, it gives the figure below:

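For a detailed explanation of this module, see the BACKBONE part. Note that in the config above, the RepConv layers 102-104 change the channel count (128 to 256, 256 to 512, 512 to 1024), so `rbr_identity` is `None` for them and only the 3x3 and 1x1 branches are summed.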
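RepConv follows the RepVGG idea from the linked paper: during training the output is the sum of a 3x3 conv + BN, a 1x1 conv + BN and (when c1 == c2 and s == 1) a BN-only identity branch, while the `deploy` path replaces all of them with the single `rbr_reparam` 3x3 conv. The pure-Python sketch below checks that algebra on a toy 2-channel 4x4 input: it folds each BN into its kernel (W * t and beta - mean * t, with t = gamma / sqrt(var + eps)), zero-pads the 1x1 kernel to 3x3, writes the identity as a centred 3x3 kernel, sums kernels and biases, and compares the result with the branch sum. It assumes groups=1 and stride 1, compares values before `self.act`, includes the identity branch for generality even though layers 102-104 do not have one, and all helper names are hypothetical; it is not the repository's own fusion code.

```python
import math
import random

random.seed(0)
# Toy sizes (hypothetical). c_in == c_out and stride 1, so the identity branch exists.
C, H, W, EPS = 2, 4, 4, 1e-5


def rand_tensor(*shape):
    """Nested lists of uniform random floats with the given shape."""
    if len(shape) == 1:
        return [random.uniform(-1.0, 1.0) for _ in range(shape[0])]
    return [rand_tensor(*shape[1:]) for _ in range(shape[0])]


def rand_bn(c):
    """Random inference-time BatchNorm parameters and running stats (variance kept positive)."""
    return {"gamma": rand_tensor(c), "beta": rand_tensor(c), "mean": rand_tensor(c),
            "var": [random.uniform(0.5, 1.5) for _ in range(c)]}


def conv(x, w, b):
    """Naive stride-1 conv with padding k // 2. x: [Ci][H][W], w: [Co][Ci][k][k], b: [Co]."""
    k = len(w[0][0])
    p = k // 2
    h, wd = len(x[0]), len(x[0][0])
    out = []
    for o in range(len(w)):
        plane = [[b[o]] * wd for _ in range(h)]
        for i in range(len(x)):
            for r in range(h):
                for c in range(wd):
                    for dr in range(k):
                        for dc in range(k):
                            rr, cc = r + dr - p, c + dc - p
                            if 0 <= rr < h and 0 <= cc < wd:
                                plane[r][c] += w[o][i][dr][dc] * x[i][rr][cc]
        out.append(plane)
    return out


def bn(x, p):
    """Inference-mode BatchNorm2d: gamma * (x - mean) / sqrt(var + eps) + beta, per channel."""
    return [[[p["gamma"][ch] * (v - p["mean"][ch]) / math.sqrt(p["var"][ch] + EPS) + p["beta"][ch]
              for v in row] for row in x[ch]] for ch in range(len(x))]


def fuse(w, p):
    """Fold a BN into a bias-free conv: W' = W * t, b' = beta - mean * t."""
    t = [p["gamma"][o] / math.sqrt(p["var"][o] + EPS) for o in range(len(w))]
    w_f = [[[[v * t[o] for v in row] for row in w[o][i]] for i in range(len(w[o]))] for o in range(len(w))]
    b_f = [p["beta"][o] - p["mean"][o] * t[o] for o in range(len(w))]
    return w_f, b_f


def center_3x3(w1x1):
    """Zero-pad a 1x1 kernel [Co][Ci][1][1] to 3x3 by placing it at the centre."""
    return [[[[0.0, 0.0, 0.0], [0.0, k[0][0], 0.0], [0.0, 0.0, 0.0]] for k in row] for row in w1x1]


def identity_3x3(c):
    """The identity mapping as a 3x3 kernel: 1 at the centre where out channel == in channel."""
    return [[[[0.0, 0.0, 0.0], [0.0, 1.0 if o == i else 0.0, 0.0], [0.0, 0.0, 0.0]]
             for i in range(c)] for o in range(c)]


x = rand_tensor(C, H, W)
w3, bn3 = rand_tensor(C, C, 3, 3), rand_bn(C)  # like rbr_dense
w1, bn1 = rand_tensor(C, C, 1, 1), rand_bn(C)  # like rbr_1x1
bn_id = rand_bn(C)                              # like rbr_identity
zeros = [0.0] * C

# Training-time: sum of the three branches (before the activation)
outs = [bn(conv(x, w3, zeros), bn3), bn(conv(x, w1, zeros), bn1), bn(x, bn_id)]
y_train = [[[sum(o[ch][r][c] for o in outs) for c in range(W)] for r in range(H)] for ch in range(C)]

# Deploy-time: fold each branch into a 3x3 kernel + bias, then add them up
parts = [fuse(w3, bn3), fuse(center_3x3(w1), bn1), fuse(identity_3x3(C), bn_id)]
w_rep = [[[[sum(pw[o][i][r][c] for pw, _ in parts) for c in range(3)] for r in range(3)]
          for i in range(C)] for o in range(C)]
b_rep = [sum(pb[o] for _, pb in parts) for o in range(C)]
y_deploy = conv(x, w_rep, b_rep)

max_err = max(abs(a - b) for pa, pb in zip(y_train, y_deploy)
              for ra, rb in zip(pa, pb) for a, b in zip(ra, rb))
print(f"max |train - deploy| = {max_err:.2e}")
```

The difference is only floating-point rounding, which is why the fused single conv can replace the three branches at inference time without changing the output.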

Finally, if anything here is misunderstood, corrections are welcome.
