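Ensemble methods mainly fall into two families, Bagging and Boosting. Both combine existing classification or regression algorithms into a single, stronger model. They differ in how the member models are combined, and therefore in what they achieve. Bagging trains the base learners independently on bootstrap resamples of the data and averages or votes over their outputs, which mainly reduces variance. Boosting trains the base learners one after another, each focusing on the errors left by the ones before it, which mainly reduces bias. A common Bagging-based ensemble is the random forest; common Boosting-based ensembles include AdaBoost, GBDT, XGBoost and LightGBM.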
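To make the difference concrete, here is a minimal scikit-learn sketch on synthetic data from `make_classification` (an assumption; it is not the article's dataset). `BaggingClassifier` with full-depth trees stands in for the Bagging family and `AdaBoostClassifier` with depth-1 trees for the Boosting family; the parameter values are illustrative, not tuned.

```python
# Bagging vs. Boosting on synthetic data (assumption: not the article's dataset).
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0)

# Bagging: full-depth trees trained independently on bootstrap samples, combined by voting.
bagging = BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0)

# Boosting: depth-1 trees (stumps) trained one after another; each round reweights
# the samples that the previous stumps misclassified.
boosting = AdaBoostClassifier(DecisionTreeClassifier(max_depth=1), n_estimators=50, random_state=0)

scores = {}
for name, model in [("bagging", bagging), ("boosting", boosting)]:
    model.fit(X_tr, y_tr)
    scores[name] = model.score(X_te, y_te)  # test-set accuracy
    print(name, round(scores[name], 3))
```

Both ensembles use 50 trees; what differs is the training scheme: independent and parallelizable for bagging, sequential with sample reweighting for boosting.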

How XGBoost works, in simplified form (each new tree is trained on what the previous trees still get wrong):

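Suppose one sample [features -> label] is: [(feature1, feature2, feature3) -> 1000 yuan]

The first tree, trained on this sample, predicts 950.

So when the second tree is trained, the target for this sample becomes the residual 1000 - 950: [(feature1, feature2, feature3) -> 50]

The second tree, trained on this sample, predicts 30.

So when the third tree is trained, the target becomes the remaining residual 50 - 30: [(feature1, feature2, feature3) -> 20]

The third tree, trained on this sample, predicts 20.

The model's final prediction is the sum of all trees' outputs: 950 + 30 + 20 = 1000.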
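The same walk-through as a few lines of Python. The tree outputs 950, 30 and 20 are copied from the example rather than learned, and the sketch leaves out the learning rate (shrinkage) and regularization that real XGBoost applies, so it only illustrates the additive residual-fitting idea.

```python
# Toy reproduction of the 1000-yuan example (tree outputs taken from the text, not learned).
label = 1000
tree_outputs = [950, 30, 20]

prediction = 0
targets = []  # the training target each tree sees for this sample
for output in tree_outputs:
    targets.append(label - prediction)  # residual = label - current ensemble prediction
    prediction += output                # additive model: add this tree's output

print(targets)     # [1000, 50, 20]
print(prediction)  # 1000
```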

Now for a practical case, taking financial risk control as an example.

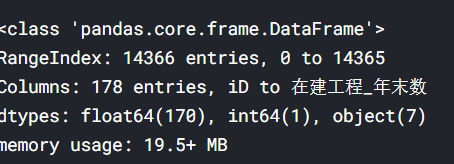

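The dataset contains a large amount of financial data together with each company's own characteristics, 178 features in total.

We first compare several baseline models.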
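The baseline snippets use `X_train`, `X_test`, `y_train` and `y_test` without showing how they were produced, and they repeat the same fit/predict/score steps for every model. Here is a sketch of both pieces under stated assumptions: synthetic, imbalanced data from `make_classification` stands in for the 178-feature dataset (in risk control the risky class is typically the minority), and `evaluate` is a hypothetical helper, not part of the original code.

```python
# Assumption: synthetic, imbalanced binary data stand in for the real 178-feature dataset.
from sklearn import metrics
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=2000, n_features=178, weights=[0.9, 0.1], random_state=42)

# stratify=y keeps the minority-class ratio (almost) identical in both splits.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42)


def evaluate(model, X_train, y_train, X_test, y_test):
    """Hypothetical helper: fit `model` and return the metrics the baselines report."""
    model.fit(X_train, y_train)
    pred_test = model.predict(X_test)
    return {
        "train_acc": model.score(X_train, y_train),
        "test_acc": metrics.accuracy_score(y_test, pred_test),
        "test_f1": metrics.f1_score(y_test, pred_test),
        "test_recall": metrics.recall_score(y_test, pred_test),
    }


results = evaluate(RandomForestClassifier(random_state=42), X_train, y_train, X_test, y_test)
print(results)
```

With a helper like this, each baseline reduces to one `evaluate(...)` call, which makes the comparison easier to keep consistent.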

Random forest model

```python
from sklearn import metrics
from sklearn.ensemble import RandomForestClassifier

# assumes X_train, X_test, y_train, y_test already exist

# create the model
RF_model = RandomForestClassifier()

# fit the model
RF_model.fit(X_train, y_train)

# predictions
predict_train_RF = RF_model.predict(X_train)
predict_test_RF = RF_model.predict(X_test)

# accuracy score
RF_train_score = RF_model.score(X_train, y_train)
RF_test_score = RF_model.score(X_test, y_test)

# f1-score
RF_f1_score = metrics.f1_score(y_test, predict_test_RF)

print('Accuracy on Train set:', RF_train_score)
print('Accuracy on Test set:', RF_test_score)
print('F1-score on Test set:', RF_f1_score)
print(metrics.classification_report(y_test, predict_test_RF))
```

Logistic regression model
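Despite its name, logistic regression is a linear classifier: it models P(y = 1 | x) = sigmoid(w·x + b). scikit-learn fits it by iterative optimization with an L2 penalty by default, so features on very different scales (monetary amounts next to ratios, say) can slow convergence and weight the penalty unevenly; standardizing first is a common remedy. A minimal sketch on synthetic data (an assumption; five columns are deliberately inflated to mimic unscaled monetary features):

```python
# Assumption: synthetic data with a few badly scaled columns, not the article's dataset.
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X[:, :5] *= 1e4  # exaggerate scale differences between columns
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# The scaler is fit on the training split only (inside the pipeline),
# so no test-set statistics leak into training.
scaled_lr = make_pipeline(StandardScaler(), LogisticRegression(max_iter=2000))
scaled_lr.fit(X_train, y_train)

acc = scaled_lr.score(X_test, y_test)
print("Test accuracy with scaling:", round(acc, 3))

# Column 1 of predict_proba is P(y = 1 | x), usable as a risk score.
proba = scaled_lr.predict_proba(X_test)[:, 1]
```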

```python
from sklearn import metrics
from sklearn.linear_model import LogisticRegression

LR_model = LogisticRegression(max_iter=2000)

# fit the model
LR_model.fit(X_train, y_train)

# predictions
predict_train_LR = LR_model.predict(X_train)
predict_test_LR = LR_model.predict(X_test)

# accuracy score
LR_train_score = LR_model.score(X_train, y_train)
LR_test_score = LR_model.score(X_test, y_test)

# f1-score and recall
LR_f1_score = metrics.f1_score(y_test, predict_test_LR)
LR_recall = metrics.recall_score(y_test, predict_test_LR)

print('Accuracy on Train set:', LR_train_score)
print('Accuracy on Test set:', LR_test_score)
print('F1-score on Test set:', LR_f1_score)
print('Recall on Test set:', LR_recall)
print(metrics.classification_report(y_test, predict_test_LR))
```

LDA (linear discriminant analysis) model
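Linear discriminant analysis assumes each class is Gaussian with a shared covariance matrix, which makes the decision boundary linear. A small check on synthetic data (an assumption) that the fitted `decision_function` is exactly the linear score built from `coef_` and `intercept_`, and that LDA can also project binary data onto a single axis:

```python
# Assumption: synthetic binary data, not the article's dataset.
import numpy as np
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = make_classification(n_samples=500, n_features=10, random_state=0)
lda = LinearDiscriminantAnalysis(solver="svd").fit(X, y)

# For a binary label the decision function is the linear score w·x + b.
scores = lda.decision_function(X)
manual = X @ lda.coef_.ravel() + lda.intercept_[0]
print(np.allclose(scores, manual))  # True

# As a dimensionality reducer, LDA keeps at most (n_classes - 1) = 1 component here.
projected = lda.transform(X)
print(projected.shape)  # (500, 1)
```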

```python
from sklearn import metrics
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

LDA = LinearDiscriminantAnalysis(solver='svd')

# fit the model
LDA.fit(X_train, y_train)

# predictions
predict_train_LDA = LDA.predict(X_train)
predict_test_LDA = LDA.predict(X_test)

# accuracy score
LDA_train_score = LDA.score(X_train, y_train)
LDA_test_score = LDA.score(X_test, y_test)

# f1-score and recall
LDA_f1_score = metrics.f1_score(y_test, predict_test_LDA)
LDA_recall = metrics.recall_score(y_test, predict_test_LDA)

print('Accuracy on Train set:', LDA_train_score)
print('Accuracy on Test set:', LDA_test_score)
print('F1-score on Test set:', LDA_f1_score)
print('Recall on Test set:', LDA_recall)
print(metrics.classification_report(y_test, predict_test_LDA))
```

Naive Bayes (NB) model

```python
from sklearn import metrics
from sklearn.naive_bayes import GaussianNB

NB_model = GaussianNB()

# fit the model
NB_model.fit(X_train, y_train)

# predictions
predict_train_NB = NB_model.predict(X_train)
predict_test_NB = NB_model.predict(X_test)

# accuracy score
NB_train_score = NB_model.score(X_train, y_train)
NB_test_score = NB_model.score(X_test, y_test)

# f1-score and recall
NB_f1_score = metrics.f1_score(y_test, predict_test_NB)
NB_recall = metrics.recall_score(y_test, predict_test_NB)

print('Accuracy on Train set:', NB_train_score)
print('Accuracy on Test set:', NB_test_score)
print('F1-score on Test set:', NB_f1_score)
print('Recall on Test set:', NB_recall)
print(metrics.classification_report(y_test, predict_test_NB))
```

Next, tune the random forest's hyperparameters with a randomized search (`RandomizedSearchCV`).
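Because every entry in the parameter grid is a list, `RandomizedSearchCV` samples settings from the 2^5 = 32 possible combinations without replacement, so `n_iter=20` evaluates 20 distinct settings, each with 3-fold cross-validation (60 fits plus one final refit on the best setting). The sampler it uses internally, `ParameterSampler`, can list those candidates directly; a sketch with the same grid:

```python
from sklearn.model_selection import ParameterSampler

parameters = {'n_estimators': [400, 500],
              'max_depth': [7, 10],
              'max_features': [4, 5],
              'min_samples_split': [100, 150],
              'min_samples_leaf': [30, 40]}

# All values are lists, so sampling is without replacement over the 32 combinations.
candidates = list(ParameterSampler(parameters, n_iter=20, random_state=42))
distinct = {tuple(sorted(c.items())) for c in candidates}
print(len(candidates), len(distinct))  # 20 20
```

This is random search, not Bayesian optimization: the candidates are fixed up front and do not depend on earlier scores. A Bayesian optimizer, such as `BayesSearchCV` from scikit-optimize, would instead pick each new setting based on the results so far. Also note that the search scores candidates by accuracy by default; for an imbalanced risk label, passing `scoring="f1"` may be a more useful target.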

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

# fine-tune the random forest: try 20 random parameter settings,
# each evaluated with 3-fold cross-validation
parameters = {'n_estimators': [400, 500],
              'max_depth': [7, 10],
              'max_features': [4, 5],
              'min_samples_split': [100, 150],
              'min_samples_leaf': [30, 40]}

rf = RandomForestClassifier()
rf_model_tune = RandomizedSearchCV(rf, param_distributions=parameters, cv=3,
                                   n_iter=20, verbose=2, random_state=42)
rf_model_tune.fit(X_train, y_train)

# best parameter setting found by the search
print(rf_model_tune.best_params_)
```

The performance of each model is as follows:

Then train an XGBoost classifier.
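With `objective="binary:logistic"`, XGBoost generalizes the residual idea from the 1000-yuan example. For each sample it computes the gradient g = p - y and the Hessian h = p(1 - p) of the log loss with respect to the current raw score (p is the predicted probability), and a leaf whose samples have gradient sum G and Hessian sum H gets the weight w* = -G / (H + λ), where λ is the L2 regularization term (`reg_lambda`, default 1). For squared error, g is the negative residual and h = 1, so with λ = 0 the leaf weight is just the mean residual, as in the example. A pure-Python sketch of these two formulas only, without tree construction, shrinkage or the split-gain search:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def grad_hess(y, margin):
    """Gradient and Hessian of the logistic loss w.r.t. the raw score (margin)."""
    p = sigmoid(margin)
    return p - y, p * (1.0 - p)


def leaf_weight(samples, reg_lambda=1.0):
    """Optimal leaf weight w* = -G / (H + lambda) for (label, margin) pairs in one leaf."""
    G = sum(grad_hess(y, m)[0] for y, m in samples)
    H = sum(grad_hess(y, m)[1] for y, m in samples)
    return -G / (H + reg_lambda)


# Labels 1 and 0, both starting from raw score 0 (p = 0.5).
print(grad_hess(1, 0.0))              # (-0.5, 0.25)
print(leaf_weight([(1, 0.0)]))        # 0.4
print(leaf_weight([(1, 0.0), (0, 0.0)]))
```

A leaf whose samples pull in opposite directions (the last call) gets a weight of zero. In XGBoost the leaf weight is further multiplied by the learning rate before being added to each sample's raw score.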

```python
# simple XGBoost classifier
import xgboost as xgb
from sklearn import metrics

xgb_model = xgb.XGBClassifier(objective="binary:logistic", random_state=42)
xgb_model.fit(X_train, y_train)

# predictions
predict_train_xgb = xgb_model.predict(X_train)
predict_test_xgb = xgb_model.predict(X_test)

# accuracy score
xgb_train_score = metrics.accuracy_score(y_train, predict_train_xgb)
xgb_test_score = metrics.accuracy_score(y_test, predict_test_xgb)

# f1-score and recall
xgb_f1_score = metrics.f1_score(y_test, predict_test_xgb)
xgb_recall = metrics.recall_score(y_test, predict_test_xgb)

print('Accuracy on Train set:', xgb_train_score)
print('Accuracy on Test set:', xgb_test_score)
print('F1-score on Test set:', xgb_f1_score)
print('Recall on Test set:', xgb_recall)
print(metrics.classification_report(y_test, predict_test_xgb))
```

The results are as follows:

Some of the metrics improve compared with the baseline models.
