注意点:

1 数据预处理阶段(添加特殊字符)

主要用来进行字符补全,和都是用在Decoder端的序列中,告诉解码器句子的起始与结束

则用来替代一些未出现过的词或者低频词

2 encoder

直接LSTM或RNN模型

#下面我用到的是LSTM模型

3 decoder

对target数据进行处理

构造Decoder

在训练过程中,我们需要将我们的target序列作为输入传给Decoder端RNN的每个阶段,而不是使用前一阶段预测输出,这样会使得模型更加准确。TrainingHelper用于训练阶段,GreedyEmbeddingHelper用于测试阶段

Embedding

构造Decoder层

构造输出层,输出层会告诉我们每个时间序列的RNN输出结果

Training Decoder

Predicting Decoder

4 构建好了Encoder层与Decoder以后,我们需要将它们连接起来build我们的Seq2Seq模型

图中每一个box代表了一个RNN单元,通常是LSTM或者GRU。其实基础的Seq2Seq是有很多弊端的,首先Encoder将输入编码为固定大小状态向量的过程实际上是一个信息“信息有损压缩”的过程,如果信息量越大,那么这个转化向量的过程对信息的损失就越大,同时,随着sequence length的增加,意味着时间维度上的序列很长,RNN模型也会出现梯度弥散。最后,基础的模型连接Encoder和Decoder模块的组件仅仅是一个固定大小的状态向量,这使得Decoder无法直接去关注到输入信息的更多细节。由于基础Seq2Seq的种种缺陷,随后引入了Attention的概念以及Bi-directional encoder layer等,由于本篇文章主要是构建一个基础的Seq2Seq模型,对其他改进tricks先不做介绍。

总结起来说,基础的Seq2Seq主要包括Encoder,Decoder,以及连接两者的固定大小的State Vector。

代码如下:

1.seq2seq_modle.py代码

#coding=utf-8

__author__ = 'wangbing'

from distutils.version import LooseVersion

import tensorflow as tf

from tensorflow.python.layers.core import Dense

import numpy as np

import time

# encoder层

# decoder层

# seq2seq model

# Check TensorFlow Version

assert LooseVersion(tf.__version__) >= LooseVersion('1.1'), 'Please use TensorFlow version 1.1 or newer'

print('TensorFlow Version: {}'.format(tf.__version__))

# encode层输出结果

def get_encoder_layer(input_data, rnn_size, num_layers,

source_sequence_length, source_vocab_size,

encoding_embedding_size):

'''

构造Encoder层

参数说明:

- input_data: 输入tensor

- rnn_size: rnn隐层结点数量

- num_layers: 堆叠的rnn cell数量

- source_sequence_length: 源数据的序列长度

- source_vocab_size: 源数据的词典大小

- encoding_embedding_size: embedding的大小

'''

# Encoder embedding

encoder_embed_input = tf.contrib.layers.embed_sequence(input_data, source_vocab_size, encoding_embedding_size)

# RNN cell

def get_lstm_cell(rnn_size):

lstm_cell = tf.contrib.rnn.LSTMCell(rnn_size, initializer=tf.random_uniform_initializer(-0.1, 0.1, seed=2))

return lstm_cell

# lstm_cell = tf.contrib.rnn.LSTMCell(rnn_size, initializer=tf.random_uniform_initializer(-0.1, 0.1, seed=2))

cell = tf.contrib.rnn.MultiRNNCell([get_lstm_cell(rnn_size) for _ in range(num_layers)])

#encoder_state表示encoder最终状态

encoder_output, encoder_state = tf.nn.dynamic_rnn(cell, encoder_embed_input,

sequence_length=source_sequence_length, dtype=tf.float32)

# lstm_cell_fw = tf.nn.rnn_cell.BasicLSTMCell(encoding_embedding_size, forget_bias=1.0)

# input_batch_size = tf.shape(input_data)[0] + 0

# initial_state_sentence = lstm_cell_fw.zero_state(input_batch_size, dtype=tf.float32)

# encoder_output, encoder_state = tf.nn.dynamic_rnn(lstm_cell_fw, encoder_embed_input, dtype=tf.float32,

# initial_state=initial_state_sentence)

return encoder_output, encoder_state

def process_decoder_input(data, vocab_to_int, batch_size):

'''

补充<GO>,并移除最后一个字符

'''

# cut掉最后一个字符

ending = tf.strided_slice(data, [0, 0], [batch_size, -1], [1, 1])

decoder_input = tf.concat([tf.fill([batch_size, 1], vocab_to_int['<GO>']), ending], 1)

return decoder_input

def decoding_layer(target_letter_to_int, decoding_embedding_size, num_layers, rnn_size,

target_sequence_length, max_target_sequence_length, encoder_state, decoder_input, batch_size):

'''

构造Decoder层

参数:

- target_letter_to_int: target数据的映射表

- decoding_embedding_size: embed向量大小

- num_layers: 堆叠的RNN单元数量

- rnn_size: RNN单元的隐层结点数量

- target_sequence_length: target数据序列长度

- max_target_sequence_length: target数据序列最大长度

- encoder_state: encoder端编码的状态向量

- decoder_input: decoder端输入

'''

# 1. Embedding

target_vocab_size = len(target_letter_to_int)

decoder_embeddings = tf.Variable(tf.random_uniform([target_vocab_size, decoding_embedding_size]))

decoder_embed_input = tf.nn.embedding_lookup(decoder_embeddings, decoder_input)

# 2. 构造Decoder中的RNN单元

def get_decoder_cell(rnn_size):

decoder_cell = tf.contrib.rnn.LSTMCell(rnn_size,

initializer=tf.random_uniform_initializer(-0.1, 0.1, seed=2))

return decoder_cell

cell = tf.contrib.rnn.MultiRNNCell([get_decoder_cell(rnn_size) for _ in range(num_layers)])

# 3. Output全连接层

output_layer = Dense(target_vocab_size,

kernel_initializer = tf.truncated_normal_initializer(mean = 0.0, stddev=0.1))

# 4. Training decoder

# TrainingHelper用于train阶段

# encoder_state表示encoder的final state

with tf.variable_scope("decode"):

# 得到help对象

training_helper = tf.contrib.seq2seq.TrainingHelper(inputs=decoder_embed_input,

sequence_length=target_sequence_length,

time_major=False)

# 构造decoder

training_decoder = tf.contrib.seq2seq.BasicDecoder(cell,

training_helper,

encoder_state,

output_layer)

training_decoder_output, _, _ = tf.contrib.seq2seq.dynamic_decode(training_decoder,

impute_finished=True,

maximum_iterations=max_target_sequence_length)

# 5. Predicting decoder

# 与training共享参数

# GreedyEmbeddingHelper用于test阶段

with tf.variable_scope("decode", reuse=True):

# 创建一个常量tensor并复制为batch_size的大小

start_tokens = tf.tile(tf.constant([target_letter_to_int['<GO>']], dtype=tf.int32), [batch_size],

name='start_tokens')

predicting_helper = tf.contrib.seq2seq.GreedyEmbeddingHelper(decoder_embeddings,

start_tokens,

target_letter_to_int['<EOS>'])

predicting_decoder = tf.contrib.seq2seq.BasicDecoder(cell,

predicting_helper,

encoder_state,

output_layer)

predicting_decoder_output, _, _ = tf.contrib.seq2seq.dynamic_decode(predicting_decoder,

impute_finished=True,

maximum_iterations=max_target_sequence_length)

return training_decoder_output, predicting_decoder_output

def seq2seq_model(input_data, targets, lr, target_sequence_length,

max_target_sequence_length, source_sequence_length,

source_vocab_size, target_vocab_size,

encoding_embedding_size, decoder_embedding_size,

rnn_size, num_layers, target_letter_to_int, batch_size, decoding_embedding_size):

# 获取encoder的状态输出

_, encoder_state = get_encoder_layer(input_data,

rnn_size,

num_layers,

source_sequence_length,

source_vocab_size,

encoding_embedding_size)

# 预处理后的decoder输入

decoder_input = process_decoder_input(targets, target_letter_to_int, batch_size)

# 将状态向量与输入传递给decoder

training_decoder_output, predicting_decoder_output = decoding_layer(target_letter_to_int,

decoding_embedding_size,

num_layers,

rnn_size,

target_sequence_length,

max_target_sequence_length,

encoder_state,

decoder_input,

batch_size)

return training_decoder_output, predicting_decoder_output

2.data_process.py的代码

#coding=utf-8

__author__ = 'wangbing'

# 构造词和id对应的标

def extract_character_vocab(data):

'''

构造映射表

'''

special_words = ['<PAD>', '<UNK>', '<GO>', '<EOS>']

set_words = list(set([character for line in data.split('\n') for character in line]))

set_words.sort()

# 这里要把四个特殊字符添加进词典

int_to_vocab = {idx: word for idx, word in enumerate(special_words + set_words)}

vocab_to_int = {word: idx for idx, word in int_to_vocab.items()}

return int_to_vocab, vocab_to_int

3.训练代码train.py

#coding=utf-8

import os

from data_process import extract_character_vocab

# https://blog.csdn.net/leadai/article/details/78809788

# https://www.jianshu.com/p/83443b2baf27

# https://zhuanlan.zhihu.com/p/27608348

__author__ = 'wangbing'

import numpy as np

from seq2seq_model import seq2seq_model

import tensorflow as tf

# 超参数

# Number of Epochs

epochs = 60

# Batch Size

batch_size = 128

# RNN Size

rnn_size = 50

# Number of Layers

num_layers = 2

# Embedding Size

encoding_embedding_size = 15

decoding_embedding_size = 15

# Learning Rate

learning_rate = 0.001

tf.flags.DEFINE_integer("num_checkpoints", 30, "Number of checkpoints to store ")

tf.flags.DEFINE_integer("batch_size", 128, "Batch Size ")

tf.flags.DEFINE_boolean("allow_soft_placement", True, "Allow device soft device placement")

tf.flags.DEFINE_boolean("log_device_placement", False, "Log placement of ops on devices")

tf.flags.DEFINE_boolean("debug", False, "Run with tf debugger")

FLAGS = tf.flags.FLAGS

# FLAGS._parse_flags()

with open('letters_source.txt', 'r', encoding='utf-8') as f:

source_data = f.read()

with open('letters_target.txt', 'r', encoding='utf-8') as f:

target_data = f.read()

source_int_to_letter, source_letter_to_int = extract_character_vocab(source_data)

target_int_to_letter, target_letter_to_int = extract_character_vocab(target_data)

# 对字母进行转换

source_int = [[source_letter_to_int.get(letter, source_letter_to_int['<UNK>'])

for letter in line] for line in source_data.split('\n')]

target_int = [[target_letter_to_int.get(letter, target_letter_to_int['<UNK>'])

for letter in line] + [target_letter_to_int['<EOS>']] for line in target_data.split('\n')]

# 将数据集分割为train和validation

train_source = source_int[batch_size:]

train_target = target_int[batch_size:]

# 留出一个batch进行验证

valid_source = source_int[:batch_size]

valid_target = target_int[:batch_size]

display_step = 50 # 每隔50轮输出loss

#模型参数定义和占位

def get_inputs():

'''

模型输入tensor

'''

inputs = tf.placeholder(tf.int32, [None, None], name='inputs')

targets = tf.placeholder(tf.int32, [None, None], name='targets')

learning_rate = tf.placeholder(tf.float32, name='learning_rate')

# 定义target序列最大长度(之后target_sequence_length和source_sequence_length会作为feed_dict的参数)

target_sequence_length = tf.placeholder(tf.int32, (None,), name='target_sequence_length')

max_target_sequence_length = tf.reduce_max(target_sequence_length, name='max_target_len')

source_sequence_length = tf.placeholder(tf.int32, (None,), name='source_sequence_length')

return inputs, targets, learning_rate, target_sequence_length, max_target_sequence_length, source_sequence_length

def pad_sentence_batch(sentence_batch, pad_int):

'''

对batch中的序列进行补全,保证batch中的每行都有相同的sequence_length

参数:

- sentence batch

- pad_int: <PAD>对应索引号

'''

max_sentence = max([len(sentence) for sentence in sentence_batch])

return [sentence + [pad_int] * (max_sentence - len(sentence)) for sentence in sentence_batch]

def get_batches(targets, sources, batch_size, source_pad_int, target_pad_int):

'''

定义生成器,用来获取batch

'''

for batch_i in range(0, len(sources)//batch_size):

start_i = batch_i * batch_size

sources_batch = sources[start_i:start_i + batch_size]

targets_batch = targets[start_i:start_i + batch_size]

# 补全序列

pad_sources_batch = np.array(pad_sentence_batch(sources_batch, source_pad_int))

pad_targets_batch = np.array(pad_sentence_batch(targets_batch, target_pad_int))

# 记录每条记录的长度

targets_lengths = []

for target in targets_batch:

targets_lengths.append(len(target))

source_lengths = []

for source in sources_batch:

source_lengths.append(len(source))

yield pad_targets_batch, pad_sources_batch, targets_lengths, source_lengths

# 获得模型输入

input_data, targets, lr, target_sequence_length, max_target_sequence_length, source_sequence_length = get_inputs()

# 上面都是数据预处理

# 构建图

#有默认的graph

# with tf.Graph().as_default():

session_conf = tf.ConfigProto(

allow_soft_placement=FLAGS.allow_soft_placement,

log_device_placement=FLAGS.log_device_placement

)

session_conf.gpu_options.allow_growth = True

sess = tf.Session(config=session_conf)

with sess.as_default():

training_decoder_output, predicting_decoder_output = seq2seq_model(input_data,

targets,

lr,

target_sequence_length,

max_target_sequence_length,

source_sequence_length,

len(source_letter_to_int),

len(target_letter_to_int),

encoding_embedding_size,

decoding_embedding_size,

rnn_size,

num_layers,

target_letter_to_int,

batch_size,

decoding_embedding_size)

# tf.identity是返回一个一模一样新的tensor的op,这会增加一个新节点到gragh中

# tf.contrib.seq2seq.dynamic_decode

# 用于构造一个动态的decoder,返回的内容是:

# (final_outputs, final_state, final_sequence_lengths).

# 其中,final_outputs是一个namedtuple,里面包含两项(rnn_outputs, sample_id)

# rnn_output: [batch_size, decoder_targets_length, vocab_size],保存decode每个时刻每个单词的概率,可以用来计算loss

# sample_id: [batch_size], tf.int32,保存最终的编码结果。可以表示最后的答案

# 作者:石晓文的学习日记

training_logits = tf.identity(training_decoder_output.rnn_output, 'logits')

predicting_logits = tf.identity(predicting_decoder_output.sample_id, name='predictions')

masks = tf.sequence_mask(target_sequence_length, max_target_sequence_length, dtype=tf.float32, name='masks')

with tf.name_scope("optimization"):

# Loss function

cost = tf.contrib.seq2seq.sequence_loss(

training_logits,

targets,

masks)

# Optimizer

optimizer = tf.train.AdamOptimizer(lr)

# Gradient Clipping

gradients = optimizer.compute_gradients(cost)

capped_gradients = [(tf.clip_by_value(grad, -5., 5.), var) for grad, var in gradients if grad is not None]

train_op = optimizer.apply_gradients(capped_gradients)

# 用于验证的batch

(valid_targets_batch, valid_sources_batch, valid_targets_lengths, valid_sources_lengths) = next(get_batches(valid_target, valid_source, batch_size,

source_letter_to_int['<PAD>'],

target_letter_to_int['<PAD>']))

#训练部分

checkpoint = "model_checkpoint/trained_model.ckpt"

sess.run(tf.global_variables_initializer())

#checkpoint 保存

# Checkpoint directory. Tensorflow assumes this directory already exists so we need to create it

# out_dir = "runs"

# checkpoint_dir = os.path.abspath(os.path.join(out_dir, "checkpoints"))

# checkpoint_prefix = os.path.join(checkpoint_dir, "model")

# if not os.path.exists(checkpoint_dir):

# os.makedirs(checkpoint_dir)

# saver = tf.train.Saver(tf.global_variables(), max_to_keep=FLAGS.num_checkpoints)

#

# # Write vocabulary

# vocab_processor.save(os.path.join(out_dir, "vocab"))

for epoch_i in range(1, epochs+1):

for batch_i, (targets_batch, sources_batch, targets_lengths, sources_lengths) in enumerate(

get_batches(train_target, train_source, batch_size,

source_letter_to_int['<PAD>'],

target_letter_to_int['<PAD>'])):

feed_dic = {input_data: sources_batch,

targets: targets_batch,

lr: learning_rate,

target_sequence_length: targets_lengths,

source_sequence_length: sources_lengths}

_, loss = sess.run([train_op, cost], feed_dic)

if batch_i % display_step == 0:

# 计算validation loss

feed_dic = {input_data: valid_sources_batch,

targets: valid_targets_batch,

lr: learning_rate,

target_sequence_length: valid_targets_lengths,

source_sequence_length: valid_sources_lengths}

validation_loss = sess.run([cost], feed_dic)

print('Epoch {:>3}/{} Batch {:>4}/{} - Training Loss: {:>6.3f} - Validation loss: {:>6.3f}'

.format(epoch_i,

epochs,

batch_i,

len(train_source) // batch_size,

loss,

validation_loss[0]))

# 保存模型

saver = tf.train.Saver()

saver.save(sess, checkpoint)

print('Model Trained and Saved')

跑一下训练结果:

选取了10个epoch的batch的训练数据,有Training Loss和Validation loss。

4.测试代码,text.py

__author__ = 'wangbing'

#coding=utf-8

import tensorflow as tf

from data_process import extract_character_vocab

batch_size = 128

# batch_size = FLAGS.batch_size

with open('letters_source.txt', 'r', encoding='utf-8') as f:

source_data = f.read()

with open('letters_target.txt', 'r', encoding='utf-8') as f:

target_data = f.read()

source_int_to_letter, source_letter_to_int = extract_character_vocab(source_data)

target_int_to_letter, target_letter_to_int = extract_character_vocab(target_data)

def source_to_seq(text):

'''

对源数据进行转换

'''

sequence_length = 7

return [source_letter_to_int.get(word, source_letter_to_int['<UNK>']) for word in text] + [source_letter_to_int['<PAD>']]*(sequence_length-len(text))

input_word = 'this is my pen!'

text = source_to_seq(input_word)

print(text)

# [15, 16, 9, 9, 16, 10, 0]

# [23, 18, 16, 16, 18, 17, 0]

# [17, 29, 5, 5, 29, 6, 0]

checkpoint = "model_checkpoint/trained_model.ckpt"

# loaded_graph = tf.Graph()

with tf.Session() as sess:

# with tf.Session(graph=loaded_graph) as sess:

# 加载模型

saver = tf.train.import_meta_graph(checkpoint + '.meta')

saver.restore(sess, checkpoint)

graph = tf.get_default_graph()

input_data = graph.get_tensor_by_name('inputs:0')

logits = graph.get_tensor_by_name('predictions:0')

source_sequence_length = graph.get_tensor_by_name('source_sequence_length:0')

target_sequence_length = graph.get_tensor_by_name('target_sequence_length:0')

feed_dic = {input_data: [text]*batch_size,

target_sequence_length: [len(input_word)]*batch_size,

source_sequence_length: [len(input_word)]*batch_size}

answer_logits = sess.run(logits, feed_dic)[0]

pad = source_letter_to_int["<PAD>"]

print('原始输入:', input_word)

print('\nSource')

print(' Word 编号: {}'.format([i for i in text]))

print(' Input Words: {}'.format(" ".join([source_int_to_letter[i] for i in text])))

print('\nTarget')

print(' Word 编号: {}'.format([i for i in answer_logits if i != pad]))

print(' Response Words: {}'.format(" ".join([target_int_to_letter[i] for i in answer_logits if i != pad])))

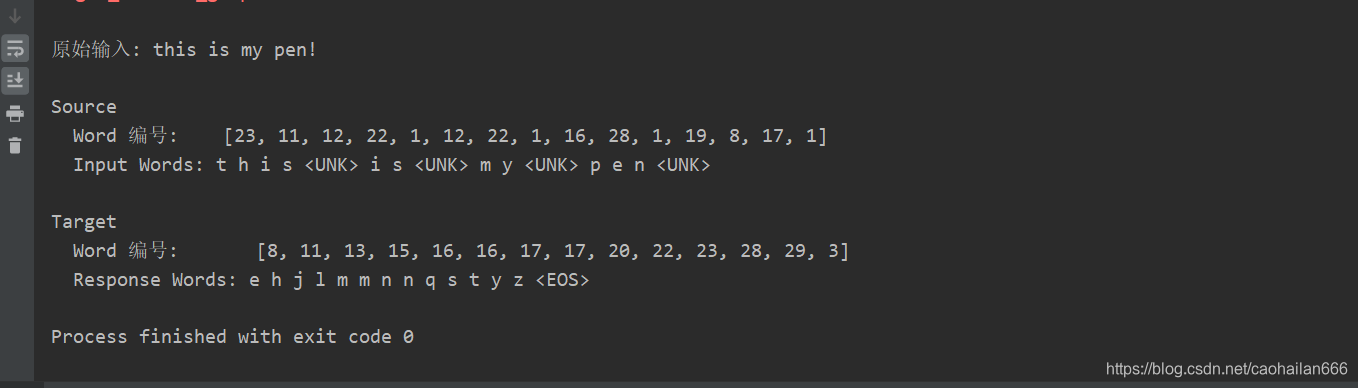

跑下测试结果:

测试结果还是很不错!!!

#######

参考https://zhuanlan.zhihu.com/p/27608348

源码 https://github.com/lbda1/base_seq2seq

#######

504

504

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?