Netty Intro

Background

Synchronous Worker

    import java.io.IOException;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.net.ServerSocket;
    import java.net.Socket;

    public class Server {

        public static void main(String[] args) throws IOException {
            ...
            new Server().server(port);
        }

        public void server(int port) throws IOException {
            // Create a socket for incoming client requests on the provided port
            final ServerSocket listenerSocket = new ServerSocket(port);
            while (true) {
                // Wait on the server socket for a new incoming connection.
                final Socket clientSocket = listenerSocket.accept();
                // <- Execution only continues here once a new connection has been accepted!
                // Then start processing ...
                // Since this thread is busy now, no new connections can be accepted
                worker(clientSocket);
            }
            ...
        }

        private void worker(Socket socket) throws IOException {
            // Get the in/out streams
            InputStream in = socket.getInputStream();
            OutputStream out = socket.getOutputStream();
            // Read - blocks until one of:
            // 1. data is available
            // 2. the stream is complete (end of stream)
            // 3. an exception is thrown
            ...
            // Process the data
            ...
            // Write - blocks until
            // 1. all data has been written to the socket's system buffer
            // 2. if the client is too slow, the system buffer fills up (TCP window drops to zero) and the write blocks
            ...
        }
    }

- Listener and Worker share the same thread;

- Listener is blocked by Worker

- -> Listener is blocked - no new connection is accepted until the Worker completes;

- Read and Write in Worker are blocking

- -> Worker is blocked -> Listener is blocked

Asynchronous Worker

    import java.io.IOException;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.net.ServerSocket;
    import java.net.Socket;

    public class Server {

        public static void main(String[] args) throws IOException {
            ...
            new Server().server(port);
        }

        public void server(int port) throws IOException {
            // Create a socket for incoming client requests on the provided port
            final ServerSocket listenerSocket = new ServerSocket(port);
            while (true) {
                // Wait on the server socket for a new incoming connection.
                final Socket clientSocket = listenerSocket.accept();
                // <- Execution only continues here once a new connection has been accepted!
                // Then start processing on a new thread ...
                new Thread(new Worker(clientSocket)).start();
                ...
                // Go back to accepting new connections ..
            }
            ...
        }
    }

    public class Worker implements Runnable {

        private final Socket socket;

        Worker(Socket socket) {
            this.socket = socket;
        }

        @Override
        public void run() {
            try {
                // Get the in/out streams
                InputStream in = socket.getInputStream();
                OutputStream out = socket.getOutputStream();
                // Read - blocks until one of:
                // 1. data is available
                // 2. the stream is complete (end of stream)
                // 3. an exception is thrown
                ...
                // Process the data
                ...
                // Write - blocks until
                // 1. all data has been written to the socket's system buffer
                // 2. if the client is too slow, the system buffer fills up (TCP window drops to zero) and the write blocks
                ...
            } catch (IOException e) {
                // run() cannot throw checked exceptions, so I/O failures are handled here
                e.printStackTrace();
            }
        }
    }

- Listener and Workers are in different threads;

- Listener is NOT blocked by Worker

- new connections are accepted until the server crashes (too many threads under high concurrency)

- once it has crashed, no new connections are accepted

- Read and Write in Worker are blocking

- -> Worker is blocked -> only that Worker's thread is held up; the Listener is not blocked

Asynchronous Worker (Pooling/Limit/Bounded)

    import java.io.IOException;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.net.ServerSocket;
    import java.net.Socket;
    import java.util.concurrent.ArrayBlockingQueue;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.ThreadPoolExecutor;
    import java.util.concurrent.TimeUnit;

    public class Server {

        public static void main(String[] args) throws IOException {
            ...
            new Server().server(port);
        }

        public void server(int port) throws IOException {
            // Create a socket for incoming client requests on the provided port
            final ServerSocket listenerSocket = new ServerSocket(port);
            // Create a pool with a maximum number of threads and a maximum number of queued tasks
            PoolingExecutorService executor = new PoolingExecutorService(maxPoolSize, maxQueueSize);
            while (true) {
                // Wait on the server socket for a new incoming connection.
                final Socket clientSocket = listenerSocket.accept();
                // <- Execution only continues here once a new connection has been accepted!
                // Hand the Worker to the executor, which runs it on a pooled thread
                executor.execute(new Worker(clientSocket));
                ...
                // Go back to accepting new connections ..
            }
            ...
        }
    }

    public class PoolingExecutorService {

        private final ExecutorService executor;

        // Bounded pool (threads) and bounded queue (tasks waiting in the queue)
        public PoolingExecutorService(int maxPoolSize, int queueSize) {
            executor = new ThreadPoolExecutor(
                    Runtime.getRuntime().availableProcessors(), // core pool size; must not exceed maxPoolSize
                    maxPoolSize,
                    120L,
                    TimeUnit.SECONDS,
                    new ArrayBlockingQueue<>(queueSize));
        }

        public void execute(Runnable task) {
            executor.execute(task);
        }
    }

    public class Worker implements Runnable {

        private final Socket socket;

        Worker(Socket socket) {
            this.socket = socket;
        }

        @Override
        public void run() {
            try {
                // Get the in/out streams
                InputStream in = socket.getInputStream();
                OutputStream out = socket.getOutputStream();
                // Read - blocks until one of:
                // 1. data is available
                // 2. the stream is complete (end of stream)
                // 3. an exception is thrown
                ...
                // Process the data
                ...
                // Write - blocks until
                // 1. all data has been written to the socket's system buffer
                // 2. if the client is too slow, the system buffer fills up (TCP window drops to zero) and the write blocks
                ...
            } catch (IOException e) {
                // run() cannot throw checked exceptions, so I/O failures are handled here
                e.printStackTrace();
            }
        }
    }

- Listener and Workers are in different threads;

- Listener is NOT blocked by Worker

- new connections are handled until both the pool size and the queue size are reached

- once both are reached, additional connections are rejected (ThreadPoolExecutor's default policy throws RejectedExecutionException)

- Read and Write in Worker are blocking

- -> Worker is blocked -> only that pooled thread is held up; the Listener is not blocked
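The "bounded" behaviour can be checked in isolation. The sketch below is not part of the server above: `BoundedPoolDemo` and `countRejected` are hypothetical names, and each task simply waits on a latch to stand in for a Worker stuck in a blocking read. It assumes the default rejection policy of `ThreadPoolExecutor`, which throws `RejectedExecutionException` once all threads are busy and the queue is full.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class BoundedPoolDemo {

    // Submits `tasks` blocked tasks to a pool of `maxThreads` threads with a queue of
    // `queueSize` slots, and returns how many submissions were rejected.
    static int countRejected(int maxThreads, int queueSize, int tasks) {
        ThreadPoolExecutor executor = new ThreadPoolExecutor(
                maxThreads, maxThreads, 120L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(queueSize));
        CountDownLatch release = new CountDownLatch(1);
        int rejected = 0;
        try {
            for (int i = 0; i < tasks; i++) {
                try {
                    // Each task stands in for a Worker waiting on slow I/O.
                    executor.execute(() -> {
                        try {
                            release.await();
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                        }
                    });
                } catch (RejectedExecutionException e) {
                    // All threads are busy and the queue is full.
                    rejected++;
                }
            }
        } finally {
            release.countDown();   // let the blocked tasks finish
            executor.shutdown();
        }
        return rejected;
    }

    public static void main(String[] args) {
        // 2 threads + 3 queue slots can hold 5 tasks; the other 5 are rejected.
        System.out.println("rejected: " + countRejected(2, 3, 10)); // prints "rejected: 5"
    }
}
```

A real server would typically catch the rejection in the accept loop and close the client socket, or install a different `RejectedExecutionHandler`; which of these fits is a design choice the original does not specify.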

Asynchronous & NIO

    import java.io.IOException;
    import java.net.InetSocketAddress;
    import java.net.ServerSocket;
    import java.nio.channels.SelectionKey;
    import java.nio.channels.Selector;
    import java.nio.channels.ServerSocketChannel;
    import java.nio.channels.SocketChannel;
    import java.util.Iterator;
    import java.util.Set;

    public class Server {

        public static void main(String[] args) throws IOException {
            ...
            new Server().server(port);
        }

        public void server(int port) throws IOException {
            ServerSocketChannel listenerChannel;
            Selector selector;

            // Create a new channel - accepting incoming connections
            listenerChannel = ServerSocketChannel.open();
            ServerSocket ss = listenerChannel.socket();
            InetSocketAddress address = new InetSocketAddress(port);
            ss.bind(address);
            listenerChannel.configureBlocking(false);

            // Create a Selector
            selector = Selector.open();

            // Register the new channel with the selector
            listenerChannel.register(selector, SelectionKey.OP_ACCEPT);

            while (true) {
                // The selector polls for new events. If there are none, it blocks...
                selector.select();

                // Get the keys (one per ready channel, carrying the type of event)
                Set<SelectionKey> readyKeys = selector.selectedKeys();
                Iterator<SelectionKey> iterator = readyKeys.iterator();
                while (iterator.hasNext()) {
                    SelectionKey key = iterator.next();
                    iterator.remove();

                    // New Connection Handler
                    if (key.isAcceptable()) {
                        // get the channel the event occurred on
                        ServerSocketChannel serverChannel = (ServerSocketChannel) key.channel();
                        // create a new channel for the client
                        SocketChannel clientChannel = serverChannel.accept();
                        clientChannel.configureBlocking(false);
                        // register the new channel with the selector, for both reads and writes
                        clientChannel.register(selector, SelectionKey.OP_READ | SelectionKey.OP_WRITE);
                    } else {
                        worker(key);
                    }
                }
            }
            ...
        }

        private void worker(SelectionKey key) throws IOException {
            // Write Event Handler
            if (key.isWritable()) {
                // get channel
                SocketChannel client = (SocketChannel) key.channel();
                // handle write (buffer is assumed to be a ByteBuffer holding the pending output)
                client.write(buffer);
                ...
            }
            // Read Event Handler
            else if (key.isReadable()) {
                // get channel
                SocketChannel client = (SocketChannel) key.channel();
                // handle read ... (buffer is assumed to be a ByteBuffer to read into)
                client.read(buffer);
                ....
            }
        }
    }

- Listener and Workers are in the same thread (they could be in different ones)

- Listener is still blocked by Worker (if they share the same thread)

- however, I/O waiting time is drastically reduced because the worker is invoked ONLY WHEN I/O IS READY (-> CHANNEL EVENT -> SELECTION KEY).

- Read and Write in Worker are NOT blocking
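The last point can be observed directly. The following is a minimal sketch, separate from the server above, with hypothetical names (`NonBlockingReadDemo`, `readWithoutData`): it connects a client over the loopback interface, sends nothing, and reads from the accepted channel in non-blocking mode. Instead of waiting for data, `read` returns right away.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

class NonBlockingReadDemo {

    // Returns what read() reports on a non-blocking channel when the peer has sent nothing.
    static int readWithoutData() {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            // Port 0 lets the OS pick a free port; loopback only, for the demo.
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress());
                 SocketChannel accepted = server.accept()) {
                accepted.configureBlocking(false);
                // The client is connected but silent. A blocking read would hang here;
                // a non-blocking read returns 0 bytes immediately.
                return accepted.read(ByteBuffer.allocate(64));
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("bytes read: " + readWithoutData()); // prints "bytes read: 0"
    }
}
```

This is why the selector matters: polling `read` in a loop would burn CPU, so the worker is only called once the selector reports that the channel is ready.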

NIO vs. OIO

Old I/O (OIO) works on streams of bytes, while NIO works on blocks of bytes held in buffers. Old I/O is synchronous and blocking, while NIO can be non-blocking (which is often loosely called asynchronous). NIO is a good fit for situations where you have to keep a lot of connections open and do a little work on each of them, as in chat applications. With old I/O you keep thread pools to handle the I/O of many connections, one thread per connection.
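The stream/block distinction can be made concrete with a small sketch. `StreamVsBufferDemo` and its two methods are hypothetical names used only for illustration; both read the same ASCII text, once byte by byte from an `InputStream` and once as a block through a `ByteBuffer`.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

class StreamVsBufferDemo {

    // Old I/O: bytes are pulled off a stream one at a time, strictly in order.
    static String viaStream(String text) {
        byte[] data = text.getBytes(StandardCharsets.US_ASCII);
        StringBuilder sb = new StringBuilder();
        try (InputStream in = new ByteArrayInputStream(data)) {
            int b;
            while ((b = in.read()) != -1) {
                sb.append((char) b);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return sb.toString();
    }

    // NIO: data sits in a buffer that is filled, flipped, and then read as a block.
    static String viaBuffer(String text) {
        byte[] data = text.getBytes(StandardCharsets.US_ASCII);
        ByteBuffer buffer = ByteBuffer.allocate(data.length);
        buffer.put(data);   // fill the buffer
        buffer.flip();      // switch the buffer from writing to reading
        byte[] block = new byte[buffer.remaining()];
        buffer.get(block);  // read everything in one call
        return new String(block, StandardCharsets.US_ASCII);
    }

    public static void main(String[] args) {
        System.out.println(viaStream("hello")); // prints "hello"
        System.out.println(viaBuffer("hello")); // prints "hello"
    }
}
```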

- Channel: an abstraction for communication or the transfer of data (byte arrays)

- ChannelBuffer: a byte array that supports random access or direct data storage.

- ChannelPipeline: collection of handlers to process your events in the way you want.

- ChannelFactory: Resource allocators, like thread pools.

- ChannelHandler: Provides method to handle events on channel.

- ChannelEvents: Events such as connected, exception raised, message received, etc.

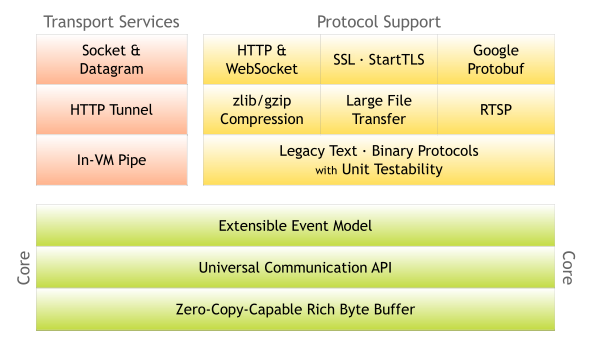

About Netty

Netty is an NIO client-server framework which enables quick and easy development of network applications such as protocol servers and clients. It greatly simplifies and streamlines network programming such as TCP and UDP socket servers.

'Quick and easy' doesn't mean that the resulting application will suffer from maintainability or performance issues. Netty has been designed carefully with the experience gained from implementing many protocols such as FTP, SMTP, HTTP, and various binary and text-based legacy protocols. As a result, Netty has succeeded in finding a way to achieve ease of development, performance, stability, and flexibility without compromise.

The Netty project is an effort to provide an asynchronous event-driven network application framework and tooling for the rapid development of maintainable, high-performance, high-scalability protocol servers and clients.


Netty Tools

Netty contains an impressive set of IO tools. Some of these tools are:

- HTTP Server

- HTTPS Server

- WebSocket Server

- TCP Server

- UDP Server

- In VM Pipe

Netty contains more than this, and Netty keeps growing. Using Netty's IO tools it is easy to start an HTTP server, a WebSocket server, etc. It takes just a few lines of code.

Concepts

Netty's high performance relies on NIO. Netty has several important concepts: channel, pipeline, and inbound/outbound handler.

Channel

A Channel can be thought of as a tunnel that I/O requests go through. Every Channel has its own pipeline. At the API level, the most commonly used channels are

- socket server: io.netty.channel.socket.nio.NioServerSocketChannel

- socket client: io.netty.channel.socket.nio.NioSocketChannel

Pipeline

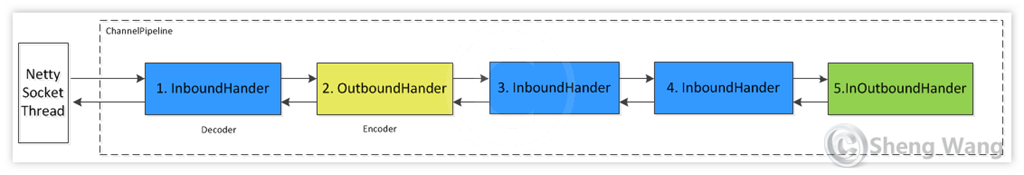

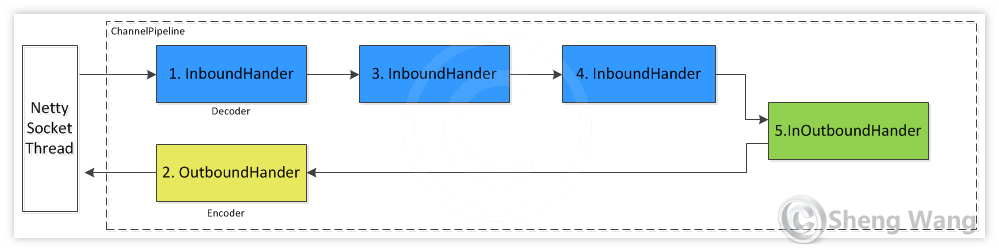

The pipeline is one of the most important notions in Netty. You can treat a pipeline as a bidirectional queue. The queue is filled with inbound and outbound handlers. Every handler works like a servlet filter. As the names say, "Inbound" handlers only process read-in I/O events, "Outbound" handlers only process write-out I/O events, and "InOutbound" handlers process both. For example, a pipeline configured with 5 handlers looks like the one pictured above.

This pipeline is equivalent to the following logic: input I/O events are processed by handlers 1-3-4-5, and output is processed by handlers 5-2.
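The ordering can be illustrated with a small plain-Java model. This is not Netty's API: `PipelineModel`, `Handler`, and `Direction` are hypothetical names, and the sketch assumes that handlers 1, 3 and 4 are inbound, handler 2 is outbound, and handler 5 handles both directions. Inbound events walk the list from head to tail and skip outbound-only handlers; outbound events walk it from tail to head and skip inbound-only handlers.

```java
import java.util.ArrayList;
import java.util.List;

class PipelineModel {

    enum Direction { INBOUND, OUTBOUND, BOTH }

    // Hypothetical stand-in for a handler: just a number and the direction it serves.
    static final class Handler {
        final int id;
        final Direction direction;

        Handler(int id, Direction direction) {
            this.id = id;
            this.direction = direction;
        }
    }

    private final List<Handler> handlers = new ArrayList<>();

    PipelineModel addLast(int id, Direction direction) {
        handlers.add(new Handler(id, direction));
        return this;
    }

    // Inbound events travel from the head of the pipeline to the tail.
    List<Integer> inboundOrder() {
        List<Integer> order = new ArrayList<>();
        for (Handler h : handlers) {
            if (h.direction != Direction.OUTBOUND) {
                order.add(h.id);
            }
        }
        return order;
    }

    // Outbound events travel from the tail of the pipeline back to the head.
    List<Integer> outboundOrder() {
        List<Integer> order = new ArrayList<>();
        for (int i = handlers.size() - 1; i >= 0; i--) {
            Handler h = handlers.get(i);
            if (h.direction != Direction.INBOUND) {
                order.add(h.id);
            }
        }
        return order;
    }

    // The five-handler layout assumed in the lead-in.
    static PipelineModel fiveHandlers() {
        return new PipelineModel()
                .addLast(1, Direction.INBOUND)
                .addLast(2, Direction.OUTBOUND)
                .addLast(3, Direction.INBOUND)
                .addLast(4, Direction.INBOUND)
                .addLast(5, Direction.BOTH);
    }

    public static void main(String[] args) {
        PipelineModel pipeline = fiveHandlers();
        System.out.println("inbound:  " + pipeline.inboundOrder());  // prints "inbound:  [1, 3, 4, 5]"
        System.out.println("outbound: " + pipeline.outboundOrder()); // prints "outbound: [5, 2]"
    }
}
```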

In a real project, the first input handler, handler 1 in the chart above, is usually a decoder. The last output handler, handler 2 in the chart above, is usually an encoder. The last InOutboundHandler usually does the real business: it processes the input data object and sends the reply back. In real usage, the last business logic handler often executes in a different thread from the I/O thread, so that I/O is not blocked by any time-consuming task.

The decoder converts the read-in ByteBuf into the data structure used by the business logic above, e.g. it turns the byte stream into POJOs. If a frame has not been fully received, the decoder holds on to the bytes received so far and waits until the frame is complete, so the next handler never has to deal with a partial frame.
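The "wait for a complete frame" idea can be sketched without Netty. The class below is a plain-Java stand-in, not Netty's decoder API (Netty's own decoders operate on ByteBuf). `LengthPrefixedDecoder` and `feed` are hypothetical names, and the frame layout (one length byte followed by that many ASCII bytes) is an assumption chosen to keep the example short.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class LengthPrefixedDecoder {

    // Bytes received so far that do not yet form a complete frame.
    private ByteBuffer pending = ByteBuffer.allocate(0);

    // Feeds one chunk of received bytes and returns every message that is now complete.
    // Assumed frame layout: 1 length byte, followed by that many ASCII bytes.
    List<String> feed(byte[] chunk) {
        // Join the leftover bytes from earlier chunks with the new chunk.
        ByteBuffer merged = ByteBuffer.allocate(pending.remaining() + chunk.length);
        merged.put(pending).put(chunk);
        merged.flip();

        List<String> messages = new ArrayList<>();
        while (merged.remaining() >= 1) {
            int length = merged.get(merged.position()) & 0xFF; // peek without consuming
            if (merged.remaining() < 1 + length) {
                break; // partial frame: keep the bytes and wait for more
            }
            merged.get(); // consume the length byte
            byte[] body = new byte[length];
            merged.get(body);
            messages.add(new String(body, StandardCharsets.US_ASCII));
        }
        pending = merged; // unread bytes (position..limit) stay buffered
        return messages;
    }

    public static void main(String[] args) {
        LengthPrefixedDecoder decoder = new LengthPrefixedDecoder();
        // The first chunk holds only part of a 5-byte frame, so nothing is emitted yet.
        System.out.println(decoder.feed(new byte[] {5, 'h', 'e'}));                // prints "[]"
        // The second chunk completes that frame and carries a whole second one.
        System.out.println(decoder.feed(new byte[] {'l', 'l', 'o', 2, 'o', 'k'})); // prints "[hello, ok]"
    }
}
```

Downstream code only ever sees whole messages, which is the property the paragraph above describes.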

The encoder converts the internal data structure into a ByteBuf that is finally written out through the socket.

