Simple Neural Network in Python

A neural network is a powerful tool often used in machine learning, and at its core a neural network is a mathematical model. We will use the basics of linear algebra and NumPy to understand the foundations of machine learning with neural networks. This article showcases an application of linear algebra. Python's wide set of libraries is what motivates us to use it for machine learning.

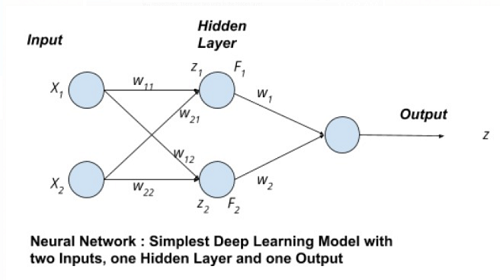

The figure shows a neural network with two input nodes, one hidden layer, and one output node.

The inputs to the neural network are X1 and X2, and the corresponding weights are w11, w12, w21, and w22. There are two units in the hidden layer.

For unit z1 in the hidden layer:

$$F_1 = \tanh(z_1) = \tanh(X_1 w_{11} + X_2 w_{21})$$

For unit z2 in the hidden layer:

$$F_2 = \tanh(z_2) = \tanh(X_1 w_{12} + X_2 w_{22})$$
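As a sanity check of the two hidden-unit formulas, the sketch below evaluates each unit term by term and compares the result with the matrix form $W^\top X$. The numbers are taken from the code listing later in this article. The layout is an assumption: `W[i, j]` holds the weight from input $X_{i+1}$ to hidden unit $z_{j+1}$, so `W[1, 0]` is $w_{21}$. By hand, $z_1 = 0.323 \cdot 0.3 + 0.432 \cdot 0.27 = 0.21354$ and $z_2 = 0.323 \cdot 0.66 + 0.432 \cdot 0.32 = 0.35142$.

```python
import numpy as np

# Inputs X1, X2 and hidden-layer weights (values from the article's listing).
# Assumed layout: W[i, j] is the weight from input X_{i+1} to hidden unit z_{j+1}.
X = np.array([0.323, 0.432])
W = np.array([[0.30, 0.66],
              [0.27, 0.32]])

# Term by term, following the formulas in the text
z1 = X[0] * W[0, 0] + X[1] * W[1, 0]   # X1*w11 + X2*w21
z2 = X[0] * W[0, 1] + X[1] * W[1, 1]   # X1*w12 + X2*w22
F_explicit = np.tanh(np.array([z1, z2]))

# The same computation written as a matrix-vector product W^T X
F_matrix = np.tanh(W.T @ X)

print(F_explicit)
print(np.allclose(F_explicit, F_matrix))  # True
```

Writing the hidden layer as `W.T @ X` is what allows the article's `neural_network` function to compute both hidden units with a single `dot` call.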

The output z is produced by the hyperbolic tangent, which acts as the decision function. Its input is the sum of the products of the hidden-unit outputs and their weights. Mathematically:

$$z = \tanh\left(\sum_i F_i w_i\right)$$
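The output formula can be checked with a short sketch. It assumes the hidden activations $F_1, F_2$ printed by the article's listing and the output weights $w_1 = 0.7$, $w_2 = 0.3$ from the same listing. The sum is computed once as an explicit loop over $F_i w_i$ and once with `np.dot`.

```python
import numpy as np

# Hidden-unit activations F1, F2 (as printed by the article's listing)
F = np.array([0.21035237, 0.33763427])
# Output-layer weights w1, w2 (values from the article's listing)
w = np.array([0.7, 0.3])

# z = tanh(sum_i F_i * w_i): explicit sum vs. dot product
z_sum = np.tanh(sum(F_i * w_i for F_i, w_i in zip(F, w)))
z_dot = np.tanh(np.dot(F, w))

print(z_sum, z_dot)  # both approximately 0.24354287
```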

Here tanh() is the hyperbolic tangent, one of the most commonly used decision-making (activation) functions. To express this network in Python, we define a function neural_network(X, W).

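Note: The hyperbolic tangent accepts any real number as input and maps it into the range (−1, 1). Because all inputs and weights in this example are non-negative, every value the network produces here lies between 0 and 1.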
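To see the actual ranges of tanh, the sketch below applies `np.tanh` to a few arbitrary inputs, including negative values and values well outside [0, 1]. The test values are illustrative choices, not taken from the article.

```python
import numpy as np

# Inputs well outside [0, 1], including negatives; tanh accepts any real number
x = np.array([-10.0, -1.0, 0.0, 0.5, 1.0, 10.0])
y = np.tanh(x)

print(np.round(y, 4))

# For these inputs the outputs stay strictly between -1 and 1
print(bool(np.all(np.abs(y) < 1)))    # True
# Non-negative inputs give non-negative outputs, so with non-negative
# inputs and weights the network's values fall in [0, 1)
print(bool(np.all(y[x >= 0] >= 0)))   # True
```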

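Input parameters: the input vector X, plus a weight array. This is the matrix W for the hidden layer, or the vector w for the output layer.

Return: tanh applied to the weighted sums of the inputs. This is one value per hidden unit when the function is called with the matrix W, or a single value when it is called with the vector w. With the non-negative inputs and weights used here, the final prediction lies between 0 and 1.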
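The sketch below shows the two return shapes described above. It uses a copy of the article's `neural_network` function, condensed into one line. A 2×2 weight matrix yields one value per hidden unit, and a 1-D weight vector yields a single scalar. The negative input `X_neg` is a hypothetical value chosen only to show that the prediction is not confined to [0, 1] once inputs can be negative.

```python
import numpy as np

def neural_network(inputs, weights):
    # Same computation as the article's function: tanh(W^T . X)
    return np.tanh(np.transpose(weights).dot(inputs))

W_hidden = np.array([[0.3, 0.66], [0.27, 0.32]])  # 2x2 matrix -> one value per hidden unit
w_out = np.array([0.7, 0.3])                      # 1-D vector -> a single scalar

X = np.array([0.323, 0.432])
hidden = neural_network(X, W_hidden)
out = neural_network(hidden, w_out)
print(hidden.shape, np.ndim(out))  # (2,) 0

# Hypothetical negative inputs: the prediction drops below 0
X_neg = np.array([-0.9, -0.8])
print(neural_network(neural_network(X_neg, W_hidden), w_out))
```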

Applications:

- Machine Learning
- Computer Vision
- Data Analysis
- Fintech

Python code for the simplest neural network with one hidden layer

```python
# Linear Algebra and Neural Network
# Linear Algebra Learning Sequence

import numpy as np

# Use of np.array() to define an input vector
V = np.array([.323, .432])
print("The Vector A as Inputs : ", V)

# Weight matrix for the hidden layer (2 inputs x 2 hidden units)
VV = np.array([[.3, .66], [.27, .32]])
# Weight vector for the output layer (2 hidden units -> 1 output)
W = np.array([.7, .3])
print("\nThe Vector B as Weights: ", VV)

# Defining a neural network for predicting an output value
def neural_network(inputs, weights):
    # Transpose so each row holds the weights feeding one unit
    wT = np.transpose(weights)
    # Weighted sum of the inputs for each unit
    elpro = wT.dot(inputs)
    # Hyperbolic tangent function for decision making
    out = np.tanh(elpro)
    return out

outputi = neural_network(V, VV)
# Printing the hidden-layer activations
print("Expected Value of Hidden Layer Units: ", outputi)

outputj = neural_network(outputi, W)
# Printing the final output
print("Expected Output with one hidden layer : ", outputj)
```

Output:

```
The Vector A as Inputs :  [0.323 0.432]

The Vector B as Weights:  [[0.3  0.66]
 [0.27 0.32]]
Expected Value of Hidden Layer Units:  [0.21035237 0.33763427]
Expected Output with one hidden layer :  0.24354287168861996
```

Translated from: https://www.includehelp.com/python/one-hidden-layer-simplest-neural-network.aspx
