Keras - Time Series Prediction using LSTM RNN

In this chapter, let us write a simple Long Short Term Memory (LSTM) based RNN to do sequence analysis. A sequence is a set of values where each value corresponds to a particular instance of time. Let us consider a simple example of reading a sentence. Reading and understanding a sentence involves reading the words in the given order, trying to understand each word and its meaning in the given context, and finally deciding whether the sentence expresses a positive or negative sentiment.

Here, the words are considered as values: the first value corresponds to the first word, the second value to the second word, and so on, and the order is strictly maintained. Sequence analysis is frequently used in natural language processing, for example to determine the sentiment of a given text.
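As a minimal sketch of this idea, the snippet below turns a sentence into an ordered list of integer values using a small, hypothetical vocabulary (the `encode` helper and the `oov` value are illustrative assumptions, not part of Keras). Reordering the words or inserting "not" produces a different sequence, which is exactly the information a sequence model can use.

```python
def encode(sentence, vocab, oov=1):
    """Map each word to an integer, preserving word order.

    Words missing from the (hypothetical) vocabulary map to `oov`.
    """
    return [vocab.get(word, oov) for word in sentence.lower().split()]


# Hypothetical toy vocabulary, for illustration only
vocab = {"the": 2, "movie": 3, "was": 4, "not": 5, "good": 6}

print(encode("The movie was good", vocab))      # [2, 3, 4, 6]
print(encode("The movie was not good", vocab))  # [2, 3, 4, 5, 6]
print(encode("good was the movie", vocab))      # [6, 4, 2, 3]
```

The IMDB data used below already comes encoded this way, so no such step is needed in the actual model code.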

Let us create an LSTM model to analyze IMDB movie reviews and classify each review as positive or negative.

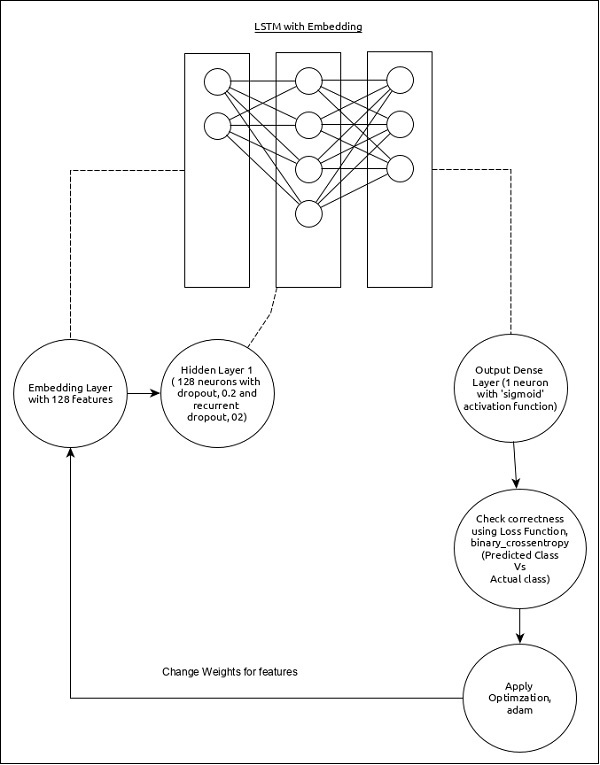

The model for the sequence analysis can be represented as below −

序列分析的模型可以表示如下-

The core features of the model are as follows −

- Input layer: an Embedding layer with 128 features, i.e. each word index is mapped to a 128-dimensional vector.
- Hidden layer: an LSTM layer with 128 units, with both dropout and recurrent dropout set to 0.2.
- Output layer: a Dense layer with 1 unit and the 'sigmoid' activation function.
- Use binary_crossentropy as the loss function.
- Use adam as the optimizer.
- Use accuracy as the metric.
- Use 32 as the batch size.
- Train for 15 epochs.
- Use 80 as the maximum length of each review, in words (longer reviews are truncated and shorter ones are padded).
- Use 2000 as the vocabulary size, i.e. only the 2,000 most frequent words are kept.
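As a quick sanity check on the sizes listed above, the number of trainable weights can be worked out by hand. This sketch uses the standard layer formulas and assumes Keras' default settings (a bias in every layer, one bias vector per LSTM gate); under those assumptions the total should agree with what `model.summary()` reports for the model built in Step 4.

```python
vocab_size, embed_dim, lstm_units = 2000, 128, 128

# Embedding: one 128-d vector per word in the vocabulary
embedding_params = vocab_size * embed_dim

# LSTM: 4 gates (input, forget, candidate, output), each with an input kernel,
# a recurrent kernel and a bias
lstm_params = 4 * (lstm_units * (embed_dim + lstm_units) + lstm_units)

# Dense(1): one weight per LSTM unit plus a single bias
dense_params = lstm_units * 1 + 1

total = embedding_params + lstm_params + dense_params
print(embedding_params, lstm_params, dense_params, total)  # 256000 131584 129 387713
```

Two thirds of the weights sit in the Embedding table, which is why the vocabulary size has such a direct effect on model size.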

Step 1: Import the modules

Let us import the necessary modules.

```python
from keras.preprocessing import sequence
from keras.models import Sequential
from keras.layers import Dense, Embedding
from keras.layers import LSTM
from keras.datasets import imdb
```

Step 2: Load the data

Let us load the IMDB dataset.

```python
(x_train, y_train), (x_test, y_test) = imdb.load_data(num_words=2000)
```

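Here,

- imdb is a dataset provided by Keras. It contains 50,000 IMDB movie reviews, split evenly into a training set and a test set, each labeled as positive (1) or negative (0). Every review is already encoded as a list of word indices.
- num_words limits the vocabulary: only the num_words most frequent words are kept, and any less frequent word is replaced by an out-of-vocabulary index.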
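A minimal sketch of what the num_words cap does to an already-encoded review: word indices are ranked by frequency, so any index at or above num_words is replaced by the out-of-vocabulary marker. The default `oov_char=2` mirrors the default of `imdb.load_data`; the `cap_vocabulary` helper and the example review are made up for illustration.

```python
def cap_vocabulary(review, num_words=2000, oov_char=2):
    """Replace every word index >= num_words with the out-of-vocabulary marker."""
    return [idx if idx < num_words else oov_char for idx in review]


review = [1, 14, 22, 1917, 2500, 11, 7306]  # hypothetical encoded review
print(cap_vocabulary(review))  # [1, 14, 22, 1917, 2, 11, 2]
```

Because every remaining index is below 2000, the same number can be used as the input dimension of the Embedding layer in Step 4.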

Step 3: Process the data

The reviews in the dataset have different lengths, but the model needs inputs of the same length, so let us pad or truncate every review to 80 word indices using the below code −

```python
x_train = sequence.pad_sequences(x_train, maxlen=80)
x_test = sequence.pad_sequences(x_test, maxlen=80)
```

Here,

sequence.pad_sequences converts a list of num_samples sequences into a 2D NumPy array of shape (num_samples, maxlen). Sequences shorter than maxlen are padded with 0 and longer ones are truncated; by default, both padding and truncation happen at the beginning of the sequence. Each of the maxlen positions becomes one timestep for the LSTM layer.
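To see the default behaviour on a small input, here is a NumPy sketch that mimics pad_sequences with its defaults (padding='pre', truncating='pre', value=0). The function `pad_pre` is a hypothetical helper written only for illustration; real code should call `sequence.pad_sequences` itself.

```python
import numpy as np


def pad_pre(sequences, maxlen, value=0):
    """Sketch of pad_sequences' defaults: pad and truncate at the front."""
    out = np.full((len(sequences), maxlen), value, dtype="int32")
    for i, seq in enumerate(sequences):
        kept = seq[-maxlen:]              # 'pre' truncation keeps the last maxlen items
        if len(kept):
            out[i, -len(kept):] = kept    # 'pre' padding leaves the zeros in front
    return out


batch = [[5, 8, 2], [3, 9, 4, 7, 6, 1]]
print(pad_pre(batch, maxlen=4))
# [[0 5 8 2]
#  [4 7 6 1]]
```

Keeping the end of a long review (rather than its beginning) means the LSTM always sees the last words right before it produces its output.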

Step 4: Create the model

Let us create the actual model.

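```python
model = Sequential()
# Map each of the 2000 possible word indices to a 128-dimensional vector
model.add(Embedding(2000, 128))
# 128 LSTM units; 20% dropout on the inputs and on the recurrent connections
model.add(LSTM(128, dropout=0.2, recurrent_dropout=0.2))
# Single output unit: probability that the review is positive
model.add(Dense(1, activation='sigmoid'))
```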

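Here,

We have used an Embedding layer as the input layer, which turns each word index into a 128-dimensional vector, and then added the LSTM layer, which reads those vectors one timestep at a time and outputs its final 128-unit hidden state. Finally, a Dense layer with a single sigmoid unit is used as the output layer; its output, between 0 and 1, can be read as the probability that the review is positive.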
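To make the data flow concrete, here is a NumPy sketch of a forward pass through the same three layers, using the standard LSTM equations with Keras' default activations (sigmoid for the gates, tanh elsewhere). The weights are random rather than trained, dropout is left out because Keras applies it only during training, and names such as `predict` are illustrative, not part of the Keras API.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, embed_dim, units, maxlen = 2000, 128, 128, 80

# Random weights stand in for trained ones: this only shows the data flow.
E = rng.normal(0, 0.1, (vocab_size, embed_dim))  # Embedding table: one row per word index
W = rng.normal(0, 0.1, (embed_dim, 4 * units))   # LSTM input kernel (4 gates side by side)
U = rng.normal(0, 0.1, (units, 4 * units))       # LSTM recurrent kernel
b = np.zeros(4 * units)                          # LSTM bias
w_out = rng.normal(0, 0.1, units)                # Dense(1) weights
b_out = 0.0                                      # Dense(1) bias


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def predict(review):
    """Embedding lookup -> LSTM over all timesteps -> single sigmoid unit."""
    h = np.zeros(units)  # hidden state
    c = np.zeros(units)  # cell state
    for idx in review:                   # one timestep per word, in order
        x = E[idx]                       # Embedding: word index -> 128-d vector
        z = x @ W + h @ U + b
        i, f, g, o = np.split(z, 4)      # input, forget, candidate, output gates
        i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
        c = f * c + i * np.tanh(g)       # forget part of the old memory, add new
        h = o * np.tanh(c)               # expose part of the memory as output
    return float(sigmoid(h @ w_out + b_out))  # Dense(1, activation='sigmoid')


review = rng.integers(0, vocab_size, size=maxlen)  # stand-in for one padded review
p = predict(review)
print(0.0 < p < 1.0)  # True
```

Only the final hidden state `h` reaches the Dense layer, matching the LSTM layer's default of returning its last output rather than the full sequence.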

Step 5: Compile the model

Let us compile the model using the selected loss function, optimizer, and metric.

```python
model.compile(loss='binary_crossentropy',
              optimizer='adam', metrics=['accuracy'])
```
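binary_crossentropy averages -[y·log(p) + (1-y)·log(1-p)] over the samples, where y is the 0/1 label and p the sigmoid output; with this loss, the accuracy metric counts a prediction as correct when p falls on the same side of 0.5 as the label. A NumPy sketch of both with made-up predictions; the small clipping constant follows the same idea as Keras' epsilon but its exact value here is an assumption.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over the batch."""
    y_pred = np.clip(y_pred, eps, 1 - eps)  # avoid log(0)
    losses = -(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))
    return float(np.mean(losses))


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of predictions on the correct side of the threshold."""
    return float(np.mean((y_pred > threshold) == (y_true == 1)))


y_true = np.array([1, 0, 1, 0])
y_pred = np.array([0.9, 0.2, 0.4, 0.1])  # made-up sigmoid outputs
print(round(binary_crossentropy(y_true, y_pred), 4))  # 0.3375
print(binary_accuracy(y_true, y_pred))                # 0.75
```

The third sample (label 1, p = 0.4) is both the only misclassified one and the largest contributor to the loss, which shows how the loss keeps penalizing confident mistakes even when accuracy does not change.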

Step 6: Train the model

Let us train the model using the fit() method.

```python
model.fit(
    x_train, y_train,
    batch_size=32,
    epochs=15,
    validation_data=(x_test, y_test)
)
```
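With batch_size = 32, fit() updates the weights once per mini-batch, so the number of gradient steps follows from simple arithmetic on the 25,000 training reviews (the count shown by the progress bar in the training output). This sketch uses a hypothetical `minibatches` helper to show the split; fit() also shuffles the training data before each epoch by default, which is omitted here.

```python
import math


def minibatches(n, batch_size):
    """Yield (start, end) index pairs covering n samples in order."""
    for start in range(0, n, batch_size):
        yield start, min(start + batch_size, n)


num_train, batch_size, epochs = 25000, 32, 15

steps_per_epoch = math.ceil(num_train / batch_size)        # last batch holds the remainder
last_batch = num_train - (steps_per_epoch - 1) * batch_size
total_updates = steps_per_epoch * epochs

print(steps_per_epoch, last_batch, total_updates)  # 782 8 11730
```

Newer Keras versions typically report progress in steps (782 per epoch here) rather than in samples as the log in this chapter does.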

Executing the application will output the below information −


```
Epoch 1/15
2019-09-24 01:19:01.151247: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2
25000/25000 [==============================] - 101s 4ms/step - loss: 0.4707 - acc: 0.7716 - val_loss: 0.3769 - val_acc: 0.8349
Epoch 2/15
25000/25000 [==============================] - 95s 4ms/step - loss: 0.3058 - acc: 0.8756 - val_loss: 0.3763 - val_acc: 0.8350
Epoch 3/15
25000/25000 [==============================] - 91s 4ms/step - loss: 0.2100 - acc: 0.9178 - val_loss: 0.5065 - val_acc: 0.8110
Epoch 4/15
25000/25000 [==============================] - 90s 4ms/step - loss: 0.1394 - acc: 0.9495 - val_loss: 0.6046 - val_acc: 0.8146
Epoch 5/15
25000/25000 [==============================] - 90s 4ms/step - loss: 0.0973 - acc: 0.9652 - val_loss: 0.5969 - val_acc: 0.8147
Epoch 6/15
25000/25000 [==============================] - 98s 4ms/step - loss: 0.0759 - acc: 0.9730 - val_loss: 0.6368 - val_acc: 0.8208
Epoch 7/15
25000/25000 [==============================] - 95s 4ms/step - loss: 0.0578 - acc: 0.9811 - val_loss: 0.6657 - val_acc: 0.8184
Epoch 8/15
25000/25000 [==============================] - 97s 4ms/step - loss: 0.0448 - acc: 0.9850 - val_loss: 0.7452 - val_acc: 0.8136
Epoch 9/15
25000/25000 [==============================] - 95s 4ms/step - loss: 0.0324 - acc: 0.9894 - val_loss: 0.7616 - val_acc: 0.8162
Epoch 10/15
25000/25000 [==============================] - 100s 4ms/step - loss: 0.0247 - acc: 0.9922 - val_loss: 0.9654 - val_acc: 0.8148
Epoch 11/15
25000/25000 [==============================] - 99s 4ms/step - loss: 0.0169 - acc: 0.9946 - val_loss: 1.0013 - val_acc: 0.8104
Epoch 12/15
25000/25000 [==============================] - 90s 4ms/step - loss: 0.0154 - acc: 0.9948 - val_loss: 1.0316 - val_acc: 0.8100
Epoch 13/15
25000/25000 [==============================] - 89s 4ms/step - loss: 0.0113 - acc: 0.9963 - val_loss: 1.1138 - val_acc: 0.8108
Epoch 14/15
25000/25000 [==============================] - 89s 4ms/step - loss: 0.0106 - acc: 0.9971 - val_loss: 1.0538 - val_acc: 0.8102
Epoch 15/15
25000/25000 [==============================] - 89s 4ms/step - loss: 0.0090 - acc: 0.9972 - val_loss: 1.1453 - val_acc: 0.8129
25000/25000 [==============================] - 10s 390us/step
```
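The log shows a familiar pattern: training loss keeps falling (to 0.0090) while val_loss climbs from about 0.38 in the first two epochs to about 1.15, and val_acc never improves on its epoch-2 value. The sketch below simply finds the epoch with the lowest val_loss, using numbers copied out of the log above; nothing here calls Keras.

```python
# val_loss and val_acc per epoch, copied from the training log above
val_loss = [0.3769, 0.3763, 0.5065, 0.6046, 0.5969, 0.6368, 0.6657, 0.7452,
            0.7616, 0.9654, 1.0013, 1.0316, 1.1138, 1.0538, 1.1453]
val_acc = [0.8349, 0.8350, 0.8110, 0.8146, 0.8147, 0.8208, 0.8184, 0.8136,
           0.8162, 0.8148, 0.8104, 0.8100, 0.8108, 0.8102, 0.8129]

# Index of the epoch with the smallest validation loss
best = min(range(len(val_loss)), key=val_loss.__getitem__)
print(f"lowest val_loss at epoch {best + 1}: {val_loss[best]}, val_acc {val_acc[best]}")
# lowest val_loss at epoch 2: 0.3763, val_acc 0.835
```

Keras' `keras.callbacks.EarlyStopping` callback (for example with `monitor='val_loss'` and `restore_best_weights=True`) can stop training automatically around such a point. Since validation_data here is the test set, choosing an epoch this way also leaks test information; a separate validation split would avoid that.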

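Step 7: Evaluate the model

Let us evaluate the model using the test data. Because accuracy was given as a metric, model.evaluate returns two values: the loss (reported below as the test score) and the accuracy.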

```python
score, acc = model.evaluate(x_test, y_test, batch_size=32)
print('Test score:', score)
print('Test accuracy:', acc)
```

Executing the above code will output the below information −


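```
Test score: 1.145306069601178
Test accuracy: 0.81292
```

Because the test set was also passed as validation_data during training, these values match the val_loss and val_acc reported for the final epoch.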

Source: https://www.tutorialspoint.com/keras/keras_time_series_prediction_using_lstm_rnn.htm

