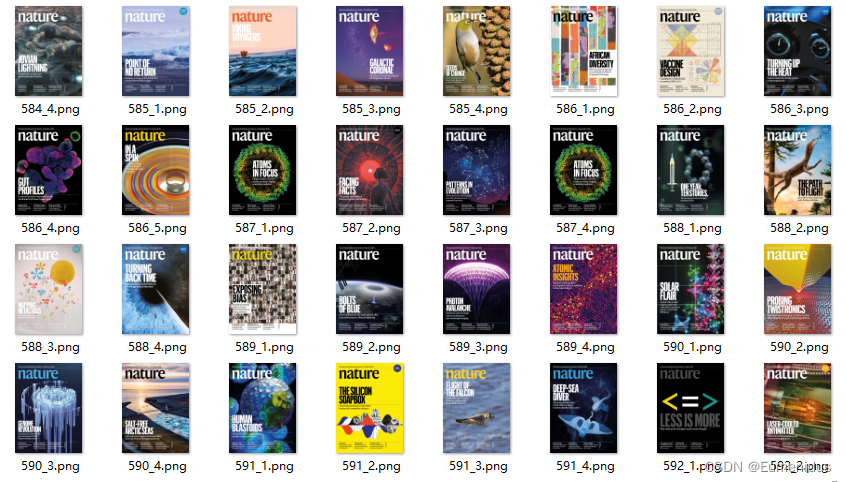

Nature is one of the top journals in science, and its cover design has always been strong, combining scientific and artistic appeal.

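To fetch covers from the Nature family of journals quickly, this post uses Python's requests module to automate the HTTP requests and the BeautifulSoup module to parse the HTML.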

    import os
    from urllib.parse import urljoin

    import requests
    from bs4 import BeautifulSoup

    # Folder where the covers are saved; adjust it for your own machine.
    path = 'C:\\Users\\User\\Desktop\\nature covers\\nature'
    # path = os.getcwd()
    if not os.path.exists(path):
        os.makedirs(path)
        print("Created folder: " + path)

    # Change which volumes to download here.
    # Downloads run from the newest volume backwards, so start_volume
    # must be greater than or equal to end_volume.
    start_volume = 501
    end_volume = 500

    # nature_url = 'https://www.nature.com/ng/volumes/'  # Nature Genetics
    nature_url = 'https://www.nature.com/nature/volumes/'  # Nature

    kv = {
        'User-Agent':
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.132 Safari/537.36'
    }
    error_log = os.path.join(path, "errors.txt")

    while start_volume >= end_volume:
        volume_url = nature_url + str(start_volume)
        try:
            volume_response = requests.get(url=volume_url, headers=kv, timeout=120)
            volume_response.raise_for_status()
        except Exception:
            print(str(start_volume) + " request failed")
            with open(error_log, 'at', encoding='utf-8') as txt:
                txt.write(str(start_volume) + " request failed\n")
            # Move on to the next volume; without the decrement a failing
            # volume would be retried forever.
            start_volume -= 1
            continue
        volume_response.encoding = 'utf-8'
        volume_soup = BeautifulSoup(volume_response.text, 'html.parser')
        # This class string matches the page layout at the time of writing.
        ul_tag = volume_soup.find_all(
            'ul',
            class_='ma0 clean-list grid-auto-fill grid-auto-fill-w220 very-small-column medium-row-gap'
        )
        if not ul_tag:
            print(str(start_volume) + " cover list not found")
            start_volume -= 1
            continue
        img_list = ul_tag[0].find_all("img")
        issue_number = 0
        for img_tag in img_list:
            issue_number += 1
            filename = os.path.join(
                path, str(start_volume) + '_' + str(issue_number) + '.png')
            if os.path.exists(filename):
                print(filename + " already exists")
                continue
            print("Loading...........................")
            # Swap the w200 thumbnail for the w1000 version. urljoin resolves a
            # protocol-relative src ('//...') against the https page URL.
            img_url = urljoin(volume_url,
                              img_tag.get("src").replace("w200", "w1000"))
            try:
                img_response = requests.get(img_url, timeout=240, headers=kv)
                img_response.raise_for_status()
            except Exception:
                print(start_volume, issue_number, 'image request failed')
                with open(error_log, 'at', encoding='utf-8') as txt:
                    txt.write(
                        str(start_volume) + '_' + str(issue_number) +
                        " request failed\n")
                continue
            with open(filename, 'wb') as imgfile:
                imgfile.write(img_response.content)
            print("Downloaded cover: " + str(start_volume) + '_' +
                  str(issue_number))
        start_volume -= 1

Output:

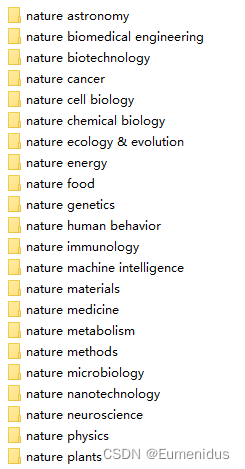
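Network requests like the ones in the script above can fail transiently. One option is a bounded retry, so that a flaky connection neither loses a cover nor loops forever. The following is a minimal sketch, not part of the original script: `fetch_with_retry`, its parameters, and the `flaky` stand-in are hypothetical names, and the fetch function is passed in so the helper can be tried without touching the network.

```python
import time


def fetch_with_retry(fetch, url, attempts=3, delay=1.0, sleep=time.sleep):
    """Call fetch(url) up to `attempts` times and re-raise the last error.

    `fetch` is any callable that takes a URL and either returns a result
    or raises an exception (hypothetical helper, for illustration only).
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except Exception as exc:
            last_error = exc
            if attempt < attempts:
                # Wait a little longer after each failed attempt.
                sleep(delay * attempt)
    raise last_error


# Stand-in for a real request: fails twice, then succeeds.
calls = []


def flaky(url):
    calls.append(url)
    if len(calls) < 3:
        raise ConnectionError("temporary failure")
    return "ok:" + url


result = fetch_with_retry(flaky, "https://example.org/cover",
                          sleep=lambda seconds: None)
print(result, len(calls))
```

With requests, the callable could be something like `lambda u: requests.get(u, headers=kv, timeout=120)`; whether three attempts is appropriate depends on how unreliable the connection is.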

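The code above downloads the covers of Nature and Nature Genetics automatically. The websites of these two journals are structured slightly differently from those of the other sub-journals, which can be scraped with the following code: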

    import os
    from urllib.parse import urljoin

    import requests
    from bs4 import BeautifulSoup, Tag

    # Other Nature journals and their URL slugs. This dict is only a lookup
    # table: copy the entries you want into nature_journal below.
    other_journals = {
        'nature biomedical engineering': 'natbiomedeng',
        'nature methods': 'nmeth',
        'nature astronomy': 'natastron',
        'nature medicine': 'nm',
        'nature protocols': 'nprot',
        'nature microbiology': 'nmicrobiol',
        'nature cell biology': 'ncb',
        'nature nanotechnology': 'nnano',
        'nature immunology': 'ni',
        'nature energy': 'nenergy',
        'nature materials': 'nmat',
        'nature cancer': 'natcancer',
        'nature neuroscience': 'neuro',
        'nature machine intelligence': 'natmachintell',
        'nature metabolism': 'natmetab',
        'nature food': 'natfood',
        'nature ecology & evolution': "natecolevol",
        "nature structural & molecular biology": "nsmb",
        "nature physics": "nphys",
        "nature human behaviour": "nathumbehav",
        "nature chemical biology": "nchembio"
    }

    nature_journal = {
        # Put the journals to download here.
        'nature plants': 'nplants',
        'nature biotechnology': 'nbt'
    }

    folder_Name = "nature covers"

    # Assumed year of the newest volume listed on the /volumes page; volumes
    # are taken to be one per year, newest first. Adjust to the current year.
    latest_year = 2022

    kv = {
        'User-Agent':
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.132 Safari/537.36'
    }


    def makefile(path):
        # Create the folder, or raise AssertionError if it already exists.
        folder = os.path.exists(path)
        if not folder:
            os.makedirs(path)
            print("Make file -- " + path + " -- successfully!")
        else:
            raise AssertionError


    ################################################################
    def getCover(url, journal, year, filepath, startyear=2022, endyear=2022):
        # Only years with endyear <= year <= startyear are downloaded.
        # endyear is the smaller value because volumes are walked from
        # newest to oldest.
        if not (endyear <= year <= startyear):
            return
        page_url = "https://www.nature.com" + url
        try:
            issue_response = requests.get(page_url, timeout=120, headers=kv)
        except Exception:
            print(journal + " " + str(year) + " Error")
            return
        issue_response.encoding = 'utf-8'
        if 'Page not found' in issue_response.text:
            print(journal + " Page not found")
            return
        issue_soup = BeautifulSoup(issue_response.text, 'html.parser')
        cover_image = issue_soup.find_all("img", class_='image-constraint pt10')
        for image in cover_image:
            image_url = image.get("src")
            print("Start loading img.............................")
            image_url = image_url.replace("w200", "w1000")
            # The URL is expected to end in the issue number;
            # single digits are zero-padded.
            if image_url[-2] == '/':
                month = "0" + image_url[-1]
            else:
                month = image_url[-2:]
            image_name = nature_journal[journal] + "_" + str(
                year) + "_" + month + ".png"
            image_path = os.path.join(filepath, journal, image_name)
            if os.path.exists(image_path):
                print(image_url + " already exists")
                continue
            print(image_url)
            try:
                # urljoin resolves a protocol-relative src against the page URL.
                image_response = requests.get(urljoin(page_url, image_url),
                                              timeout=240,
                                              headers=kv)
                image_response.raise_for_status()
            except Exception:
                print("Failed to fetch image: " + image_name)
                continue
            with open(image_path, 'wb') as downloaded_img:
                downloaded_img.write(image_response.content)


    def main():
        path = os.path.join(os.getcwd(), folder_Name)
        try:
            makefile(path)
        except AssertionError:
            print("Folder -- " + folder_Name + " -- already exists")
        for journal in nature_journal:
            journal_path = os.path.join(path, journal)
            try:
                makefile(journal_path)
            except AssertionError:
                print("Folder -- " + journal_path + " -- already exists")
            try:
                volume_response = requests.get("https://www.nature.com/" +
                                               nature_journal[journal] +
                                               "/volumes",
                                               timeout=120,
                                               headers=kv)
            except Exception:
                print(journal + " request failed")
                continue
            volume_response.encoding = 'utf-8'
            volume_soup = BeautifulSoup(volume_response.text, 'html.parser')
            # This class string matches the page layout at the time of writing.
            volume_list = volume_soup.find_all(
                'ul',
                class_='clean-list ma0 clean-list grid-auto-fill medium-row-gap background-white'
            )
            if not volume_list:
                print(journal + " volume list not found")
                continue
            number_of_volume = 0
            for volume_child in volume_list[0].children:
                # Skip whitespace text nodes between the list items.
                if not isinstance(volume_child, Tag):
                    continue
                issue_url = volume_child.find_all("a")[0].get("href")
                year = latest_year - number_of_volume
                print(issue_url)
                print(year)
                getCover(issue_url,
                         journal,
                         year=year,
                         filepath=path,
                         startyear=2022,
                         endyear=2022)
                number_of_volume += 1


    if __name__ == "__main__":
        main()
        print("Finish Everything!")
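`getCover` derives the file name from the last one or two characters of the image URL, zero-padding single digits. The same naming step can be written as a small standalone helper. This is a minimal sketch, assuming (as the script does) that the image URL ends in the issue number; `issue_suffix`, `cover_filename`, and the example URLs are hypothetical and not real nature.com addresses.

```python
def issue_suffix(image_url):
    """Return the trailing path segment of the URL as a two-digit string."""
    last = image_url.rstrip("/").rsplit("/", 1)[-1]
    if not last.isdigit():
        # The assumption that the URL ends in a number does not hold.
        raise ValueError("URL does not end in an issue number: " + image_url)
    return last.zfill(2)


def cover_filename(slug, year, image_url):
    """Build a name of the form <slug>_<year>_<issue>.png."""
    return f"{slug}_{year}_{issue_suffix(image_url)}.png"


# Made-up URLs that only mimic the "ends in the issue number" shape.
name_single = cover_filename("nplants", 2022, "//media.example.org/w1000/nplants/8/3")
name_double = cover_filename("nplants", 2022, "//media.example.org/w1000/nplants/8/12")
print(name_single)
print(name_double)
```

Splitting on the last slash rather than indexing fixed character positions also makes the failure explicit: a URL with an unexpected ending raises `ValueError` instead of silently producing an odd file name.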
