Maximum likelihood estimation

Let … be independent samples from an $\mathcal{N}(\mu,\Sigma)$ distribution, where it is known that …, where … and … are given positive definite matrices. Which of the following is true?
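For reference, here is the standard Gaussian log-likelihood that questions of this kind turn on. The sample notation $y_1,\ldots,y_N \in \mathbf{R}^n$ is assumed here, not taken from the prompt. With $S = \Sigma^{-1}$ and $\nu = \Sigma^{-1}\mu$,

$$
\begin{aligned}
l(\mu,\Sigma) &= -\frac{Nn}{2}\log(2\pi) - \frac{N}{2}\log\det\Sigma - \frac{1}{2}\sum_{k=1}^{N}(y_k-\mu)^T\Sigma^{-1}(y_k-\mu) \\
&= -\frac{Nn}{2}\log(2\pi) + \frac{N}{2}\log\det S - \frac{1}{2}\sum_{k=1}^{N}\left(y_k^T S y_k - 2y_k^T\nu + \nu^T S^{-1}\nu\right).
\end{aligned}
$$

The second form is jointly concave in $(S,\nu)$ for $S \succ 0$: $\log\det S$ is concave, $y_k^T S y_k$ and $y_k^T\nu$ are linear, and $\nu^T S^{-1}\nu$ is jointly convex. So prior constraints that are convex in $S$ keep ML estimation convex. For example, for positive definite $A$ and $B$, the bound $A \preceq \Sigma \preceq B$ is equivalent to $B^{-1} \preceq S \preceq A^{-1}$.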

Optimal detector design

Consider a binary detection system with the following confusion matrix:

If both classes are equiprobable, what is the probability of error?

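The probability of error is the probability that hypothesis 1 is true but hypothesis 2 is decided, plus the probability that hypothesis 2 is true but hypothesis 1 is decided. Since each hypothesis occurs with probability 0.5, the probability of error is 0.25 × 0.5 + 0.15 × 0.5 = 0.2.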
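The calculation can be written as a small Python sketch. It assumes the convention `D[i][j]` = prob(decide hypothesis i | hypothesis j true), so each column sums to 1. The confusion matrix is hypothetical, with off-diagonal entries 0.25 and 0.15; the quiz's actual matrix is not reproduced here.

```python
def error_probability(D, priors):
    """Probability of error for a detector with confusion matrix D.

    Assumed convention: D[i][j] = prob(decide hypothesis i | hypothesis j true),
    so 1 - D[j][j] is the probability of an error given hypothesis j.
    """
    return sum(priors[j] * (1.0 - D[j][j]) for j in range(len(priors)))


# Hypothetical confusion matrix (columns sum to 1).
D = [[0.75, 0.15],
     [0.25, 0.85]]
p_err = error_probability(D, [0.5, 0.5])
print(f"P(error) = {p_err:.3f}")
```

With equiprobable classes, this is half the sum of the two off-diagonal entries.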

In binary hypothesis testing the likelihood ratio threshold can be interpreted as the cost of misclassification.
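Consider the usual Bayes-risk setup. The assumptions here are known priors $\pi_1, \pi_2$ and zero cost for correct decisions. Under that setup, the minimum expected-cost detector decides $H_1$ when $p_1(x)/p_2(x) \ge (\pi_2 C_{12})/(\pi_1 C_{21})$. Here $C_{12}$ is the cost of deciding $H_1$ when $H_2$ is true, and $C_{21}$ is the cost of deciding $H_2$ when $H_1$ is true. With equal priors, the threshold is exactly the ratio of the two misclassification costs. The Python sketch below uses hypothetical Gaussian densities and costs:

```python
import math


def bayes_lr_threshold(prior1, prior2, cost12, cost21):
    """Likelihood-ratio threshold for the minimum expected-cost detector.

    Decide H1 when p1(x) / p2(x) >= threshold. cost12 is the cost of deciding H1
    when H2 is true, cost21 the cost of deciding H2 when H1 is true; correct
    decisions are assumed to cost nothing.
    """
    return (prior2 * cost12) / (prior1 * cost21)


def gaussian_pdf(x, mean, std):
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def decide(x, threshold):
    """Hypothetical example: under H1, x ~ N(1, 1); under H2, x ~ N(-1, 1)."""
    ratio = gaussian_pdf(x, 1.0, 1.0) / gaussian_pdf(x, -1.0, 1.0)
    return 1 if ratio >= threshold else 2


# Equal priors, but wrongly deciding H1 is assumed to cost 4x as much as wrongly deciding H2.
lam = bayes_lr_threshold(0.5, 0.5, cost12=4.0, cost21=1.0)
print(lam, decide(0.5, lam), decide(1.0, lam))
```

For these two densities the ratio is $e^{2x}$, so raising the threshold from 1 to 4 moves the decision boundary from $x = 0$ to $x = \tfrac{1}{2}\ln 4$.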

Experiment design

If you solve the relaxed version of the optimal experiment design problem, and then simply round to get the (integer) number of times to carry out each experiment, you get the globally optimal (integer) experiment design.
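A small hypothetical counterexample (our construction, using D-optimal design) shows that naive rounding need not even respect the experiment budget, so the claim cannot hold in general. Take three unit test vectors at 0°, 120° and 240° in the plane, with a budget of m = 2 experiments. By symmetry, the relaxed D-optimal weights are uniform. Each $m\lambda_i = 2/3$ then rounds to 1, which gives 3 experiments.

```python
import itertools

import numpy as np

# Hypothetical example: three unit test vectors in R^2 at 0, 120 and 240 degrees.
angles = np.deg2rad([0.0, 120.0, 240.0])
V = np.stack([np.cos(angles), np.sin(angles)], axis=1)  # row i is v_i
m = 2  # total number of experiments (hypothetical)


def det_information(counts):
    """det of the information matrix sum_i counts[i] * v_i v_i^T (D-optimality)."""
    M = sum(c * np.outer(v, v) for c, v in zip(counts, V))
    return np.linalg.det(M)


# By the rotational symmetry of V, the relaxed D-optimal weights are uniform.
lam = np.full(3, 1.0 / 3.0)
rounded = np.round(m * lam).astype(int)  # each m*lam_i = 2/3 rounds to 1
print("rounded counts:", rounded, "total:", rounded.sum(), "budget m:", m)

# Exhaustive search over all integer designs that use exactly m experiments.
designs = [c for c in itertools.product(range(m + 1), repeat=3) if sum(c) == m]
best_det = max(det_information(c) for c in designs)
print("best integer design det:", round(best_det, 4))
```

The rounded design is infeasible. The true integer optimum, found here by brute force, uses any two distinct test vectors.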

Maximum likelihood estimation of an increasing nonnegative signal

We wish to estimate a scalar signal x(t), for t=1,2,…,N, which is known to be nonnegative and monotonically nondecreasing:

$$0 \le x(1) \le x(2) \le \cdots \le x(N).$$

This occurs in many practical problems. For example, x(t) might be a measure of wear or deterioration, that can only get worse, or stay the same, as time t increases. We are also given that x(t)=0 for t≤0.

We are given a noise-corrupted moving average of x, given by

$$y(t) = \sum_{\tau=1}^{k} h(\tau)\,x(t-\tau) + v(t), \quad t = 2,\ldots,N+1,$$

where v(t) are independent N(0,1) random variables.

Formulate the problem of finding the maximum likelihood estimate of x, given y, taking into account the prior assumption that x is nonnegative and monotonically nondecreasing, as a convex optimization problem. Now solve a specific instance of the problem, with problem data (i.e., N, k, h, and y) given in the file ml_estim_incr_signal_data_norng.m. (This file contains the true signal xtrue, which of course you cannot use in creating your estimate.) Find the maximum likelihood estimate $\hat{x}_{\mathrm{ml}}$, and plot it, along with the true signal. Also find and plot the maximum likelihood estimate $\hat{x}_{\mathrm{ml,free}}$, which does not take into account the signal nonnegativity and monotonicity.
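A sketch of the formulation follows. It assumes the measurement model $y(t) = \sum_{\tau=1}^{k} h(\tau)x(t-\tau) + v(t)$ for $t = 2,\ldots,N+1$, with $x(t) = 0$ for $t \le 0$. Because the $v(t)$ are i.i.d. $\mathcal{N}(0,1)$, the log-likelihood is

$$
\log p(y \mid x) = -\frac{N}{2}\log(2\pi) - \frac{1}{2}\sum_{t=2}^{N+1}\Big(y(t) - \sum_{\tau=1}^{k} h(\tau)\,x(t-\tau)\Big)^2,
$$

so the constrained ML estimate solves the convex quadratic program

$$
\begin{array}{ll}
\text{minimize} & \displaystyle\sum_{t=2}^{N+1}\Big(y(t) - \sum_{\tau=1}^{k} h(\tau)\,x(t-\tau)\Big)^2 \\
\text{subject to} & 0 \le x(1), \quad x(t) \le x(t+1), \quad t = 1,\ldots,N-1.
\end{array}
$$

Dropping the constraints gives an ordinary least-squares problem, whose solution is $\hat{x}_{\mathrm{ml,free}}$.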

Hint. The function conv (convolution) is overloaded to work with CVX.
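CVX is a Matlab package, so here is a Python/NumPy sketch instead. It solves the same least-squares problem by a different method: projected gradient after substituting x = cumsum(d) with d ≥ 0. It runs on synthetic data, since ml_estim_incr_signal_data_norng.m is not reproduced here. It assumes y stores y(2), …, y(N+1), so the model is `np.convolve(h, x)[:N]`. The helper names `conv_matrix` and `ml_monotone` are hypothetical.

```python
import numpy as np


def conv_matrix(h, N):
    """H with H @ x == np.convolve(h, x)[:N]: row i is
    sum_{tau=1}^{k} h(tau) x(t - tau) for t = i + 2, with x(t) = 0 for t <= 0."""
    H = np.zeros((N, N))
    for j in range(N):
        e = np.zeros(N)
        e[j] = 1.0
        H[:, j] = np.convolve(h, e)[:N]
    return H


def ml_monotone(H, y, iters=20000):
    """Projected gradient for: minimize ||H x - y||^2 s.t. 0 <= x(1) <= ... <= x(N).

    Substituting x = cumsum(d) turns the constraints into d >= 0, whose
    projection is a clip at zero."""
    N = H.shape[1]
    A = H @ np.tril(np.ones((N, N)))        # x = L d, L lower-triangular ones
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1 / Lipschitz const. of grad of 0.5*||A d - y||^2
    d = np.zeros(N)                          # feasible start: x = 0
    for _ in range(iters):
        d = np.maximum(d - step * (A.T @ (A @ d - y)), 0.0)
    return np.cumsum(d)


# Synthetic data (hypothetical): nondecreasing true signal, length-3 kernel, N(0,1) noise.
rng = np.random.default_rng(0)
N = 40
h = np.array([0.5, 0.3, 0.2])
x_true = np.cumsum(0.3 * np.maximum(rng.standard_normal(N), 0.0))
H = conv_matrix(h, N)
y = H @ x_true + rng.standard_normal(N)

x_ml = ml_monotone(H, y)
x_free = np.linalg.lstsq(H, y, rcond=None)[0]  # ignores nonnegativity and monotonicity
print("residual (constrained):", np.linalg.norm(H @ x_ml - y))
print("residual (free):       ", np.linalg.norm(H @ x_free - y))
```

Plotting `x_ml` and `x_free` against `x_true` (for example with matplotlib) gives the comparison the exercise asks for. Projected gradient converges more slowly than an interior-point QP solver, but it keeps the sketch free of dependencies beyond NumPy.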

Which of the following statements most accurately describes the plot?

Worst-case probability of loss

Two investments are made, with random returns $R_1$ and $R_2$. The total return for the two investments is $R_1 + R_2$, and the probability of a loss (including breaking even, i.e., $R_1 + R_2 = 0$) is $p_{\text{loss}} = \mathbf{prob}(R_1 + R_2 \le 0)$. The goal is to find the worst-case (i.e., maximum possible) value of $p_{\text{loss}}$, consistent with the following information. Both $R_1$ and $R_2$ have Gaussian marginal distributions, with known means $\mu_1$ and $\mu_2$ and known standard deviations $\sigma_1$ and $\sigma_2$. In addition, it is known that $R_1$ and $R_2$ are correlated with correlation coefficient $\rho$, i.e.,

$$\mathbf{E}\,(R_1 - \mu_1)(R_2 - \mu_2) = \rho\,\sigma_1\sigma_2.$$

Your job is to find the worst-case $p_{\text{loss}}$ over any joint distribution of $R_1$ and $R_2$ consistent with the given marginals and correlation coefficient.

We will consider the specific case with data

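We can compare the results to the case when $R_1$ and $R_2$ are jointly Gaussian. In this case we have

$$R_1 + R_2 \sim \mathcal{N}\big(\mu_1 + \mu_2,\; \sigma_1^2 + \sigma_2^2 + 2\rho\sigma_1\sigma_2\big), \qquad p_{\text{loss}} = \Phi\!\left(\frac{-(\mu_1 + \mu_2)}{\sqrt{\sigma_1^2 + \sigma_2^2 + 2\rho\sigma_1\sigma_2}}\right),$$

where $\Phi$ is the standard normal CDF, which for the data given above gives $p_{\text{loss}} = \ldots$. Your job is to see how much larger $p_{\text{loss}}$ can possibly be.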
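The jointly Gaussian baseline is a one-line formula. The Python sketch below evaluates it with `math.erf`. The parameter values in the call are illustrative assumptions, not data taken from this text.

```python
import math


def gaussian_ploss(mu1, mu2, sigma1, sigma2, rho):
    """prob(R1 + R2 <= 0) when (R1, R2) is jointly Gaussian.

    R1 + R2 ~ N(mu1 + mu2, sigma1^2 + sigma2^2 + 2 rho sigma1 sigma2), so the loss
    probability is Phi(-(mu1 + mu2) / s), with Phi the standard normal CDF."""
    s = math.sqrt(sigma1**2 + sigma2**2 + 2.0 * rho * sigma1 * sigma2)
    z = -(mu1 + mu2) / s
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Illustrative parameter values (assumed).
p = gaussian_ploss(mu1=8.0, mu2=20.0, sigma1=6.0, sigma2=17.5, rho=-0.25)
print(f"jointly Gaussian p_loss = {p:.3f}")
```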

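This is an infinite-dimensional optimization problem, since you must maximize $p_{\text{loss}}$ over an infinite-dimensional set of joint distributions. To (approximately) solve it, we discretize the values that $R_1$ and $R_2$ can take on, to $n = 100$ values $r_1, \ldots, r_n$, uniformly spaced from $r_1 = \ldots$ to $r_n = \ldots$. We use the discretized marginals $p^{(1)}$ and $p^{(2)}$ for $R_1$ and $R_2$, given by

$$p^{(k)}_i = \mathbf{prob}(R_k = r_i) = \frac{\exp\big(-(r_i - \mu_k)^2/(2\sigma_k^2)\big)}{\sum_{j=1}^{n}\exp\big(-(r_j - \mu_k)^2/(2\sigma_k^2)\big)},$$

for $k = 1, 2$, $i = 1, \ldots, n$.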
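The discretization step can be sketched in NumPy. The sketch builds the grid, the two normalized marginals, and the set of grid pairs with $r_i + r_j \le 0$ that make up the loss event. The grid endpoints and the Gaussian parameters are illustrative assumptions.

```python
import numpy as np


def discretized_marginal(r, mu, sigma):
    """p_i proportional to exp(-(r_i - mu)^2 / (2 sigma^2)), normalized to sum to 1."""
    w = np.exp(-((r - mu) ** 2) / (2.0 * sigma**2))
    return w / w.sum()


n = 100
r = np.linspace(-30.0, 70.0, n)                  # grid endpoints assumed for illustration
p1 = discretized_marginal(r, mu=8.0, sigma=6.0)   # illustrative parameters
p2 = discretized_marginal(r, mu=20.0, sigma=17.5)
loss_mask = (r[:, None] + r[None, :]) <= 0.0      # True where r_i + r_j <= 0
print(p1.sum(), p2.sum(), int(loss_mask.sum()))
```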

Formulate the (discretized) problem as a convex optimization problem, and solve it. What is the maximum value of $p_{\text{loss}}$?
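One way to pose the discretized problem is sketched below; this is our formulation, not a quoted solution. Let $P_{ij} = \mathbf{prob}(R_1 = r_i, R_2 = r_j)$. Then the objective and all constraints are linear in $P$, so the problem is a linear program in $n^2$ variables:

$$
\begin{array}{ll}
\text{maximize} & \displaystyle\sum_{i,j:\; r_i + r_j \le 0} P_{ij} \\
\text{subject to} & P_{ij} \ge 0, \quad i,j = 1,\ldots,n, \\
& \displaystyle\sum_{j=1}^{n} P_{ij} = p^{(1)}_i, \quad i = 1,\ldots,n, \\
& \displaystyle\sum_{i=1}^{n} P_{ij} = p^{(2)}_j, \quad j = 1,\ldots,n, \\
& \displaystyle\sum_{i,j} (r_i - \mu_1)(r_j - \mu_2)\,P_{ij} = \rho\,\sigma_1\sigma_2 .
\end{array}
$$

The row-sum and column-sum constraints fix the marginals, and the last constraint fixes the covariance. Since the marginals are fixed, this also fixes the correlation coefficient. The row and column sums already force $\sum_{ij} P_{ij} = 1$.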


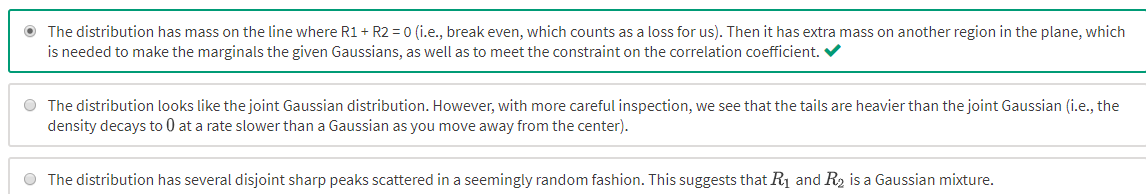

Plot the joint distribution that yields the maximum value of $p_{\text{loss}}$ using the Matlab commands mesh and contour. Which of the following statements most accurately describes the plot of the (worst-case) joint distribution?
