
1. Paper overview

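This paper proposes MobileNetV2, a classification network suited to deployment on mobile devices. It improves on MobileNetV1 and, overall, still relies on MobileNetV1's depthwise separable convolutions to reduce the network's parameter count and inference time. The paper title names the two main changes: Inverted Residuals and Linear Bottlenecks. Inverted Residuals means adding the shortcut structure from ResNet, but with a difference: in a ResNet bottleneck the middle feature maps have few channels and the feature maps at the two ends have many, giving an hourglass shape, whereas in the inverted residual proposed here the feature maps at the two ends have few channels and the middle ones have many, giving a willow-leaf (spindle) shape. Linear Bottlenecks means dropping the ReLU6 activation that would otherwise follow the final, low-channel feature map of the bottleneck, that is, removing the non-linearity at that point.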

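Note: in Table 2, t is the expansion factor of the 1×1 convolution that raises the channel dimension (the authors use 6 in most experiments), n is the number of times the unit is repeated, and s is the stride.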
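To make the meaning of t, n and s concrete, here is a minimal sketch that expands one table row into per-block settings. The function name `expand_stage`, the example row, and the 24 input channels are illustrative assumptions; `c` stands for the row's output channel count, a Table 2 column the note above does not mention. The rule that only the first block of a stage uses stride s follows the paper's description of the table, and the shortcut condition (stride 1 and equal input/output channels) follows common implementations rather than anything stated in these notes.

```python
def expand_stage(in_channels, t, c, n, s):
    """Expand one (t, c, n, s) row into a list of per-block settings."""
    blocks = []
    for i in range(n):
        # Only the first block of a stage uses stride s; the rest use stride 1.
        stride = s if i == 0 else 1
        blocks.append({
            "in": in_channels,
            "hidden": in_channels * t,  # width after the 1x1 expansion
            "out": c,
            "stride": stride,
            # Assumed rule: a shortcut needs matching shapes on both sides.
            "shortcut": stride == 1 and in_channels == c,
        })
        in_channels = c  # later blocks in the stage take c channels as input
    return blocks


# Hypothetical row: t=6, c=32, n=3, s=2, fed by a 24-channel feature map.
for block in expand_stage(in_channels=24, t=6, c=32, n=3, s=2):
    print(block)
```

In this example only the second and third blocks get a shortcut, because the first block changes both the resolution and the channel count.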

Our main contribution is a novel layer module: the inverted residual with linear bottleneck. This module takes as an input a low-dimensional compressed representation which is first expanded to high dimension and filtered with a lightweight depthwise convolution. Features are subsequently projected back to a low-dimensional representation with a linear convolution. The official implementation is available as part of TensorFlow-Slim model library in [4].

Furthermore, this convolutional module is particularly suitable for mobile designs, because it allows to significantly reduce the memory footprint needed during inference by never fully materializing large intermediate tensors.

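The network is fast because it never applies a standard convolution to a large, deep feature map. The bottleneck does expand the channel dimension first, but the expanded tensor is then processed with a depthwise convolution, and the projection back down uses a 1×1 convolution. Both have few parameters and need few multiply-adds.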
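The point can be checked with a small count of multiply-adds. The sketch below sums the three stages of one bottleneck block, which matches the paper's expression h·w·d′·t·(d′ + k² + d″) for a block with d′ input channels, d″ output channels, expansion factor t and kernel size k. It then compares that with a standard k×k convolution applied directly to the expanded tensor. Stride 1 is assumed throughout, and the function names and the example sizes are illustrative assumptions.

```python
def bottleneck_madds(h, w, d_in, d_out, t=6, k=3):
    """Multiply-adds of one inverted-residual block at stride 1."""
    hidden = t * d_in
    expand = h * w * d_in * hidden      # 1x1 convolution that expands channels
    depthwise = h * w * hidden * k * k  # k x k depthwise convolution
    project = h * w * hidden * d_out    # 1x1 linear projection
    return expand + depthwise + project


def standard_conv_on_expanded_madds(h, w, d_in, t=6, k=3):
    """Multiply-adds of a standard k x k conv on the expanded tensor, for contrast."""
    hidden = t * d_in
    return h * w * hidden * hidden * k * k


# Hypothetical layer: 28x28 feature map, 32 channels in and out.
block = bottleneck_madds(28, 28, 32, 32)
dense = standard_conv_on_expanded_madds(28, 28, 32)
print(f"bottleneck block:              {block:,}")
print(f"standard conv on expanded map: {dense:,}")
```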

Our network design is based on MobileNetV1 [27]. It retains its simplicity and does not require any special operators while significantly improves its accuracy, achieving state of the art on multiple image classification and detection tasks for mobile applications.

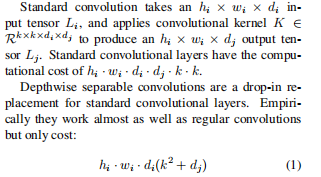

2. Computational cost of standard convolution vs. depthwise separable convolution
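As a rough illustration of this comparison, the sketch below counts multiply-adds with the cost expressions used in the MobileNetV2 paper: a standard k×k convolution on an h×w×d_i input producing d_j channels costs h·w·d_i·d_j·k·k, while a depthwise separable one costs h·w·d_i·(k² + d_j). The function names and the example layer size are illustrative assumptions.

```python
def standard_conv_madds(h, w, d_in, d_out, k=3):
    """Multiply-adds of a standard k x k convolution (stride 1, same padding)."""
    return h * w * d_in * d_out * k * k


def depthwise_separable_madds(h, w, d_in, d_out, k=3):
    """Multiply-adds of a k x k depthwise conv followed by a 1x1 pointwise conv."""
    depthwise = h * w * d_in * k * k  # one k x k filter per input channel
    pointwise = h * w * d_in * d_out  # 1x1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 56x56 feature map, 64 input channels, 128 output channels.
h, w, d_in, d_out = 56, 56, 64, 128
std = standard_conv_madds(h, w, d_in, d_out)
sep = depthwise_separable_madds(h, w, d_in, d_out)
print(f"standard:  {std:,} multiply-adds")
print(f"separable: {sep:,} multiply-adds")
print(f"ratio:     {std / sep:.2f}x")
```

The ratio simplifies to k²·d_j / (k² + d_j), so for 3×3 kernels it approaches 9 as the number of output channels grows, which is why the saving is usually quoted as roughly 8 to 9 times.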

3. Removing some of the non-linearities

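The authors spend a large part of the paper arguing the following point: once depthwise convolutions are used and a feature map has only a few channels, the convolution that produces it should not be followed by a non-linear activation. Simply dropping the ReLU there improves performance.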

To summarize, we have highlighted two properties that are indicative of the requirement that the manifold of interest should lie in a low-dimensional subspace of the higher-dimensional activation space:

1. If the manifold of interest remains non-zero volume after ReLU transformation, it corresponds to a linear transformation.

2. ReLU is capable of preserving complete information about the input manifold, but only if the input manifold lies in a low-dimensional subspace of the input space.

These two insights provide us with an empirical hint for optimizing existing neural architectures: assuming the manifold of interest is low-dimensional we can capture this by inserting linear bottleneck layers into the convolutional blocks. Experimental evidence suggests that using linear layers is crucial as it prevents nonlinearities from destroying too much information. In Section 6, we show empirically that using non-linear layers in bottlenecks indeed hurts the performance by several percent, further validating our hypothesis. We note that similar reports where non-linearity was helped were reported in [29] where non-linearity was removed from the input of the traditional residual block and that lead to improved performance on CIFAR dataset.
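The second property can be illustrated with a toy experiment. This is a construction for these notes, not the authors' experiment. A 2-D point x is embedded into n dimensions with a random matrix T and passed through ReLU. The point can be recovered from ReLU(Tx) exactly when at least two linearly independent rows of T stay active (positive), because the output then still contains an invertible linear view of x. The sketch estimates how often that holds for different n; the function name, sample count and seed are arbitrary assumptions.

```python
import random


def recoverable_fraction(n, samples=2000, seed=0):
    """Estimate how often a random 2-D point survives ReLU(T x), with T of shape n x 2."""
    rng = random.Random(seed)
    # Random embedding matrix T, stored as n rows of 2-D vectors.
    T = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
    ok = 0
    for _ in range(samples):
        x = (rng.gauss(0, 1), rng.gauss(0, 1))
        # Rows whose pre-activation is positive pass through ReLU unchanged.
        active = [row for row in T if row[0] * x[0] + row[1] * x[1] > 0]
        # x is recoverable if some pair of active rows is linearly independent.
        if any(abs(a[0] * b[1] - a[1] * b[0]) > 1e-12
               for i, a in enumerate(active) for b in active[i + 1:]):
            ok += 1
    return ok / samples


for n in (2, 3, 5, 15, 30):
    print(f"n={n:2d}: recoverable fraction = {recoverable_fraction(n):.3f}")
```

With n = 2 at most half of the input directions can keep both channels active, so much of the input is lost. As n grows, almost every point keeps enough active channels. This is the intuition behind applying ReLU only in the wide, expanded part of the block and leaving the narrow bottleneck linear.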

4. The difference between a residual block and an inverted residual

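A residual block first reduces the channel dimension and then expands it; an inverted residual first expands the channel dimension and then reduces it.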
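The contrast is easy to see by listing the layers of each block with their output widths. The sketch below is only a schematic: batch normalization is omitted, the reduction factor of 4 is the usual ResNet bottleneck choice, the expansion factor of 6 is the value the authors use in most experiments, and the function names and channel counts are illustrative assumptions.

```python
def resnet_bottleneck(channels, reduction=4):
    """Layers of a classic residual bottleneck: wide -> narrow -> wide."""
    mid = channels // reduction
    return [
        ("1x1 conv", mid, "ReLU"),
        ("3x3 conv", mid, "ReLU"),
        ("1x1 conv", channels, "ReLU after adding the shortcut"),
    ]


def inverted_residual(channels, expansion=6):
    """Layers of an inverted residual: narrow -> wide -> narrow, linear output."""
    mid = channels * expansion
    return [
        ("1x1 conv", mid, "ReLU6"),
        ("3x3 depthwise conv", mid, "ReLU6"),
        ("1x1 conv", channels, "linear (no activation)"),
    ]


for name, in_ch, layers in (
    ("residual bottleneck", 256, resnet_bottleneck(256)),
    ("inverted residual", 24, inverted_residual(24)),
):
    # Channel widths seen along the block, starting from its input.
    widths = [in_ch] + [out_ch for _, out_ch, _ in layers]
    print(name, widths)
    for op, out_ch, act in layers:
        print(f"  {op:20s} -> {out_ch:4d} channels, {act}")
```

The printed widths are [256, 64, 64, 256] for the residual bottleneck and [24, 144, 144, 24] for the inverted residual, so in the first case the shortcut connects the wide ends and in the second it connects the narrow ones.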

5. Ablation experiments

6. SSDLite

SSDLite: In this paper, we introduce a mobile friendly variant of regular SSD. We replace all the regular convolutions with separable convolutions (depthwise followed by 1 × 1 projection) in SSD prediction layers. This design is in line with the overall design of MobileNets and is seen to be much more computationally efficient. We call this modified version SSDLite. Compared to regular SSD, SSDLite dramatically reduces both parameter count and computational cost as shown in Table 5.

