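I, II

References:

Notes on "PyTorch深度学习快速入门教程(绝对通俗易懂!)【小土堆】" (a PyTorch deep learning quick-start tutorial by 小土堆), from 电信保温杯's blog on CSDN

"P2. Python编辑器的选择、安装及配置(PyCharm、Jupyter安装)【PyTorch教程】" (choosing, installing, and configuring a Python editor: PyCharm and Jupyter), on bilibili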

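III. Using TensorBoard

1. Introduction to TensorBoard

TensorBoard is a visualization tool. It can display the network graph, how scalar metrics change over time, the distribution of tensor values, and more. It is especially useful during training: when we try different settings (for example the weights W, the biases B, or the number of convolutional and fully connected layers), TensorBoard lets us compare the runs visually and choose among them.

2. Displaying charts and images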

    from torch.utils.tensorboard import SummaryWriter
    import numpy as np
    from PIL import Image

    writer = SummaryWriter("logs")
    image_path = "data/train/ants_image/6240329_72c01e663e.jpg"
    img_PIL = Image.open(image_path)
    img_array = np.array(img_PIL)
    print(type(img_array))
    print(img_array.shape)

    # Show the image; the NumPy array is laid out as (height, width, channels)
    writer.add_image("train", img_array, 1, dataformats='HWC')

    # y = 2x: show the curve as a scalar chart
    for i in range(100):
        writer.add_scalar("y=2x", 2 * i, i)

    writer.close()

In the PyCharm terminal, run:

    tensorboard --logdir=logs --port=6007

Steps:

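- Create a SummaryWriter, passing the output directory for the logs

- Set the path of the image file

- Open the image file with the Image class

- Convert the Image object to a NumPy array

- Add the NumPy image to the SummaryWriter

- Plot the scalar curve

- Close the SummaryWriter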

四、Transforms的使用

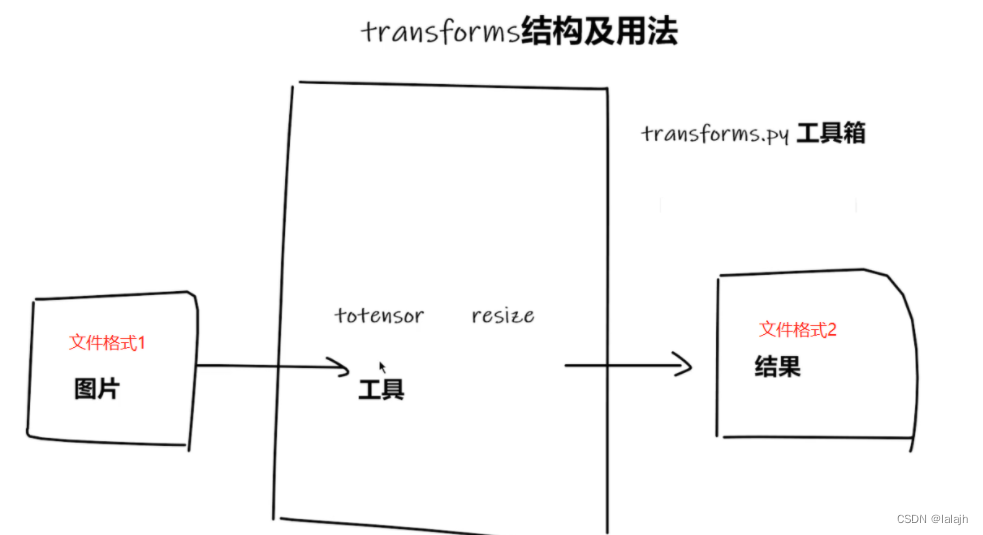

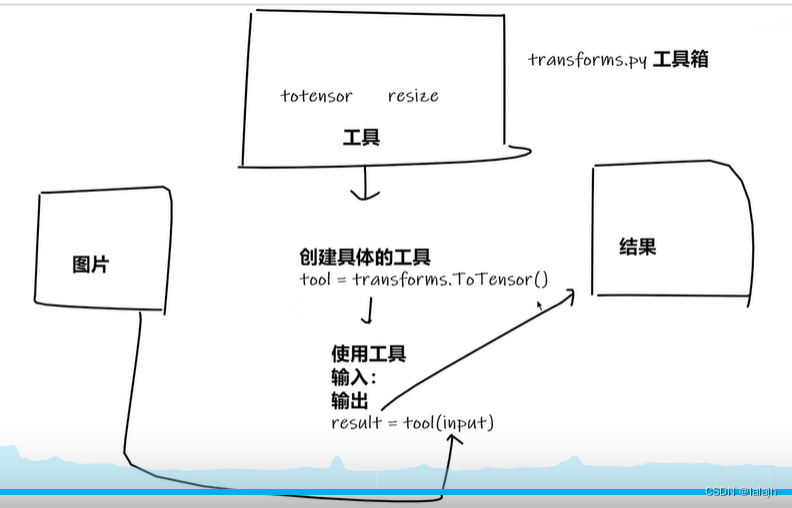

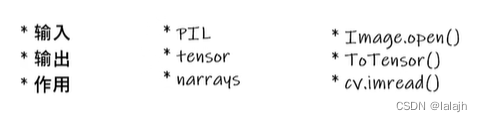

transforms主要用于图片的变换,是一个torchvision下的一个工具箱,用于格式转化,视觉处理工具,不用于文本。

具体点是这样

例如

    from PIL import Image
    from torchvision import transforms

    img_path = "dataset/train/ants/0013035.jpg"
    img = Image.open(img_path)

    tensor_trans = transforms.ToTensor()  # create the transform object
    tensor_img = tensor_trans(img)        # call it to convert the PIL image to a tensor
    print(tensor_img)

Displaying the ToTensor image in TensorBoard:

    from PIL import Image
    from torch.utils.tensorboard import SummaryWriter
    from torchvision import transforms

    img_path = "dataset/train/ants/0013035.jpg"
    img = Image.open(img_path)

    writer = SummaryWriter("logs")
    tensor_trans = transforms.ToTensor()
    tensor_img = tensor_trans(img)
    writer.add_image("tensor_img", tensor_img)  # tensors are (channels, height, width), the default layout
    writer.close()
    print(tensor_img)

transforms contains several tool classes:

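Compose: chains several transforms together.

ToTensor: as the name suggests, converts a PIL Image or NumPy array to a tensor.

ToPILImage: converts a tensor or NumPy array to a PIL Image.

Normalize: normalizes a tensor image with a per-channel mean and standard deviation (this is normalization, not regularization).

Resize: resizes the image to a given size (it does not crop).

RandomCrop: crops the image at a random location.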
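To make ToTensor and Normalize concrete, here is a minimal pure-Python sketch of what they compute, assuming an 8-bit RGB image stored as nested lists in height x width x channel order. The helper names `to_tensor_like` and `normalize_like` are hypothetical; they only mimic the documented behavior (scale to [0, 1], reorder to channel-first, then subtract the mean and divide by the standard deviation per channel) and are not torchvision's implementation.

```python
def to_tensor_like(img_hwc):
    """Mimic transforms.ToTensor: HWC values in [0, 255] -> CHW floats in [0.0, 1.0]."""
    height, width, channels = len(img_hwc), len(img_hwc[0]), len(img_hwc[0][0])
    return [[[img_hwc[h][w][c] / 255.0 for w in range(width)]
             for h in range(height)]
            for c in range(channels)]


def normalize_like(img_chw, mean, std):
    """Mimic transforms.Normalize: output[c] = (input[c] - mean[c]) / std[c]."""
    return [[[(v - mean[c]) / std[c] for v in row] for row in channel]
            for c, channel in enumerate(img_chw)]


# A hypothetical 1x2 RGB image: one red pixel and one white pixel.
image = [[[255, 0, 0], [255, 255, 255]]]
tensor = to_tensor_like(image)                        # layout: 3 x 1 x 2
normalized = normalize_like(tensor, [0.5] * 3, [0.5] * 3)
print(tensor)
print(normalized)
```

With a mean and standard deviation of 0.5 per channel, values in [0, 1] are mapped to [-1, 1].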

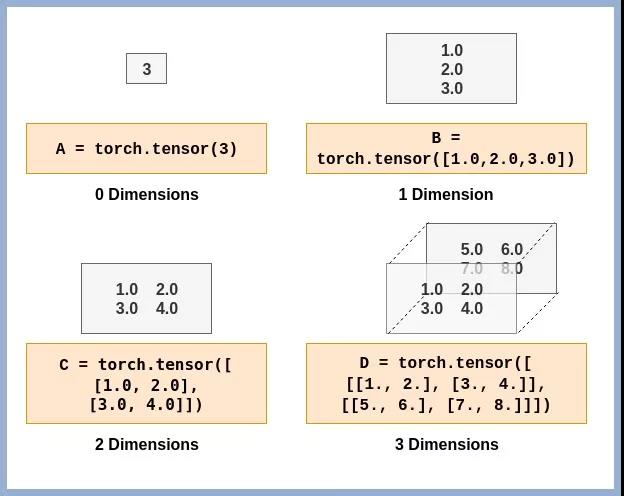

1.Tensor的操作

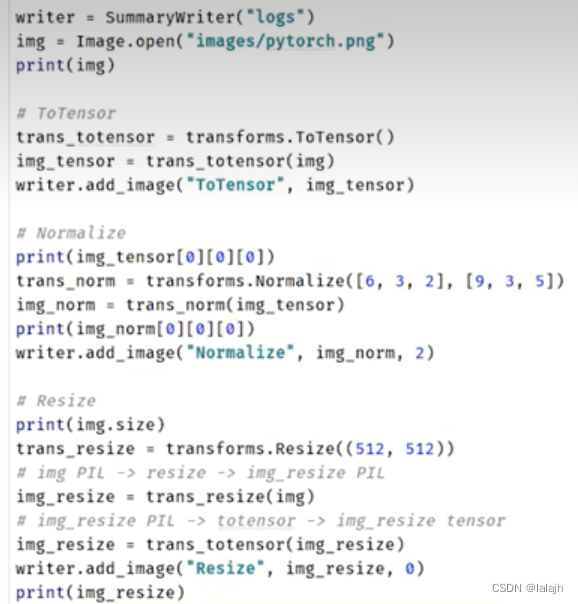

五、常见的Transforms

1._ _call()_ _ 方法

    class Person:
        def __call__(self, name):
            print("__call__" + " " + name)

        def hello(self, name):
            print("hello" + " " + name)

    person = Person()
    person("zhangsan")     # calling the object directly invokes __call__; no dot or method name is needed
    person.hello("hello")

Output:

    __call__ zhangsan
    hello hello

2. Using ToTensor, Normalize, Resize, Compose, RandomCrop, and other tool classes
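The transform classes are all used the same way: build the object once, then call it like a function, which is exactly the `__call__` mechanism shown above. The sketch below illustrates how a Compose-style class can chain such callables. `Scale` and `Shift` are hypothetical stand-ins for real transforms, and this `Compose` is a simplified illustration, not torchvision's code.

```python
class Compose:
    """Chain callables, in the spirit of transforms.Compose."""

    def __init__(self, transforms):
        self.transforms = transforms

    def __call__(self, value):
        # Feed the output of each transform into the next one.
        for transform in self.transforms:
            value = transform(value)
        return value


class Scale:
    """Hypothetical transform: multiply every number by a factor."""

    def __init__(self, factor):
        self.factor = factor

    def __call__(self, values):
        return [v * self.factor for v in values]


class Shift:
    """Hypothetical transform: add an offset to every number."""

    def __init__(self, offset):
        self.offset = offset

    def __call__(self, values):
        return [v + self.offset for v in values]


pipeline = Compose([Scale(2), Shift(-1)])
result = pipeline([1, 2, 3])
print(result)
```

The order matters: the output type of each step must be an accepted input type of the next step, just as ToTensor must come before Normalize in a real pipeline.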

六、torchvision中的数据集使用

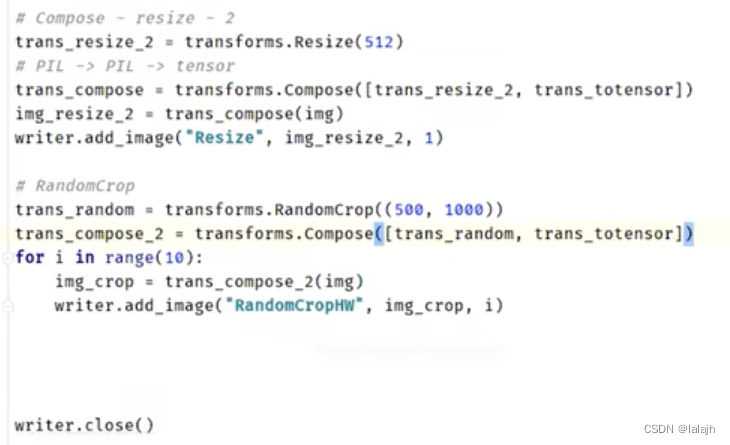

七、DataLoader的使用

Dataset如果是一叠扑克牌的话,就是数据集,定义了其在哪里,量有多少等。DataLoader是一个加载器,就是一只手,参数就是告诉这只手怎么抓取扑克牌,如取几次,一次取多少张等。

DataLoader常用参数:

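- dataset (Dataset): which dataset to load from

- batch_size (int, optional): how many samples (cards) to draw at a time

- shuffle (bool, optional): whether to reshuffle; if True, the data is reshuffled at every epoch

- num_workers (int, optional): number of worker subprocesses used for loading (processes, not threads). On Windows a value greater than 0 can fail with the error below; if that happens, set it to 0

BrokenPipeError: [Errno 32] Broken pipe

- drop_last (bool, optional): when the dataset size is not divisible by the batch size, True drops the last incomplete batch; False keeps it, so the last batch is smaller. (Default: False)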
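The effect of batch_size and drop_last on the number of batches is simple arithmetic. The sketch below assumes a dataset of 10000 samples (the size of the CIFAR10 test split, which is not stated in these notes) and uses a hypothetical helper `batches_per_epoch`.

```python
import math


def batches_per_epoch(dataset_size, batch_size, drop_last):
    """Number of batches a DataLoader yields in one pass over the dataset."""
    if drop_last:
        return dataset_size // batch_size
    return math.ceil(dataset_size / batch_size)


dataset_size = 10000  # assumption: size of the CIFAR10 test split
batch_size = 64
kept = batches_per_epoch(dataset_size, batch_size, drop_last=False)
dropped = batches_per_epoch(dataset_size, batch_size, drop_last=True)
last_batch = dataset_size % batch_size
print(kept, dropped, last_batch)
```

Under these assumptions drop_last=False gives one extra batch that holds only the leftover samples, which matters for any layer that expects a fixed batch size.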

How the DataLoader loads batches:

Code: dataloader.py

    import torchvision
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    # Prepare the test dataset
    test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor())
    test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=True)

    # The first image in the test dataset and its target
    img, target = test_data[0]
    print(img.shape)
    print(target)

    writer = SummaryWriter("dataloader")
    for epoch in range(2):
        step = 0
        for data in test_loader:
            imgs, targets = data
            # print(imgs.shape)
            # print(targets)
            writer.add_images("Epoch: {}".format(epoch), imgs, step)
            step = step + 1

    writer.close()

With shuffle=True, the code goes through the deck twice (two epochs), and the cards are reshuffled for the second pass. Each draw takes 64 cards.

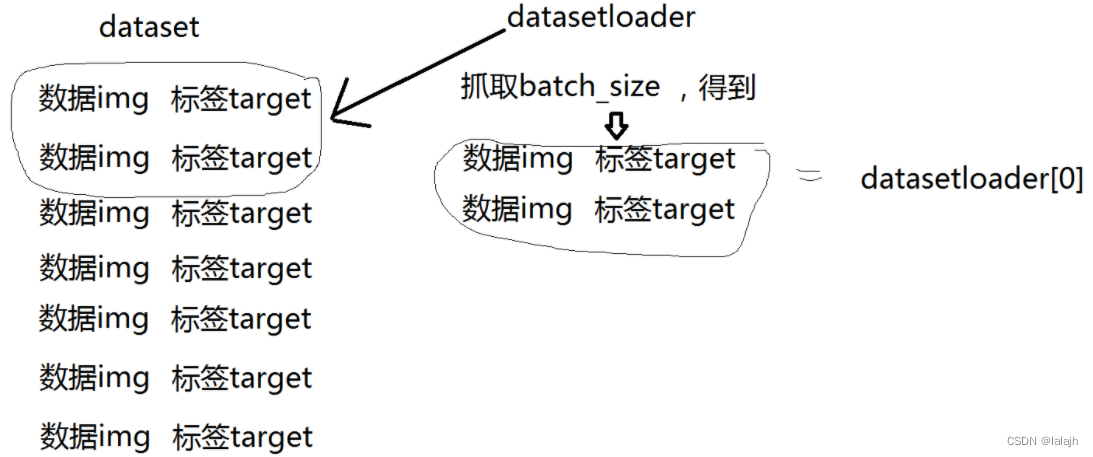

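VIII. The basic skeleton of a neural network: torch.nn

torch.nn provides the skeleton for a neural network; we fill it in with layers to build the network.

1. Containers

Code: nn_module.py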

    import torch
    from torch import nn


    class Tudui(nn.Module):
        def __init__(self):
            super().__init__()

        def forward(self, input):
            # The forward pass: here it simply adds 1 to the input
            output = input + 1
            return output


    tudui = Tudui()
    x = torch.tensor(1.0)
    output = tudui(x)  # calling the module runs forward()
    print(output)

2. Convolution operation
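As a rough picture of what the convolution in this subsection computes, here is a minimal pure-Python sketch of the sliding-window sum (strictly a cross-correlation, which is what `F.conv2d` performs) for a single channel and a square kernel. The helper name `conv2d_single` is hypothetical, and the input and kernel are the same 5x5 and 3x3 matrices used in nn_conv.py.

```python
def conv2d_single(image, kernel, stride=1, padding=0):
    """Cross-correlate one 2-D image with one square 2-D kernel (no bias)."""
    if padding:
        width = len(image[0]) + 2 * padding
        zero_rows = [[0] * width for _ in range(padding)]
        padded_rows = [[0] * padding + row + [0] * padding for row in image]
        image = zero_rows + padded_rows + zero_rows
    k = len(kernel)
    out_h = (len(image) - k) // stride + 1
    out_w = (len(image[0]) - k) // stride + 1
    # Each output value is the sum of element-wise products over one window.
    return [[sum(image[i * stride + a][j * stride + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(out_w)]
            for i in range(out_h)]


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

out_stride1 = conv2d_single(image, kernel, stride=1)
out_stride2 = conv2d_single(image, kernel, stride=2)
out_padded = conv2d_single(image, kernel, stride=1, padding=1)
print(out_stride1)
print(out_stride2)
print(len(out_padded), len(out_padded[0]))
```

A larger stride skips window positions and shrinks the output, while padding=1 surrounds the image with zeros so that the output keeps the 5x5 input size.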

代码-nn_conv.py

import torch

import torch.nn.functional as F

input = torch.tensor([[1, 2, 0, 3, 1],

[0, 1, 2, 3, 1],

[1, 2, 1, 0, 0],

[5, 2, 3, 1, 1],

[2, 1, 0, 1, 1]]) #输入图像

kernel = torch.tensor([[1, 2, 1],

[0, 1, 0],

[2, 1, 0]]) #内核

input = torch.reshape(input, (1, 1, 5, 5))

kernel = torch.reshape(kernel, (1, 1, 3, 3))

print(input.shape)

print(kernel.shape)

output = F.conv2d(input, kernel, stride=1) #输出图像

print(output)

output2 = F.conv2d(input, kernel, stride=2)

print(output2)

output3 = F.conv2d(input, kernel, stride=1, padding=1)

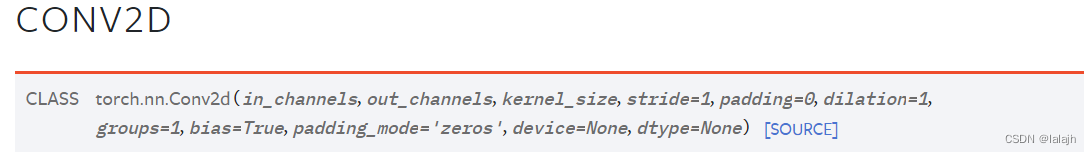

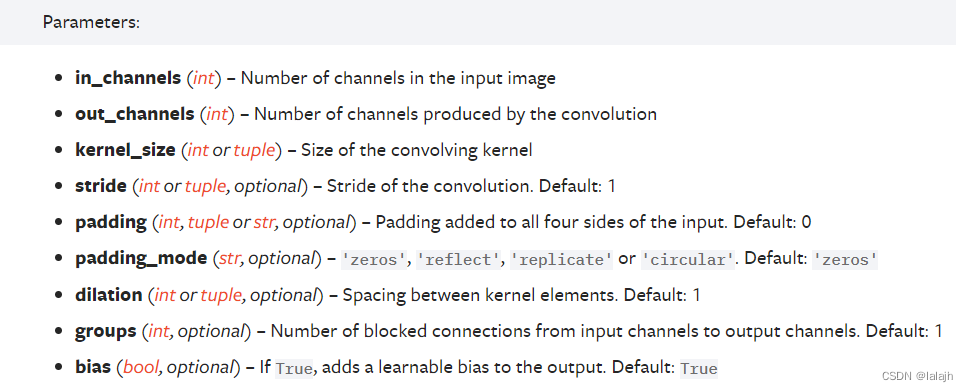

print(output3)3.卷积层

Code: nn_conv2.py

    import torch
    import torchvision
    from torch import nn
    from torch.nn import Conv2d
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    dataset = torchvision.datasets.CIFAR10("../data", train=False, transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=64)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

        def forward(self, x):
            x = self.conv1(x)
            return x


    tudui = Tudui()

    writer = SummaryWriter("../logs")
    step = 0
    for data in dataloader:
        imgs, targets = data
        output = tudui(imgs)
        print(imgs.shape)
        print(output.shape)
        # torch.Size([64, 3, 32, 32])
        writer.add_images("input", imgs, step)
        # torch.Size([64, 6, 30, 30]) -> [xxx, 3, 30, 30]
        # add_images can only display 3 channels, so fold the extra channels into the batch dimension
        output = torch.reshape(output, (-1, 3, 30, 30))
        writer.add_images("output", output, step)
        step = step + 1

    writer.close()

4. Max pooling
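A minimal pure-Python sketch of max pooling on a single 2-D matrix, assuming the stride equals the kernel size (the default of `MaxPool2d`). The helper name `max_pool2d_single` is hypothetical, and the 5x5 matrix is the same one used in the convolution example. It shows what `ceil_mode` changes: whether windows that only partly fit at the border are kept.

```python
import math


def max_pool2d_single(image, kernel_size, ceil_mode=False):
    """Max-pool one 2-D image; the stride equals kernel_size."""
    rounding = math.ceil if ceil_mode else math.floor
    height, width = len(image), len(image[0])
    out_h = rounding((height - kernel_size) / kernel_size) + 1
    out_w = rounding((width - kernel_size) / kernel_size) + 1
    pooled = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Slicing clips windows that run past the border (the ceil_mode=True case).
            window = [value
                      for r in image[i * kernel_size:(i + 1) * kernel_size]
                      for value in r[j * kernel_size:(j + 1) * kernel_size]]
            row.append(max(window))
        pooled.append(row)
    return pooled


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]

pooled_floor = max_pool2d_single(image, kernel_size=3, ceil_mode=False)
pooled_ceil = max_pool2d_single(image, kernel_size=3, ceil_mode=True)
print(pooled_floor)
print(pooled_ceil)
```

With ceil_mode=False only the single complete 3x3 window is used; with ceil_mode=True the partial windows on the right and bottom edges also contribute an output value.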

Purpose: keep the main features of the input while reducing the amount of data, so that training is faster. Code: nn_maxpool.py

    import torchvision
    from torch import nn
    from torch.nn import MaxPool2d
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    dataset = torchvision.datasets.CIFAR10("../data", train=False, download=True,
                                           transform=torchvision.transforms.ToTensor())
    dataloader = DataLoader(dataset, batch_size=64)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            # With ceil_mode=True, partial windows at the border are kept as well
            self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=False)

        def forward(self, input):
            output = self.maxpool1(input)
            return output


    tudui = Tudui()

    writer = SummaryWriter("../logs_maxpool")
    step = 0
    for data in dataloader:
        imgs, targets = data
        writer.add_images("input", imgs, step)
        output = tudui(imgs)
        writer.add_images("output", output, step)
        step = step + 1

    writer.close()

5. Non-linear activation
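The two activations used in this subsection are simple element-wise functions. A minimal pure-Python sketch, with hypothetical input values and helper names that only mirror the mathematical definitions of ReLU and Sigmoid:

```python
import math


def relu(values):
    """ReLU: negative inputs become 0, non-negative inputs pass through."""
    return [max(0.0, v) for v in values]


def sigmoid(values):
    """Sigmoid: squash every input into the open interval (0, 1)."""
    return [1.0 / (1.0 + math.exp(-v)) for v in values]


samples = [-3.0, -0.5, 0.0, 1.0]  # hypothetical inputs
relu_out = relu(samples)
sigmoid_out = sigmoid(samples)
print(relu_out)
print(sigmoid_out)
```

Images converted with ToTensor already lie in [0, 1], so ReLU would leave them unchanged; that is a plausible reason why the forward method in nn_relu.py applies Sigmoid, whose effect is visible in TensorBoard.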

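Purpose: a non-linear transformation introduces non-linearity into the network, which lets the model fit more complex functions and generalize better. Code: nn_relu.py

    import torchvision
    from torch import nn
    from torch.nn import ReLU, Sigmoid
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    dataset = torchvision.datasets.CIFAR10("../data", train=False, download=True,
                                           transform=torchvision.transforms.ToTensor())
    dataloader = DataLoader(dataset, batch_size=64)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.relu1 = ReLU()
            self.sigmoid1 = Sigmoid()

        def forward(self, input):
            # Only the sigmoid is applied here; relu1 is defined but unused
            output = self.sigmoid1(input)
            return output


    tudui = Tudui()

    writer = SummaryWriter("../logs_relu")
    step = 0
    for data in dataloader:
        imgs, targets = data
        writer.add_images("input", imgs, global_step=step)
        output = tudui(imgs)
        writer.add_images("output", output, step)
        step += 1

    writer.close()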

6. Linear layers and other layers
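The linear layer in this subsection takes 196608 input features. The short sketch below shows where that number comes from when a whole batch is flattened into one vector, and why an incomplete last batch would not fit. The dataset size of 10000 is an assumption (the CIFAR10 test split), not something stated in these notes.

```python
from math import prod

batch_shape = (64, 3, 32, 32)            # one full CIFAR10 batch, as printed by the code
in_features = prod(batch_shape)          # values left after flattening the whole batch
dataset_size = 10000                     # assumption: size of the CIFAR10 test split
last_batch = dataset_size % batch_shape[0]
last_features = last_batch * prod(batch_shape[1:])
print(in_features, last_batch, last_features)
```

Because the leftover batch flattens to fewer values than the layer expects, a loader for this example needs drop_last=True (or the images must be flattened per sample instead of per batch).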

    import torch
    import torchvision
    from torch import nn
    from torch.nn import Linear
    from torch.utils.data import DataLoader

    dataset = torchvision.datasets.CIFAR10("../data", train=False, transform=torchvision.transforms.ToTensor(),
                                           download=True)
    # drop_last=True: an incomplete last batch would not flatten to 196608 values
    dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.linear1 = Linear(196608, 10)  # number of input features, number of output features

        def forward(self, input):
            output = self.linear1(input)
            return output


    tudui = Tudui()

    for data in dataloader:
        img, targets = data
        print(img.shape)
        # Similar to torch.flatten(img), which returns a 1-D tensor of 196608 values
        output = torch.reshape(img, (1, 1, 1, -1))
        print(output.shape)
        output = tudui(output)
        print(output.shape)

Part of the output:

    torch.Size([64, 3, 32, 32])
    torch.Size([1, 1, 1, 196608])
    torch.Size([1, 1, 1, 10])

7. Building a small model and using Sequential

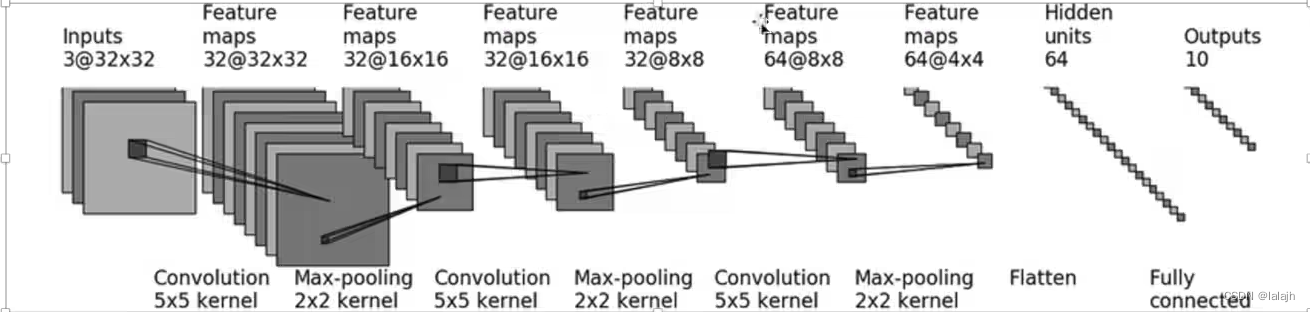

cifar10 model structure

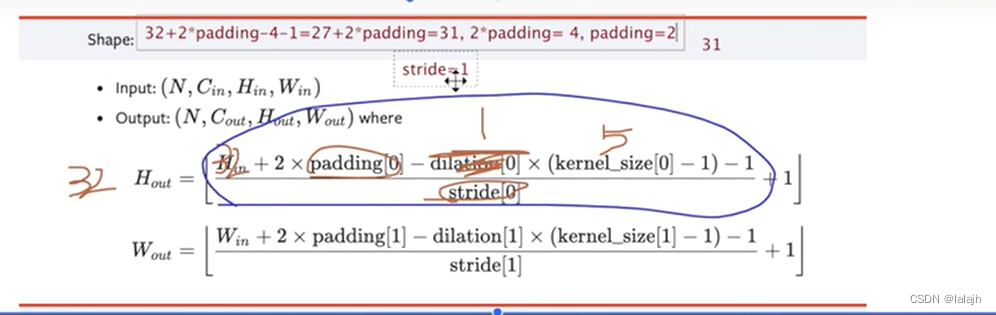

根据框架中可以看出in_channels=3,out_channels=32,kernel_size=5

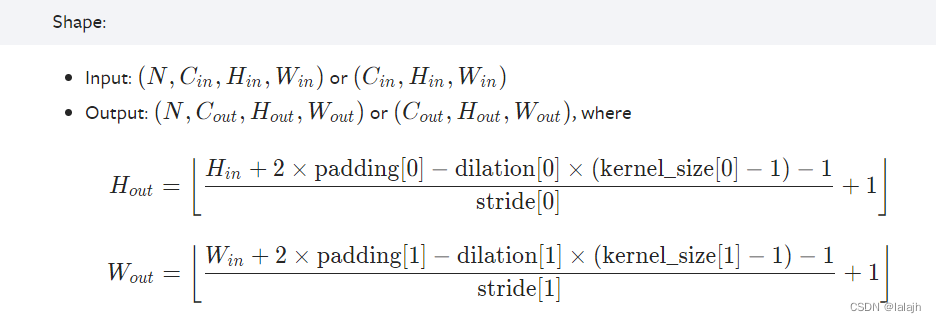

但是由于经过5*5大小的卷积核之后,图片的大小依然是32*32,所以我们要计算padding和stride

conv2d的pytorch文件有公式可以套:
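The output-size formula from the Conv2d documentation is H_out = floor((H_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1). The sketch below plugs in the numbers for this model, assuming stride=1 and dilation=1 (the defaults); the helper name `conv_output_size` is hypothetical. It also traces the spatial size through the three convolution and pooling stages to show where the 1024 input features of the first linear layer come from.

```python
def conv_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height/width of Conv2d according to the formula in the PyTorch docs."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# Find the padding that keeps a 32x32 image at 32x32 for a 5x5 kernel, stride 1.
padding = next(p for p in range(10)
               if conv_output_size(32, kernel_size=5, padding=p) == 32)

# Trace the spatial size through three (conv, 2x2 max-pool) stages.
size = 32
for _ in range(3):
    size = conv_output_size(size, kernel_size=5, padding=padding)
    size = size // 2          # MaxPool2d(2) halves height and width
flat_features = 64 * size * size   # 64 channels after the last convolution
print(padding, size, flat_features)
```

The same function explains the earlier convolution layer example: a 3x3 kernel without padding turns 32x32 into 30x30.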

Code: nn_seq.py

    import torch
    from torch import nn
    from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
    from torch.utils.tensorboard import SummaryWriter


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    tudui = Tudui()
    print(tudui)

    # Check the network with dummy data
    input = torch.ones((64, 3, 32, 32))
    output = tudui(input)
    print(output.shape)

    writer = SummaryWriter("../logs_seq")
    writer.add_graph(tudui, input)
    writer.close()

Or, equivalently, with every layer written out as a separate attribute:

    import torch
    from torch import nn
    from torch.nn import Conv2d, MaxPool2d, Flatten, Linear
    from torch.utils.tensorboard import SummaryWriter


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.conv1 = Conv2d(3, 32, 5, padding=2)
            self.maxpool1 = MaxPool2d(2)
            self.conv2 = Conv2d(32, 32, 5, padding=2)
            self.maxpool2 = MaxPool2d(2)
            self.conv3 = Conv2d(32, 64, 5, padding=2)
            self.maxpool3 = MaxPool2d(2)
            self.flatten = Flatten()  # flatten each sample to 1024 values (64*4*4)
            self.Linear1 = Linear(1024, 64)
            self.Linear2 = Linear(64, 10)

        def forward(self, x):
            x = self.conv1(x)
            x = self.maxpool1(x)
            x = self.conv2(x)
            x = self.maxpool2(x)
            x = self.conv3(x)
            x = self.maxpool3(x)
            x = self.flatten(x)
            x = self.Linear1(x)
            x = self.Linear2(x)
            return x


    # Print the network structure
    tudui = Tudui()
    print(tudui)

    # Create dummy data to check the network structure
    input = torch.ones((64, 3, 32, 32))  # a batch of 64 images with 3 channels, 32*32 each
    output = tudui(input)
    print(output.shape)  # torch.Size([64, 10]) means the shapes are correct

    # Show the network graph in TensorBoard
    writer = SummaryWriter("logs")
    writer.add_graph(tudui, input)
    writer.close()

8. Loss functions and backpropagation

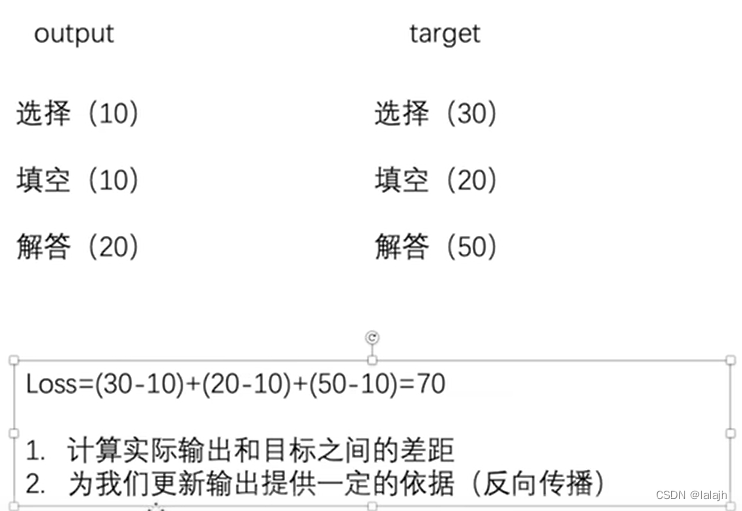

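Intuition: think of an exam paper, where target is the score we are aiming for and output is the score we actually got.

The loss measures the gap between the actual output and the target: the smaller the loss, the closer the output is to the target, so a smaller loss is generally better. The loss also gives us the basis for adjusting the parameters, so that after each update the output moves closer to the target.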
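As one concrete loss, the cross-entropy used later in this section can be written for a single sample as loss(x, class) = -x[class] + log(sum_j exp(x[j])), where x holds the raw scores for each class. A minimal pure-Python sketch with hypothetical scores and a hypothetical helper name:

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one sample: -logits[target] + log(sum(exp(logits)))."""
    log_sum_exp = math.log(sum(math.exp(x) for x in logits))
    return -logits[target] + log_sum_exp


logits = [0.1, 0.2, 0.3]  # hypothetical raw scores for three classes
loss_if_class_1 = cross_entropy(logits, target=1)
loss_if_class_2 = cross_entropy(logits, target=2)
print(loss_if_class_1, loss_if_class_2)
```

The loss is lower when the true class is the one with the highest score, which matches the exam analogy: the closer the output is to the target, the smaller the loss.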

Loss Functions

Reference: "Pytorch学习之十九种损失函数" (nineteen loss functions in PyTorch), from mingo_敏's blog on CSDN

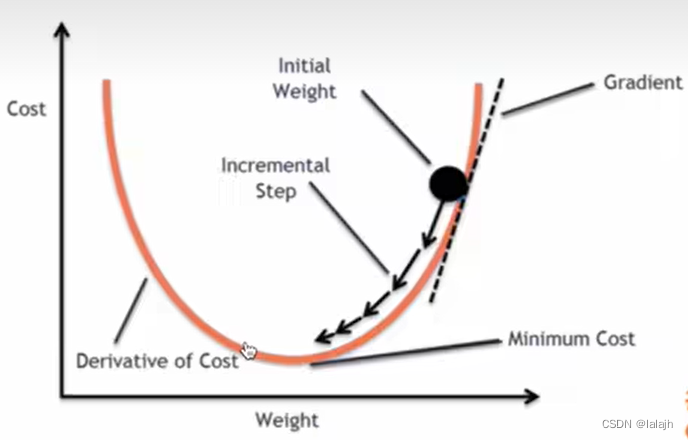

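Gradient descent

The cost function is the loss function. Gradient descent repeatedly moves the parameters a small step against the gradient, which lowers the loss. When the gradient reaches 0 the parameters stop changing, and the model obtained at that point has the smallest loss (at least locally).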
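A minimal sketch of gradient descent on a one-parameter toy problem. The loss function, starting value, and learning rate are all hypothetical choices made for illustration; real networks do the same thing over millions of parameters.

```python
def loss_fn(w):
    """Hypothetical loss with its minimum at w = 3."""
    return (w - 3.0) ** 2


def grad_fn(w):
    """Derivative of loss_fn with respect to w."""
    return 2.0 * (w - 3.0)


w = 0.0               # initial parameter value
learning_rate = 0.1
history = []
for _ in range(50):
    w = w - learning_rate * grad_fn(w)   # step against the gradient
    history.append(loss_fn(w))
print(w, history[-1])
```

Each step shrinks the distance to the minimum, so the recorded loss falls steadily and the gradient approaches 0 as w approaches 3.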

Backpropagation

Code: nn_loss_network.py

    import torchvision
    from torch import nn
    from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
    from torch.utils.data import DataLoader

    dataset = torchvision.datasets.CIFAR10("../data", train=False, transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=1)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    loss = nn.CrossEntropyLoss()
    tudui = Tudui()
    for data in dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        result_loss = loss(outputs, targets)
        result_loss.backward()  # backpropagation: compute the gradients
        print(result_loss)

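9. Optimizers

Calling backward() on the loss performs backpropagation, which computes the gradient of the loss with respect to every parameter that needs adjusting. With these gradients, the optimizer updates the parameters in order to reduce the overall error.

Code: nn_optim.py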

    import torch
    import torchvision
    from torch import nn
    from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
    from torch.optim.lr_scheduler import StepLR
    from torch.utils.data import DataLoader

    dataset = torchvision.datasets.CIFAR10("../data", train=False, transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=1)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    loss = nn.CrossEntropyLoss()
    tudui = Tudui()

    # An optimizer is usually given the model's parameters, the learning rate, and so on
    optim = torch.optim.SGD(tudui.parameters(), lr=0.01)
    scheduler = StepLR(optim, step_size=5, gamma=0.1)

    for epoch in range(20):  # number of training epochs
        running_loss = 0.0
        for data in dataloader:
            imgs, targets = data
            outputs = tudui(imgs)
            result_loss = loss(outputs, targets)
            optim.zero_grad()       # clear the gradients from the previous step
            result_loss.backward()  # backpropagate the loss to compute the gradients
            optim.step()            # update the parameters using the gradients
            running_loss = running_loss + result_loss.item()  # total loss over this epoch
        scheduler.step()            # decay the learning rate once per epoch
        print(running_loss)
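The StepLR scheduler created in nn_optim.py multiplies the learning rate by gamma every step_size scheduler steps. Assuming the scheduler is stepped once per epoch, the resulting schedule can be sketched in pure Python; `step_lr` is a hypothetical helper that mirrors the documented decay rule, not PyTorch's implementation.

```python
def step_lr(base_lr, epoch, step_size, gamma):
    """Learning rate after `epoch` calls to scheduler.step(), StepLR-style."""
    return base_lr * gamma ** (epoch // step_size)


schedule = [step_lr(0.01, epoch, step_size=5, gamma=0.1) for epoch in range(20)]
for epoch in (0, 4, 5, 10, 15):
    print(epoch, schedule[epoch])
```

Note that the parameters themselves are updated by the optimizer's step(); the scheduler only changes how large those updates are.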

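IX. Using and modifying existing network models

Code: model_pretrained.py

    import torchvision
    from torch import nn

    # Note: newer torchvision releases deprecate `pretrained` in favor of a `weights` argument
    vgg16_false = torchvision.models.vgg16(pretrained=False)  # untrained model with its initial parameters
    vgg16_true = torchvision.models.vgg16(pretrained=True)    # model with pretrained weights

    # Add a layer to the network
    # vgg16_true.add_module('add_linear', nn.Linear(1000, 10))
    vgg16_true.classifier.add_module("add_linear", nn.Linear(1000, 10))
    print(vgg16_true)

    # Modify an existing layer instead of adding a new one
    vgg16_false.classifier[6] = nn.Linear(4096, 10)
    print(vgg16_false)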

X. Saving and loading network models

See model_save.py and model_load.py for the code

Saving a model

    import torch
    import torchvision
    from torch import nn

    vgg16 = torchvision.models.vgg16(pretrained=False)

    # Save method 1: model structure + model parameters
    torch.save(vgg16, "vgg16_method1.pth")

    # Save method 2: model parameters only (officially recommended)
    torch.save(vgg16.state_dict(), "vgg16_method2.pth")


    # Pitfall: a custom model saved with method 1
    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.conv1 = nn.Conv2d(3, 64, kernel_size=3)

        def forward(self, x):
            x = self.conv1(x)
            return x


    tudui = Tudui()
    torch.save(tudui, "tudui_method1.pth")

Loading a model

    import torch
    import torchvision
    from torch import nn

    from model_save import *

    # Method 1 -> load a model saved with save method 1
    # (recent PyTorch versions may require torch.load(..., weights_only=False) to load a whole model)
    model = torch.load("vgg16_method1.pth")
    # print(model)

    # Method 2 -> rebuild the model, then load the saved parameters
    vgg16 = torchvision.models.vgg16(pretrained=False)
    vgg16.load_state_dict(torch.load("vgg16_method2.pth"))
    # model = torch.load("vgg16_method2.pth")
    # print(vgg16)

    # Pitfall 1
    # class Tudui(nn.Module):
    #     def __init__(self):
    #         super(Tudui, self).__init__()
    #         self.conv1 = nn.Conv2d(3, 64, kernel_size=3)
    #
    #     def forward(self, x):
    #         x = self.conv1(x)
    #         return x

    # Loading needs the model class definition: either the "Pitfall 1" block above,
    # or an import from elsewhere such as `from model_save import *`
    model = torch.load('tudui_method1.pth')
    print(model)

XI. A complete model training run

Network structure: model.py

    import torch
    from torch import nn


    # Build the neural network
    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.model = nn.Sequential(
                nn.Conv2d(3, 32, 5, 1, 2),
                nn.MaxPool2d(2),
                nn.Conv2d(32, 32, 5, 1, 2),
                nn.MaxPool2d(2),
                nn.Conv2d(32, 64, 5, 1, 2),
                nn.MaxPool2d(2),
                nn.Flatten(),
                nn.Linear(64*4*4, 64),
                nn.Linear(64, 10)
            )

        def forward(self, x):
            x = self.model(x)
            return x


    if __name__ == '__main__':
        # Quick shape check with dummy data
        tudui = Tudui()
        input = torch.ones((64, 3, 32, 32))
        output = tudui(input)
        print(output.shape)

Training code: train.py

    import torch
    import torchvision
    from torch import nn
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    from model import *

    # Prepare the datasets
    train_data = torchvision.datasets.CIFAR10(root="../data", train=True, transform=torchvision.transforms.ToTensor(),
                                              download=True)
    test_data = torchvision.datasets.CIFAR10(root="../data", train=False, transform=torchvision.transforms.ToTensor(),
                                             download=True)

    # Dataset lengths
    train_data_size = len(train_data)
    test_data_size = len(test_data)
    # If train_data_size=10, this prints "Length of the training dataset: 10"
    print("Length of the training dataset: {}".format(train_data_size))
    print("Length of the test dataset: {}".format(test_data_size))

    # Load the datasets with DataLoader
    train_dataloader = DataLoader(train_data, batch_size=64)
    test_dataloader = DataLoader(test_data, batch_size=64)

    # Create the network model
    tudui = Tudui()

    # Loss function
    loss_fn = nn.CrossEntropyLoss()

    # Optimizer
    # learning_rate = 0.01
    # 1e-2 = 1 x (10)^(-2) = 1 / 100 = 0.01
    learning_rate = 1e-2
    optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

    # Training bookkeeping
    # Number of training steps so far
    total_train_step = 0
    # Number of test rounds so far
    total_test_step = 0
    # Number of epochs
    epoch = 10

    # Add TensorBoard
    writer = SummaryWriter("logs")

    for i in range(epoch):
        print("-------- Epoch {} training starts --------".format(i))
        for data in train_dataloader:
            imgs, targets = data
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)

            # Let the optimizer update the model
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            total_train_step = total_train_step + 1
            if total_train_step % 100 == 0:
                print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
                writer.add_scalar("train_loss", loss.item(), total_train_step)

        # Test phase
        total_test_loss = 0
        with torch.no_grad():
            for data in test_dataloader:
                imgs, targets = data
                outputs = tudui(imgs)
                loss = loss_fn(outputs, targets)
                total_test_loss = total_test_loss + loss.item()
        print("Loss on the whole test set: {}".format(total_test_loss))
        writer.add_scalar("test_loss", total_test_loss, total_test_step)
        total_test_step = total_test_step + 1

        torch.save(tudui, "tudui_{}.pth".format(i))
        print("Model saved")

    writer.close()

Note that the test set does not need backpropagation: we are not trying to reduce the error or fit the model on it. The test set only checks how good the model is; it is not used for training. `with torch.no_grad()` turns off gradient tracking inside the block, so no gradients are computed for the test data, which also saves memory and time.

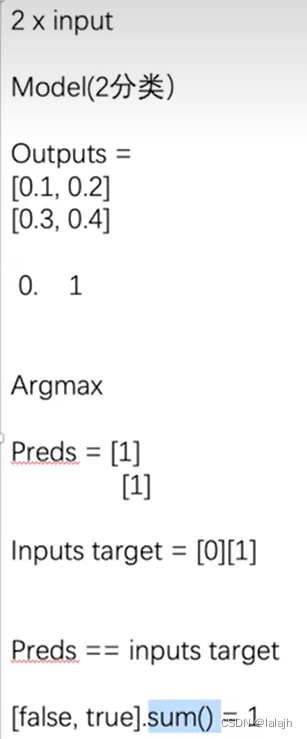

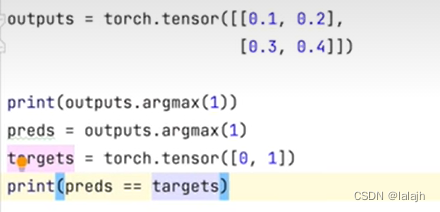

Computing accuracy
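For a classification model, accuracy is the share of samples whose highest-scoring class equals the true label. In PyTorch this is typically written with `outputs.argmax(1) == targets`; the pure-Python sketch below does the same on hypothetical scores and labels.

```python
def argmax(row):
    """Index of the largest value in a list of scores."""
    return max(range(len(row)), key=lambda i: row[i])


outputs = [[0.1, 0.2],   # hypothetical scores for 3 samples and 2 classes
           [0.3, 0.4],
           [0.9, 0.1]]
targets = [0, 1, 0]      # hypothetical true labels

predictions = [argmax(row) for row in outputs]
correct = sum(p == t for p, t in zip(predictions, targets))
accuracy = correct / len(targets)
print(predictions, correct, accuracy)
```

In a training script the number of correct predictions is summed over all test batches and divided by the size of the test set.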

Alternatively, the training code (train.py) with accuracy tracking:

    import torch
    import torchvision
    from torch import nn
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    from model import *

    # Prepare the datasets
    train_data = torchvision.datasets.CIFAR10(root="../data", train=True, transform=torchvision.transforms.ToTensor(),
                                              download=True)
    test_data = torchvision.datasets.CIFAR10(root="../data", train=False, transform=torchvision.transforms.ToTensor(),
                                             download=True)

    # Dataset lengths
    train_data_size = len(train_data)
    test_data_size = len(test_data)
    # If train_data_size=10, this prints "Length of the training dataset: 10"
    print("Length of the training dataset: {}".format(train_data_size))
    print("Length of the test dataset: {}".format(test_data_size))

    # Load the datasets with DataLoader
    train_dataloader = DataLoader(train_data, batch_size=64)
    test_dataloader = DataLoader(test_data, batch_size=64)

    # Create the network model
    tudui = Tudui()

    # Loss function
    loss_fn = nn.CrossEntropyLoss()

    # Optimizer
    # learning_rate = 0.01
    # 1e-2 = 1 x (10)^(-2) = 1 / 100 = 0.01
    learning_rate = 1e-2
    optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

    # Training bookkeeping
    # Number of training steps so far
    total_train_step = 0
    # Number of test rounds so far
    total_test_step = 0
    # Number of epochs
    epoch = 10

    # Add TensorBoard
    writer = SummaryWriter("../logs_train")

    for i in range(epoch):
        print("------- Epoch {} training starts -------".format(i+1))

        # Training phase
        tudui.train()
        for data in train_dataloader:
            imgs, targets = data
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)

            # Let the optimizer update the model
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            total_train_step = total_train_step + 1
            if total_train_step % 100 == 0:
                print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
                writer.add_scalar("train_loss", loss.item(), total_train_step)

        # Test phase
        tudui.eval()
        total_test_loss = 0
        total_accuracy = 0
        with torch.no_grad():
            for data in test_dataloader:
                imgs, targets = data
                outputs = tudui(imgs)
                loss = loss_fn(outputs, targets)
                total_test_loss = total_test_loss + loss.item()
                # Number of samples in this batch whose predicted class matches the target
                accuracy = (outputs.argmax(1) == targets).sum().item()
                total_accuracy = total_accuracy + accuracy

        print("Loss on the whole test set: {}".format(total_test_loss))
        print("Accuracy on the whole test set: {}".format(total_accuracy/test_data_size))
        writer.add_scalar("test_accuracy", total_accuracy/test_data_size, total_test_step)
        total_test_step = total_test_step + 1

        torch.save(tudui, "tudui_{}.pth".format(i))
        print("Model saved")

    writer.close()

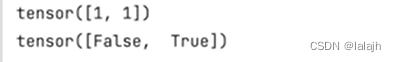

XII. Training on the GPU

1. Method 1

There are three kinds

You can use Google's GPUs

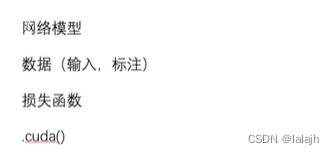

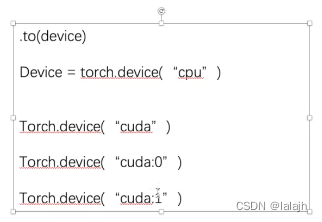

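2. Method 2

    # Method 1: call .cuda() when a GPU is available
    tudui = Tudui()
    if torch.cuda.is_available():
        tudui = tudui.cuda()

    # Method 2
    # Define the training device once (falls back to the CPU when no GPU is available)
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Usage
    tudui = Tudui()
    tudui = tudui.to(device)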

XIII. Model validation routine

    import torch
    import torchvision
    from PIL import Image
    from torch import nn

    image_path = "../imgs/airplane.png"
    image = Image.open(image_path)
    print(image)
    image = image.convert('RGB')  # keep exactly 3 channels (a PNG may also carry an alpha channel)

    # Resize to the 32x32 input the network was trained on, then convert to a tensor
    transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                                torchvision.transforms.ToTensor()])
    image = transform(image)
    print(image.shape)


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.model = nn.Sequential(
                nn.Conv2d(3, 32, 5, 1, 2),
                nn.MaxPool2d(2),
                nn.Conv2d(32, 32, 5, 1, 2),
                nn.MaxPool2d(2),
                nn.Conv2d(32, 64, 5, 1, 2),
                nn.MaxPool2d(2),
                nn.Flatten(),
                nn.Linear(64*4*4, 64),
                nn.Linear(64, 10)
            )

        def forward(self, x):
            x = self.model(x)
            return x


    # map_location moves a model that was saved on the GPU onto the CPU
    model = torch.load("tudui_29_gpu.pth", map_location=torch.device('cpu'))
    print(model)

    image = torch.reshape(image, (1, 3, 32, 32))  # a batch containing one image
    model.eval()
    with torch.no_grad():
        output = model(image)
    print(output)
    print(output.argmax(1))  # index of the predicted class
