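This is mostly a record. I actually read this paper in May of last year, and my follow-up work has been built around it, so I'll use it as the first post in my text2sql series.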

Paper link

https://arxiv.org/pdf/2304.11015

Leaderboard

Paper reading

Abstract

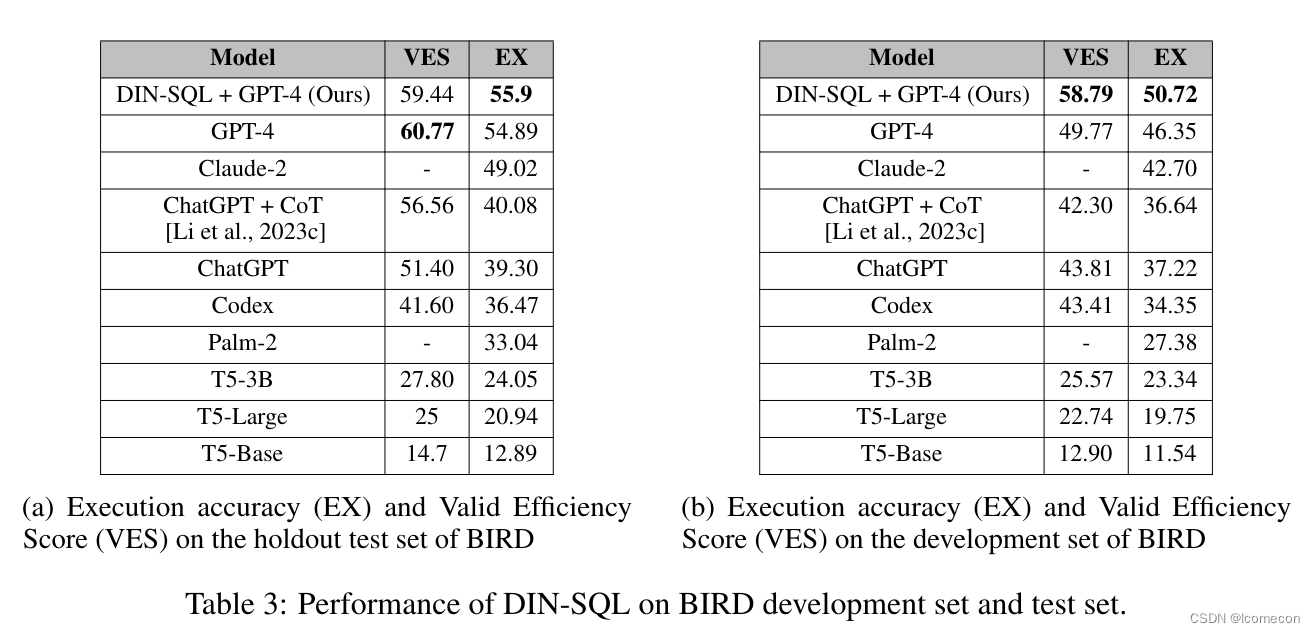

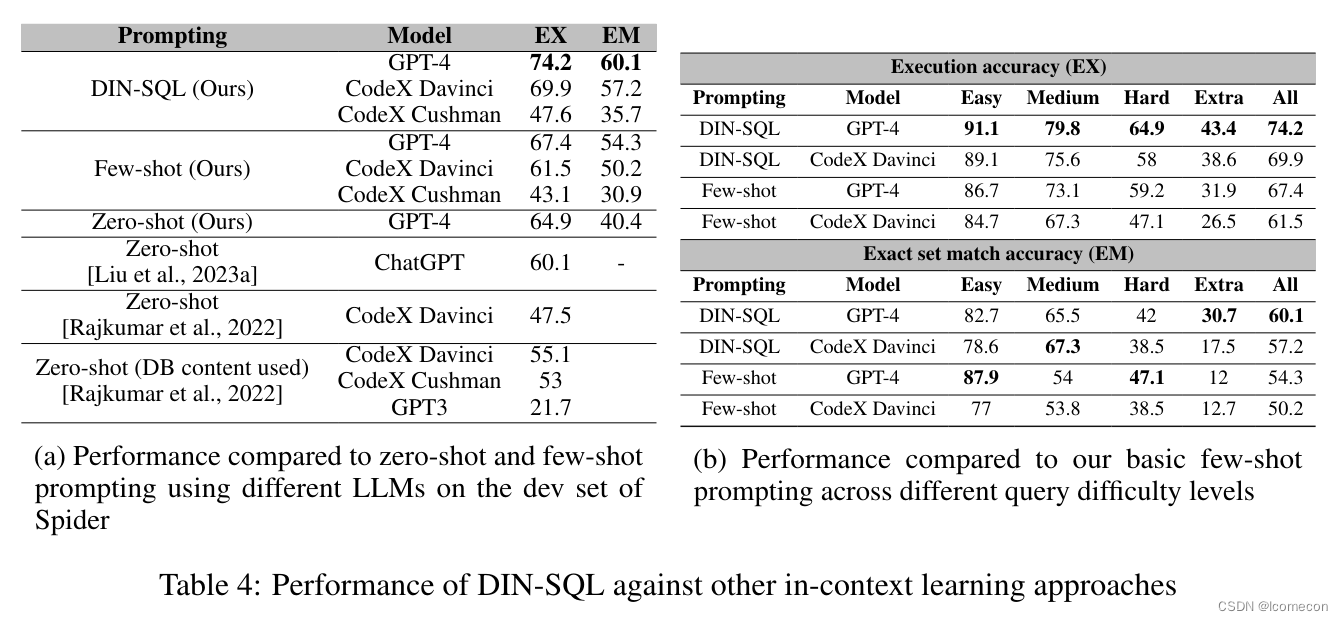

There is currently a significant gap between the performance of fine-tuned models and prompting approaches using Large Language Models (LLMs) on the challenging task of text-to-SQL, as evaluated on datasets such as Spider. To improve the performance of LLMs in the reasoning process, we study how decomposing the task into smaller sub-tasks can be effective. In particular, we show that breaking down the generation problem into sub-problems and feeding the solutions of those sub-problems into LLMs can be an effective approach for significantly improving their performance. Our experiments with three LLMs show that this approach consistently improves their simple few-shot performance by roughly 10%, pushing the accuracy of LLMs towards SOTA or surpassing it. On the holdout test set of Spider, the SOTA, in terms of execution accuracy, was 79.9 and the new SOTA at the time of this writing using our approach is 85.3. Our approach with in-context learning beats many heavily fine-tuned models by at least 5%. Additionally, when evaluated on the BIRD benchmark, our approach achieved an execution accuracy of 55.9%, setting a new SOTA on its holdout test set.

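The key takeaway: the authors tackle text2sql with LLM prompt engineering, decomposing the problem into smaller sub-problems. This proves effective, improving on the then-SOTA execution accuracy by about 5 points (79.9 to 85.3 on the Spider holdout test set).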

1. Introduction

Natural language interfaces to databases aim at making it easier for end users to access data in a relational database. For example, given the utterance “find employees who make more than their managers” and the schema of tables employees and manages, one may want to generate a query in SQL that retrieves those employees from a database. Over the past two decades, research in this field has progressed through several phases, with early systems being domain-specific, supporting controlled natural language [Popescu et al., 2003, 2004, Li et al., 2007, Li and Jagadish, 2014] or relying on rule-based approaches [Stratica et al., 2005] while more recent systems offering greater domain-independence using supervised models trained on diverse domains and datasets [Zhong et al., 2017, Yu et al., 2018] and more recently deep neural models trained on large text and code repositories [Dong and Lapata, 2016, Devlin et al., 2018].

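This covers the field's history and its early stages. Not much to add here; readers interested in the traditional methods can dig through the cited papers.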

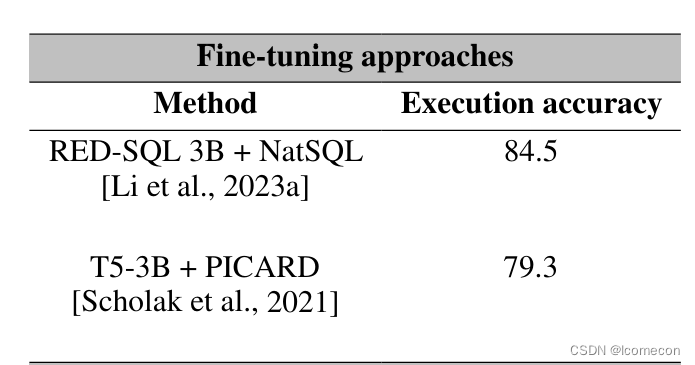

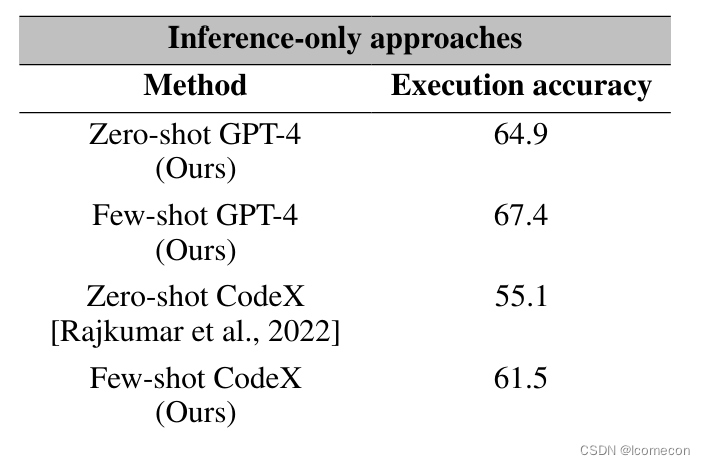

The latest development in this progression is the use of Large Language Models (LLMs) under zero-shot and few-shot prompting [Rajkumar et al., 2022, Liu et al., 2023a]. It has been shown that LLMs provide strong baselines using only a few demonstrations and no fine-tuning [Chen et al., 2021, Brown et al., 2020, Liu et al., 2023b]. However, these models fall behind on commonly used benchmarks (e.g., Spider) compared to well-designed and fine-tuned models. Table 1 shows the performance of two latest LLMs, CodeX and GPT-4, on the development set of the Spider dataset. Despite a strong performance, LLMs fall behind, compared to existing methods [Scholak et al., 2021, Li et al., 2023a], especially on medium and complex queries. The question investigated in this paper is where these LLMs fail and if some of the problems that they are facing can be mitigated to push the performance to reach or surpass fine-tuned SOTA models.

Fine-tuning methods: end-to-end

Prompting has several advantages over traditional approaches using pretraining or fine-tuning. The main benefit is that LLMs can perform prediction tasks without requiring large task-specific training data. Training models from scratch or fine-tuning them is a resource-intensive process, often requiring a large number of training samples and machine resources, which may not be available. Additionally, few-shot prompting has been shown to outperform previous state-of-the-art methods on several benchmark datasets and can achieve high accuracy even with limited training examples [Brown et al., 2020, Wei et al., 2022b].

It has been recently shown that the performance of LLMs can be improved on more complex tasks (e.g., math word problems, compositional navigation steps) using approaches such as chain-of-thought [Wei et al., 2022b], least-to-most [Zhou et al., 2022], and decomposed [Khot et al., 2022] prompting techniques where a task is broken down into multiple steps and the intermediate results are used to generate a final answer. Unlike algebraic expressions, which consist of clear steps or operations, breaking a complex SQL query can be a more daunting task because of the declarative structure of the language and the complex relationships between query clauses.

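The usual points: the advantages of LLMs, and the techniques that make LLMs perform better, such as CoT, least-to-most prompting, and task decomposition.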

In this paper, we propose a novel method based on few-shot prompting that decomposes the task of natural language text to SQL (referred to as text-to-SQL) into multiple sub-tasks. Previous works on text-to-SQL prompting using LLMs are only evaluated in a zero-shot setting [Rajkumar et al., 2022, Liu et al., 2023a]. However, zero-shot prompting only provides a lower bound on the potential power of LLMs for most tasks [Zhang et al., 2022, Kojima et al., 2022, Wei et al., 2022b, 2021, Brown et al., 2020]. We show that our proposed method outperforms the few-shot prompting method by a large margin. We also compare our method with previous approaches on two cross-domain challenging benchmarks, Spider and BIRD. For Spider dataset, we use the two official evaluation metrics of execution accuracy and exact set match accuracy [Zhong et al., 2020]. We utilize two variants of the CodeX family, namely Davinci and Cushman [Chen et al., 2021], and the GPT-4 model for prompting. On the holdout test set of Spider, our method achieves an execution accuracy of 85.3% and 78.2% respectively using GPT-4 and CodeX Davinci models and an exact set match accuracy of 60% and 57% respectively using the same models. The large gap between the exact match and execution accuracies is due to the few-shot in-context nature of our method. Pretrained and fine-tuned approaches are more likely to generate SQL queries with a higher exact set match accuracy simply because these models have seen many examples during training that follow the composition style of the queries in the test set (queries in both sets are often written by the same people). Before our work, the SOTA on the test set had an execution accuracy of 79.9% [Li et al., 2023a] and an exact set match accuracy of 74% [Li et al., 2023b], and our method sets a new ground in terms of the execution accuracy.
On the BIRD benchmark, our approach achieves a new SOTA result, attaining an execution accuracy of 55.9% on the holdout test set and 50.72% on the development set when employing GPT-4. Moreover, using the valid efficiency score introduced in this benchmark, our approach outperformed a GPT-4 baseline, demonstrating a 9% improvement on the development set. This highlights the effectiveness of our method.
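To make the gap between the two metrics concrete, here is a minimal sketch using an in-memory SQLite database. The `employees` table, both queries, and the helper names are made up for illustration. `naive_exact_match` is only a crude text comparison; the official exact set match metric compares parsed query components rather than raw strings. The point is just that a query can return the right rows while being written in a different style from the gold query.

```python
import sqlite3


def execution_match(conn, predicted_sql, gold_sql):
    """Return True when both queries yield the same rows (order ignored)."""
    try:
        predicted_rows = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False  # SQL that cannot be executed counts as a failure
    gold_rows = conn.execute(gold_sql).fetchall()
    return sorted(predicted_rows, key=repr) == sorted(gold_rows, key=repr)


def naive_exact_match(predicted_sql, gold_sql):
    """Crude stand-in for exact match: compare whitespace/case-normalized text."""
    def normalize(sql):
        return " ".join(sql.lower().split())
    return normalize(predicted_sql) == normalize(gold_sql)


# Hypothetical toy database, not taken from Spider or BIRD.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (id INTEGER, name TEXT, salary INTEGER);
INSERT INTO employees VALUES (1, 'Ann', 120), (2, 'Bob', 90), (3, 'Cid', 150);
""")

gold = "SELECT name FROM employees WHERE salary > 100"
pred = "SELECT e.name FROM employees AS e WHERE NOT e.salary <= 100"

same_result = execution_match(conn, pred, gold)   # rows agree
same_text = naive_exact_match(pred, gold)         # text differs
```

Here `same_result` is true and `same_text` is false, which is the kind of case where a few-shot LLM can score well on execution accuracy but poorly on exact match.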

Our contributions can be summarized as follows: (1) improving the performance of LLM-based text-to-SQL models through task decomposition, (2) introducing adaptive prompting strategies tailored to task complexity, (3) addressing schema linking challenges in the context of prompting, and (4) using LLMs for self correction. To replicate the reported results, visit our GitHub repository for access to the prompts, results, and the code.

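This introduces the authors' method: task decomposition, prompts adapted to task complexity, an emphasis on the role of schema linking, and LLM self-correction. (Note: in my own earlier experiments, the self-correction claim did not seem to hold up.)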

2. Related Work

Sequence-to-sequence models [Sutskever et al., 2014] have shown great potential in code generation tasks including text-to-SQL. The key idea is to jointly encode a given natural language question and the database schema and leverage a decoder to predict the target SQL.

On the encoder side, learning a representation for the question and the database schema is carried out using bidirectional LSTM in IRNet [Graves and Graves, 2012], convolutional neural networks in RYANSQL [Choi et al., 2021], pretrained language models such as BERT in SQLova [Hwang et al., 2019] and graph neural networks in RATSQL [Wang et al., 2019], SADGA [Cai et al., 2021], and LGESQL [Cao et al., 2021]. Gan et al. [2021] propose an intermediate representation to bridge the gap between the natural language question and SQL statements. There has been also work on tabular language models that encode both tables and text such as TaBERT [Yin et al., 2020], TaPas [Herzig et al., 2020], and Grappa [Yu et al., 2020].

The methods on the decoder side can be categorized into sketch-based slot-filling and generation-based methods [Qin et al., 2022]. Sketch-based methods break the problem into several slot prediction sub-problems and aggregate the predictions for the slots of the SQL query to be generated [Hwang et al., 2019, Xu et al., 2017, Hui et al., 2021]. A drawback of these methods is that they cannot generalize to queries that do not follow the predefined templates. The generation-based methods [Guo et al., 2019, Wang et al., 2019, Cao et al., 2021, Huang et al., 2021] decode the SQL query as an abstract syntax tree.

In contrast to pretrained and fine-tuned models, Rajkumar et al. [2022] and Liu et al. [2023a] conduct an evaluation of the zero-shot prompting capability of LLMs on text-to-SQL using different prompts on the Spider dataset. Prompting techniques have been also used for tasks such as table understanding, table reasoning, and table-to-text generation [Guo et al., 2023, Chen, 2022], and some remarkable results have been reported using LLMs with just a small number of examples given in the prompt.

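Related work: mainly a survey of earlier methods, i.e. seq-to-seq models, how the encoder side represents the question and schema, and how the decoder side predicts the SQL.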

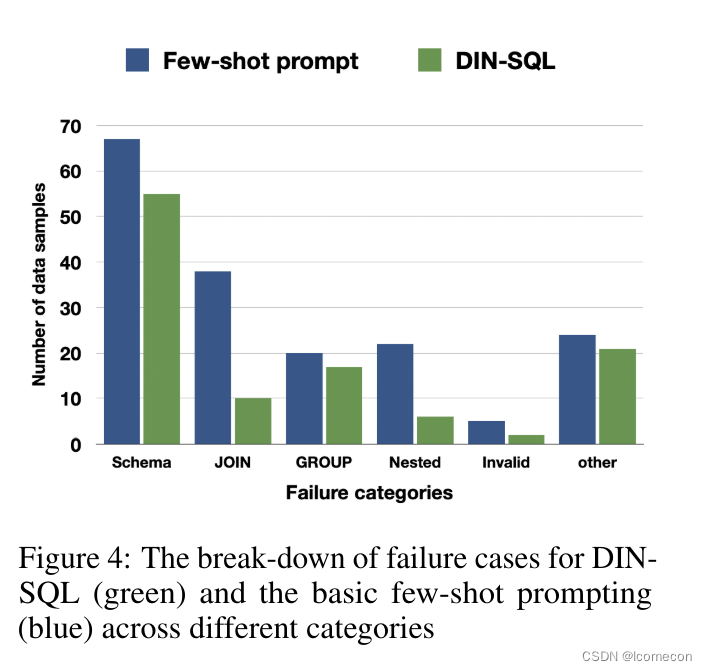

3. Few-shot Error Analysis

To better understand where LLMs fail under a few-shot setting, we randomly sampled 500 queries from different databases in the training set of the Spider dataset, excluding all databases used in our prompts. We searched for the queries that produced results different than those of gold queries, hence failing the execution accuracy. We manually examined these failures and classified them into six categories as shown in Figure 1 and discussed next.

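Plain end-to-end LLM few-shot prompting does not perform well, so the authors analyzed the bad cases and grouped the failures into six categories (Figure 1 in the paper):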

3.1 Schema linking

This category contained the largest number of failed queries and included instances where the model failed to identify column names, table names, or entities mentioned in questions. In some cases, the query required an aggregation function, but a matching column name was chosen instead. For instance, the database schema for question “What are the average and maximum capacities for all stadiums?” included a column named “average”, which was selected by the model instead of taking the average of the capacity column.
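The "average" example can be reproduced on a toy table. This is only a sketch: the `stadium` table below is a simplified, made-up version of the schema, and the "wrong" query is my guess at what selecting the `average` column looks like, not the model's actual output.

```python
import sqlite3

# Hypothetical, simplified stadium table with a column literally named "average".
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE stadium (name TEXT, capacity INTEGER, average INTEGER);
INSERT INTO stadium VALUES ('A', 1000, 400), ('B', 3000, 900);
""")

# What the question asks for: the average of the capacity column.
gold = "SELECT AVG(capacity), MAX(capacity) FROM stadium"
# Schema-linking failure: the column named "average" is picked instead.
wrong = "SELECT average, MAX(capacity) FROM stadium"

gold_rows = conn.execute(gold).fetchall()
wrong_rows = conn.execute(wrong).fetchall()
results_differ = gold_rows != wrong_rows  # the wrong query fails execution accuracy
```

Both queries run without error, which is why this kind of mistake only shows up when the results are compared against the gold query.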

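This is the most frequent error type: wrong tables, wrong columns, or wrong entity values. The paper also gives an example: sometimes an aggregation over a column is required, but the model just picks a column with a matching name.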

3.2 JOIN

This was the second largest category and included queries that needed a JOIN but the model was unable to identify all the tables required or the correct foreign keys to join the tables.

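This is the second most frequent error type: the model fails to find all the required tables, or the correct foreign keys to join them.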

3.3 Group By

This category included cases where the SQL statement required a GROUP BY clause, but the model either did not recognize the need for grouping or wrong columns were used for grouping the results.

3.4 Queries with nesting and set operations

For this category, the gold query used nesting or set operations but the model did not recognize the nested structure or was unable to detect the correct nesting or set operation.

3.5 Invalid SQL

A small set of the generated SQL statements had syntax errors and could not be executed.

3.6 Miscellaneous

This category included cases that did not fit under any of the previously mentioned categories. Examples included SQL queries that contained extra predicates, missed a predicate, or had missing or redundant DISTINCT or DESC keywords. This category also included cases where the WHERE clause was missing or the query had redundant aggregation functions.

Pay close attention to these error categories; the method that follows is designed around fixing these bad cases.

4. Methodology

Despite improvements over zero-shot, few-shot models struggle on more complex queries including those where schema linking is less trivial and the queries that use multiple joins or have a nested structure, as discussed in Section 3.

Our approach to address these challenges is to break down the problem into smaller sub-problems, solve each sub-problem, and use those solutions to construct a solution for the original problem. Similar approaches (e.g., chain-of-thought prompting [Wei et al., 2022b] and least-to-most prompting [Zhou et al., 2022]) have been taken to improve the performance of LLMs on tasks that can be broken down into multiple steps such as math word problems and compositional generalization [Cobbe et al., 2021, Lake and Baroni, 2018]. Unlike these domains where the tasks have a procedural structure with one step directly feeding into the next step, SQL queries in most parts are declarative and the possible steps and their boundaries are less clear. However, the thought process for writing SQL queries may be broken down to (1) detecting database tables and columns that are relevant to the query, (2) identifying the general query structure for more complex queries (e.g., group by, nesting, multiple joins, set operations, etc.), (3) formulating any procedural sub-components if they can be identified, and (4) writing the final query based on the solutions of the sub-problems.

Based on this thought process, our proposed method for decomposing a text-to-SQL task consists of four modules (as depicted in Figure 2): (1) schema linking, (2) query classification and decomposition, (3) SQL generation, and (4) self-correction, which are explained in detail in the following sub-sections. While these modules may be implemented using techniques from the literature, we implement them all using prompting techniques to show that LLMs are capable of solving them all if the problems are simply broken down to the right level of granularity. The few-shot examples used in the prompts are obtained from the training set of the respective benchmarks.

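The method consists of four steps:

- Schema linking

- Query classification and decomposition

- SQL generation

- Self-correction

All four are implemented with prompting techniques.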
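The four steps above can be sketched as a chain of prompt calls, where each stage's output feeds the next. This is only a skeleton under assumptions: `LLM` is a hypothetical callable that takes a prompt and returns the completion, and the bracketed tags such as `[schema linking]` are placeholders standing in for the real few-shot prompts. `fake_llm` returns canned answers so the flow can be run without any API.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical interface: prompt in, completion out


def din_sql_pipeline(question: str, schema: str, llm: LLM) -> str:
    """Chain the four modules; each stage sees the outputs of earlier stages."""
    # (1) Schema linking: find relevant tables, columns, and cell values.
    links = llm(f"[schema linking]\n{schema}\nQ: {question}")
    # (2) Classification and decomposition: decide the query class.
    label = llm(f"[classification]\n{schema}\nQ: {question}\nSchema_links: {links}")
    # (3) SQL generation: the prompt depends on the predicted class.
    draft = llm(f"[generation:{label}]\n{schema}\nQ: {question}\nSchema_links: {links}")
    # (4) Self-correction: ask the model to review its own draft.
    return llm(f"[self-correction]\n{schema}\nQ: {question}\nSQL: {draft}")


stages: List[str] = []


def fake_llm(prompt: str) -> str:
    """Canned stand-in for a real model, used only to exercise the flow."""
    stages.append(prompt.split("\n", 1)[0])  # record which stage was called
    if prompt.startswith("[schema linking]"):
        return "[head.age,56]"
    if prompt.startswith("[classification]"):
        return "EASY"
    return "SELECT count(*) FROM head WHERE age > 56"


final_sql = din_sql_pipeline(
    "How many heads of the departments are older than 56 ?",
    "Table head, columns = [*,head_ID,name,born_state,age]",
    fake_llm,
)
```

After the call, `stages` lists the four stages in order, and the generation stage carries the class label produced by the classification stage.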

4.1 Schema Linking Module

Schema linking is responsible for identifying references to database schema and condition values in natural language queries. It is shown to help with the generalizability across domains and the synthesis of complex queries [Lei et al., 2020], making it a critical preliminary step in almost all existing text-to-SQL methods [Cao et al., 2021, Wang et al., 2019, Guo et al., 2019, Xuan et al., 2021]. This was also a single category with the largest number of failures made by the LLM in our case (Figure 2).

We designed a prompt-based module for schema linking. The prompt includes ten randomly selected samples from the training set of the Spider dataset. Following the chain-of-thought template [Wei et al., 2022b], the prompt begins with “Let’s think step by step,” as suggested by Kojima et al. [2022]. For each mention of a column name in the question, the corresponding columns and their tables are selected from the given database schema. Possible entities and cell values are also extracted from the question. Figure 3a illustrates an example and the full prompt can be found in Appendix A.3.

Schema linking uses few-shot examples to find the tables and columns the query needs.

schema_linking_prompt = '''Table advisor, columns = [*,s_ID,i_ID]

Table classroom, columns = [*,building,room_number,capacity]

Table course, columns = [*,course_id,title,dept_name,credits]

Table department, columns = [*,dept_name,building,budget]

Table instructor, columns = [*,ID,name,dept_name,salary]

Table prereq, columns = [*,course_id,prereq_id]

Table section, columns = [*,course_id,sec_id,semester,year,building,room_number,time_slot_id]

Table student, columns = [*,ID,name,dept_name,tot_cred]

Table takes, columns = [*,ID,course_id,sec_id,semester,year,grade]

Table teaches, columns = [*,ID,course_id,sec_id,semester,year]

Table time_slot, columns = [*,time_slot_id,day,start_hr,start_min,end_hr,end_min]

Foreign_keys = [course.dept_name = department.dept_name,instructor.dept_name = department.dept_name,section.building = classroom.building,section.room_number = classroom.room_number,section.course_id = course.course_id,teaches.ID = instructor.ID,teaches.course_id = section.course_id,teaches.sec_id = section.sec_id,teaches.semester = section.semester,teaches.year = section.year,student.dept_name = department.dept_name,takes.ID = student.ID,takes.course_id = section.course_id,takes.sec_id = section.sec_id,takes.semester = section.semester,takes.year = section.year,advisor.s_ID = student.ID,advisor.i_ID = instructor.ID,prereq.prereq_id = course.course_id,prereq.course_id = course.course_id]

Q: "Find the buildings which have rooms with capacity more than 50."

A: Let’s think step by step. In the question "Find the buildings which have rooms with capacity more than 50.", we are asked:

"the buildings which have rooms" so we need column = [classroom.capacity]

"rooms with capacity" so we need column = [classroom.building]

Based on the columns and tables, we need these Foreign_keys = [].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = [50]. So the Schema_links are:

Schema_links: [classroom.building,classroom.capacity,50]

Table department, columns = [*,Department_ID,Name,Creation,Ranking,Budget_in_Billions,Num_Employees]

Table head, columns = [*,head_ID,name,born_state,age]

Table management, columns = [*,department_ID,head_ID,temporary_acting]

Foreign_keys = [management.head_ID = head.head_ID,management.department_ID = department.Department_ID]

Q: "How many heads of the departments are older than 56 ?"

A: Let’s think step by step. In the question "How many heads of the departments are older than 56 ?", we are asked:

"How many heads of the departments" so we need column = [head.*]

"older" so we need column = [head.age]

Based on the columns and tables, we need these Foreign_keys = [].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = [56]. So the Schema_links are:

Schema_links: [head.*,head.age,56]

Table department, columns = [*,Department_ID,Name,Creation,Ranking,Budget_in_Billions,Num_Employees]

Table head, columns = [*,head_ID,name,born_state,age]

Table management, columns = [*,department_ID,head_ID,temporary_acting]

Foreign_keys = [management.head_ID = head.head_ID,management.department_ID = department.Department_ID]

Q: "what are the distinct creation years of the departments managed by a secretary born in state 'Alabama'?"

A: Let’s think step by step. In the question "what are the distinct creation years of the departments managed by a secretary born in state 'Alabama'?", we are asked:

"distinct creation years of the departments" so we need column = [department.Creation]

"departments managed by" so we need column = [management.department_ID]

"born in" so we need column = [head.born_state]

Based on the columns and tables, we need these Foreign_keys = [department.Department_ID = management.department_ID,management.head_ID = head.head_ID].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = ['Alabama']. So the Schema_links are:

Schema_links: [department.Creation,department.Department_ID = management.department_ID,head.head_ID = management.head_ID,head.born_state,'Alabama']

Table Addresses, columns = [*,address_id,line_1,line_2,city,zip_postcode,state_province_county,country]

Table Candidate_Assessments, columns = [*,candidate_id,qualification,assessment_date,asessment_outcome_code]

Table Candidates, columns = [*,candidate_id,candidate_details]

Table Courses, columns = [*,course_id,course_name,course_description,other_details]

Table People, columns = [*,person_id,first_name,middle_name,last_name,cell_mobile_number,email_address,login_name,password]

Table People_Addresses, columns = [*,person_address_id,person_id,address_id,date_from,date_to]

Table Student_Course_Attendance, columns = [*,student_id,course_id,date_of_attendance]

Table Student_Course_Registrations, columns = [*,student_id,course_id,registration_date]

Table Students, columns = [*,student_id,student_details]

Foreign_keys = [Students.student_id = People.person_id,People_Addresses.address_id = Addresses.address_id,People_Addresses.person_id = People.person_id,Student_Course_Registrations.course_id = Courses.course_id,Student_Course_Registrations.student_id = Students.student_id,Student_Course_Attendance.student_id = Student_Course_Registrations.student_id,Student_Course_Attendance.course_id = Student_Course_Registrations.course_id,Candidates.candidate_id = People.person_id,Candidate_Assessments.candidate_id = Candidates.candidate_id]

Q: "List the id of students who never attends courses?"

A: Let’s think step by step. In the question "List the id of students who never attends courses?", we are asked:

"id of students" so we need column = [Students.student_id]

"never attends courses" so we need column = [Student_Course_Attendance.student_id]

Based on the columns and tables, we need these Foreign_keys = [Students.student_id = Student_Course_Attendance.student_id].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = []. So the Schema_links are:

Schema_links: [Students.student_id = Student_Course_Attendance.student_id]

Table Country, columns = [*,id,name]

Table League, columns = [*,id,country_id,name]

Table Player, columns = [*,id,player_api_id,player_name,player_fifa_api_id,birthday,height,weight]

Table Player_Attributes, columns = [*,id,player_fifa_api_id,player_api_id,date,overall_rating,potential,preferred_foot,attacking_work_rate,defensive_work_rate,crossing,finishing,heading_accuracy,short_passing,volleys,dribbling,curve,free_kick_accuracy,long_passing,ball_control,acceleration,sprint_speed,agility,reactions,balance,shot_power,jumping,stamina,strength,long_shots,aggression,interceptions,positioning,vision,penalties,marking,standing_tackle,sliding_tackle,gk_diving,gk_handling,gk_kicking,gk_positioning,gk_reflexes]

Table Team, columns = [*,id,team_api_id,team_fifa_api_id,team_long_name,team_short_name]

Table Team_Attributes, columns = [*,id,team_fifa_api_id,team_api_id,date,buildUpPlaySpeed,buildUpPlaySpeedClass,buildUpPlayDribbling,buildUpPlayDribblingClass,buildUpPlayPassing,buildUpPlayPassingClass,buildUpPlayPositioningClass,chanceCreationPassing,chanceCreationPassingClass,chanceCreationCrossing,chanceCreationCrossingClass,chanceCreationShooting,chanceCreationShootingClass,chanceCreationPositioningClass,defencePressure,defencePressureClass,defenceAggression,defenceAggressionClass,defenceTeamWidth,defenceTeamWidthClass,defenceDefenderLineClass]

Table sqlite_sequence, columns = [*,name,seq]

Foreign_keys = [Player_Attributes.player_api_id = Player.player_api_id,Player_Attributes.player_fifa_api_id = Player.player_fifa_api_id,League.country_id = Country.id,Team_Attributes.team_api_id = Team.team_api_id,Team_Attributes.team_fifa_api_id = Team.team_fifa_api_id]

Q: "List the names of all left-footed players who have overall rating between 85 and 90."

A: Let’s think step by step. In the question "List the names of all left-footed players who have overall rating between 85 and 90.", we are asked:

"names of all left-footed players" so we need column = [Player.player_name,Player_Attributes.preferred_foot]

"players who have overall rating" so we need column = [Player_Attributes.overall_rating]

Based on the columns and tables, we need these Foreign_keys = [Player_Attributes.player_api_id = Player.player_api_id].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = [left,85,90]. So the Schema_links are:

Schema_links: [Player.player_name,Player_Attributes.preferred_foot,Player_Attributes.overall_rating,Player_Attributes.player_api_id = Player.player_api_id,left,85,90]

Table advisor, columns = [*,s_ID,i_ID]

Table classroom, columns = [*,building,room_number,capacity]

Table course, columns = [*,course_id,title,dept_name,credits]

Table department, columns = [*,dept_name,building,budget]

Table instructor, columns = [*,ID,name,dept_name,salary]

Table prereq, columns = [*,course_id,prereq_id]

Table section, columns = [*,course_id,sec_id,semester,year,building,room_number,time_slot_id]

Table student, columns = [*,ID,name,dept_name,tot_cred]

Table takes, columns = [*,ID,course_id,sec_id,semester,year,grade]

Table teaches, columns = [*,ID,course_id,sec_id,semester,year]

Table time_slot, columns = [*,time_slot_id,day,start_hr,start_min,end_hr,end_min]

Foreign_keys = [course.dept_name = department.dept_name,instructor.dept_name = department.dept_name,section.building = classroom.building,section.room_number = classroom.room_number,section.course_id = course.course_id,teaches.ID = instructor.ID,teaches.course_id = section.course_id,teaches.sec_id = section.sec_id,teaches.semester = section.semester,teaches.year = section.year,student.dept_name = department.dept_name,takes.ID = student.ID,takes.course_id = section.course_id,takes.sec_id = section.sec_id,takes.semester = section.semester,takes.year = section.year,advisor.s_ID = student.ID,advisor.i_ID = instructor.ID,prereq.prereq_id = course.course_id,prereq.course_id = course.course_id]

Q: "Give the title of the course offered in Chandler during the Fall of 2010."

A: Let’s think step by step. In the question "Give the title of the course offered in Chandler during the Fall of 2010.", we are asked:

"title of the course" so we need column = [course.title]

"course offered in Chandler" so we need column = [SECTION.building]

"during the Fall" so we need column = [SECTION.semester]

"of 2010" so we need column = [SECTION.year]

Based on the columns and tables, we need these Foreign_keys = [course.course_id = SECTION.course_id].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = [Chandler,Fall,2010]. So the Schema_links are:

Schema_links: [course.title,course.course_id = SECTION.course_id,SECTION.building,SECTION.year,SECTION.semester,Chandler,Fall,2010]

Table city, columns = [*,City_ID,Official_Name,Status,Area_km_2,Population,Census_Ranking]

Table competition_record, columns = [*,Competition_ID,Farm_ID,Rank]

Table farm, columns = [*,Farm_ID,Year,Total_Horses,Working_Horses,Total_Cattle,Oxen,Bulls,Cows,Pigs,Sheep_and_Goats]

Table farm_competition, columns = [*,Competition_ID,Year,Theme,Host_city_ID,Hosts]

Foreign_keys = [farm_competition.Host_city_ID = city.City_ID,competition_record.Farm_ID = farm.Farm_ID,competition_record.Competition_ID = farm_competition.Competition_ID]

Q: "Show the status of the city that has hosted the greatest number of competitions."

A: Let’s think step by step. In the question "Show the status of the city that has hosted the greatest number of competitions.", we are asked:

"the status of the city" so we need column = [city.Status]

"greatest number of competitions" so we need column = [farm_competition.*]

Based on the columns and tables, we need these Foreign_keys = [farm_competition.Host_city_ID = city.City_ID].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = []. So the Schema_links are:

Schema_links: [city.Status,farm_competition.Host_city_ID = city.City_ID,farm_competition.*]

Table advisor, columns = [*,s_ID,i_ID]

Table classroom, columns = [*,building,room_number,capacity]

Table course, columns = [*,course_id,title,dept_name,credits]

Table department, columns = [*,dept_name,building,budget]

Table instructor, columns = [*,ID,name,dept_name,salary]

Table prereq, columns = [*,course_id,prereq_id]

Table section, columns = [*,course_id,sec_id,semester,year,building,room_number,time_slot_id]

Table student, columns = [*,ID,name,dept_name,tot_cred]

Table takes, columns = [*,ID,course_id,sec_id,semester,year,grade]

Table teaches, columns = [*,ID,course_id,sec_id,semester,year]

Table time_slot, columns = [*,time_slot_id,day,start_hr,start_min,end_hr,end_min]

Foreign_keys = [course.dept_name = department.dept_name,instructor.dept_name = department.dept_name,section.building = classroom.building,section.room_number = classroom.room_number,section.course_id = course.course_id,teaches.ID = instructor.ID,teaches.course_id = section.course_id,teaches.sec_id = section.sec_id,teaches.semester = section.semester,teaches.year = section.year,student.dept_name = department.dept_name,takes.ID = student.ID,takes.course_id = section.course_id,takes.sec_id = section.sec_id,takes.semester = section.semester,takes.year = section.year,advisor.s_ID = student.ID,advisor.i_ID = instructor.ID,prereq.prereq_id = course.course_id,prereq.course_id = course.course_id]

Q: "Find the id of instructors who taught a class in Fall 2009 but not in Spring 2010."

A: Let’s think step by step. In the question "Find the id of instructors who taught a class in Fall 2009 but not in Spring 2010.", we are asked:

"id of instructors who taught " so we need column = [teaches.id]

"taught a class in" so we need column = [teaches.semester,teaches.year]

Based on the columns and tables, we need these Foreign_keys = [].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = [Fall,2009,Spring,2010]. So the Schema_links are:

schema_links: [teaches.id,teaches.semester,teaches.year,Fall,2009,Spring,2010]

Table Accounts, columns = [*,account_id,customer_id,date_account_opened,account_name,other_account_details]

Table Customers, columns = [*,customer_id,customer_first_name,customer_middle_initial,customer_last_name,gender,email_address,login_name,login_password,phone_number,town_city,state_county_province,country]

Table Financial_Transactions, columns = [*,transaction_id,account_id,invoice_number,transaction_type,transaction_date,transaction_amount,transaction_comment,other_transaction_details]

Table Invoice_Line_Items, columns = [*,order_item_id,invoice_number,product_id,product_title,product_quantity,product_price,derived_product_cost,derived_vat_payable,derived_total_cost]

Table Invoices, columns = [*,invoice_number,order_id,invoice_date]

Table Order_Items, columns = [*,order_item_id,order_id,product_id,product_quantity,other_order_item_details]

Table Orders, columns = [*,order_id,customer_id,date_order_placed,order_details]

Table Product_Categories, columns = [*,production_type_code,product_type_description,vat_rating]

Table Products, columns = [*,product_id,parent_product_id,production_type_code,unit_price,product_name,product_color,product_size]

Foreign_keys = [Orders.customer_id = Customers.customer_id,Invoices.order_id = Orders.order_id,Accounts.customer_id = Customers.customer_id,Products.production_type_code = Product_Categories.production_type_code,Financial_Transactions.account_id = Accounts.account_id,Financial_Transactions.invoice_number = Invoices.invoice_number,Order_Items.order_id = Orders.order_id,Order_Items.product_id = Products.product_id,Invoice_Line_Items.product_id = Products.product_id,Invoice_Line_Items.invoice_number = Invoices.invoice_number,Invoice_Line_Items.order_item_id = Order_Items.order_item_id]

Q: "Show the id, the date of account opened, the account name, and other account detail for all accounts."

A: Let’s think step by step. In the question "Show the id, the date of account opened, the account name, and other account detail for all accounts.", we are asked:

"the id, the date of account opened, the account name, and other account detail for all accounts." so we need column = [Accounts.account_id,Accounts.account_name,Accounts.other_account_details,Accounts.date_account_opened]

Based on the columns and tables, we need these Foreign_keys = [].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = []. So the Schema_links are:

Schema_links: [Accounts.account_id,Accounts.account_name,Accounts.other_account_details,Accounts.date_account_opened]

Table city, columns = [*,City_ID,Official_Name,Status,Area_km_2,Population,Census_Ranking]

Table competition_record, columns = [*,Competition_ID,Farm_ID,Rank]

Table farm, columns = [*,Farm_ID,Year,Total_Horses,Working_Horses,Total_Cattle,Oxen,Bulls,Cows,Pigs,Sheep_and_Goats]

Table farm_competition, columns = [*,Competition_ID,Year,Theme,Host_city_ID,Hosts]

Foreign_keys = [farm_competition.Host_city_ID = city.City_ID,competition_record.Farm_ID = farm.Farm_ID,competition_record.Competition_ID = farm_competition.Competition_ID]

Q: "Show the status shared by cities with population bigger than 1500 and smaller than 500."

A: Let’s think step by step. In the question "Show the status shared by cities with population bigger than 1500 and smaller than 500.", we are asked:

"the status shared by cities" so we need column = [city.Status]

"cities with population" so we need column = [city.Population]

Based on the columns and tables, we need these Foreign_keys = [].

Based on the tables, columns, and Foreign_keys, The set of possible cell values are = [1500,500]. So the Schema_links are:

Schema_links: [city.Status,city.Population,1500,500]'''

4.2 Classification & Decomposition Module

For each join, there is some chance that a correct table or join condition is not detected. As the number of joins in a query increases, the chance that at least one join fails to generate correctly increases. One way to alleviate the problem is introduce a module that detects the tables to be joined. Also some queries have procedural components such as uncorrelated sub-queries, which may be generated independently and be merged with the main query.

To address these issues, we introduce a query classification and decomposition module. The module classifies each query into one of the three classes: easy, non-nested complex and nested complex. The easy class includes single-table queries that can be answered without join or nesting. The non-nested class includes queries that require join but no sub-queries, and the queries in the nested class can contain joins, sub-queries and set operations. The class labels are important for our query generation module, which uses different prompts for each query class. In addition to class labels, query classification and decomposition also detects the set of tables to be joined for both non-nested and nested queries as well as any sub-queries that may be detected for nested queries. Figure 3b shows an example input given to the model and the output that the model generates.

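The SQL tasks are classified into three classes:

- Easy: no joins and no nested queries

- Non-nested complex: requires joins but no sub-queries

- Nested complex: may contain joins, sub-queries, or set operations

Each class uses a different generation prompt.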
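To make the routing idea concrete, here is a minimal sketch of how a class label could be pulled out of the classifier's answer and mapped to a generation prompt. The `Label: "..."` output format, the label strings, and the template names are all assumptions made for illustration; the real prompts are in the paper's appendix.

```python
import re
from enum import Enum


class QueryClass(Enum):
    EASY = "EASY"
    NON_NESTED = "NON-NESTED"
    NESTED = "NESTED"


# Hypothetical template names, one per class.
PROMPT_FOR_CLASS = {
    QueryClass.EASY: "easy_prompt",
    QueryClass.NON_NESTED: "non_nested_prompt",
    QueryClass.NESTED: "nested_prompt",
}

# Assumption: the classifier ends its reasoning with a line like: Label: "NON-NESTED"
# NON-NESTED is listed first so that it is not mistaken for NESTED.
_LABEL_RE = re.compile(r'LABEL:\s*"?(NON-NESTED|NESTED|EASY)"?')


def parse_label(model_output: str) -> QueryClass:
    """Return the class named by the last 'Label:' marker in the model output."""
    matches = _LABEL_RE.findall(model_output.upper())
    if not matches:
        raise ValueError("no class label found in model output")
    return QueryClass(matches[-1])


def choose_prompt(model_output: str) -> str:
    """Map the classifier's answer to the name of the generation prompt to use."""
    return PROMPT_FOR_CLASS[parse_label(model_output)]
```

Parsing only the explicit label, rather than searching the whole answer for the word "nested", matters because the reasoning text itself may mention nesting even when the final class is easy or non-nested.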

4.3 SQL Generation Module

As the queries become more complex, additional intermediate steps must be incorporated to bridge the gap between the natural language question and the SQL statement. This gap, known as the mismatch problem in the literature [Guo et al., 2019], poses a significant challenge to SQL generation, which stems from the fact that SQL is primarily designed for querying relational databases and not representing the meaning in natural language [Kate, 2008]. While more complex queries can benefit from listing the intermediate steps in a chain-of-thought style prompting, such listings can degrade the performance for simpler tasks [Wei et al., 2022b]. On the same basis, our query generation comprises of three modules, each geared toward different classes.

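- Additional intermediate steps can bridge the gap between the natural language question and the SQL statement (an open question for me: does this really hold, and which kind of intermediate step matters most?).

- Chain-of-thought (CoT) prompting helps on complex questions but can hurt on simple ones.

- As a result, three different prompt templates are used for SQL generation, one per class.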

For questions in our easy class, a simple few-shot prompting with no intermediate steps is adequate. The demonstration for an example $E_j$ of this class follows the format $\langle Q_j, S_j, A_j \rangle$, where $Q_j$ and $A_j$ give the query text in English and SQL respectively and $S_j$ indicates the schema links.

Our non-nested complex class includes queries that require join. Our error analysis (§ 3) revealed that finding the right columns and foreign keys to join two tables can be challenging for LLMs under simple few-shot prompting, especially when the query requires joining multiple tables. To address this issue, we resort to an intermediate representation to bridge the gap between queries and SQL statements. Various intermediate representations have been introduced in the literature. In particular, SemQL [Guo et al., 2019] removes operators JOIN ON, FROM, and GROUP BY, which have no clear counterparts in natural language queries, and merges the HAVING and WHERE clauses. NatSQL [Gan et al., 2021] builds upon SemQL and removes the set operators. Expressions in natural language queries may not clearly map to a unique SQL clause or they may map to multiple clauses, so removing operators makes the transition from natural language to SQL easier. As our intermediate representation, we use NatSQL, which is shown to have a state-of-the-art performance when combined with other models [Li et al., 2023a]. The demonstration for an example $E_j$ of the non-nested complex class follows the format $\langle Q_j, S_j, I_j, A_j \rangle$, where $S_j$ and $I_j$ respectively denote the schema links and the intermediate representation for the $j$-th example.

Lastly, the nested complex class is the most sophisticated type and requires several intermediate steps before generating the final answer. This class can contain queries that not only require sub-queries using nesting and set operations such as EXCEPT, UNION, and INTERSECT but also multiple table joins, same as the previous class. To break down the problem further into multiple steps, our prompt for this class is designed in a way that the LLM should first solve the sub-queries, generated from the previous module, and then use them to generate the final answer. The prompt for this class follows the format $\langle Q_j, S_j, \langle Q_{j1}, A_{j1}, \ldots, Q_{jk}, A_{jk} \rangle, I_j, A_j \rangle$, where $k$ denotes the number of sub-questions, and $Q_{ji}$ and $A_{ji}$ respectively denote the $i$-th sub-question and the $i$-th sub-query. As before, $Q_j$ and $A_j$ denote the query in English and SQL respectively, $S_j$ gives the schema links and $I_j$ is a NatSQL intermediate representation.

Full prompts for all three query classes are provided in Appendix A.4, and all examples for the three classes are obtained from the exact same training set database chosen for the classification prompt.

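This part describes three ways of building the SQL-generation prompt. The main points are:

- The intermediate representation is borrowed from earlier, pre-LLM approaches that strip certain keywords (e.g. NatSQL). To judge whether the intermediate representation actually helps, one would probably need to study NatSQL and similar representations first.

- Solving a complex question presupposes solving its sub-questions first.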
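The three demonstration formats differ only in which fields are present, so they can be sketched with a single record type. This is an illustrative sketch, not the paper's prompt layout: the `Demo` class, the `render` function, and the line prefixes such as `Sub-question 1:` are hypothetical names chosen here.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Demo:
    question: str                       # Q_j: the question in English
    schema_links: str                   # S_j: the schema links
    sql: str                            # A_j: the final SQL
    intermediate: Optional[str] = None  # I_j: intermediate representation, if any
    # (Q_ji, A_ji) pairs: sub-questions and their sub-queries, nested class only
    sub_questions: List[Tuple[str, str]] = field(default_factory=list)


def render(demo: Demo) -> str:
    """Lay out one demonstration; optional fields are emitted only when present."""
    lines = [f"Q: {demo.question}", f"Schema_links: {demo.schema_links}"]
    for i, (sub_q, sub_sql) in enumerate(demo.sub_questions, start=1):
        lines.append(f"Sub-question {i}: {sub_q}")
        lines.append(f"Sub-query {i}: {sub_sql}")
    if demo.intermediate is not None:
        lines.append(f"Intermediate_representation: {demo.intermediate}")
    lines.append(f"SQL: {demo.sql}")
    return "\n".join(lines)


# Easy class: <Q, S, A> only (the example question and SQL are made up).
easy = Demo("How many farms are there?", "[farm.*]", "SELECT count(*) FROM farm")
print(render(easy))
```

A non-nested demonstration would additionally set `intermediate`, and a nested one would set both `intermediate` and `sub_questions`, which reproduces the ordering of the three formats described above.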

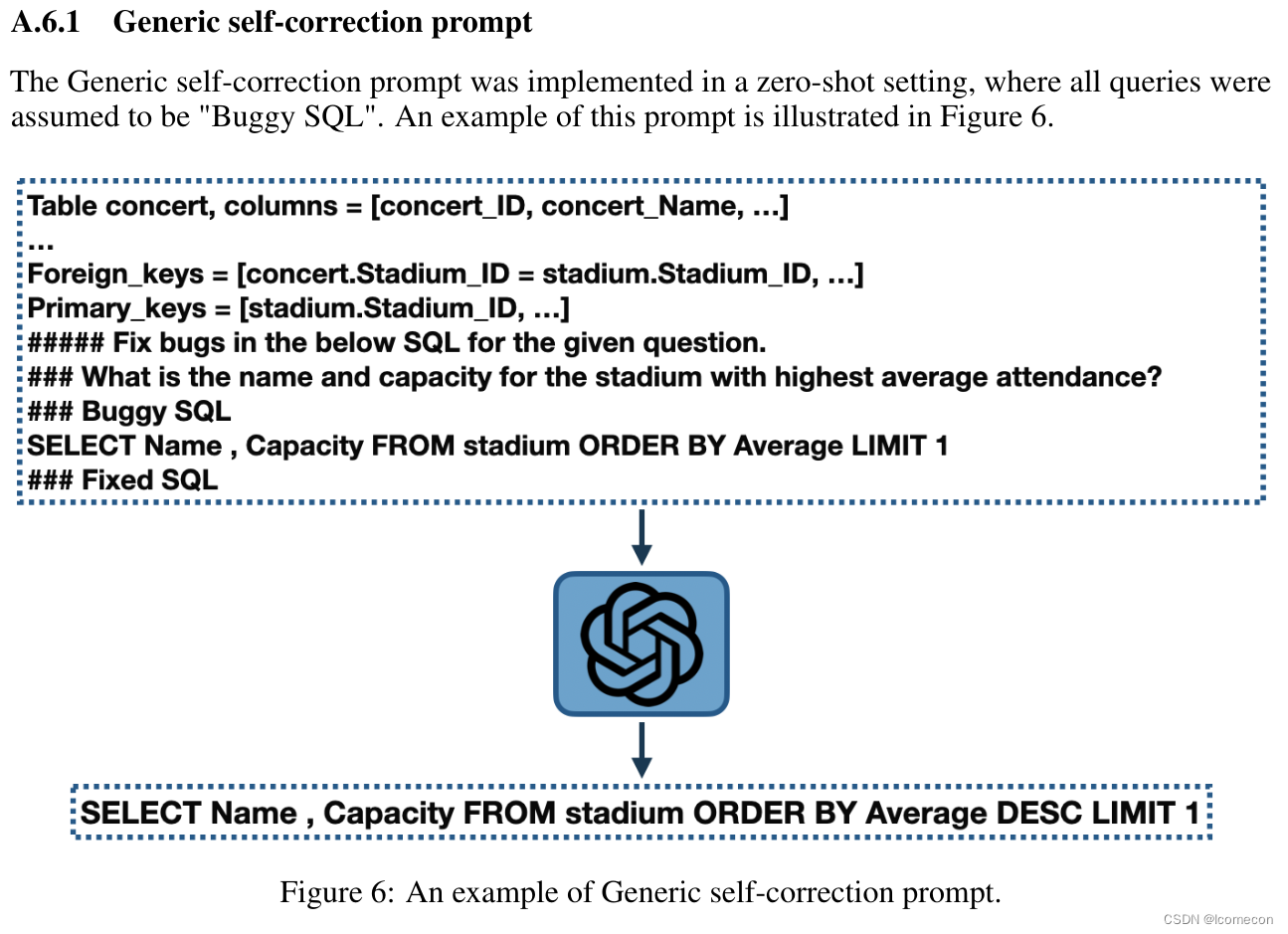

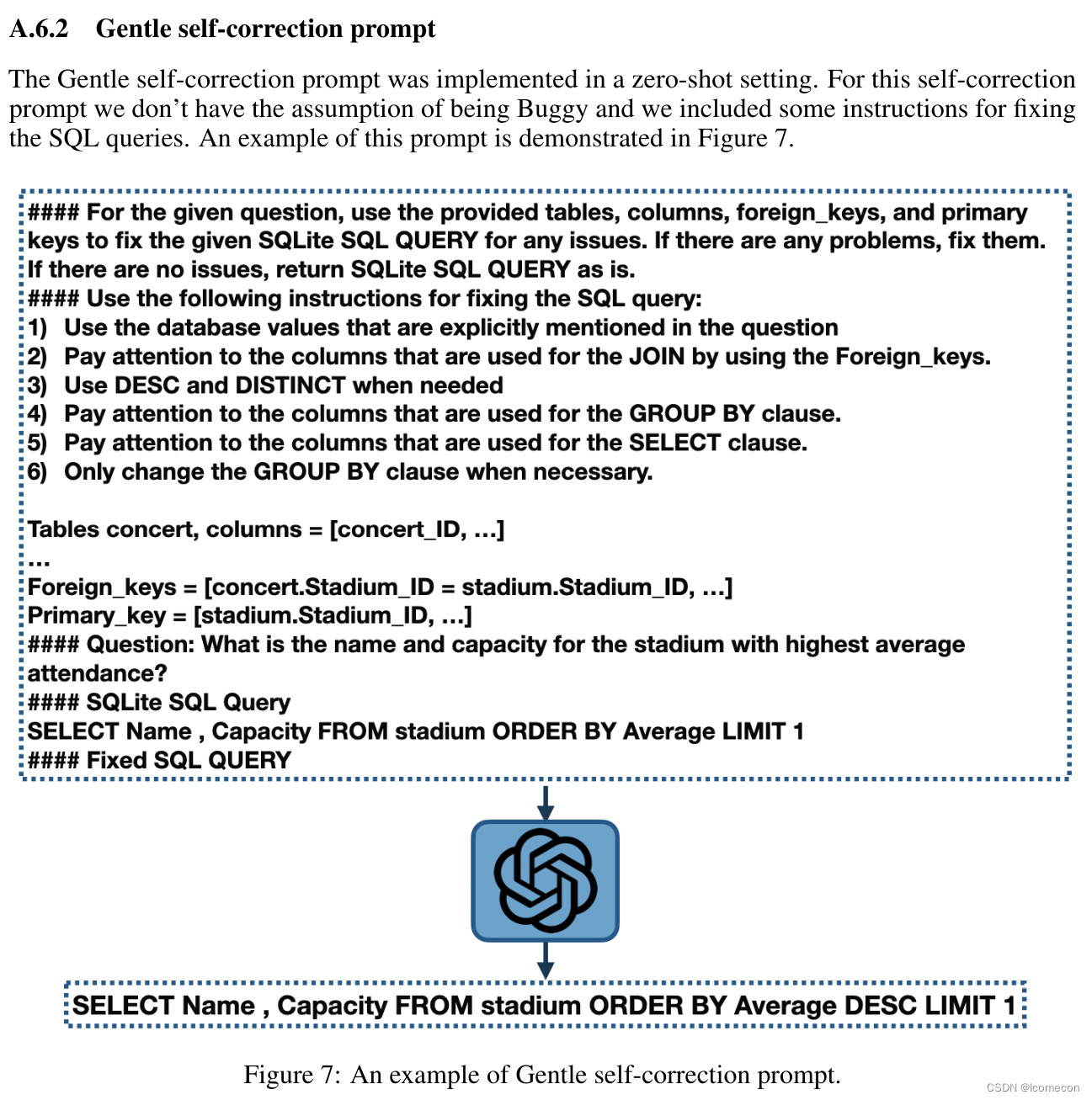

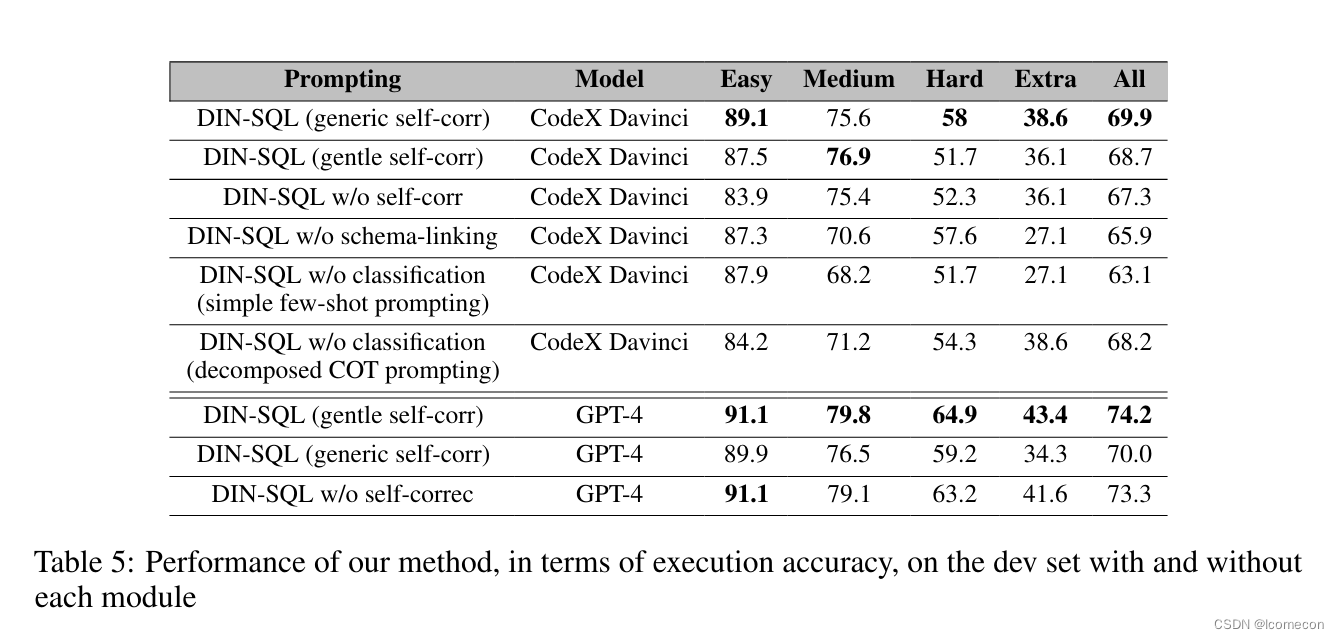

4.4 Self-correction Module

The generated SQL queries can sometimes have missing or redundant keywords such as DESC, DISTINCT and aggregation functions. Our experience with multiple LLMs indicates that these issues are less common in larger LLMs (e.g., queries generated by GPT-4 have less bugs than those from CodeX) but are still present. To address this, we propose a self-correction module where the model is instructed to correct those minor mistakes. This is achieved in a zero-shot setting, where only the buggy code is provided to the model and it is asked to fix the bugs. We propose two different prompts for the self-correction module: generic and gentle. With a generic prompt, we request the model to identify and correct the errors in the “BUGGY SQL”. The gentle prompt, on the other hand, does not assume the SQL query is buggy, and instead asks the model to check for any potential issues and provides some hints on the clauses to be checked. Our evaluation indicates that a generic prompt can yield a better result with the CodeX model, while a gentle prompt is more effective for the GPT-4 model. Unless explicitly stated otherwise, the default self-correction prompt in DIN-SQL is set to gentle for GPT-4 and generic for CodeX. Examples of both generic and gentle self-correction prompts can be found in Appendix A.6.

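Self-correction comes in two modes. Because it runs zero-shot, it leans heavily on the capability of the underlying model. The two prompts are given in Appendix A.6 of the paper.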
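As a rough illustration of the difference between the two modes, the sketch below builds a zero-shot correction prompt in either style. The instruction wording, the function name, and the choice to pass the schema and question along with the SQL are assumptions of this sketch; the actual prompt text is in Appendix A.6.

```python
# Default mode per model, as stated in the paper.
DEFAULT_MODE = {"gpt-4": "gentle", "codex": "generic"}

# Clauses the paper names as commonly missing or redundant.
HINT_CLAUSES = ["DESC", "DISTINCT", "aggregation functions"]


def build_correction_prompt(schema: str, question: str, sql: str, mode: str) -> str:
    """Build a zero-shot self-correction prompt (hypothetical wording)."""
    if mode == "generic":
        # Generic: assume the query is wrong and ask for a fix.
        instruction = "Find and fix the errors in the BUGGY SQL below."
        sql_label = "BUGGY SQL"
    elif mode == "gentle":
        # Gentle: do not assume a bug; ask for a check and give hints.
        hints = ", ".join(HINT_CLAUSES)
        instruction = (
            "Check the SQL below for potential issues and fix any you find. "
            "If there are none, return it unchanged. "
            f"Pay attention to: {hints}."
        )
        sql_label = "SQL"
    else:
        raise ValueError(f"unknown mode: {mode}")
    return f"{instruction}\n\n{schema}\n\nQ: {question}\n{sql_label}: {sql}\nFIXED SQL:"


prompt = build_correction_prompt(
    schema="Table city, columns = [*,City_ID,Status,Population]",
    question="Show the status of all cities.",
    sql="SELECT Status FROM city",
    mode=DEFAULT_MODE["gpt-4"],
)
print(prompt)
```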

5. Experiments

I won't go through this section in detail.

5.1 Models

Models used: CodeX and GPT-4

5.2 Hyperparameter

All models were accessed via the OpenAI API. Greedy decoding was used to generate the output by setting the temperature at zero. The max tokens was set to 350 for the self-correction module and 600 for all other modules. The stopping token sequence was set to “#;\n \n” for the self-correction module and “Q:” for all other modules.

Nothing much to add here; the settings above speak for themselves.
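For reference, the settings quoted above can be collected in one small helper. This is only a sketch: the function name and the module identifier are made up, and the self-correction stop sequence is kept as a raw string exactly as printed in the paper, since the quoted text does not say how it is split into individual stop tokens.

```python
SELF_CORRECTION = "self_correction"  # hypothetical module identifier


def decoding_params(module: str) -> dict:
    """Return the decoding settings reported in the paper for a given module."""
    is_self_correction = module == SELF_CORRECTION
    return {
        "temperature": 0,  # greedy decoding
        "max_tokens": 350 if is_self_correction else 600,
        # Raw string: reproduced verbatim from the paper, not interpreted.
        "stop": r"#;\n \n" if is_self_correction else "Q:",
    }


print(decoding_params(SELF_CORRECTION))
print(decoding_params("schema_linking"))
```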

5.3 Dataset

Spider and BIRD

5.4 Metrics

The performance of our models are evaluated using the official metrics of each dataset: exact-set-match accuracy (EM) and execution accuracy (EX) for Spider and valid efficiency score (VES) and execution accuracy (EX) for BIRD.

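- EM: the predicted SQL matches the ground-truth (GT) SQL exactly

- EX: executing the predicted SQL returns the same result as the GT SQL

- VES: an efficiency score for the SQL, reflecting e.g. query execution time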
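The difference between EM and EX is easiest to see on a tiny example. The sketch below is a simplification, not the official evaluation scripts: EX is approximated by comparing result rows as multisets on an in-memory SQLite database, and EM by a crude normalized string comparison (the official Spider EM compares SQL components as sets). The table contents are made up.

```python
import sqlite3
from collections import Counter


def _normalize(sql: str) -> str:
    """Lower-case, collapse whitespace, and drop a trailing semicolon."""
    return " ".join(sql.lower().split()).rstrip(";")


def exact_match(predicted_sql: str, gold_sql: str) -> bool:
    """Crude stand-in for EM: compare the normalized query strings."""
    return _normalize(predicted_sql) == _normalize(gold_sql)


def execution_match(conn: sqlite3.Connection, predicted_sql: str, gold_sql: str) -> bool:
    """Stand-in for EX: both queries must return the same rows, ignoring order."""
    try:
        predicted_rows = Counter(conn.execute(predicted_sql).fetchall())
    except sqlite3.Error:
        return False  # a prediction that fails to execute counts as wrong
    gold_rows = Counter(conn.execute(gold_sql).fetchall())
    return predicted_rows == gold_rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE city (City_ID INTEGER, Status TEXT, Population REAL)")
conn.executemany(
    "INSERT INTO city VALUES (?, ?, ?)",
    [(1, "Town", 2000.0), (2, "Village", 300.0), (3, "Town", 400.0)],
)

gold = (
    "SELECT Status FROM city WHERE Population > 1500 "
    "INTERSECT SELECT Status FROM city WHERE Population < 500"
)
predicted = (
    "SELECT DISTINCT Status FROM city "
    "WHERE Status IN (SELECT Status FROM city WHERE Population > 1500) "
    "AND Status IN (SELECT Status FROM city WHERE Population < 500)"
)

print(exact_match(predicted, gold))            # False: the query text differs
print(execution_match(conn, predicted, gold))  # True: both return the row ('Town',)
```

The two queries are written differently but return the same result, so the prediction fails the string-level check while passing the execution-level one.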

5.5 Results

Results figure

Error-correction results

Ablation study

6. Conclusions

Prompting has enabled large language models to achieve impressive performance on numerous NLP tasks across different domains, without requiring a large training set. Prior to our research, the effectiveness of prompting methods utilizing LLMs for the text-to-SQL task was inferior to that of models fine-tuned for the task. To bridge this gap, we have devised a decomposition technique to tackle some of the challenges that caused this disparity. Our extensive experiments on two challenging datasets of Spider and BIRD show that our method significantly improves the performance of prompting across all query classes, producing comparable or even superior results to state-of-the-art fine-tuned approaches.

In short, the conclusion is about the value of prompting and the effectiveness of DIN-SQL.

7. Limitations

There are some limitations or areas of improvement to this work. Our manually constructed demonstrations are fixed for each query class. Future research may explore adaptive and automated methods for generating demonstrations at finer granularities, which can further enhance the performance of our approach. Additionally, as of the time of writing this paper, our proposed approach, characterized by its decomposed and step-by-step structure, incurs a cost of approximately $0.5 and exhibits a latency of approximately 60 seconds when responding to a natural language question from the Spider dataset using GPT-4. We anticipate that as LLMs continue to advance, these costs and latencies should decrease, but reducing the cost is another possible direction.

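Limitations: bad cases have to be fixed one at a time in a targeted way; could there be an automated way to fix them in the future? The approach was also too expensive (although prices have dropped since).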

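That's it for the introduction. How the prompts are written can be found in the paper's appendix and in the source code. On Spider, DAIL-SQL performs better than DIN-SQL; I'll leave that as a TODO for a future post.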

TODO:

- DAIL-SQL paper walkthrough (deadline: 2024-05-31)

- Build a demo where everyone can try the DIN-SQL method; I haven't worked out the design yet (deadline: 2024-07-31)

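Finally, here is the group picture again. The goal is to build a place where people can learn about and discuss LLMs together, and I hope it reaches 20 members by 2025-06-30 (yes, right now it's just me).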
