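Next is a group of topics related to data and machine learning. In the SAP-C02 exam this area currently carries relatively little weight and is not tested in depth, but with the AI trend it will become increasingly important. These services also appear frequently among the answer options, so it is important to understand their basic functions and how they are used in solutions.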


1 Big Data

1.1 The Amazon Kinesis Family

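The Amazon Kinesis family has four services, all aimed at stream processing. If you have worked with big data components such as Flink or Spark, Kinesis will feel familiar. Kinesis is used for:

- Processing streaming big data

- Scenarios such as log processing, IoT, metrics, and clickstreams

- Near-real-time data processing

- Data is replicated across 3 Availability Zones by default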

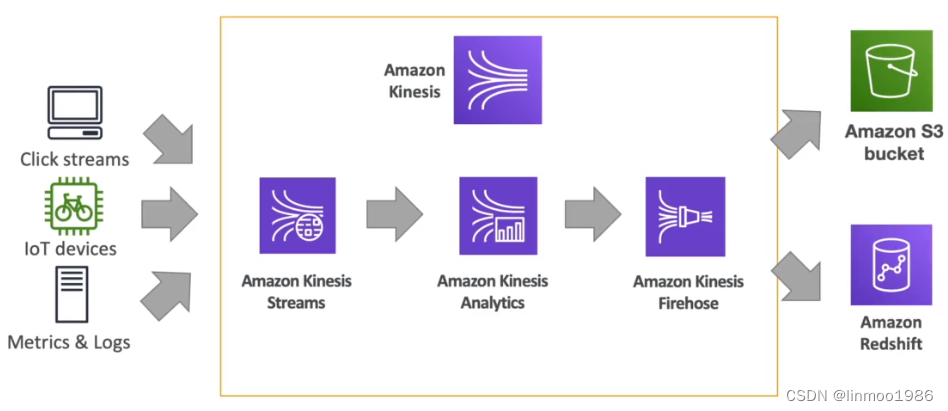

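Three of these services are often combined into one pipeline:

- Kinesis Data Streams ingests data from external sources

- Kinesis Data Analytics analyzes and processes the data

- Kinesis Data Firehose delivers the data to other storage for analysis or backup

The following sections introduce the four services one by one, along with the solutions they can be combined into.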

1.1.1 Kinesis Data Streams

Kinesis Data Streams ingests data from external sources as a stream. The data can come from logs, IoT devices, metrics, clickstreams, and so on; you can think of it as something like Kafka.

Example question: A company runs an IoT platform on AWS. IoT sensors in various locations send data to the company’s Node.js API servers on Amazon EC2 instances running behind an Application Load Balancer. The data is stored in an Amazon RDS MySQL DB instance that uses a 4 TB General Purpose SSD volume. The number of sensors the company has deployed in the field has increased over time, and is expected to grow significantly. The API servers are consistently overloaded and RDS metrics show high write latency. Which of the following steps together will resolve the issues permanently and enable growth as new sensors are provisioned, while keeping this platform cost-efficient? (Choose two.)

A. Resize the MySQL General Purpose SSD storage to 6 TB to improve the volume’s IOPS

B. Re-architect the database tier to use Amazon Aurora instead of an RDS MySQL DB instance and add read replicas

C. Leverage Amazon Kinesis Data Streams and AWS Lambda to ingest and process the raw data

D. Use AWS X-Ray to analyze and debug application issues and add more API servers to match the load

E. Re-architect the database tier to use Amazon DynamoDB instead of an RDS MySQL DB instance

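Answer: CE

Explanation: Key words: IoT, increased, overloaded. As the number of IoT sensors grows, the system becomes overloaded and latency rises. Option A only temporarily relieves the database disk latency. Option B improves the database, but read replicas do not help with write latency and it still has capacity limits, so it is also only a temporary fix. Option C: Kinesis Data Streams handles data ingestion well. Option D: X-Ray analyzes service calls and does nothing to solve the problem in this question. Option E: DynamoDB has no practical capacity limit and solves the storage problem.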

Example question: A company is developing a gene reporting device that will collect genomic information to assist researchers with collecting large samples of data from a diverse population. The device will push 8 KB of genomic data every second to a data platform that will need to process and analyze the data and provide information back to researchers. The data platform must meet the following requirements:

– Provide near-real-time analytics of the inbound genomic data

– Ensure the data is flexible, parallel, and durable

– Deliver results of processing to a data warehouse

Which strategy should a solutions architect use to meet these requirements?

A. Use Amazon Kinesis Data Firehose to collect the inbound sensor data, analyze the data with Kinesis clients, and save the results to an Amazon RDS instance.

B. Use Amazon Kinesis Data Streams to collect the inbound sensor data, analyze the data with Kinesis clients, and save the results to an Amazon Redshift cluster using Amazon EMR.

C. Use Amazon S3 to collect the inbound device data, analyze the data from Amazon SQS with Kinesis, and save the results to an Amazon Redshift cluster.

D. Use an Amazon API Gateway to put requests into an Amazon SQS queue, analyze the data with an AWS Lambda function, and save the results to an Amazon Redshift cluster using Amazon EMR.

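Answer: B

Explanation: The question requires collecting genomic data, processing and analyzing it, and delivering the results to a data warehouse. In option A, Kinesis Data Firehose can also collect data, but it is a near-real-time delivery service rather than a stream that multiple clients analyze in parallel, and it saves the results to an RDS instance, which is not a data warehouse. Option C collects the data in S3, which requires building a custom ingestion program. Option D has a similar problem. So choose B.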

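1.1.1.1 Basic Features

- Data is retained for 24 hours by default; retention can be extended (originally up to 7 days, now up to 365 days)

- Data cannot be deleted manually; records are removed only when they expire (unlike SQS, where consumers delete messages)

- Multiple applications can consume the same stream

- Data is divided into shards

- Each shard accepts writes of up to 1 MB or 1,000 records per second; if producers send faster, they receive a ProvisionedThroughputExceededException

- Each shard supports reads of up to 2 MB per second and at most 5 read transactions (GetRecords calls) per second, shared by all consumers

- Enhanced Fan-Out pushes data to each registered consumer at 2 MB per second per shard, so those consumers are no longer bound by the shared read limits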
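The per-shard limits above can be turned into a rough capacity estimate. The following Python sketch uses a hypothetical helper, `shards_needed` (not an AWS API), and assumes provisioned capacity mode, the limits listed above, and that every consumer reads the whole stream; it ignores the 5 read-transactions-per-second limit and on-demand mode.

```python
import math

# Per-shard limits as listed above (provisioned mode).
WRITE_MB_PER_SHARD = 1.0
WRITE_RECORDS_PER_SHARD = 1000
READ_MB_PER_SHARD = 2.0  # shared by all standard (polling) consumers


def shards_needed(write_mb_per_sec: float, records_per_sec: float,
                  consumers: int, enhanced_fan_out: bool = False) -> int:
    """Estimate the minimum shard count for a workload (hypothetical helper)."""
    # Write side: both the byte limit and the record-count limit must hold.
    by_bytes = write_mb_per_sec / WRITE_MB_PER_SHARD
    by_records = records_per_sec / WRITE_RECORDS_PER_SHARD
    # Read side: standard consumers share 2 MB/s per shard, so each consumer
    # reading the full stream adds to the read load. With enhanced fan-out,
    # every consumer gets its own 2 MB/s per shard.
    if enhanced_fan_out:
        by_reads = write_mb_per_sec / READ_MB_PER_SHARD
    else:
        by_reads = write_mb_per_sec * consumers / READ_MB_PER_SHARD
    return max(1, math.ceil(max(by_bytes, by_records, by_reads)))


if __name__ == "__main__":
    # 3 MB/s in, 2,000 records/s, 4 applications reading the whole stream.
    print(shards_needed(3, 2000, consumers=4))                         # 6
    print(shards_needed(3, 2000, consumers=4, enhanced_fan_out=True))  # 3
```

With these example numbers, four standard consumers push the read side to six shards, while with enhanced fan-out the three shards needed for the write load are enough.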

Example question: A company has IoT sensors that monitor traffic patterns throughout a large city. The company wants to read and collect data from the sensors and perform aggregations on the data.

A solutions architect designs a solution in which the IoT devices are streaming to Amazon Kinesis Data Streams. Several applications are reading from the stream. However, several consumers are experiencing throttling and are periodically encountering a ReadProvisionedThroughputExceeded error.

Which actions should the solutions architect take to resolve this issue? (Choose three.)

A. Reshard the stream to increase the number of shards in the stream.

B. Use the Kinesis Producer Library (KPL). Adjust the polling frequency.

C. Use consumers with the enhanced fan-out feature.

D. Reshard the stream to reduce the number of shards in the stream.

E. Use an error retry and exponential backoff mechanism in the consumer logic.

F. Configure the stream to use dynamic partitioning.

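Answer: ACE

Explanation: Consumers of the Kinesis data stream are hitting ReadProvisionedThroughputExceeded, which means reads have reached the per-shard limit. Option A: resharding to add shards increases the total read capacity, which relieves the problem. Option B: the KPL is a producer library, and adjusting the polling frequency still does not lift the per-shard read limits. Option C: enhanced fan-out gives each consumer its own throughput, which relieves the problem. Option D: reducing the number of shards makes the problem worse. Option E: retries with exponential backoff let throttled reads eventually succeed, which mitigates the problem. Option F: dynamic partitioning is a Kinesis Data Firehose feature. So the answer is ACE.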
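Option E relies on retrying throttled reads with exponential backoff. Here is a minimal sketch; `ThrottledError` and the `read_batch` callable are hypothetical stand-ins for an SDK read and its throttling exception (in boto3 the error surfaces as `ProvisionedThroughputExceededException`), and "full jitter" is one common backoff strategy, not the only one.

```python
import random
import time
from typing import Callable, Iterator, Optional, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical stand-in for a read-throttling error from the service."""


def backoff_delays(max_retries: int, base: float = 0.1, cap: float = 5.0,
                   rng: Optional[random.Random] = None) -> Iterator[float]:
    """Yield 'full jitter' delays: uniform in [0, min(cap, base * 2**attempt)]."""
    rng = rng or random.Random()
    for attempt in range(max_retries):
        yield rng.uniform(0, min(cap, base * 2 ** attempt))


def call_with_backoff(read_batch: Callable[[], T], max_retries: int = 5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call read_batch(), retrying on ThrottledError with growing random delays."""
    for delay in backoff_delays(max_retries):
        try:
            return read_batch()
        except ThrottledError:
            sleep(delay)  # back off before the next attempt
    return read_batch()  # last attempt: a ThrottledError now propagates to the caller
```

The random jitter keeps many throttled consumers from retrying in lockstep; retries only smooth over bursts, so a sustained overload still calls for options A or C.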

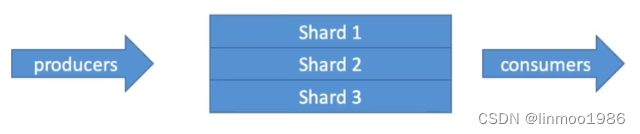

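1.1.1.2 Shards

- A stream can have one or more shards, depending on your needs

- Shards can be split (scale out) or merged (scale in)

- Batching is supported

- Records within a shard are ordered (useful for scenarios that require ordered processing)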
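Ordering holds per shard, and the shard a record lands in is decided by its partition key: Kinesis takes the MD5 hash of the key as a 128-bit integer and routes the record to the shard whose hash key range contains it. The sketch below reproduces that mapping under two assumptions: the digest is read as a big-endian integer, and the shards split the hash key range evenly (as when a stream is first created), so boundary values may differ slightly from real ranges. `shard_for_key` is a hypothetical helper, not an SDK function.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit value


def shard_for_key(partition_key: str, shard_count: int) -> int:
    """Return the index of the shard that owns partition_key.

    Assumes evenly split hash key ranges; after uneven splits or merges you
    would look up each shard's actual HashKeyRange instead.
    """
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_key = int.from_bytes(digest, byteorder="big")
    return hash_key * shard_count // HASH_SPACE


if __name__ == "__main__":
    for key in ["sensor-1", "sensor-2", "sensor-1"]:
        print(key, shard_for_key(key, shard_count=4))
```

Because the same key always maps to the same shard, records from one device keep their relative order; records with different keys may land on different shards and have no ordering guarantee between them.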

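1.1.1.3 Producers and Consumers

- Kinesis Producers

1) AWS SDK: for a single, simple producer

2) KPL (Kinesis Producer Library): the best fit when your own application needs to send data; it supports C++ and Java. The KPL writes to Kinesis Data Streams, and that stream can in turn be configured as the source of a Kinesis Data Firehose delivery stream

3) Kinesis Agent: mostly used for log collection; the Kinesis Agent can also send data directly to Kinesis Data Firehose (note: exam questions present different ways of sending data, so choose based on the scenario)

- Kinesis Consumers

1) AWS SDK: for a single consumer

2) Lambda: through an event source mapping

3) KCL (Kinesis Client Library): supports multiple consumers reading the stream in parallel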
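For producers written with the AWS SDK, grouping records into PutRecords calls cuts request overhead (the KPL does batching and aggregation for you). The hypothetical helper `batch_records` below splits `(partition_key, data)` pairs into batches that respect the documented PutRecords limits: 500 records and 5 MiB per request, and 1 MiB per record including the partition key. The SDK call is left as a comment because it needs AWS credentials; `my-stream` is a placeholder name.

```python
from typing import Iterable, Iterator

MAX_RECORDS_PER_CALL = 500
MAX_BYTES_PER_CALL = 5 * 1024 * 1024
MAX_BYTES_PER_RECORD = 1024 * 1024


def batch_records(records: Iterable[tuple[str, bytes]]) -> Iterator[list[dict]]:
    """Group (partition_key, data) pairs into PutRecords-sized batches."""
    batch: list[dict] = []
    batch_bytes = 0
    for key, data in records:
        size = len(data) + len(key.encode("utf-8"))
        if size > MAX_BYTES_PER_RECORD:
            raise ValueError(f"record for key {key!r} exceeds 1 MiB")
        # Start a new batch if adding this record would break either limit.
        if batch and (len(batch) == MAX_RECORDS_PER_CALL
                      or batch_bytes + size > MAX_BYTES_PER_CALL):
            yield batch
            batch, batch_bytes = [], 0
        batch.append({"Data": data, "PartitionKey": key})
        batch_bytes += size
    if batch:
        yield batch

# With boto3, each batch would be sent as:
#   kinesis.put_records(StreamName="my-stream", Records=batch)
# then check FailedRecordCount in the response and resend only failed entries.
```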

1.1.2 Kinesis Data Firehose

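Amazon Kinesis Data Firehose is a fully managed service for delivering real-time streaming data to destinations such as Amazon Simple Storage Service (Amazon S3), Amazon Redshift, Amazon OpenSearch Service, Amazon OpenSearch Serverless, Splunk, and any custom HTTP endpoint or HTTP endpoints owned by supported third-party service providers, including Datadog, Dynatrace, LogicMonitor, MongoDB, New Relic, Coralogix, and Elastic.

1.1.2.1 Basic Features

- It is serverless and scales automatically

- It provides **near-real-time** delivery; the latency depends on the buffer size and buffer interval

- It can deliver data to Amazon S3, Amazon Redshift, OpenSearch (formerly Elasticsearch), Splunk, and more

- It supports transformations such as format conversion

- If you need real-time processing of the data in Kinesis Data Streams, Firehose is not an option; use Lambda instead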
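"Near real time" comes from buffering: Firehose delivers a batch when either the buffer size or the buffer interval is reached, whichever comes first. The toy simulator below models that rule with hypothetical thresholds; it is an illustration of the behavior, not Firehose's implementation, and for simplicity it records a time-based flush at the moment it is checked.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class BufferSimulator:
    """Toy model of 'flush on size OR interval, whichever comes first'."""
    size_limit_bytes: int
    interval_seconds: float
    flushes: List[Tuple[float, int, int]] = field(default_factory=list)  # (time, records, bytes)
    _records: int = 0
    _bytes: int = 0
    _opened_at: Optional[float] = None

    def _flush(self, now: float) -> None:
        if self._records:
            self.flushes.append((now, self._records, self._bytes))
        self._records, self._bytes, self._opened_at = 0, 0, None

    def tick(self, now: float) -> None:
        """Flush if the oldest buffered record has waited the full interval."""
        if self._opened_at is not None and now - self._opened_at >= self.interval_seconds:
            self._flush(now)

    def put(self, now: float, record: bytes) -> None:
        """Buffer one record, flushing first on time and then on size."""
        self.tick(now)
        if self._opened_at is None:
            self._opened_at = now
        self._records += 1
        self._bytes += len(record)
        if self._bytes >= self.size_limit_bytes:
            self._flush(now)
```

The trade-off shows up directly: a larger buffer means fewer, bigger objects at the destination, but records can wait up to the full interval before delivery.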

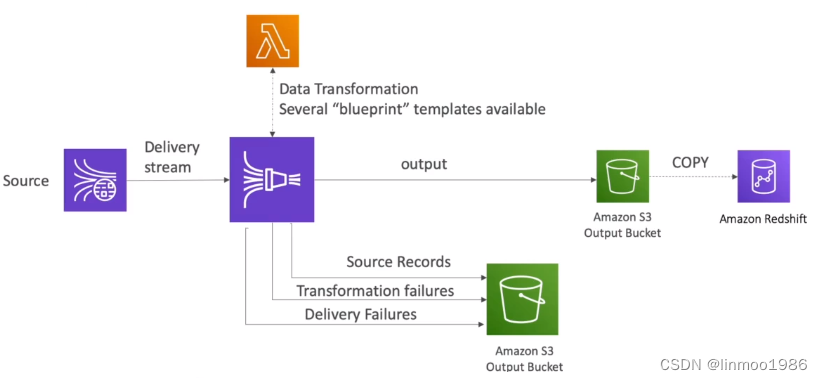

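1.1.2.2 Typical Architecture

- Lambda transforms the data before it is delivered to S3, while a separate S3 location stores the records that failed processing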
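The transformation Lambda follows a fixed contract: Firehose invokes it with a batch of base64-encoded records, and the function must return every `recordId` with a `result` of `Ok`, `Dropped`, or `ProcessingFailed`; failed records are written to an S3 error location instead of the normal output. The handler below is a sketch of that contract; the JSON fields `device_id` and `temperature` are a hypothetical schema.

```python
import base64
import json


def lambda_handler(event, context):
    """Firehose data-transformation handler (sketch).

    Keeps only two fields of each JSON record and appends a newline so the
    objects written to S3 are line-delimited. Records that cannot be parsed
    are marked ProcessingFailed so Firehose routes them to the error output.
    """
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            slim = {"device_id": payload["device_id"],
                    "temperature": payload["temperature"]}
            data = base64.b64encode((json.dumps(slim) + "\n").encode()).decode()
            output.append({"recordId": record["recordId"], "result": "Ok", "data": data})
        except (ValueError, KeyError, TypeError):
            # Return the original bytes so the failed record can be inspected later.
            output.append({"recordId": record["recordId"],
                           "result": "ProcessingFailed", "data": record["data"]})
    return {"records": output}
```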

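1.1.2.3 Key Differences Between Firehose and Data Streams

- Firehose is near-real-time; Data Streams is real-time

- Firehose does not store data: once the buffer interval or size is reached, the data is flushed to the destination and no longer exists in Firehose; Data Streams retains data for its retention period

- Firehose is serverless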

Example question: A company has an on-premises monitoring solution using a PostgreSQL database for persistence of events. The database is unable to scale due to heavy ingestion and it frequently runs out of storage.

The company wants to create a hybrid solution and has already set up a VPN connection between its network and AWS. The solution should include the following attributes:

– Managed AWS services to minimize operational complexity.

– A buffer that automatically scales to match the throughput of data and requires no ongoing administration.

– A visualization tool to create dashboards to observe events in near-real time.

– Support for semi-structured JSON data and dynamic schemas.

Which combination of components will enable the company to create a monitoring solution that will satisfy these requirements? (Choose two.)

A. Use Amazon Kinesis Data Firehose to buffer events. Create an AWS Lambda function to process and transform events.

B. Create an Amazon Kinesis Data Stream to buffer events. Create an AWS Lambda function to process and transform events.

C. Configure an Amazon Aurora PostgreSQL DB cluster to receive events. Use Amazon QuickSight to read from the database and create near-real-time visualizations and dashboards.

D. Configure Amazon Elasticsearch Service (Amazon ES) to receive events. Use the Kibana endpoint deployed with Amazon ES to create near-real-time visualizations and dashboards.

E. Configure an Amazon Neptune DB instance to receive events. Use Amazon QuickSight to read from the database and create near-real-time visualizations and dashboards.

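Answer: AD

Explanation: Key words: minimize operational complexity, create dashboards, near-real time, semi-structured JSON. For a near-real-time buffer that needs no administration, use Kinesis Data Firehose rather than Data Streams (which requires shard management), so choose A. For querying semi-structured JSON, Elasticsearch is the best fit (note: Amazon ES has since been renamed Amazon OpenSearch Service, with OpenSearch Dashboards replacing Kibana). So the final answer is AD.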

Example question: A company has developed a hybrid solution between its data center and AWS. The company uses Amazon VPC and Amazon EC2 instances that send application logs to Amazon CloudWatch. The EC2 instances read data from multiple relational databases that are hosted on premises.

The company wants to monitor which EC2 instances are connected to the databases in near-real time. The company already has a monitoring solution that uses Splunk on premises. A solutions architect needs to determine how to send networking traffic to Splunk.

How should the solutions architect meet these requirements?

A. Enable VPC flow logs, and send them to CloudWatch. Create an AWS Lambda function to periodically export the CloudWatch logs to an Amazon S3 bucket by using the pre-defined export function. Generate ACCESS_KEY and SECRET_KEY AWS credentials. Configure Splunk to pull the logs from the S3 bucket by using those credentials.

B. Create an Amazon Kinesis Data Firehose delivery stream with Splunk as the destination. Configure a pre-processing AWS Lambda function with a Kinesis Data Firehose stream processor that extracts individual log events from records sent by CloudWatch Logs subscription filters. Enable VPC flow logs, and send them to CloudWatch. Create a CloudWatch Logs subscription that sends log events to the Kinesis Data Firehose delivery stream.

C. Ask the company to log every request that is made to the databases along with the EC2 instance IP address. Export the CloudWatch logs to an Amazon S3 bucket. Use Amazon Athena to query the logs grouped by database name. Export Athena results to another S3 bucket. Invoke an AWS Lambda function to automatically send any new file that is put in the S3 bucket to Splunk.

D. Send the CloudWatch logs to an Amazon Kinesis data stream with Amazon Kinesis Data Analytics for SQL Applications. Configure a 1-minute sliding window to collect the events. Create a SQL query that uses the anomaly detection template to monitor any networking traffic anomalies in near-real time. Send the result to an Amazon Kinesis Data Firehose delivery stream with Splunk as the destination.

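Answer: B

Explanation: The requirement is to monitor which EC2 instances connect to the databases and send that network traffic data from CloudWatch to the on-premises Splunk (a data analytics platform). Key word: near-real time. Because the data is the VPC traffic of all the EC2 instances, the individual log events need to be extracted and filtered, and Kinesis Data Firehose plus a Lambda processor is the best fit; Firehose also supports Splunk as a native destination. So choose B.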
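Option B's Lambda processor has a specific job: CloudWatch Logs delivers subscription data to Firehose gzip-compressed, with several log events bundled in one JSON envelope that has `messageType` and `logEvents` fields. The sketch decompresses each record, drops `CONTROL_MESSAGE` records (sent only to check that the destination is reachable), and emits one line per log event. This is roughly what the AWS-provided CloudWatch Logs processor blueprint does, but treat it as an illustration, not that blueprint's code.

```python
import base64
import gzip
import json


def extract_log_events(firehose_record_data: str) -> list[str]:
    """Decode one Firehose record that came from a CloudWatch Logs subscription."""
    payload = json.loads(gzip.decompress(base64.b64decode(firehose_record_data)))
    if payload.get("messageType") != "DATA_MESSAGE":
        return []  # CONTROL_MESSAGE: a reachability check, nothing to deliver
    return [event["message"] for event in payload["logEvents"]]


def lambda_handler(event, context):
    output = []
    for record in event["records"]:
        messages = extract_log_events(record["data"])
        if not messages:
            output.append({"recordId": record["recordId"], "result": "Dropped"})
            continue
        joined = "".join(m + "\n" for m in messages)  # one flow-log line per event
        output.append({"recordId": record["recordId"], "result": "Ok",
                       "data": base64.b64encode(joined.encode()).decode()})
    return {"records": output}
```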

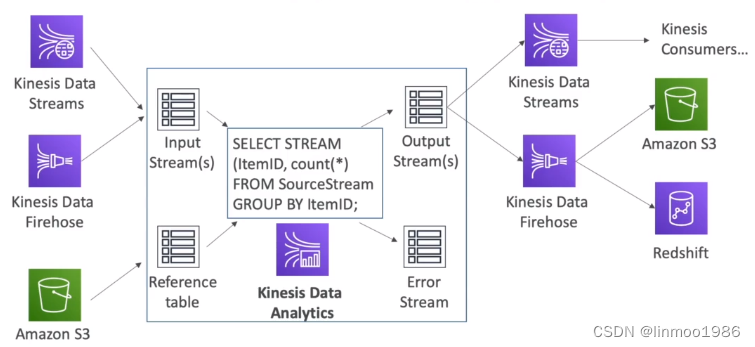

1.1.3 Kinesis Data Analytics

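With Amazon Kinesis Data Analytics for SQL Applications, you can process and analyze streaming data using standard SQL. The service lets you quickly author and run powerful SQL code against streaming sources to perform time-series analytics, feed real-time dashboards, and create real-time metrics. You can think of it simply as a managed Flink framework (the Flink-based service has since been renamed Amazon Managed Service for Apache Flink).

1.1.3.1 Basic Features

- Serverless and scales automatically

- Requires permissions on both the source and the destination

- Computation can be written in SQL or Flink

- Supports schema discovery

- Can use Lambda for preprocessing

1.1.3.2 Typical Architecture

- Basic Kinesis Data Analytics application architecture

1.1.3.3 Use Cases

- Streaming ETL

- Generating metrics, such as leaderboards

- Responsive (real-time) analytics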
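Streaming metrics such as leaderboards usually come from windowed aggregation. In Kinesis Data Analytics this would be a SQL or Flink query; the Python sketch below only illustrates a tumbling window (fixed-size, non-overlapping) over made-up `(timestamp, player, points)` events and is not the service's API.

```python
from collections import Counter, defaultdict


def tumbling_window_top(events, window_seconds: int, top_n: int = 3):
    """Group (timestamp, player, points) events into fixed windows and rank players.

    Returns {window_start: [(player, total_points), ...]} sorted by points.
    """
    windows = defaultdict(Counter)
    for ts, player, points in events:
        window_start = int(ts // window_seconds) * window_seconds  # bucket by window
        windows[window_start][player] += points
    return {start: counts.most_common(top_n) for start, counts in sorted(windows.items())}


if __name__ == "__main__":
    events = [(1, "alice", 10), (5, "bob", 30), (59, "alice", 25), (61, "bob", 5), (90, "carol", 7)]
    print(tumbling_window_top(events, window_seconds=60, top_n=2))
```

Each event belongs to exactly one window, so every window's leaderboard can be emitted as soon as that window closes.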

1.1.4 Kinesis Video Streams

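Unlike the three services above, which are usually combined for stream data processing, Kinesis Video Streams is a tool for real-time video. Kinesis Video Streams streams live video from devices to the AWS Cloud, or lets you build applications for real-time video processing or batch-oriented video analytics.

1.1.4.1 Basic Features

- One stream per device (or producer)

- Producers can be cameras, body-worn cameras, smartphones, and so on

- Producers can also send data with the Kinesis Video Streams Producer Library

- Kinesis Video Streams stores data in S3 under the hood, but you cannot read that data from S3 yourself

- Consumers can use the Kinesis Video Streams Parser Library to consume data

- Consumers can use Rekognition for face recognition

1.1.4.2 Typical Architecture

- Face recognition on video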

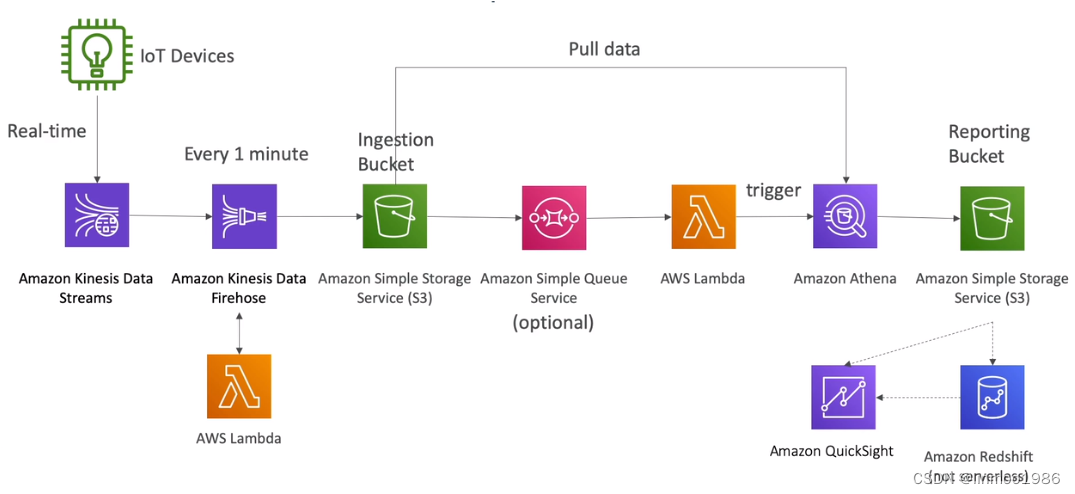

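1.1.5 Typical Streaming Data Architectures

- Processing streams with the Kinesis family

- Cost difference between a traditional architecture and a Kinesis architecture for stream processing

- Comparison of different stream processing options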

1.2 Amazon Redshift

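Amazon Redshift is a fully managed, petabyte-scale data warehouse service in the cloud. Amazon Redshift Serverless lets you access and analyze data without any of the configuration of a provisioned data warehouse. Note: Redshift was originally a provisioned service billed per node, not serverless; Serverless is now the recommended option. Provisioned clusters are billed by node (and storage), whereas Serverless bills for the compute capacity used, measured in Redshift Processing Units (RPUs), which also removes node management.

Put simply, Redshift is an MPP data warehouse, similar to open-source products such as ClickHouse. Unlike relational databases it uses columnar storage and is used mainly for OLAP, but it keeps relational concepts such as tables, columns, and SQL, so it is easy to pick up.

1.2.1 Basic Features

- Redshift is used mainly for OLAP, that is, data analytics

- For analytical queries it performs far better than ordinary relational databases, and it scales to petabytes of data

- Columnar storage; a typical MPP architecture

- Uses SQL and integrates with reporting tools such as QuickSight or Tableau

- Data sources include S3, Kinesis Data Firehose, DynamoDB, and more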
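A toy model shows why columnar storage suits OLAP: an aggregate over one column only has to read that column. The sketch counts the values touched when summing one column in a row layout versus a column layout, using a made-up table; real Redshift storage adds per-column compression and block-level zone maps on top of this idea.

```python
def to_columns(rows: list[dict]) -> dict[str, list]:
    """Convert a row-oriented table into a column-oriented one."""
    return {col: [row[col] for row in rows] for col in rows[0]}


def sum_row_store(rows: list[dict], col: str) -> tuple[float, int]:
    """Sum one column from a row store: every field of every row is read."""
    total, values_read = 0.0, 0
    for row in rows:
        values_read += len(row)  # the whole row is fetched from storage
        total += row[col]
    return total, values_read


def sum_column_store(columns: dict[str, list], col: str) -> tuple[float, int]:
    """Sum one column from a column store: only that column is read."""
    values = columns[col]
    return float(sum(values)), len(values)


if __name__ == "__main__":
    rows = [{"order_id": i, "region": "eu", "amount": float(i), "note": "n/a"}
            for i in range(1, 1001)]
    print(sum_row_store(rows, "amount"))                 # (500500.0, 4000)
    print(sum_column_store(to_columns(rows), "amount"))  # (500500.0, 1000)
```

With four columns the row layout reads four times as many values for the same answer; on wide analytics tables the gap is much larger.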

Example question: A company runs an application on AWS. The company curates data from several different sources. The company uses proprietary algorithms to perform data transformations and aggregations. After the company performs ETL processes, the company stores the results in Amazon Redshift tables. The company sells this data to other companies. The company downloads the data as files from the Amazon Redshift tables and transmits the files to several data customers by using FTP. The number of data customers has grown significantly. Management of the data customers has become difficult.

The company will use AWS Data Exchange to create a data product that the company can use to share data with customers. The company wants to confirm the identities of the customers before the company shares data. The customers also need access to the most recent data when the company publishes the data.

Which solution will meet these requirements with the LEAST operational overhead?

A. Use AWS Data Exchange for APIs to share data with customers. Configure subscription verification. In the AWS account of the company that produces the data, create an Amazon API Gateway Data API service integration with Amazon Redshift. Require the data customers to subscribe to the data product.

B. In the AWS account of the company that produces the data, create an AWS Data Exchange datashare by connecting AWS Data Exchange to the Redshift cluster. Configure subscription verification. Require the data customers to subscribe to the data product.

C. Download the data from the Amazon Redshift tables to an Amazon S3 bucket periodically. Use AWS Data Exchange for S3 to share data with customers. Configure subscription verification. Require the data customers to subscribe to the data product.

D. Publish the Amazon Redshift data to an Open Data on AWS Data Exchange. Require the customers to subscribe to the data product in AWS Data Exchange. In the AWS account of the company that produces the data, attach IAM resource-based policies to the Amazon Redshift tables to allow access only to verified AWS accounts.

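Answer: B

Explanation: The company wants to improve its current architecture: it must verify customer identities, and customers must be able to access the latest data. Sharing directly from Redshift is the most direct approach. Option A uses AWS Data Exchange for APIs, which requires creating an Amazon API Gateway integration with the Amazon Redshift Data API, a more complex solution than a datashare. Option C uses AWS Data Exchange for S3 and requires periodically downloading data from Amazon Redshift to Amazon S3, which is also more complex than a datashare. Option D publishes the data as Open Data on AWS Data Exchange, which does not let you configure subscription verification, meaning anyone can access the data. So choose B.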

1.2.2 Snapshots & DR

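- Redshift stores snapshots in S3

- Snapshots can be automated or manual

- A snapshot can be restored into a new cluster, even in a different Region once cross-Region snapshot copy is enabled (note: this can serve as a DR approach; if the exam asks about DR for Redshift, this is one option)

1.2.3 Advanced Features

- A provisioned cluster runs on leader and compute nodes, so Redshift suits sustained, long-term query workloads; for occasional queries, Athena is recommended (the exam tests the choice between the two)

- Redshift Spectrum: queries data in S3 directly through the Redshift engine without loading it into Redshift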

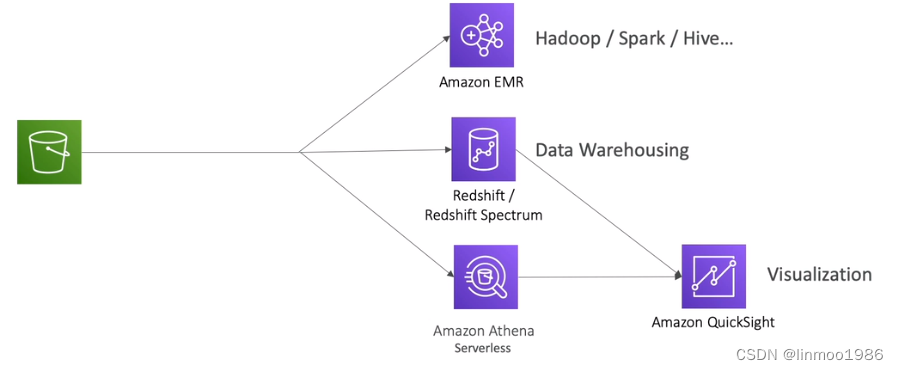

1.3 Amazon Athena

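Amazon Athena is an interactive query service that makes it easy to analyze data directly in Amazon Simple Storage Service (Amazon S3) using standard SQL. With a few actions in the AWS Management Console, you can point Athena at your data stored in Amazon S3 and begin using standard SQL to run ad hoc queries and get results in seconds.

1.3.1 Basic Features

- Queries data in S3 with SQL and writes the results back to S3

- Supports CSV, JSON, Parquet, and ORC

- Suited to occasional (ad hoc) queries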
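Athena suits occasional queries because you pay per query for the data scanned rather than for a running cluster. The sketch estimates that charge with the published billing rule (bytes scanned rounded up to the nearest MB, with a 10 MB minimum per query). The $5 per TB default is an assumption based on us-east-1 pricing, and treating 1 TB as 1024^4 bytes is a further simplification, so check current pricing for your Region.

```python
import math

MB = 1024 ** 2
TB = 1024 ** 4  # assumption: pricing "TB" treated as 1024**4 bytes


def athena_query_cost(bytes_scanned: int, usd_per_tb: float = 5.0) -> float:
    """Estimate one query's charge: bytes rounded up to whole MB, 10 MB minimum."""
    billed_mb = max(10, math.ceil(bytes_scanned / MB))
    return billed_mb * MB / TB * usd_per_tb


if __name__ == "__main__":
    gb = 1024 ** 3
    # The same query over 100 GB of CSV vs. 10 GB of Parquet (made-up sizes):
    print(round(athena_query_cost(100 * gb), 4))  # 0.4883
    print(round(athena_query_cost(10 * gb), 4))   # 0.0488
```

Because the bill scales with bytes scanned, columnar, compressed formats such as Parquet or ORC (and partitioning) cut cost as well as query time.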

Example question: A company is collecting a large amount of data from a fleet of IoT devices. Data is stored as Optimized Row Columnar (ORC) files in the Hadoop Distributed File System (HDFS) on a persistent Amazon EMR cluster. The company’s data analytics team queries the data by using SQL in Apache Presto deployed on the same EMR cluster. Queries scan large amounts of data, always run for less than 15 minutes, and run only between 5 PM and 10 PM.

The company is concerned about the high cost associated with the current solution. A solutions architect must propose the most cost-effective solution that will allow SQL data queries.

Which solution will meet these requirements?

A. Store data in Amazon S3. Use Amazon Redshift Spectrum to query data.

B. Store data in Amazon S3. Use the AWS Glue Data Catalog and Amazon Athena to query data.

C. Store data in EMR File System (EMRFS). Use Presto in Amazon EMR to query data.

D. Store data in Amazon Redshift. Use Amazon Redshift to query data.

答案:B

答案解析:题目要求做一些查询,且较少的一些查询,同时 most cost-effective 。因此对比Redshift Spectrum 、Athena 、Redshift来说,少量查询适合Athena 。因此选择B选项

- 查询还可以记录到CloudTrail(可以CloudWatch Alarm集成做警报功能)

- 场景应用场景:VPC Flow Logs、CloudTrail、ALB Access Logs、COst and Usage report等等

1.3.2 典型架构

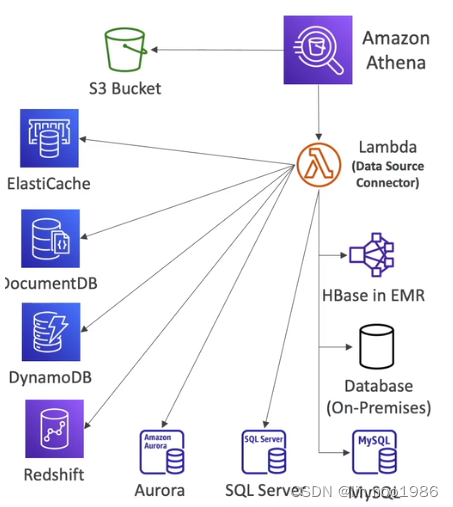

- 基于Athena的联邦查询

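1.4 Amazon QuickSight

Amazon QuickSight is a cloud-scale business intelligence (BI) service that you can use to deliver easy-to-understand insights to the people you work with, wherever they are. Amazon QuickSight connects to your data in the cloud and combines data from many different sources. Put simply, it is a BI tool similar to Tableau.

- Creates visual reports

- Integrates with Athena, Redshift, EMR, RDS, and more

- Provides visualization and data analysis, and lets you share dashboards or analyses with other users or groups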

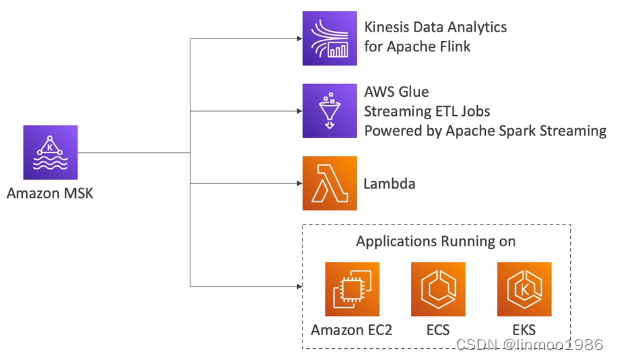

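1.5 Amazon MSK (Kafka)

Amazon Managed Streaming for Apache Kafka (Amazon MSK) is a fully managed service that lets you build and run applications that use Apache Kafka to process streaming data. It is very similar to Kinesis Data Streams, and the two are often compared.

1.5.1 Basic Features

- Can replace Kinesis Data Streams

- Equivalent to running Kafka on AWS, with Multi-AZ high availability

- Offers a serverless mode (MSK Serverless)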

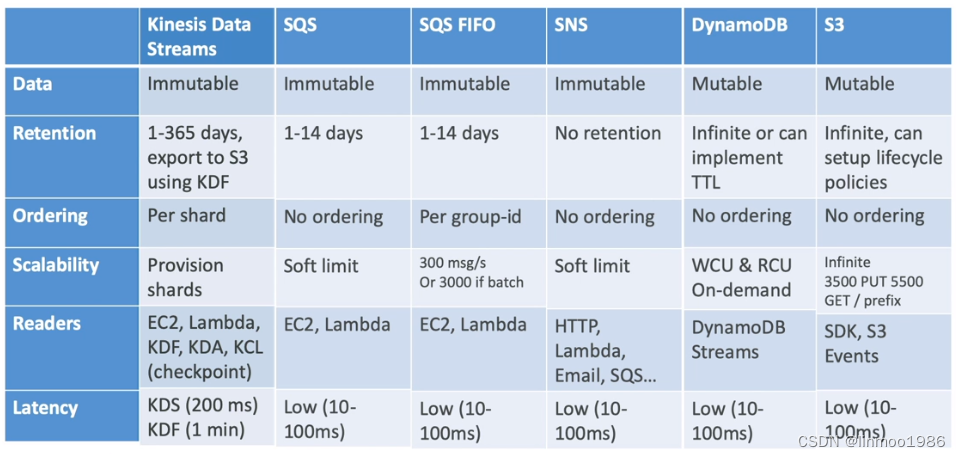

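1.5.2 Comparison with Kinesis Data Streams

- Kinesis Data Streams limits message size to 1 MB; MSK can be configured for larger messages

- Both partition data (shards vs. partitions)

- Kinesis Data Streams can add and remove shards (split and merge), but MSK can only add partitions

- Both support TLS encryption

(Note: the two differ very little, so both fit most scenarios. If you already run Kafka in an on-premises data center, you can migrate it directly to MSK on AWS. However, exam scenarios often ask you to choose between these two streaming services and AWS IoT Core; if the devices use the MQTT protocol, IoT Core is usually the answer.)

1.5.3 MSK Consumers

1.6 Amazon EMR

Amazon EMR is a managed cluster platform that simplifies running big data frameworks, such as Apache Hadoop and Apache Spark, on AWS to process and analyze vast amounts of data. Put simply, it is a Hadoop cluster dedicated to big data analytics.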

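1.6.1 Basic Features

- Similar to Hadoop

- Clusters run on EC2 instances or on an EKS cluster

- Supports Spark, HBase, Flink, and other big data frameworks

- Can scale automatically based on CloudWatch metrics

- Use cases: data processing, machine learning, web indexing, big data, and more

- Three kinds of underlying storage: EMRFS (backed by S3) is durable long-term storage but slower, while HDFS and the local file system are ephemeral but faster

- Two cluster configuration types:

1) Uniform instance groups: all instances in a group share the same configuration

2) Instance fleets: instances can mix different instance types and purchasing options

1.6.2 EMR Node Cost Options

- A cluster consists of master, core, and task nodes

- On-Demand Instances: when data volume and compute needs are unpredictable

- Reserved Instances: when compute and data volumes are well known and the cluster runs long-term

- Spot Instances: for temporary workloads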

Example question: A solutions architect needs to review the design of an Amazon EMR cluster that is using the EMR File System (EMRFS). The cluster performs tasks that are critical to business needs. The cluster is running Amazon EC2 On-Demand Instances at all times for all task, master, and core nodes. The EMR tasks run each morning, starting at 1:00 AM. and take 6 hours to finish running. The amount of time to complete the processing is not a priority because the data is not referenced until late in the day.

The solutions architect must review the architecture and suggest a solution to minimize the compute costs.

Which solution should the solutions architect recommend to meet these requirements?

A. Launch all task, master, and core nodes on Spot Instances in an instance fleet. Terminate the cluster, including all instances, when the processing is completed.

B. Launch the master and core nodes on On-Demand Instances. Launch the task nodes on Spot Instances in an instance fleet. Terminate the cluster, including all instances, when the processing is completed. Purchase Compute Savings Plans to cover the On-Demand Instance usage.

C. Continue to launch all nodes on On-Demand Instances. Terminate the cluster, including all instances, when the processing is completed. Purchase Compute Savings Plans to cover the On-Demand Instance usage

D. Launch the master and core nodes on On-Demand Instances. Launch the task nodes on Spot Instances in an instance fleet. Terminate only the task node instances when the processing is completed. Purchase Compute Savings Plans to cover the On-Demand Instance usage.

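Answer: D

Explanation: An EMR cluster runs a job every day and the goal is the lowest-cost architecture. The master and core nodes are fixed and the job runs daily, so On-Demand Instances covered by a Compute Savings Plan keep them stable while saving cost. The job runs for only part of each day and its completion time is not critical, so the task nodes can use Spot Instances; even if they are reclaimed, the work can be rerun, which also saves cost. So choose D.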

1.7 Amazon Managed Grafana

Amazon Managed Grafana is a secure, fully managed data visualization service that you can use to instantly query, correlate, and visualize operational metrics, logs, and traces from multiple sources. Amazon Managed Grafana makes it easy to deploy, operate, and scale Grafana, a widely deployed data visualization tool that is popular for its extensible data support.

- Creates logically isolated Grafana servers called workspaces

- Integrates with data sources that collect operational data

Example question: A company uses a Grafana data visualization solution that runs on a single Amazon EC2 instance to monitor the health of the company’s AWS workloads. The company has invested time and effort to create dashboards that the company wants to preserve. The dashboards need to be highly available and cannot be down for longer than 10 minutes. The company needs to minimize ongoing maintenance.

Which solution will meet these requirements with the LEAST operational overhead?

A. Migrate to Amazon CloudWatch dashboards. Recreate the dashboards to match the existing Grafana dashboards. Use automatic dashboards where possible.

B. Create an Amazon Managed Grafana workspace. Configure a new Amazon CloudWatch data source. Export dashboards from the existing Grafana instance. Import the dashboards into the new workspace.

C. Create an AMI that has Grafana pre-installed. Store the existing dashboards in Amazon Elastic File System (Amazon EFS). Create an Auto Scaling group that uses the new AMI. Set the Auto Scaling group’s minimum, desired, and maximum number of instances to one. Create an Application Load Balancer that serves at least two Availability Zones.

D. Configure AWS Backup to back up the EC2 instance that runs Grafana once each hour. Restore the EC2 instance from the most recent snapshot in an alternate Availability Zone when required.

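Answer: B

Explanation: The company wants to keep the dashboards of its EC2-hosted Grafana that monitors AWS services, with the LEAST operational overhead. AWS-managed Grafana is the best fit: it integrates seamlessly with the existing AWS services and requires no Grafana deployment, so it has the least operational overhead. So choose B.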

1.8 AWS Glue

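AWS Glue is a serverless data integration service that makes it easy for analytics users to discover, prepare, move, and integrate data from multiple sources. You can use it for analytics, machine learning, and application development. It also includes additional productivity and data ops tooling for authoring, running jobs, and implementing business workflows. Put simply, it is an ETL tool.

- It is a serverless service

- Its main function is ETL

- The AWS Glue Data Catalog contains references to data that is used as sources and targets of your extract, transform, and load (ETL) jobs in AWS Glue. In other words, it extracts metadata such as databases and tables and can be used for data discovery (note: Glue combined with Athena for data discovery appears often in exam questions)

- Use triggers to start jobs and crawlers: you can create Data Catalog objects called triggers, which start one or more crawlers or ETL jobs either manually or automatically. With triggers, you can design a chain of interdependent jobs and crawlers.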
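A chain of conditional triggers is effectively a dependency graph: a job starts only after the jobs or crawlers it watches have succeeded. The sketch models such a chain with Python's standard `graphlib` and derives a valid run order; the job and crawler names are hypothetical, and in Glue each dependency would be expressed as a conditional trigger rather than in code.

```python
from graphlib import TopologicalSorter

# Each key may start only after everything in its set has succeeded.
# Names are hypothetical; in Glue each dependency would be a conditional trigger.
dependencies: dict[str, set[str]] = {
    "crawl_raw_feed": set(),            # started by a scheduled or on-demand trigger
    "mask_pan_job": {"crawl_raw_feed"},
    "to_json_job": {"mask_pan_job"},
    "crawl_curated": {"to_json_job"},
}


def run_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which the chain can run; raises CycleError on a loop."""
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    print(run_order(dependencies))  # ['crawl_raw_feed', 'mask_pan_job', 'to_json_job', 'crawl_curated']
```

Modeling the chain as a graph makes a circular dependency (a trigger waiting on its own downstream job) easy to detect before wiring up the triggers.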

Example question: A financial services company receives a regular data feed from its credit card servicing partner. Approximately 5,000 records are sent every 15 minutes in plaintext, delivered over HTTPS directly into an Amazon S3 bucket with server-side encryption. This feed contains sensitive credit card primary account number (PAN) data. The company needs to automatically mask the PAN before sending the data to another S3 bucket for additional internal processing. The company also needs to remove and merge specific fields, and then transform the record into JSON format. Additionally, extra feeds are likely to be added in the future, so any design needs to be easily expandable.

Which solutions will meet these requirements?

A. Trigger an AWS Lambda function on file delivery that extracts each record and writes it to an Amazon SQS queue. Trigger another Lambda function when new messages arrive in the SQS queue to process the records, writing the results to a temporary location in Amazon S3. Trigger a final Lambda function once the SQS queue is empty to transform the records into JSON format and send the results to another S3 bucket for internal processing.

B. Trigger an AWS Lambda function on file delivery that extracts each record and writes it to an Amazon SQS queue. Configure an AWS Fargate container application to automatically scale to a single instance when the SQS queue contains messages. Have the application process each record, and transform the record into JSON format. When the queue is empty, send the results to another S3 bucket for internal processing and scale down the AWS Fargate instance.

C. Create an AWS Glue crawler and custom classifier based on the data feed formats and build a table definition to match. Trigger an AWS Lambda function on file delivery to start an AWS Glue ETL job to transform the entire record according to the processing and transformation requirements. Define the output format as JSON. Once complete, have the ETL job send the results to another S3 bucket for internal processing.

D. Create an AWS Glue crawler and custom classifier based upon the data feed formats and build a table definition to match. Perform an Amazon Athena query on file delivery to start an Amazon EMR ETL job to transform the entire record according to the processing and transformation requirements. Define the output format as JSON. Once complete, send the results to another S3 bucket for internal processing and scale down the EMR cluster.

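Answer: C

Explanation: The Glue crawler and custom classifier catalog the feed formats, and a Lambda function started on file delivery runs the Glue ETL job that masks the PAN, removes and merges fields, and writes JSON; adding a feed only requires another crawler or classifier. See: https://docs.aws.amazon.com/glue/latest/dg/trigger-job.html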
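The per-record logic of option C's Glue ETL job (mask, remove and merge fields, output JSON) is sketched below in plain Python; in Glue it would typically run inside a PySpark job. The field names `pan`, `first_name`, `last_name`, and `internal_code` are hypothetical because the question does not give the feed's schema, and keeping only the last four digits is an assumed masking convention.

```python
import json
import re


def mask_pan(pan: str) -> str:
    """Replace all but the last four digits with '*' (separators are dropped)."""
    digits = re.sub(r"\D", "", pan)
    return "*" * (len(digits) - 4) + digits[-4:]


def transform(record: dict) -> str:
    """Mask the PAN, remove and merge fields, and emit one JSON line."""
    out = dict(record)  # copy so the input record is not modified
    out["pan"] = mask_pan(out["pan"])
    out.pop("internal_code", None)                                         # remove a field
    out["cardholder"] = f"{out.pop('first_name')} {out.pop('last_name')}"  # merge two fields
    return json.dumps(out, sort_keys=True)
```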

1.9 AWS Data Pipeline

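AWS Data Pipeline is a web service that you can use to automate the movement and transformation of data. With AWS Data Pipeline, you can define data-driven workflows, so that tasks can be dependent on the successful completion of previous tasks. You define the parameters of your data transformations and AWS Data Pipeline enforces the logic that you've set up. Put simply, it is an orchestration tool for data processing pipelines. The service no longer receives new features and may be retired, so it is not expected to appear in future exams. Just remember what it does; here is a past exam question for reference: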

How can multiple compute resources be used on the same pipeline in AWS Data Pipeline?

A. You can use multiple compute resources on the same pipeline by defining multiple cluster objects in your definition file and associating the cluster to use for each activity via its runsOn field.

B. You can use multiple compute resources on the same pipeline by defining multiple cluster definition files

C. You can use multiple compute resources on the same pipeline by defining multiple clusters for your activity.

D. You cannot use multiple compute resources on the same pipeline.

Answer: A

Explanation: See: https://aws.amazon.com/datapipeline/faqs/#:~:text=Q%3A%20Can%20multiple%20compute%20resources,activity%20via%20its%20runsOn%20field.

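1.10 Big Data Architecture Summary

1.10.1 Data Integration Pipeline

1.10.2 Data Analytics Layer

1.10.3 Data Warehouse Comparison

- EMR

1) When you need big data frameworks such as Hive or Spark

2) You can choose the cluster size

3) You can choose instance purchasing options (Spot, On-Demand, Reserved, etc.)

4) Data can be stored temporarily (local disks) or long-term (EMRFS)

- Athena

1) Simple SQL queries over data in S3; Athena itself stores no data

2) Generally used for occasional queries

3) Especially well suited to querying logs, combined with CloudTrail for alerting

- Redshift

1) Excellent SQL query performance

2) Can query S3 directly through Spectrum

3) Suited to long-term reporting and BI queries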

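2 Machine Learning

Most of what follows relates to machine learning, including some audio and video processing tools. For most of these services, the exam only requires you to know which scenarios they fit.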

2.1 Amazon Rekognition

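With Amazon Rekognition, you can easily add image and video analysis to your applications. You just provide an image or video to the Amazon Rekognition API, and the service can:

- Identify labels (objects, concepts, people, scenes, and activities) and text

- Detect inappropriate content

- Provide highly accurate facial analysis, face comparison, and face search

Note: when an exam scenario involves detecting non-compliant content (images or videos), choose Rekognition.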

2.2 Amazon Transcribe

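Amazon Transcribe is an automatic speech recognition service that uses machine learning models to convert audio to text. You can use Amazon Transcribe as a standalone transcription service or add speech-to-text capabilities to any application.

- Converts audio to text using automatic speech recognition (ASR)

- Can redact sensitive personally identifiable information (PII)

- Supports transcription in many languages (translating the text is the job of Amazon Translate)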

2.3 Amazon Polly

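The opposite of Transcribe, Amazon Polly is a cloud service that turns text into lifelike speech. Remember two important features:

- Lexicons: you upload a lexicon that maps text to the pronunciation you want. For example, when the text contains "AWS", you can have it read as "Amazon Web Services"

- SSML: speech is generated from marked-up text (an SSML document), which supports speaking enhancements such as a 2-second pause or a breath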
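Both Polly features above are plain XML documents. The sketch builds an SSML string with a 2-second `<break>` and a Pronunciation Lexicon Specification (PLS) lexicon that aliases `AWS` to `Amazon Web Services`, then checks that both parse; the sentences are illustrative. With boto3 you would upload the lexicon with `put_lexicon(Name=..., Content=...)` and pass the SSML to `synthesize_speech(Text=..., TextType="ssml", ...)` together with `LexiconNames`.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

PLS_NS = "http://www.w3.org/2005/01/pronunciation-lexicon"


def build_ssml(first: str, second: str, pause: str = "2s") -> str:
    """Two sentences separated by an explicit pause (the pause value is trusted input)."""
    return f'<speak>{escape(first)}<break time="{pause}"/>{escape(second)}</speak>'


def build_alias_lexicon(aliases: dict[str, str], lang: str = "en-US") -> str:
    """PLS lexicon mapping each grapheme (written form) to a spoken alias."""
    entries = "".join(
        f"<lexeme><grapheme>{escape(g)}</grapheme><alias>{escape(a)}</alias></lexeme>"
        for g, a in aliases.items())
    return (f'<?xml version="1.0" encoding="UTF-8"?>'
            f'<lexicon version="1.0" xmlns="{PLS_NS}" alphabet="ipa" xml:lang="{lang}">'
            f"{entries}</lexicon>")


if __name__ == "__main__":
    print(build_ssml("Welcome to AWS.", "Let's begin."))
    print(build_alias_lexicon({"AWS": "Amazon Web Services"}))
```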

2.4 Amazon Translate

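Amazon Translate is a text translation service that uses advanced machine learning technologies to provide high-quality translation on demand. You only need to know what it does.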

2.5 Amazon Comprehend

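Amazon Comprehend uses natural language processing (NLP) to extract insights about the content of documents. It develops insights by recognizing the entities, key phrases, language, sentiments, and other common elements in a document. If you are not familiar with NLP, it is worth reading up on it first; that will make Comprehend easier to understand.

- Understands the meaning of text and extracts key information such as places, people, times, and events

- Can even tell whether the sentiment of the text is positive or negative

- Can tag text based on phrases you provide, and can also find documents by topic

- Common use cases: finding documents about a topic, discovering important information in text, and so on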

2.6 Amazon Comprehend Medical

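Amazon Comprehend Medical detects and returns useful information in unstructured clinical text such as physician's notes, discharge summaries, test results, and case notes. Amazon Comprehend Medical uses natural language processing (NLP) models to detect entities, which are textual references to medical information such as medical conditions, medications, or Protected Health Information (PHI). You only need to know that it reads unstructured clinical text and outputs structured health information.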

2.7 Amazon SageMaker

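Amazon SageMaker is a fully managed machine learning service. With Amazon SageMaker, data scientists and developers can quickly and easily build and train machine learning models, and then directly deploy them into a production-ready hosted environment. It provides an integrated Jupyter authoring notebook instance for easy access to your data sources for exploration and analysis, so you don't have to manage servers.

If you are new to machine learning, here is a brief explanation:

1) First you need a goal, for example predicting your exam score

2) Then you feed historical data into SageMaker: information about past candidates, such as age, study hours, and years of AWS experience, together with the scores they achieved

3) SageMaker trains a model that relates the dimensions you chose (age, study hours, years of AWS experience, etc.) to the score

4) You feed your own information into the model, and it computes the score you are likely to get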
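The four steps above are ordinary supervised learning. To make them concrete without SageMaker, here is an ordinary least-squares fit of score against a single feature (study hours) on made-up data; SageMaker would train a richer model on more features, but the train-then-predict flow is the same.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Step 2: historical data (made up): study hours -> exam score.
study_hours = [20, 40, 60, 80, 100]
scores = [620, 680, 740, 800, 860]

# Step 3: "train" the model, here just a straight line.
slope, intercept = fit_line(study_hours, scores)


# Step 4: predict a new candidate's score from their study hours.
def predict(hours: float) -> float:
    return slope * hours + intercept


if __name__ == "__main__":
    print(predict(70))  # 770.0
```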

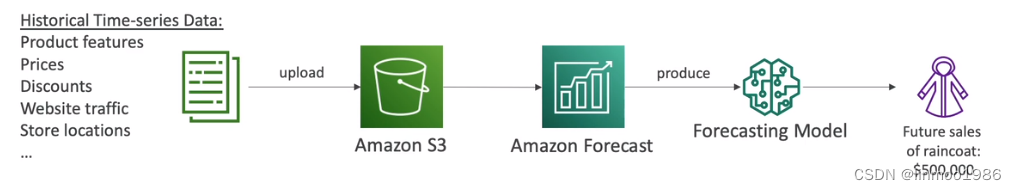

2.8 Amazon Forecast

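Amazon Forecast is a fully managed service that uses statistical and machine learning algorithms to deliver highly accurate time-series forecasts. Based on the same technology used for time-series forecasting at Amazon.com, Forecast provides state-of-the-art algorithms to predict future time-series data based on historical data, and requires no machine learning experience. Put simply, it is a ready-to-use machine learning product that predicts the future from historical data (for example, financial or sales forecasting).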

2.9 Amazon Kendra

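Amazon Kendra is an intelligent search service that uses natural language processing and advanced machine learning algorithms to return specific answers to search questions from your data. Unlike traditional search, it has AI built in; loosely, you can think of it as similar to ChatGPT.

- Supports interactive, natural-language search

- Keeps improving through continuous (incremental) learning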

2.10 Amazon Personalize

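Amazon Personalize is a fully managed machine learning service that uses your data to generate item recommendations for your users. It can also generate user segments based on the users' affinity for certain items or item metadata. It is typically used for product recommendations, and you don't need to build or train models yourself; the service handles that for you.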

2.11 Amazon Textract

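Amazon Textract makes it easy to add document text detection and analysis to your applications. With Amazon Textract you can:

- Detect typed and handwritten text in a variety of documents, including financial reports, medical records, and tax forms.

- Extract text, forms, and tables from documents with structured data, using the Amazon Textract Document Analysis API.

- Process invoices and receipts with the AnalyzeExpense API.

- Process identity documents such as driver's licenses and passports issued by the U.S. government, using the AnalyzeID API.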

2.12 Amazon Lex

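Amazon Lex V2 is an AWS service for building conversational interfaces for applications using voice and text. Amazon Lex V2 provides the deep functionality and flexibility of natural language understanding (NLU) and automatic speech recognition (ASR), so you can build highly engaging user experiences with lifelike, conversational interactions and create new categories of products. In short, it lets you quickly build conversational bots, for example chatbots and customer service agents.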

2.13 Amazon Connect

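Amazon Connect provides a unified contact experience across multiple communication channels, such as voice and chat. Administrators build the experience once to enable both voice and chat. Managers monitor and adjust queues from a single dashboard. Agents use a single interface to serve all customers. Put simply, it answers phone calls, recognizes speech, and integrates with downstream functions to take actions.

Example question: A company runs a customer service center that accepts calls and automatically sends all customers a managed, interactive, two-way experience survey by text message. The applications that support the customer service center run on machines that the company hosts in an on-premises data center. The hardware that the company uses is old, and the company is experiencing downtime with the system. The company wants to migrate the system to AWS to improve reliability.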

Which solution will meet these requirements with the LEAST ongoing operational overhead?

A. Use Amazon Connect to replace the old call center hardware. Use Amazon Pinpoint to send text message surveys to customers.

B. Use Amazon Connect to replace the old call center hardware. Use Amazon Simple Notification Service (Amazon SNS) to send text message surveys to customers.

C. Migrate the call center software to Amazon EC2 instances that are in an Auto Scaling group. Use the EC2 instances to send text message surveys to customers.

D. Use Amazon Pinpoint to replace the old call center hardware and to send text message surveys to customers.

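Answer: A

Explanation: The company wants to migrate its customer service center to AWS, so choose Amazon Connect. Amazon Pinpoint is a marketing automation and analytics service that lets you engage customers through different channels, such as email, SMS, push notifications, and voice. It helps you create personalized campaigns based on user behavior and lets you track user engagement and retention, and it supports the interactive two-way SMS that the survey requires. So choose A.

Example question: A company is building a call center by using Amazon Connect. The company’s operations team is defining a disaster recovery (DR) strategy across AWS Regions. The contact center has dozens of contact flows, hundreds of users, and dozens of claimed phone numbers.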

Which solution will provide DR with the LOWEST RTO?

A. Create an AWS Lambda function to check the availability of the Amazon Connect instance and to send a notification to the operations team in case of unavailability. Create an Amazon EventBridge rule to invoke the Lambda function every 5 minutes. After notification, instruct the operations team to use the AWS Management Console to provision a new Amazon Connect instance in a second Region. Deploy the contact flows, users, and claimed phone numbers by using an AWS CloudFormation template.

B. Provision a new Amazon Connect instance with all existing users in a second Region. Create an AWS Lambda function to check the availability of the Amazon Connect instance. Create an Amazon EventBridge rule to invoke the Lambda function every 5 minutes. In the event of an issue, configure the Lambda function to deploy an AWS CloudFormation template that provisions contact flows and claimed numbers in the second Region.

C. Provision a new Amazon Connect instance with all existing contact flows and claimed phone numbers in a second Region. Create an Amazon Route 53 health check for the URL of the Amazon Connect instance. Create an Amazon CloudWatch alarm for failed health checks. Create an AWS Lambda function to deploy an AWS CloudFormation template that provisions all users. Configure the alarm to invoke the Lambda function.

D. Provision a new Amazon Connect instance with all existing users and contact flows in a second Region. Create an Amazon Route 53 health check for the URL of the Amazon Connect instance. Create an Amazon CloudWatch alarm for failed health checks. Create an AWS Lambda function to deploy an AWS CloudFormation template that provisions claimed phone numbers. Configure the alarm to invoke the Lambda function.

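Answer: D

Explanation: The question asks for DR of Amazon Connect with the lowest RTO. Detecting the failure with a Route 53 health check and a CloudWatch alarm that triggers the failover automation gives a low RTO. Because the users and contact flows already exist in the second Region, the CloudFormation template only needs to provision the claimed phone numbers when a failure occurs, not the contact flows. So choose D.

2.14 Exam Summary

In the exam, machine learning content is not tested in depth; you only need to know the practical use case of each product, as in the table below: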

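| Product/Service | Function/Use case |
|---|---|
| Rekognition | Face recognition (image and video analysis) |
| Transcribe | Speech recognition (audio to text) |
| Polly | Text to speech, the opposite of Transcribe |
| Comprehend | NLP processing |
| SageMaker | ML model training (mainly for developers and data scientists) |
| Forecast | Forecasting models, often used for sales and financial forecasts |
| Kendra | AI-powered search engine, similar to ChatGPT |
| Personalize | Real-time personalized recommendations |
| Textract | Text extraction, including handwriting and identity documents |
| Lex | Chatbots |
| Connect | Cloud contact center |
| Comprehend Medical | Clinical document analysis |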
