ClickHouse在线Play:https://play.clickhouse.com,不用安装,可以直接网页体验

ClickHouse安装

ClickHouse只支持运行在linux上,我们使用最简单的docker安装

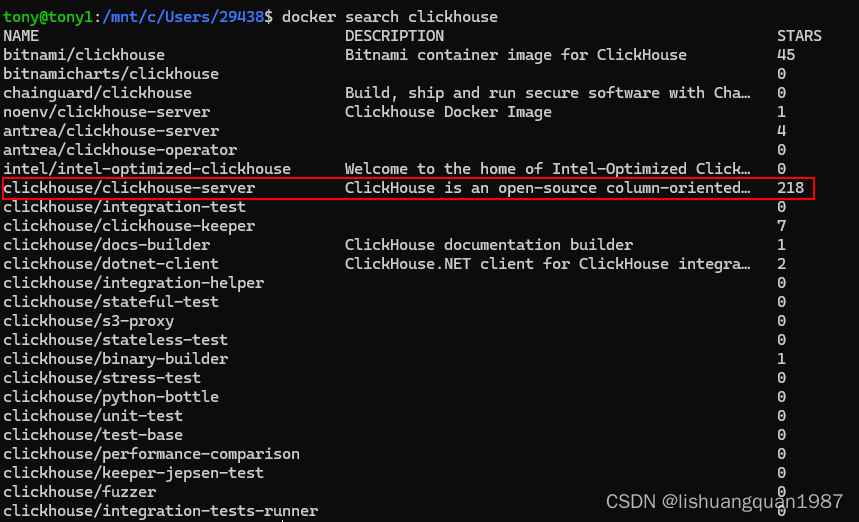

搜索可用的ClickHouse:

docker search clickhouse

拉取docker镜像:

docker pull clickhouse/clickhouse-server:latest

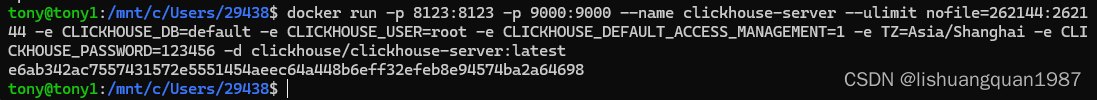

拉取后运行clickhouse-server

docker run -p 8123:8123 -p 9000:9000 --name clickhouse-server --ulimit nofile=262144:262144 -e CLICKHOUSE_DB=default -e TZ=Asia/Shanghai -d clickhouse/clickhouse-server:latest

连接ClickHouse

使用容器内部的clickhouse-client连接

查看容器ID:

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS

NAMES

e6ab342ac755 clickhouse/clickhouse-server:latest "/entrypoint.sh" 3 minutes ago Up 3 minutes 0.0.0.0:8123->8123/tcp, 0.0.0.0:9000->9000/tcp, 9009/tcp clickhouse-server

进入容器:

docker exec -it e6ab342ac755 bash

root@e6ab342ac755:/#

使用clickhouse-client连接clickhouse-server,只需要输入命令clickhouse-client即可

root@e6ab342ac755:/# clickhouse-client

ClickHouse client version 24.4.1.2088 (official build).

Connecting to localhost:9000 as user root.

Connected to ClickHouse server version 24.4.1.

Warnings:

* Linux transparent hugepages are set to "always". Check /sys/kernel/mm/transparent_hugepage/enabled

* Delay accounting is not enabled, OSIOWaitMicroseconds will not be gathered. You can enable it using `echo 1 > /proc/sys/kernel/task_delayacct` or by using sysctl.

e6ab342ac755 :)

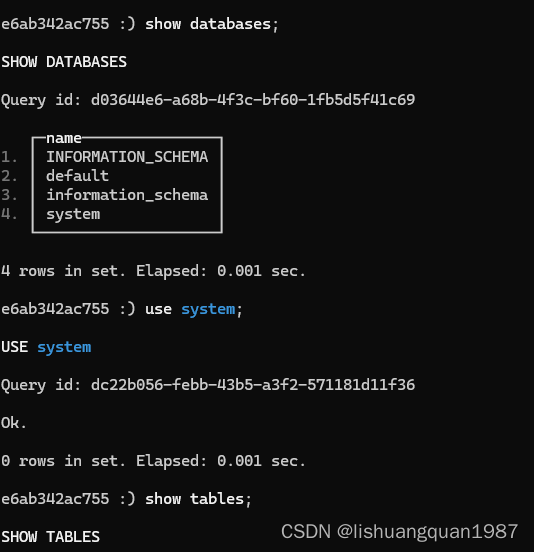

此时表示连接上了。可以进行一些查询操作:

e6ab342ac755 :) show databases;

SHOW DATABASES

Query id: d03644e6-a68b-4f3c-bf60-1fb5d5f41c69

┌─name───────────────┐

1. │ INFORMATION_SCHEMA │

2. │ default │

3. │ information_schema │

4. │ system │

└────────────────────┘

4 rows in set. Elapsed: 0.001 sec.

e6ab342ac755 :) use system;

USE system

Query id: dc22b056-febb-43b5-a3f2-571181d11f36

Ok.

0 rows in set. Elapsed: 0.001 sec.

e6ab342ac755 :) show tables;

SHOW TABLES

Query id: 6f9610da-12df-44f6-afc1-413d16ee8b4a

┌─name───────────────────────────┐

1. │ aggregate_function_combinators │

2. │ asynchronous_inserts │

3. │ asynchronous_loader │

4. │ asynchronous_metric_log │

5. │ asynchronous_metrics │

6. │ backups │

7. │ build_options │

8. │ certificates │

9. │ clusters │

10. │ collations │

11. │ columns │

12. │ contributors │

13. │ current_roles │

14. │ dashboards │

15. │ data_skipping_indices │

16. │ data_type_families │

17. │ database_engines │

18. │ databases │

19. │ detached_parts │

20. │ dictionaries │

21. │ disks │

22. │ distributed_ddl_queue │

23. │ distribution_queue │

24. │ dns_cache │

25. │ dropped_tables │

26. │ dropped_tables_parts │

27. │ enabled_roles │

28. │ errors │

29. │ events │

30. │ filesystem_cache │

31. │ filesystem_cache_settings │

32. │ formats │

33. │ functions │

34. │ generateSeries │

35. │ generate_series │

36. │ grants │

37. │ graphite_retentions │

38. │ jemalloc_bins │

39. │ kafka_consumers │

40. │ keywords │

41. │ licenses │

42. │ macros │

43. │ merge_tree_settings │

44. │ merges │

45. │ metric_log │

46. │ metrics │

47. │ models │

48. │ moves │

49. │ mutations │

50. │ mysql_binlogs │

51. │ named_collections │

52. │ numbers │

53. │ numbers_mt │

54. │ one │

55. │ part_moves_between_shards │

56. │ parts │

57. │ parts_columns │

58. │ privileges │

59. │ processes │

60. │ processors_profile_log │

61. │ projection_parts │

62. │ projection_parts_columns │

63. │ query_cache │

64. │ query_log │

65. │ quota_limits │

66. │ quota_usage │

67. │ quotas │

68. │ quotas_usage │

69. │ remote_data_paths │

70. │ replicas │

71. │ replicated_fetches │

72. │ replicated_merge_tree_settings │

73. │ replication_queue │

74. │ rocksdb │

75. │ role_grants │

76. │ roles │

77. │ row_policies │

78. │ s3queue │

79. │ scheduler │

80. │ schema_inference_cache │

81. │ server_settings │

82. │ settings │

83. │ settings_changes │

84. │ settings_profile_elements │

85. │ settings_profiles │

86. │ stack_trace │

87. │ storage_policies │

88. │ symbols │

89. │ table_engines │

90. │ table_functions │

91. │ tables │

92. │ time_zones │

93. │ trace_log │

94. │ user_directories │

95. │ user_processes │

96. │ users │

97. │ view_refreshes │

98. │ warnings │

99. │ zeros │

100. │ zeros_mt │

└────────────────────────────────┘

100 rows in set. Elapsed: 0.002 sec.

e6ab342ac755 :)

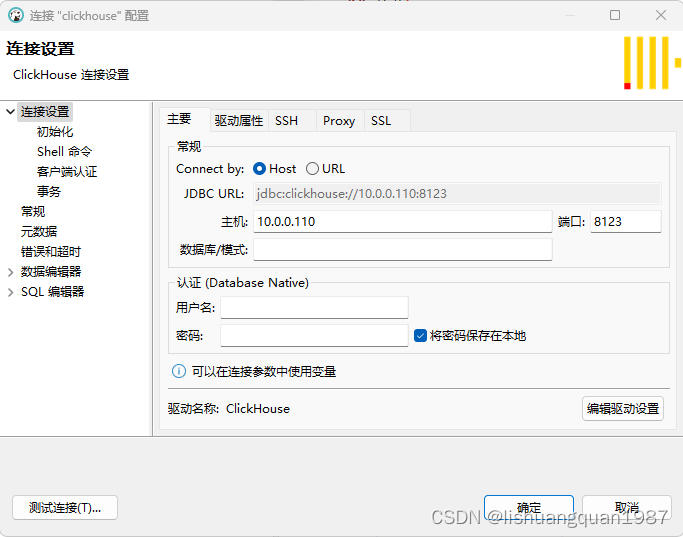

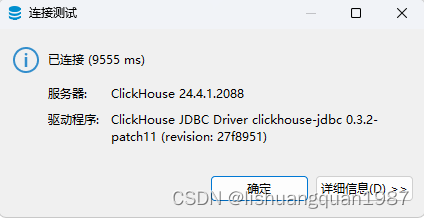

使用DBeaver连接clickhouse-server

由于上面运行docker的时候没有设定用户名和密码,所以连接不需要用户名和密码

编写C#程序写入数据

写入的数据为csv文件,与文章:TDengine写入2000万数据查询体验使用的数据相同。如果想要数据源,请参考它,去百度网盘获取。

C#连接ClickHouse我们使用ORM框架Sqlsugar.

Sqlsugar操作CH参考文档:https://www.donet5.com/home/Doc?typeId=2437

编写C#代码写入数据

// See https://aka.ms/new-console-template for more information

using SqlSugar.ClickHouse;

using SqlSugar;

using System.Diagnostics;

using System.Collections.Generic;

Console.WriteLine("Hello, World!");

InstanceFactory.CustomAssemblies =

new System.Reflection.Assembly[] { typeof(ClickHouseProvider).Assembly };

var connStr = "host=192.168.94.136;port=8123;user=default;password=;database=test";

SqlSugarClient db = new SqlSugarClient(new ConnectionConfig()

{

DbType = DbType.ClickHouse,

ConnectionString = connStr,

IsAutoCloseConnection = true

});

db.CodeFirst.InitTables(typeof(BatInfo));

string csvPath = "G:\\BatInfo_2021\\data.csv";

//写入

Stopwatch stopwatch = Stopwatch.StartNew();

using (var stream = new StreamReader(csvPath))

{

int count = 0;

int totalCount = 0;

const int batchCount = 500;

List<BatInfo> batInfos = new List<BatInfo>();

while (true)

{

var line = await stream.ReadLineAsync();

if (line == null) break;

count++;

totalCount++;

if (totalCount == 1) continue;//第1行舍弃

var strs = line.Split(',');

if (count >= 10000)

{

count = 0;

Console.WriteLine($"has read {totalCount} lines,ellapse time {stopwatch.ElapsedMilliseconds / (double)1000} seconds");

}

var batCodeStr = strs[0];

var loginTimeStr = strs[1];

var deviceCodeStr = strs[2];

var processIdStr = strs[3];

var flowIdStr = strs[4];

var batteryPos = strs[7];

var capacity1Str = strs[12];

var capacity2Str = strs[13];

var voltage1Str = strs[18];

var voltage2Str = strs[19];

var current1Str = strs[24];

var current2Str = strs[25];

var ocv1Str = strs[28];

var ocv2Str = strs[29];

var ir1Str = strs[33];

var ir2Str = strs[34];

var batIdStr = strs[71];

var batInfo = new BatInfo()

{

BatCode = batCodeStr,

BatId = ConvertToInt(batIdStr, 0),

BatPos = ConvertToInt(batteryPos, 0),

Capacity1 = ConvertToDouble(capacity1Str, 0),

Capacity2 = ConvertToDouble(capacity2Str, 0),

Current1 = ConvertToDouble(current1Str, 0),

Current2 = ConvertToDouble(current2Str, 0),

DeviceCode = deviceCodeStr,

FlowId = ConvertToInt(flowIdStr, 0),

IR1 = ConvertToDouble(ir1Str, 0),

IR2 = ConvertToDouble(ir2Str, 0),

LoginTime = DateTime.Parse(loginTimeStr),

OCV1 = ConvertToDouble(ocv1Str, 0),

OCV2 = ConvertToDouble(ocv2Str, 0),

ProcessId = ConvertToInt(processIdStr, 0),

Voltage1 = ConvertToDouble(voltage1Str, 0),

Voltage2 = ConvertToDouble(voltage2Str, 0),

};

batInfos.Add(batInfo);

if (batInfos.Count > batchCount)

{

try

{

//数据大批量插入用db.Insertable<BatInfo>(batInfos).ExecuteCommandAsync()不好,有时候会报错

var affectRow = await db.Fastest<BatInfo>().BulkCopyAsync(batInfos);

batInfos.Clear();

if (affectRow <= 0) { Console.WriteLine($"affect row:{affectRow}"); }

}

catch (Exception e)

{

Console.WriteLine($"Error:{e.Message}");

}

}

}

if (batInfos.Count > 0)

{

try

{

var affectRow = await db.Fastest<BatInfo>().BulkCopyAsync(batInfos);

batInfos.Clear();

if (affectRow <= 0) { Console.WriteLine($"affect row:{affectRow}"); }

}

catch (Exception e)

{

Console.WriteLine($"Error:{e.Message}");

}

}

}

Console.WriteLine($"finish,ellapse total seconds:{stopwatch.ElapsedMilliseconds / (double)1000}");

Console.WriteLine("finish");

Console.ReadLine();

double ConvertToDouble(string str, double defalutValue)

{

if (double.TryParse(str, out var v)) return v;

return defalutValue;

}

int ConvertToInt(string str, int defalutValue)

{

if (int.TryParse(str, out var v)) return v;

return defalutValue;

}

public class BatInfo

{

public string BatCode { get; set; }

public DateTime LoginTime { get; set; }

public string DeviceCode { get; set; }

public int ProcessId { get; set; }

public int FlowId { get; set; }

public int BatPos { get; set; }

public double Capacity1 { get; set; }

public double Capacity2 { get; set; }

public double Voltage1 { get; set; }

public double Voltage2 { get; set; }

public double Current1 { get; set; }

public double Current2 { get; set; }

public double OCV1 { get; set; }

public double OCV2 { get; set; }

public double IR1 { get; set; }

public double IR2 { get; set; }

[SugarColumn(IsPrimaryKey = true)]

public int BatId { get; set; }

}

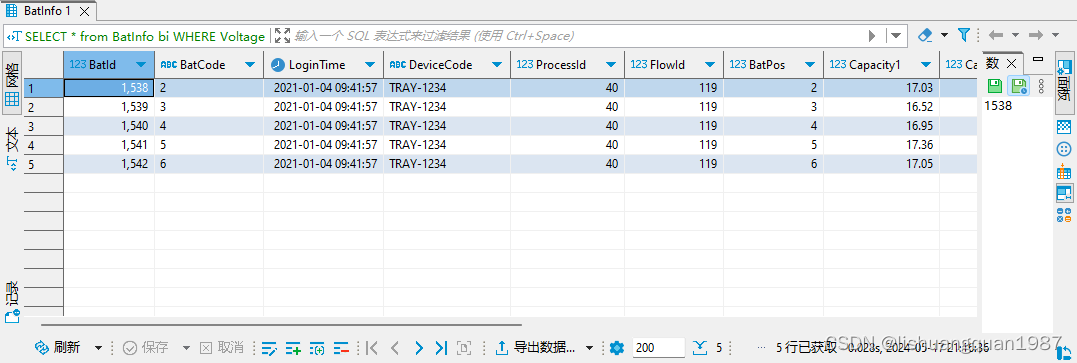

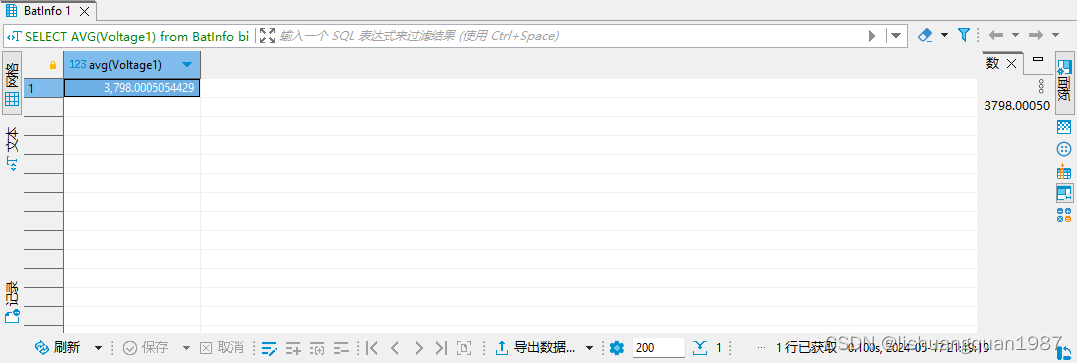

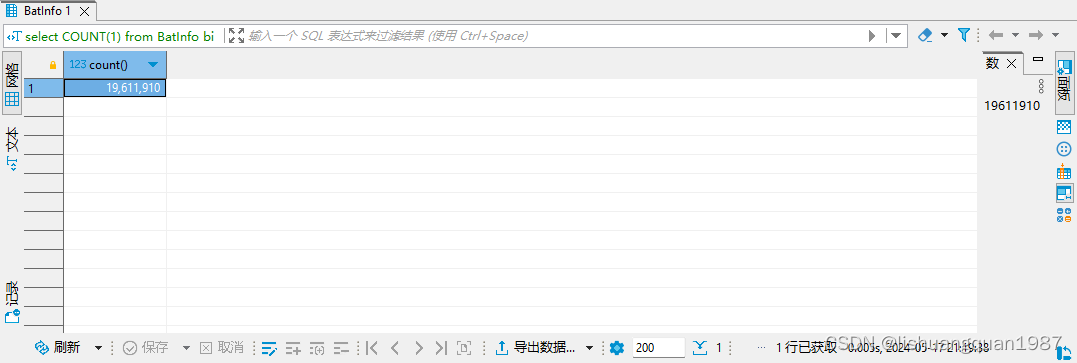

查询体验

SELECT * from BatInfo bi WHERE Voltage1>3.14 LIMIT 5

SELECT COUNT(1) from BatInfo WHERE BatCode ='111'

SELECT AVG(Voltage1) from BatInfo bi

select COUNT(1) from BatInfo bi

整体查询体验还是很不错的,下次再插入几亿数据再试试

2315

2315

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?