What is ChatDev?

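ChatDev is a virtual software development team simulator built on large language models such as GPT. It simulates the different roles in a software team (programmers, testers, designers and so on) and has these AI-driven roles talk and collaborate with one another to complete software development tasks. The project's goal is to explore how AI-simulated teamwork can automatically produce code, tests and documentation.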

The code used in this article is available at: download link.

- Download the source code

- Create a conda environment

```bash
conda create -n ChatDev_conda_env python=3.9 -y
conda activate ChatDev_conda_env
```

- Install the third-party libraries

```bash
pip3 install -r requirements.txt
```
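Before changing any code, it can help to confirm that the new environment actually has the packages this tutorial relies on. The helper below is an optional sketch, not part of ChatDev; the module names (openai, tiktoken, and dotenv, which the python-dotenv package provides) are assumptions based on the code used later in this article, so adjust the list to match requirements.txt.

```python
import importlib.util


def missing_modules(names):
    """Return the names from `names` that cannot be imported in this environment.

    Hypothetical helper: find_spec() locates a top-level module without importing it.
    """
    return [name for name in names if importlib.util.find_spec(name) is None]
```

For example, `missing_modules(["openai", "tiktoken", "dotenv"])` returns the modules that still need installing; an empty list means all of them are importable.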

Code changes

- Create camel/model_config.py to hold the LLM configuration

It mainly records each platform's name, base URL, supported API types, model names and token limits.

The format is as follows:

```python
# camel/model_config.py
MODELS = {
    "openai": {
        "base_url": "https://api.openai.com/v1",
        "api_types": ["openai"],
        "models": [
            {"name": "gpt-3.5-turbo", "num_tokens": 4096},
            {"name": "gpt-4", "num_tokens": 8192},
        ],
    },
    "deepseek": {
        "base_url": "https://api.deepseek.com",
        "api_types": ["openai"],
        "models": [
            {"name": "deepseek-chat", "num_tokens": 8192},
            {"name": "deepseek-reasoner", "num_tokens": 8192},
        ],
    },
    "qwen": {
        # DashScope's OpenAI-compatible endpoint
        "base_url": "https://dashscope.aliyuncs.com/compatible-mode/v1",
        "api_types": ["openai", "self"],
        "models": [
            {"name": "qwen1.5-110b-chat", "num_tokens": 8192},
            {"name": "qwen-plus", "num_tokens": 8192},
        ],
    },
}
```
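Because get_model_info() reads this dict by key, a typo such as "model" instead of "models" only shows up at runtime. The optional check below walks a MODELS-style dict and reports structural problems. The function name validate_models is hypothetical, and its rules (a non-empty name and a positive integer num_tokens per model) are assumptions drawn from the format above.

```python
def validate_models(models):
    """Return a list of human-readable problems found in a MODELS-style dict.

    Hypothetical helper; an empty list means every entry matches the expected format.
    """
    problems = []
    for platform, info in models.items():
        # Every platform needs these three keys
        for key in ("base_url", "api_types", "models"):
            if key not in info:
                problems.append(f"{platform}: missing '{key}'")
        for i, model in enumerate(info.get("models", [])):
            name = model.get("name")
            if not isinstance(name, str) or not name:
                problems.append(f"{platform}.models[{i}]: 'name' must be a non-empty string")
            tokens = model.get("num_tokens")
            if not isinstance(tokens, int) or tokens <= 0:
                problems.append(f"{platform}.models[{i}]: 'num_tokens' must be a positive int")
    return problems
```

From the ChatDev root, `validate_models(MODELS)` (after `from camel.model_config import MODELS`) should return an empty list.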

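- Create a .env file and set OPENAI_API_KEY

Top up your balance on the DeepSeek platform at https://platform.deepseek.com/usage, then generate an API key and copy it. The platform also hosts API documentation you can study.

Paste the copied key into .env as `OPENAI_API_KEY=<your key>`. Keys usually start with sk-.

- Load OPENAI_API_KEY in run.py

```python
from dotenv import load_dotenv

# Read KEY=VALUE pairs from .env into os.environ
load_dotenv()
```

Put these lines at the top of run.py, before the ChatDev imports, so the key is already in the environment when those modules load. If dotenv is not installed, install it with pip: `pip install python-dotenv`.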
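If you would rather avoid the extra dependency, or want to see what load_dotenv() does in the simple case, here is a minimal standard-library stand-in. It is a sketch, not python-dotenv: it only handles plain KEY=VALUE lines (no `export` prefix, multi-line values or variable expansion). Like load_dotenv's default (override=False), it leaves variables that are already set untouched. All three function names are hypothetical.

```python
import os


def parse_env_file(path):
    """Parse simple KEY=VALUE lines; blank lines and # comments are skipped."""
    values = {}
    with open(path, encoding="utf-8") as fh:
        for raw in fh:
            line = raw.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, _, value = line.partition("=")
            value = value.strip()
            # Drop one pair of matching surrounding quotes, if present
            if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
                value = value[1:-1]
            values[key.strip()] = value
    return values


def load_env(path=".env"):
    """Copy values into os.environ without overwriting variables that are already set."""
    for key, value in parse_env_file(path).items():
        os.environ.setdefault(key, value)


def looks_like_api_key(key):
    """Loose sanity check based on the sk- prefix; it does not validate the key."""
    return isinstance(key, str) and key.startswith("sk-") and len(key) > 3
```

A quick check after loading: `looks_like_api_key(os.environ.get("OPENAI_API_KEY"))` catches an empty or mis-pasted value before the first API call fails.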

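- Modify the run.py arguments

1.1 Add an api_type argument that gives the API type; DeepSeek, for example, supports the openai type.

1.2 Change the model argument so that it defaults to deepseek-chat.

1.3 Change the platform argument so that it defaults to the deepseek platform.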

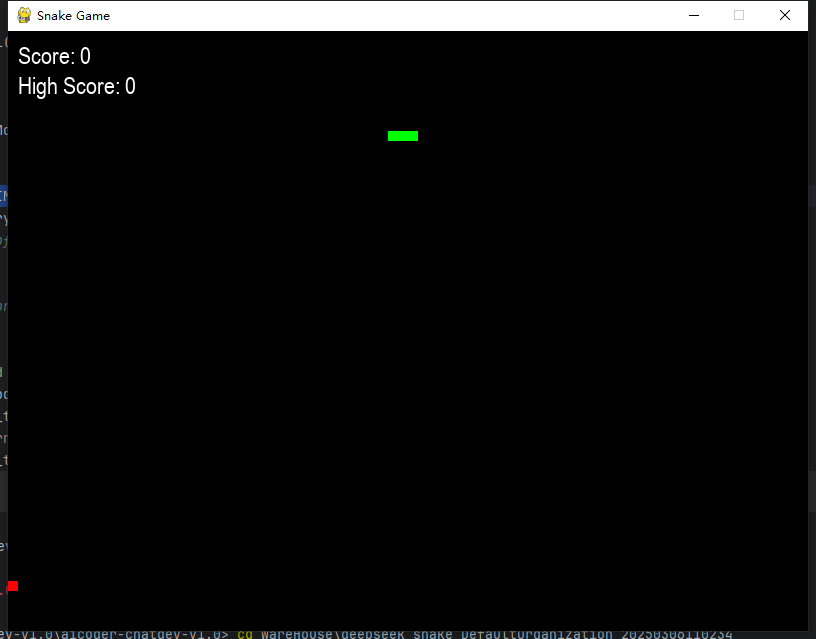

1.4 Change the task description (task) to creating a Snake game.

1.5 Change the task name (name) accordingly.
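Steps 1.1 to 1.5 might look roughly like the argparse sketch below. Only --platform, --model and --api_type come from the steps above; the remaining arguments and their defaults are assumptions about run.py that may differ in your checkout, and the Snake task text is illustrative.

```python
import argparse


def build_parser():
    """Sketch of run.py's arguments after steps 1.1 to 1.5 (defaults partly assumed)."""
    parser = argparse.ArgumentParser(description="argparse")
    parser.add_argument("--config", type=str, default="Default")
    parser.add_argument("--org", type=str, default="DefaultOrganization")
    # 1.4: task description (illustrative text)
    parser.add_argument("--task", type=str, default="Develop a Snake game.")
    # 1.5: task / project name (illustrative)
    parser.add_argument("--name", type=str, default="Snake")
    # 1.3: a top-level key of MODELS in camel/model_config.py
    parser.add_argument("--platform", type=str, default="deepseek")
    # 1.2: must appear under MODELS[platform]["models"]
    parser.add_argument("--model", type=str, default="deepseek-chat")
    # 1.1: must appear under MODELS[platform]["api_types"]
    parser.add_argument("--api_type", type=str, default="openai")
    parser.add_argument("--path", type=str, default="")
    return parser
```

With these defaults, `python run.py` targets deepseek-chat, while `python run.py --platform qwen --model qwen-plus` switches providers, as long as that pair exists in MODELS.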

- Initialize the model in run.py

You can delete the code you no longer need, then build model_type from the new arguments instead of the old hard-coded type:

```python
config_path, config_phase_path, config_role_path = get_config(args.config)

from camel.utils import get_model_info

# Look up base_url and the token limit for the chosen platform/model in MODELS
base_url, num_tokens, api_type = get_model_info(args.platform, args.model, args.api_type)
model_type = ModelType(args.platform, name=args.model, base_url=base_url,
                       num_tokens=num_tokens, api_type=api_type)

chat_chain = ChatChain(config_path=config_path,
                       config_phase_path=config_phase_path,
                       config_role_path=config_role_path,
                       task_prompt=args.task,
                       project_name=args.name,
                       org_name=args.org,
                       model_type=model_type,
                       code_path=args.path)
```

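- Create the ModelType class (typing.py)

Replace the original type with the following:

```python
class ModelType(object):
    def __init__(self, platform, name, base_url, num_tokens, api_type):
        self.platform = platform      # key in MODELS, e.g. "deepseek"
        self.name = name              # model name sent to the API
        self.base_url = base_url
        self.num_tokens = num_tokens  # token limit used to size max_tokens
        self.api_type = api_type      # selects the backend in ModelFactory
```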

Compare this with the original ModelType in camel/typing.py, which this class replaces.
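If you prefer less boilerplate, the same class can be written as a dataclass, which also generates a readable repr (handy for the commented-out log_visualize call in ModelFactory) and field-wise equality. The value property is a hypothetical compatibility shim: assuming the original ModelType was an Enum, some call sites may still read model.value and expect the model-name string.

```python
from dataclasses import dataclass


@dataclass
class ModelType:
    """Same fields as the class above; __init__, __repr__ and __eq__ are generated."""
    platform: str
    name: str
    base_url: str
    num_tokens: int
    api_type: str

    @property
    def value(self) -> str:
        # Hypothetical shim for code written against the old enum's `.value`
        return self.name
```

The field order matches the positional call `ModelType(args.platform, name=..., ...)` in run.py, so that call works unchanged.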

- Create a get_model_info() function in utils

```python
# camel/utils.py
from camel.model_config import MODELS


def get_model_info(platform, model_name, api_type):
    if platform not in MODELS:
        raise ValueError("platform {} does not exist".format(platform))
    if api_type not in MODELS[platform]["api_types"]:
        raise ValueError("api_type {} does not exist for platform {}".format(api_type, platform))

    base_url = MODELS[platform]["base_url"]
    num_tokens = None
    for model_info in MODELS[platform]["models"]:
        if model_info["name"] == model_name:
            num_tokens = model_info["num_tokens"]
            break

    if not num_tokens:
        model_list = [model_info["name"] for model_info in MODELS[platform]["models"]]
        raise ValueError("num_tokens error, should choose from {}".format(model_list))

    return base_url, num_tokens, api_type
```

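- Because ModelType has changed, every file that run.py touches at runtime and that uses ModelType must be updated as well.

For example, in camel/agents/chat_agent.py the check on the model argument is no longer needed; simply change it to self.model: ModelType = model.

To get the code running end to end, search the whole project for every place ModelType is used and update each one.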
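Any editor's project-wide search will find these call sites. As a scriptable alternative, the hypothetical helper below lists every .py line that mentions the name as a whole word. Skipping hidden folders and WareHouse (where generated projects land) is an assumption about what you want to ignore.

```python
import os
import re


def find_usages(root, symbol="ModelType"):
    """Yield (path, line_number, line) for every .py line under root that mentions symbol."""
    pattern = re.compile(r"\b" + re.escape(symbol) + r"\b")
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune hidden folders and generated output; sort for a stable order
        dirnames[:] = sorted(d for d in dirnames if not d.startswith(".") and d != "WareHouse")
        for filename in sorted(filenames):
            if not filename.endswith(".py"):
                continue
            path = os.path.join(dirpath, filename)
            with open(path, encoding="utf-8", errors="ignore") as fh:
                for number, line in enumerate(fh, start=1):
                    if pattern.search(line):
                        yield path, number, line.rstrip()
```

From the ChatDev root, `for path, n, line in find_usages("."): print(f"{path}:{n}: {line}")` prints a grep-style list of places to review.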

- Modify the model factory (model_backend.py)

Change ModelFactory as follows:

```python
class ModelFactory:
    r"""Factory of backend models.

    Raises:
        ValueError: in case the provided model type is unknown.
    """

    @staticmethod
    def create(model_type: ModelType, model_config_dict: Dict) -> ModelBackend:
        if model_type.api_type == "stub":
            model_class = StubModel
        elif model_type.api_type == "openai":
            model_class = OpenAIModel
        elif model_type.platform == "qwen" and model_type.api_type == "self":
            model_class = DataScopeAIModel
        else:
            raise ValueError("model api backend create error, model_platform:{}, model_name:{}, "
                             "base_url:{}, api_type:{}".format(model_type.platform, model_type.name,
                                                               model_type.base_url, model_type.api_type))

        # log_visualize("Model Type: {}".format(model_type))
        inst = model_class(model_type, model_config_dict)
        return inst
```
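As more platforms are added, this if/elif chain keeps growing. One alternative, sketched below under the assumption that backends are keyed by (platform, api_type), is a small registry filled in by a decorator. Everything here is hypothetical: the placeholder classes stand in for StubModel, OpenAIModel and DataScopeAIModel, and "*" marks a backend that serves any platform.

```python
from typing import Dict, Tuple


class UnknownBackendError(ValueError):
    """Raised when no backend is registered for a (platform, api_type) pair."""


# Hypothetical registry: (platform, api_type) -> backend class; "*" means any platform
_REGISTRY: Dict[Tuple[str, str], type] = {}


def register(platform: str, api_type: str):
    """Class decorator that records a backend under (platform, api_type)."""
    def decorator(cls):
        _REGISTRY[(platform, api_type)] = cls
        return cls
    return decorator


def create_backend(model_type, model_config_dict):
    """Instantiate the backend for model_type, trying the exact platform first."""
    key = (model_type.platform, model_type.api_type)
    cls = _REGISTRY.get(key) or _REGISTRY.get(("*", model_type.api_type))
    if cls is None:
        raise UnknownBackendError("no backend for platform={}, api_type={}".format(*key))
    return cls(model_type, model_config_dict)


# Placeholders standing in for StubModel, OpenAIModel and DataScopeAIModel
@register("*", "stub")
class FakeStubModel:
    def __init__(self, model_type, model_config_dict):
        self.model_type = model_type
        self.model_config_dict = model_config_dict


@register("*", "openai")
class FakeOpenAIModel(FakeStubModel):
    pass


@register("qwen", "self")
class FakeDashScopeModel(FakeStubModel):
    pass
```

The platform-specific entry is tried first, so qwen with api_type "self" reaches the DashScope backend, while any platform with api_type "openai" falls through to the OpenAI-compatible one. Because UnknownBackendError subclasses ValueError, callers that already catch ValueError from the factory keep working.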

Change OpenAIModel as follows:

```python
class OpenAIModel(ModelBackend):
    r"""OpenAI API in a unified ModelBackend interface."""

    def __init__(self, model_type: ModelType, model_config_dict: Dict) -> None:
        super().__init__()
        self.model_type = model_type
        self.model_config_dict = model_config_dict

    def run(self, *args, **kwargs):
        string = "\n".join([message["content"] for message in kwargs["messages"]])
        try:  # estimate the prompt's token count
            try:
                encoding = tiktoken.encoding_for_model(self.model_type.name)
            except KeyError:
                # tiktoken has no mapping for non-OpenAI names such as deepseek-chat;
                # fall back to a generic encoding (an approximation) instead of counting 0
                encoding = tiktoken.get_encoding("cl100k_base")
            num_prompt_tokens = len(encoding.encode(string))
            gap_between_send_receive = 15 * len(kwargs["messages"])
            num_prompt_tokens += gap_between_send_receive
        except Exception:
            num_prompt_tokens = 0

        # Experimental, add base_url
        client = openai.OpenAI(
            api_key=OPENAI_API_KEY,
            base_url=self.model_type.base_url,
        )

        # Reply budget = model token limit minus the estimated prompt size
        num_max_token = self.model_type.num_tokens
        num_max_completion_tokens = num_max_token - num_prompt_tokens
        self.model_config_dict['max_tokens'] = num_max_completion_tokens

        print("args:", args, "kwargs:", kwargs, "model:", self.model_type.name,
              "model_config_dict:", self.model_config_dict)

        response = client.chat.completions.create(*args, **kwargs, model=self.model_type.name,
                                                  **self.model_config_dict)
        # Check the type before reading response.usage below
        if not isinstance(response, ChatCompletion):
            raise RuntimeError("Unexpected return from OpenAI API")

        cost = prompt_cost(
            self.model_type.name,
            num_prompt_tokens=response.usage.prompt_tokens,
            num_completion_tokens=response.usage.completion_tokens
        )
        log_visualize(
            "**[OpenAI_Usage_Info Receive]**\nprompt_tokens: {}\ncompletion_tokens: {}\ntotal_tokens: {}\ncost: ${:.6f}\n".format(
                response.usage.prompt_tokens, response.usage.completion_tokens,
                response.usage.total_tokens, cost))
        return response
```
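The max_tokens arithmetic in run() is easier to check in isolation. The helper below restates it as a pure function: counted prompt tokens plus a fixed 15-token overhead per message, subtracted from the model's num_tokens. The floor parameter is an addition that is not in the code above; it keeps the request from asking for zero or negative tokens when the prompt already fills the window.

```python
def max_completion_tokens(prompt_tokens: int, num_messages: int, context_limit: int,
                          per_message_overhead: int = 15, floor: int = 1) -> int:
    """Tokens left for the reply: context limit minus the estimated prompt size.

    Hypothetical helper mirroring run(); the result is clamped to `floor`.
    """
    estimated_prompt = prompt_tokens + per_message_overhead * num_messages
    return max(context_limit - estimated_prompt, floor)
```

For deepseek-chat (num_tokens 8192) with 1200 counted prompt tokens across 4 messages, the estimate is 1200 + 15 × 4 = 1260, leaving 8192 - 1260 = 6932 tokens for the reply.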

Create the DataScopeAIModel class (for calling Qwen through the DashScope SDK):

```python
import os
from http import HTTPStatus


class DataScopeAIModel(ModelBackend):
    r"""Qwen through the DashScope SDK (api_type "self")."""

    def __init__(self, model_type: ModelType, model_config_dict: Dict) -> None:
        super().__init__()
        self.model_type = model_type
        self.model_config_dict = model_config_dict
        try:
            import dashscope  # the SDK package is dashscope: pip install dashscope
        except ImportError as err:
            raise ImportError("failed to import dashscope") from err
        self.client = dashscope.Generation()

    def run(self, *args, **kwargs):
        string = "\n".join([message["content"] for message in kwargs["messages"]])
        try:  # estimate the prompt's token count
            try:
                encoding = tiktoken.encoding_for_model(self.model_type.name)
            except KeyError:
                # Qwen names are unknown to tiktoken; use a generic encoding as an approximation
                encoding = tiktoken.get_encoding("cl100k_base")
            num_prompt_tokens = len(encoding.encode(string))
            gap_between_send_receive = 15 * len(kwargs["messages"])
            num_prompt_tokens += gap_between_send_receive
        except Exception:
            num_prompt_tokens = 0

        num_max_token = self.model_type.num_tokens
        num_max_completion_tokens = num_max_token - num_prompt_tokens
        self.model_config_dict['max_tokens'] = num_max_completion_tokens

        print("args:", args, "kwargs:", kwargs, "model:", self.model_type.name,
              "model_config_dict:", self.model_config_dict)

        # Prefer DASHSCOPE_API_KEY; fall back to the key loaded from .env
        api_key = os.environ.get("DASHSCOPE_API_KEY") or OPENAI_API_KEY
        response = self.client.call(self.model_type.name, api_key=api_key, **kwargs)
        # DashScope returns its own response type, not an OpenAI ChatCompletion
        if response.status_code != HTTPStatus.OK:
            raise RuntimeError("Unexpected return from DashScope API: {}".format(response.message))

        # DashScope names its usage fields input_tokens / output_tokens
        prompt_tokens = response.usage.input_tokens
        completion_tokens = response.usage.output_tokens
        cost = prompt_cost(
            self.model_type.name,
            num_prompt_tokens=prompt_tokens,
            num_completion_tokens=completion_tokens
        )
        log_visualize(
            "**[OpenAI_Usage_Info Receive]**\nprompt_tokens: {}\ncompletion_tokens: {}\ntotal_tokens: {}\ncost: ${:.6f}\n".format(
                prompt_tokens, completion_tokens, prompt_tokens + completion_tokens, cost))
        return response
```
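DashScope and OpenAI-compatible endpoints report token usage under different names (input_tokens/output_tokens versus prompt_tokens/completion_tokens), and code written for OpenAI-style responses can trip over this. A small normalizer like the hypothetical one below lets logging and cost code read a single set of names. It accepts both dict-like and attribute-style usage objects, since the exact type returned is an SDK detail this sketch does not rely on.

```python
from collections.abc import Mapping
from types import SimpleNamespace


def _field(usage, name):
    """Read a field from either a dict-like or an attribute-style usage object."""
    if isinstance(usage, Mapping):
        return usage.get(name)
    try:
        return getattr(usage, name)
    except (AttributeError, KeyError):
        return None


def normalize_usage(usage):
    """Return an object with OpenAI-style prompt/completion/total token fields.

    Hypothetical helper: falls back to DashScope-style names when the OpenAI ones are absent.
    """
    prompt = _field(usage, "prompt_tokens")
    completion = _field(usage, "completion_tokens")
    if prompt is None or completion is None:
        prompt = _field(usage, "input_tokens") or 0
        completion = _field(usage, "output_tokens") or 0
    return SimpleNamespace(prompt_tokens=prompt, completion_tokens=completion,
                           total_tokens=prompt + completion)
```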

Any other code in model_backend.py that is no longer used can be deleted.

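- Run run.py

Some errors will appear at this point; fix them one by one according to the error messages until the script runs cleanly.

- After a successful run, the generated files are placed in a corresponding folder under WareHouse.

Enter that folder and run main.py to launch the program.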
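Finding the newest folder under WareHouse by hand works fine; the sketch below automates it. It assumes each generated project is a direct sub-folder of WareHouse that contains main.py, and it picks the most recently modified one. Both function names are hypothetical.

```python
import os
import subprocess
import sys


def latest_project(warehouse="WareHouse"):
    """Return the most recently modified sub-folder of warehouse that has a main.py."""
    candidates = [
        os.path.join(warehouse, name) for name in os.listdir(warehouse)
        if os.path.isfile(os.path.join(warehouse, name, "main.py"))
    ]
    if not candidates:
        raise FileNotFoundError(f"no project with main.py under {warehouse}")
    return max(candidates, key=os.path.getmtime)


def run_project(project_dir):
    """Run main.py with the current interpreter from inside the project folder."""
    return subprocess.run([sys.executable, "main.py"], cwd=project_dir, check=True)
```

From the ChatDev root, `run_project(latest_project())` launches the newest game. With check=True, a crash in the generated program raises CalledProcessError instead of passing silently.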
