The Java `AudioTrack.write` method has several overloads:

```java
//frameworks/base/media/java/android/media/AudioTrack.java
public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull byte[] audioData, int offsetInBytes, int sizeInBytes) {
        return write(audioData, offsetInBytes, sizeInBytes, WRITE_BLOCKING);
    }
}
```

```java
//frameworks/base/media/java/android/media/AudioTrack.java
public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull byte[] audioData, int offsetInBytes, int sizeInBytes,
            @WriteMode int writeMode) {
        // Note: we allow writes of extended integers and compressed formats from a byte array.
        if (mState == STATE_UNINITIALIZED || mAudioFormat == AudioFormat.ENCODING_PCM_FLOAT) {
            return ERROR_INVALID_OPERATION;
        }

        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }

        if ( (audioData == null) || (offsetInBytes < 0 ) || (sizeInBytes < 0)
                || (offsetInBytes + sizeInBytes < 0)    // detect integer overflow
                || (offsetInBytes + sizeInBytes > audioData.length)) {
            return ERROR_BAD_VALUE;
        }

        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }

        final int ret = native_write_byte(audioData, offsetInBytes, sizeInBytes, mAudioFormat,
                writeMode == WRITE_BLOCKING);

        if ((mDataLoadMode == MODE_STATIC)
                && (mState == STATE_NO_STATIC_DATA)
                && (ret > 0)) {
            // benign race with respect to other APIs that read mState
            mState = STATE_INITIALIZED;
        }

        return ret;
    }
}
```
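The checks above run in a fixed order: state and format, then write mode, then array bounds. The C++ sketch below mirrors that order for readers following the native side. It is a hypothetical helper: `checkByteArrayWrite` and the status constants are illustrative names, and the numeric values were chosen for the sketch. The blocking offload drain is not modeled. The Java code detects overflow through 32-bit wrap-around (`offsetInBytes + sizeInBytes < 0`). Signed overflow is undefined behavior in C++, so the sketch widens to 64 bits instead.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative status codes and write modes for this sketch only.
constexpr int kSuccess = 0;
constexpr int kErrorBadValue = -2;
constexpr int kErrorInvalidOperation = -3;
constexpr int kWriteBlocking = 0;
constexpr int kWriteNonBlocking = 1;

// Mirrors the order of the checks in AudioTrack.write(byte[], int, int, int):
// state/format first, then write mode, then array bounds.
int checkByteArrayWrite(bool initialized, bool isPcmFloat, int writeMode,
                        const int8_t* data, int32_t length,
                        int32_t offset, int32_t size) {
    if (!initialized || isPcmFloat) {
        return kErrorInvalidOperation;  // byte[] writes are rejected for PCM float
    }
    if (writeMode != kWriteBlocking && writeMode != kWriteNonBlocking) {
        return kErrorBadValue;
    }
    // Widen to 64 bits so offset + size cannot overflow; this replaces the
    // Java "sum < 0" wrap-around test.
    const int64_t end = static_cast<int64_t>(offset) + static_cast<int64_t>(size);
    if (data == nullptr || offset < 0 || size < 0 || end > length) {
        return kErrorBadValue;
    }
    return kSuccess;
}
```

A call such as `checkByteArrayWrite(true, false, kWriteBlocking, buf, 16, 1, INT32_MAX)` is rejected as a bad value. The Java version reaches the same result because the 32-bit sum wraps negative.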

```java
//frameworks/base/media/java/android/media/AudioTrack.java
public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull short[] audioData, int offsetInShorts, int sizeInShorts) {
        return write(audioData, offsetInShorts, sizeInShorts, WRITE_BLOCKING);
    }
}
```

```java
//frameworks/base/media/java/android/media/AudioTrack.java
public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull short[] audioData, int offsetInShorts, int sizeInShorts,
            @WriteMode int writeMode) {

        if (mState == STATE_UNINITIALIZED
                || mAudioFormat == AudioFormat.ENCODING_PCM_FLOAT
                // use ByteBuffer or byte[] instead for later encodings
                || mAudioFormat > AudioFormat.ENCODING_LEGACY_SHORT_ARRAY_THRESHOLD) {
            return ERROR_INVALID_OPERATION;
        }

        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }

        if ( (audioData == null) || (offsetInShorts < 0 ) || (sizeInShorts < 0)
                || (offsetInShorts + sizeInShorts < 0)  // detect integer overflow
                || (offsetInShorts + sizeInShorts > audioData.length)) {
            return ERROR_BAD_VALUE;
        }

        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }

        final int ret = native_write_short(audioData, offsetInShorts, sizeInShorts, mAudioFormat,
                writeMode == WRITE_BLOCKING);

        if ((mDataLoadMode == MODE_STATIC)
                && (mState == STATE_NO_STATIC_DATA)
                && (ret > 0)) {
            // benign race with respect to other APIs that read mState
            mState = STATE_INITIALIZED;
        }

        return ret;
    }
}
```

```java
//frameworks/base/media/java/android/media/AudioTrack.java
public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull float[] audioData, int offsetInFloats, int sizeInFloats,
            @WriteMode int writeMode) {

        if (mState == STATE_UNINITIALIZED) {
            Log.e(TAG, "AudioTrack.write() called in invalid state STATE_UNINITIALIZED");
            return ERROR_INVALID_OPERATION;
        }

        if (mAudioFormat != AudioFormat.ENCODING_PCM_FLOAT) {
            Log.e(TAG, "AudioTrack.write(float[] ...) requires format ENCODING_PCM_FLOAT");
            return ERROR_INVALID_OPERATION;
        }

        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }

        if ( (audioData == null) || (offsetInFloats < 0 ) || (sizeInFloats < 0)
                || (offsetInFloats + sizeInFloats < 0)  // detect integer overflow
                || (offsetInFloats + sizeInFloats > audioData.length)) {
            Log.e(TAG, "AudioTrack.write() called with invalid array, offset, or size");
            return ERROR_BAD_VALUE;
        }

        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }

        final int ret = native_write_float(audioData, offsetInFloats, sizeInFloats, mAudioFormat,
                writeMode == WRITE_BLOCKING);

        if ((mDataLoadMode == MODE_STATIC)
                && (mState == STATE_NO_STATIC_DATA)
                && (ret > 0)) {
            // benign race with respect to other APIs that read mState
            mState = STATE_INITIALIZED;
        }

        return ret;
    }
}
```

```java
//frameworks/base/media/java/android/media/AudioTrack.java
public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull ByteBuffer audioData, int sizeInBytes,
            @WriteMode int writeMode) {

        if (mState == STATE_UNINITIALIZED) {
            Log.e(TAG, "AudioTrack.write() called in invalid state STATE_UNINITIALIZED");
            return ERROR_INVALID_OPERATION;
        }

        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }

        if ( (audioData == null) || (sizeInBytes < 0) || (sizeInBytes > audioData.remaining())) {
            Log.e(TAG, "AudioTrack.write() called with invalid size (" + sizeInBytes + ") value");
            return ERROR_BAD_VALUE;
        }

        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }

        int ret = 0;
        if (audioData.isDirect()) {
            ret = native_write_native_bytes(audioData,
                    audioData.position(), sizeInBytes, mAudioFormat,
                    writeMode == WRITE_BLOCKING);
        } else {
            ret = native_write_byte(NioUtils.unsafeArray(audioData),
                    NioUtils.unsafeArrayOffset(audioData) + audioData.position(),
                    sizeInBytes, mAudioFormat,
                    writeMode == WRITE_BLOCKING);
        }

        if ((mDataLoadMode == MODE_STATIC)
                && (mState == STATE_NO_STATIC_DATA)
                && (ret > 0)) {
            // benign race with respect to other APIs that read mState
            mState = STATE_INITIALIZED;
        }

        if (ret > 0) {
            audioData.position(audioData.position() + ret);
        }

        return ret;
    }
}
```
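Unlike the array overloads, the `ByteBuffer` overload advances the buffer's position by the number of bytes actually accepted. A caller in non-blocking mode can therefore keep calling `write` until `remaining()` reaches zero. The sketch below models that contract in C++. `SimpleByteBuffer` and `FakeSink` are hypothetical stand-ins for `java.nio.ByteBuffer` and for a track that accepts only part of the data. The error code is illustrative.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for java.nio.ByteBuffer: only position and limit.
struct SimpleByteBuffer {
    std::vector<unsigned char> data;
    size_t position = 0;
    size_t limit = 0;
    size_t remaining() const { return limit - position; }
};

// Hypothetical sink that accepts at most `freeBytes` bytes in total,
// like a non-blocking write into a partially full track buffer.
struct FakeSink {
    size_t freeBytes;
    std::vector<unsigned char> received;
    int write(const unsigned char* src, size_t size) {
        size_t n = std::min(size, freeBytes);
        received.insert(received.end(), src, src + n);
        freeBytes -= n;
        return static_cast<int>(n);
    }
};

// Mirrors the tail of write(ByteBuffer, int, int): write from the current
// position, then advance the position by the number of bytes accepted.
int writeFromBuffer(FakeSink& sink, SimpleByteBuffer& buf, size_t sizeInBytes) {
    if (sizeInBytes > buf.remaining()) {
        return -2;  // illustrative "bad value" code
    }
    int ret = sink.write(buf.data.data() + buf.position, sizeInBytes);
    if (ret > 0) {
        buf.position += static_cast<size_t>(ret);
    }
    return ret;
}
```

Because the position is the only bookkeeping, a retry after a partial write needs no offset arithmetic from the caller.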

```java
//frameworks/base/media/java/android/media/AudioTrack.java
public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull ByteBuffer audioData, int sizeInBytes,
            @WriteMode int writeMode, long timestamp) {

        if (mState == STATE_UNINITIALIZED) {
            Log.e(TAG, "AudioTrack.write() called in invalid state STATE_UNINITIALIZED");
            return ERROR_INVALID_OPERATION;
        }

        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }

        if (mDataLoadMode != MODE_STREAM) {
            Log.e(TAG, "AudioTrack.write() with timestamp called for non-streaming mode track");
            return ERROR_INVALID_OPERATION;
        }

        if ((mAttributes.getFlags() & AudioAttributes.FLAG_HW_AV_SYNC) == 0) {
            Log.d(TAG, "AudioTrack.write() called on a regular AudioTrack. Ignoring pts...");
            return write(audioData, sizeInBytes, writeMode);
        }

        if ((audioData == null) || (sizeInBytes < 0) || (sizeInBytes > audioData.remaining())) {
            Log.e(TAG, "AudioTrack.write() called with invalid size (" + sizeInBytes + ") value");
            return ERROR_BAD_VALUE;
        }

        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }

        // create timestamp header if none exists
        if (mAvSyncHeader == null) {
            mAvSyncHeader = ByteBuffer.allocate(mOffset);
            mAvSyncHeader.order(ByteOrder.BIG_ENDIAN);
            mAvSyncHeader.putInt(0x55550002);
        }

        if (mAvSyncBytesRemaining == 0) {
            mAvSyncHeader.putInt(4, sizeInBytes);
            mAvSyncHeader.putLong(8, timestamp);
            mAvSyncHeader.putInt(16, mOffset);
            mAvSyncHeader.position(0);
            mAvSyncBytesRemaining = sizeInBytes;
        }

        // write timestamp header if not completely written already
        int ret = 0;
        if (mAvSyncHeader.remaining() != 0) {
            ret = write(mAvSyncHeader, mAvSyncHeader.remaining(), writeMode);
            if (ret < 0) {
                Log.e(TAG, "AudioTrack.write() could not write timestamp header!");
                mAvSyncHeader = null;
                mAvSyncBytesRemaining = 0;
                return ret;
            }
            if (mAvSyncHeader.remaining() > 0) {
                Log.v(TAG, "AudioTrack.write() partial timestamp header written.");
                return 0;
            }
        }

        // write audio data
        int sizeToWrite = Math.min(mAvSyncBytesRemaining, sizeInBytes);
        ret = write(audioData, sizeToWrite, writeMode);
        if (ret < 0) {
            Log.e(TAG, "AudioTrack.write() could not write audio data!");
            mAvSyncHeader = null;
            mAvSyncBytesRemaining = 0;
            return ret;
        }

        mAvSyncBytesRemaining -= ret;

        return ret;
    }
}
```
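For tracks opened with `FLAG_HW_AV_SYNC`, each payload is preceded by a big-endian header with four fields:

- the magic word `0x55550002` at byte 0;
- the payload size at byte 4;
- the 64-bit timestamp at byte 8;
- the header length (`mOffset`) at byte 16.

`mAvSyncBytesRemaining` tracks how much of the current payload is still owed. A new header is filled in only after the previous payload has been fully written. If the header itself is only partly written, the call returns 0 and the caller is expected to retry.

The C++ sketch below reproduces only the byte layout. It assumes `mOffset` is exactly 20 bytes, the minimum these fields need; the real value may include padding. `makeAvSyncHeader` and `putBigEndian` are hypothetical helpers.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumption: mOffset == 20, i.e. the header is exactly the bytes the Java
// code fills in, with no padding.
constexpr size_t kHeaderSize = 20;

// Stores `value` big-endian in `numBytes` bytes starting at `pos`,
// matching ByteBuffer.order(ByteOrder.BIG_ENDIAN).
static void putBigEndian(std::array<uint8_t, kHeaderSize>& out, size_t pos,
                         uint64_t value, size_t numBytes) {
    for (size_t i = 0; i < numBytes; ++i) {
        out[pos + i] = static_cast<uint8_t>(value >> (8 * (numBytes - 1 - i)));
    }
}

std::array<uint8_t, kHeaderSize> makeAvSyncHeader(uint32_t sizeInBytes, int64_t timestamp) {
    std::array<uint8_t, kHeaderSize> h{};
    putBigEndian(h, 0, 0x55550002u, 4);                       // putInt(0x55550002)
    putBigEndian(h, 4, sizeInBytes, 4);                       // putInt(4, sizeInBytes)
    putBigEndian(h, 8, static_cast<uint64_t>(timestamp), 8);  // putLong(8, timestamp)
    putBigEndian(h, 16, kHeaderSize, 4);                      // putInt(16, mOffset)
    return h;
}
```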

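Apart from `native_write_native_bytes`, which the `ByteBuffer` overload uses for direct buffers, the overloads above all end up in one of these native methods:

native_write_byte

native_write_short

native_write_float

Each is analyzed below.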

native_write_byte

`native_write_byte` is declared as a native method:

```java
private native final int native_write_byte(byte[] audioData,
                                           int offsetInBytes, int sizeInBytes, int format,
                                           boolean isBlocking);
```

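Its entry in the JNI method table in android_media_AudioTrack.cpp is:

`{"native_write_byte", "([BIIIZ)I", (void *)android_media_AudioTrack_writeArray<jbyteArray>},`

The descriptor `([BIIIZ)I` means the parameters are `byte[]`, `int`, `int`, `int`, `boolean`, and the return type is `int`. The call therefore lands in the template function android_media_AudioTrack_writeArray, instantiated with `T = jbyteArray`: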

```cpp
//frameworks/base/core/jni/android_media_AudioTrack.cpp
template <typename T>
static jint android_media_AudioTrack_writeArray(JNIEnv *env, jobject thiz,
                                                T javaAudioData,
                                                jint offsetInSamples, jint sizeInSamples,
                                                jint javaAudioFormat,
                                                jboolean isWriteBlocking) {
    //ALOGV("android_media_AudioTrack_writeArray(offset=%d, sizeInSamples=%d) called",
    //        offsetInSamples, sizeInSamples);
    sp<AudioTrack> lpTrack = getAudioTrack(env, thiz);
    if (lpTrack == NULL) {
        jniThrowException(env, "java/lang/IllegalStateException",
            "Unable to retrieve AudioTrack pointer for write()");
        return (jint)AUDIO_JAVA_INVALID_OPERATION;
    }

    if (javaAudioData == NULL) {
        ALOGE("NULL java array of audio data to play");
        return (jint)AUDIO_JAVA_BAD_VALUE;
    }

    // NOTE: We may use GetPrimitiveArrayCritical() when the JNI implementation changes in such
    // a way that it becomes much more efficient. When doing so, we will have to prevent the
    // AudioSystem callback to be called while in critical section (in case of media server
    // process crash for instance)

    // get the pointer for the audio data from the java array
    auto cAudioData = envGetArrayElements(env, javaAudioData, NULL);
    if (cAudioData == NULL) {
        ALOGE("Error retrieving source of audio data to play");
        return (jint)AUDIO_JAVA_BAD_VALUE; // out of memory or no data to load
    }

    jint samplesWritten = writeToTrack(lpTrack, javaAudioFormat, cAudioData,
            offsetInSamples, sizeInSamples, isWriteBlocking == JNI_TRUE /* blocking */);  // calls writeToTrack

    envReleaseArrayElements(env, javaAudioData, cAudioData, 0);

    //ALOGV("write wrote %d (tried %d) samples in the native AudioTrack with offset %d",
    //        (int)samplesWritten, (int)(sizeInSamples), (int)offsetInSamples);
    return samplesWritten;
}
```

native_write_short

`native_write_short` is also a native method:

```java
private native final int native_write_short(short[] audioData,
                                            int offsetInShorts, int sizeInShorts, int format,
                                            boolean isBlocking);
```

Its entry in android_media_AudioTrack.cpp is `{"native_write_short", "([SIIIZ)I",(void *)android_media_AudioTrack_writeArray<jshortArray>},`, so it also calls android_media_AudioTrack_writeArray, this time with `T = jshortArray`.

native_write_float

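`native_write_float` is likewise a native method. Its entry in android_media_AudioTrack.cpp is `{"native_write_float", "([FIIIZ)I",(void *)android_media_AudioTrack_writeArray<jfloatArray>},`, so it also calls android_media_AudioTrack_writeArray, with `T = jfloatArray`.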
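The three descriptors `([BIIIZ)I`, `([SIIIZ)I` and `([FIIIZ)I` differ only in the array element type. That is exactly why a single template function can serve all three. In JNI type signatures, `B`, `S`, `F`, `I` and `Z` stand for `byte`, `short`, `float`, `int` and `boolean`, and a `[` prefix marks an array. The small decoder below is a hypothetical helper for reading such descriptors. It handles only primitives and one-dimensional primitive arrays; object types like `Ljava/lang/String;` are deliberately not supported.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// Maps a JNI primitive type code to its Java name.
static std::string primitiveName(char c) {
    switch (c) {
        case 'Z': return "boolean";
        case 'B': return "byte";
        case 'C': return "char";
        case 'S': return "short";
        case 'I': return "int";
        case 'J': return "long";
        case 'F': return "float";
        case 'D': return "double";
        case 'V': return "void";
        default: throw std::invalid_argument(std::string("unsupported type code: ") + c);
    }
}

struct MethodSignature {
    std::vector<std::string> params;
    std::string returnType;
};

// Decodes descriptors such as "([BIIIZ)I" (primitives and 1-D primitive arrays only).
MethodSignature decodeDescriptor(const std::string& desc) {
    if (desc.empty() || desc[0] != '(') throw std::invalid_argument("missing '('");
    MethodSignature sig;
    size_t i = 1;
    while (i < desc.size() && desc[i] != ')') {
        bool isArray = (desc[i] == '[');
        if (isArray) ++i;
        if (i >= desc.size()) throw std::invalid_argument("truncated descriptor");
        sig.params.push_back(primitiveName(desc[i]) + (isArray ? "[]" : ""));
        ++i;
    }
    if (i + 1 >= desc.size()) throw std::invalid_argument("missing return type");
    sig.returnType = primitiveName(desc[i + 1]);
    return sig;
}
```

For example, `decodeDescriptor("([SIIIZ)I").params[0]` is `"short[]"`, matching the first parameter of `native_write_short`.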

writeToTrack

android_media_AudioTrack_writeArray calls writeToTrack, another template:

```cpp
//frameworks/base/core/jni/android_media_AudioTrack.cpp
template <typename T>
static jint writeToTrack(const sp<AudioTrack>& track, jint audioFormat, const T *data,
                         jint offsetInSamples, jint sizeInSamples, bool blocking) {
    // give the data to the native AudioTrack object (the data starts at the offset)
    ssize_t written = 0;
    // regular write() or copy the data to the AudioTrack's shared memory?
    size_t sizeInBytes = sizeInSamples * sizeof(T);
    if (track->sharedBuffer() == 0) {
        written = track->write(data + offsetInSamples, sizeInBytes, blocking);  // calls AudioTrack::write
        // for compatibility with earlier behavior of write(), return 0 in this case
        if (written == (ssize_t) WOULD_BLOCK) {
            written = 0;
        }
    } else {
        // writing to shared memory, check for capacity
        if ((size_t)sizeInBytes > track->sharedBuffer()->size()) {
            sizeInBytes = track->sharedBuffer()->size();
        }
        memcpy(track->sharedBuffer()->unsecurePointer(), data + offsetInSamples, sizeInBytes);
        written = sizeInBytes;
    }
    if (written >= 0) {
        return written / sizeof(T);
    }
    return interpretWriteSizeError(written);
}
```
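writeToTrack works in bytes internally but reports samples to Java. It multiplies by `sizeof(T)` on the way in and divides on the way out. There are two paths:

- **Streaming track:** a `WOULD_BLOCK` result is turned into 0 for backward compatibility.
- **Static track (shared buffer):** the copy is clamped to the buffer size.

The sketch below reproduces that arithmetic against a hypothetical `FakeTrack`. The `kWouldBlock` value is illustrative, not Android's `WOULD_BLOCK`. Error mapping through `interpretWriteSizeError` is omitted because the fake track never returns another error.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

constexpr std::ptrdiff_t kWouldBlock = -11;  // illustrative value only

// Hypothetical stand-in for the native AudioTrack in its two modes.
struct FakeTrack {
    bool isStatic = false;
    std::vector<unsigned char> shared;  // static mode: stands in for sharedBuffer()
    size_t streamCapacity = 0;          // streaming mode: bytes write() will still accept
    std::ptrdiff_t write(const void* /*data*/, size_t bytes) {
        if (streamCapacity == 0) return kWouldBlock;
        size_t n = bytes < streamCapacity ? bytes : streamCapacity;
        streamCapacity -= n;
        return static_cast<std::ptrdiff_t>(n);
    }
};

// Samples -> bytes on the way in, bytes -> samples on the way out.
template <typename T>
long writeSamples(FakeTrack& track, const T* data, long offsetInSamples, long sizeInSamples) {
    size_t sizeInBytes = static_cast<size_t>(sizeInSamples) * sizeof(T);
    std::ptrdiff_t written = 0;
    if (!track.isStatic) {
        written = track.write(data + offsetInSamples, sizeInBytes);
        if (written == kWouldBlock) {
            written = 0;  // for compatibility, "would block" is reported as 0 samples
        }
    } else {
        if (sizeInBytes > track.shared.size()) {
            sizeInBytes = track.shared.size();  // clamp to the shared buffer capacity
        }
        std::memcpy(track.shared.data(), data + offsetInSamples, sizeInBytes);
        written = static_cast<std::ptrdiff_t>(sizeInBytes);
    }
    return static_cast<long>(written) / static_cast<long>(sizeof(T));
}
```

For 16-bit PCM, a track that accepts 50 bytes reports 25 samples written. A static buffer of 64 bytes caps a 100-sample write at 32 samples.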

write

For a streaming track, writeToTrack then calls the native AudioTrack::write:

```cpp
//frameworks/av/media/libaudioclient/AudioTrack.cpp
ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)
{
    if (mTransfer != TRANSFER_SYNC && mTransfer != TRANSFER_SYNC_NOTIF_CALLBACK) {
        return INVALID_OPERATION;
    }

    if (isDirect()) {
        AutoMutex lock(mLock);
        int32_t flags = android_atomic_and(
                            ~(CBLK_UNDERRUN | CBLK_LOOP_CYCLE | CBLK_LOOP_FINAL | CBLK_BUFFER_END),
                            &mCblk->mFlags);
        if (flags & CBLK_INVALID) {
            return DEAD_OBJECT;
        }
    }

    if (ssize_t(userSize) < 0 || (buffer == NULL && userSize != 0)) {
        // Validation: user is most-likely passing an error code, and it would
        // make the return value ambiguous (actualSize vs error).
        ALOGE("%s(%d): AudioTrack::write(buffer=%p, size=%zu (%zd)",
                __func__, mPortId, buffer, userSize, userSize);
        return BAD_VALUE;
    }

    size_t written = 0;
    Buffer audioBuffer;

    while (userSize >= mFrameSize) {
        audioBuffer.frameCount = userSize / mFrameSize;

        status_t err = obtainBuffer(&audioBuffer,
                blocking ? &ClientProxy::kForever : &ClientProxy::kNonBlocking);  // obtain space in the shared buffer
        if (err < 0) {
            if (written > 0) {
                break;
            }
            if (err == TIMED_OUT || err == -EINTR) {
                err = WOULD_BLOCK;
            }
            return ssize_t(err);
        }

        size_t toWrite = audioBuffer.size();
        memcpy(audioBuffer.raw, buffer, toWrite);
        buffer = ((const char *) buffer) + toWrite;
        userSize -= toWrite;
        written += toWrite;

        releaseBuffer(&audioBuffer);
    }

    if (written > 0) {
        mFramesWritten += written / mFrameSize;

        if (mTransfer == TRANSFER_SYNC_NOTIF_CALLBACK) {
            const sp<AudioTrackThread> t = mAudioTrackThread;
            if (t != 0) {
                // causes wake up of the playback thread, that will callback the client for
                // more data (with EVENT_CAN_WRITE_MORE_DATA) in processAudioBuffer()
                t->wake();  // wake up mAudioTrackThread
            }
        }
    }

    return written;
}
```
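The loop in AudioTrack::write copies data only in whole frames. A trailing partial frame (`userSize < mFrameSize`) is never written. Each iteration is one obtain/copy/release cycle. If obtaining fails after some data has already been copied, the partial byte count is returned; if nothing was copied yet, the error is returned, with timeouts mapped to `WOULD_BLOCK`.

The sketch below models this in C++ with a hypothetical `FakeRing` that grants contiguous frames non-blockingly. `FakeRing`, `writeFrames` and `kWouldBlock` are illustrative names. The `mFramesWritten` bookkeeping and the callback-thread wake-up are left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

constexpr long kWouldBlock = -11;  // illustrative value only

// Hypothetical ring buffer standing in for the track's shared memory.
struct FakeRing {
    std::vector<unsigned char> storage;
    size_t frameSize;
    size_t framesFree;
    size_t writeFrame;  // next frame index to fill

    // Like a non-blocking obtainBuffer(): grant up to `wanted` contiguous frames.
    size_t obtain(size_t wanted, unsigned char** raw) {
        size_t capacityFrames = storage.size() / frameSize;
        size_t contiguous = capacityFrames - writeFrame;  // stop at the wrap point
        size_t n = std::min({wanted, framesFree, contiguous});
        *raw = storage.data() + writeFrame * frameSize;
        return n;
    }
    void release(size_t frames) {
        writeFrame = (writeFrame + frames) % (storage.size() / frameSize);
        framesFree -= frames;
    }
};

long writeFrames(FakeRing& ring, const void* buffer, size_t userSize) {
    size_t written = 0;
    while (userSize >= ring.frameSize) {   // a trailing partial frame is never copied
        unsigned char* raw = nullptr;
        size_t frames = ring.obtain(userSize / ring.frameSize, &raw);
        if (frames == 0) {                 // nothing available right now
            if (written > 0) break;        // report the partial write
            return kWouldBlock;
        }
        size_t toWrite = frames * ring.frameSize;
        std::memcpy(raw, buffer, toWrite);
        buffer = static_cast<const unsigned char*>(buffer) + toWrite;
        userSize -= toWrite;
        written += toWrite;
        ring.release(frames);
    }
    return static_cast<long>(written);
}
```

With 4-byte frames and room for 10 frames, a 42-byte write returns 40. The last 2 bytes are not a full frame and stay with the caller.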

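AudioTrack has two obtainBuffer overloads. write() passes a `const struct timespec *` (`&ClientProxy::kForever` or `&ClientProxy::kNonBlocking`), so overload resolution sends it straight to the timespec overload shown further down. The overload below takes a `waitCount` instead. As its `mTransfer != TRANSFER_OBTAIN` check shows, it serves only TRANSFER_OBTAIN mode, not the TRANSFER_SYNC modes that write() requires. It converts `waitCount` into a timespec and then delegates to the same timespec overload: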

```cpp
//frameworks/av/media/libaudioclient/AudioTrack.cpp
status_t AudioTrack::obtainBuffer(Buffer* audioBuffer, int32_t waitCount, size_t *nonContig)
{
    if (audioBuffer == NULL) {
        if (nonContig != NULL) {
            *nonContig = 0;
        }
        return BAD_VALUE;
    }
    if (mTransfer != TRANSFER_OBTAIN) {
        audioBuffer->frameCount = 0;
        audioBuffer->mSize = 0;
        audioBuffer->raw = NULL;
        if (nonContig != NULL) {
            *nonContig = 0;
        }
        return INVALID_OPERATION;
    }

    const struct timespec *requested;
    struct timespec timeout;
    if (waitCount == -1) {
        requested = &ClientProxy::kForever;
    } else if (waitCount == 0) {
        requested = &ClientProxy::kNonBlocking;
    } else if (waitCount > 0) {
        time_t ms = WAIT_PERIOD_MS * (time_t) waitCount;
        timeout.tv_sec = ms / 1000;
        timeout.tv_nsec = (ms % 1000) * 1000000;
        requested = &timeout;
    } else {
        ALOGE("%s(%d): invalid waitCount %d", __func__, mPortId, waitCount);
        requested = NULL;
    }
    return obtainBuffer(audioBuffer, requested, NULL /*elapsed*/, nonContig);
}
```
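The `waitCount` mapping works as follows:

- `-1` means wait forever.
- `0` means do not block.
- A positive count means `waitCount * WAIT_PERIOD_MS` milliseconds, split into seconds and nanoseconds.
- Anything below `-1` is logged as invalid.

The sketch below reproduces that mapping. It assumes `WAIT_PERIOD_MS` is 10; check AudioTrack.cpp for the value in your tree. `WaitKind`, `WaitSpec` and `waitCountToSpec` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <ctime>

// Assumption: WAIT_PERIOD_MS is 10 in the AudioTrack.cpp being studied.
constexpr int64_t kWaitPeriodMs = 10;

enum class WaitKind { Forever, NonBlocking, Timeout, Invalid };

struct WaitSpec {
    WaitKind kind;
    struct timespec timeout;  // meaningful only when kind == WaitKind::Timeout
};

WaitSpec waitCountToSpec(int32_t waitCount) {
    WaitSpec spec{};  // zero-initializes the timeout
    spec.kind = WaitKind::Invalid;
    if (waitCount == -1) {
        spec.kind = WaitKind::Forever;        // &ClientProxy::kForever
    } else if (waitCount == 0) {
        spec.kind = WaitKind::NonBlocking;    // &ClientProxy::kNonBlocking
    } else if (waitCount > 0) {
        int64_t ms = kWaitPeriodMs * waitCount;
        spec.kind = WaitKind::Timeout;
        spec.timeout.tv_sec = static_cast<time_t>(ms / 1000);
        spec.timeout.tv_nsec = static_cast<long>((ms % 1000) * 1000000);
    }
    // waitCount < -1 stays Invalid (the real code logs an error and passes NULL)
    return spec;
}
```

Under this assumption, `waitCount = 150` becomes 1500 ms, that is `tv_sec = 1` and `tv_nsec = 500000000`.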

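Both paths end in the timespec overload of obtainBuffer. It delegates the actual buffer management to `mProxy`, which is declared as:

```cpp
sp<AudioTrackClientProxy> mProxy;  // primary owner of the memory
```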

```cpp
//frameworks/av/media/libaudioclient/AudioTrack.cpp
status_t AudioTrack::obtainBuffer(Buffer* audioBuffer, const struct timespec *requested,
        struct timespec *elapsed, size_t *nonContig)
{
    // previous and new IAudioTrack sequence numbers are used to detect track re-creation
    uint32_t oldSequence = 0;

    Proxy::Buffer buffer;
    status_t status = NO_ERROR;

    static const int32_t kMaxTries = 5;
    int32_t tryCounter = kMaxTries;

    do {
        // obtainBuffer() is called with mutex unlocked, so keep extra references to these fields to
        // keep them from going away if another thread re-creates the track during obtainBuffer()
        sp<AudioTrackClientProxy> proxy;
        sp<IMemory> iMem;

        {   // start of lock scope
            AutoMutex lock(mLock);

            uint32_t newSequence = mSequence;
            // did previous obtainBuffer() fail due to media server death or voluntary invalidation?
            if (status == DEAD_OBJECT) {
                // re-create track, unless someone else has already done so
                if (newSequence == oldSequence) {
                    status = restoreTrack_l("obtainBuffer");
                    if (status != NO_ERROR) {
                        buffer.mFrameCount = 0;
                        buffer.mRaw = NULL;
                        buffer.mNonContig = 0;
                        break;
                    }
                }
            }
            oldSequence = newSequence;

            if (status == NOT_ENOUGH_DATA) {
                restartIfDisabled();
            }

            // Keep the extra references
            proxy = mProxy;
            iMem = mCblkMemory;

            if (mState == STATE_STOPPING) {
                status = -EINTR;
                buffer.mFrameCount = 0;
                buffer.mRaw = NULL;
                buffer.mNonContig = 0;
                break;
            }

            // Non-blocking if track is stopped or paused
            if (mState != STATE_ACTIVE) {
                requested = &ClientProxy::kNonBlocking;
            }

        }   // end of lock scope

        buffer.mFrameCount = audioBuffer->frameCount;
        // FIXME starts the requested timeout and elapsed over from scratch
        status = proxy->obtainBuffer(&buffer, requested, elapsed);
    } while (((status == DEAD_OBJECT) || (status == NOT_ENOUGH_DATA)) && (tryCounter-- > 0));

    audioBuffer->frameCount = buffer.mFrameCount;
    audioBuffer->mSize = buffer.mFrameCount * mFrameSize;
    audioBuffer->raw = buffer.mRaw;
    audioBuffer->sequence = oldSequence;
    if (nonContig != NULL) {
        *nonContig = buffer.mNonContig;
    }
    return status;
}
```
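The retry condition `tryCounter-- > 0` with `kMaxTries = 5` means the loop body can run six times: one initial call plus five retries. A retry happens only while the proxy keeps returning `DEAD_OBJECT` or `NOT_ENOUGH_DATA`. Before every call except the first, a preceding `DEAD_OBJECT` triggers a restore. The C++ sketch below counts those calls with an injected proxy function. `Status`, `RetryResult` and `obtainWithRetry` are hypothetical. The single-threaded sketch always sees `newSequence == oldSequence`, so it never skips a restore. It also omits breaking out when `restoreTrack_l` fails.

```cpp
#include <cassert>
#include <functional>

// Illustrative status values, not Android's status_t codes.
enum class Status { Ok, DeadObject, NotEnoughData, WouldBlock };

struct RetryResult {
    Status status;
    int attempts;  // how many times proxy->obtainBuffer() was called
    int restores;  // how many times restoreTrack_l() would have run
};

RetryResult obtainWithRetry(const std::function<Status(int attempt)>& proxyObtain) {
    static const int kMaxTries = 5;
    int tryCounter = kMaxTries;
    Status status = Status::Ok;
    int attempts = 0;
    int restores = 0;
    do {
        if (status == Status::DeadObject) {
            ++restores;  // real code: restoreTrack_l(), unless another thread already re-created the track
        }
        status = proxyObtain(attempts++);
    } while ((status == Status::DeadObject || status == Status::NotEnoughData) &&
             (tryCounter-- > 0));
    return {status, attempts, restores};
}
```

A proxy that always reports a dead object is therefore called six times, with five restore attempts in between.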

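restoreTrack_l runs when the previous attempt returned DEAD_OBJECT, meaning the media server died or the track was invalidated, and no other thread has re-created the track in the meantime. It talks to the server through `mAudioTrack`, declared as:

```cpp
sp<media::IAudioTrack> mAudioTrack;
```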

```cpp
//frameworks/av/media/libaudioclient/AudioTrack.cpp
status_t AudioTrack::restoreTrack_l(const char *from)
{
    status_t result = NO_ERROR;  // logged: make sure to set this before returning.
    const int64_t beginNs = systemTime();
    mediametrics::Defer defer([&] {
        mediametrics::LogItem(mMetricsId)
            .set(AMEDIAMETRICS_PROP_EVENT, AMEDIAMETRICS_PROP_EVENT_VALUE_RESTORE)
            .set(AMEDIAMETRICS_PROP_EXECUTIONTIMENS, (int64_t)(systemTime() - beginNs))
            .set(AMEDIAMETRICS_PROP_STATE, stateToString(mState))
            .set(AMEDIAMETRICS_PROP_STATUS, (int32_t)result)
            .set(AMEDIAMETRICS_PROP_WHERE, from)
            .record(); });

    ALOGW("%s(%d): dead IAudioTrack, %s, creating a new one from %s()",
            __func__, mPortId, isOffloadedOrDirect_l() ? "Offloaded or Direct" : "PCM", from);
    ++mSequence;

    // refresh the audio configuration cache in this process to make sure we get new
    // output parameters and new IAudioFlinger in createTrack_l()
    AudioSystem::clearAudioConfigCache();

    if (isOffloadedOrDirect_l() || mDoNotReconnect) {
        // FIXME re-creation of offloaded and direct tracks is not yet implemented;
        // reconsider enabling for linear PCM encodings when position can be preserved.
        result = DEAD_OBJECT;
        return result;
    }

    // Save so we can return count since creation.
    mUnderrunCountOffset = getUnderrunCount_l();

    // save the old static buffer position
    uint32_t staticPosition = 0;
    size_t bufferPosition = 0;
    int loopCount = 0;
    if (mStaticProxy != 0) {
        mStaticProxy->getBufferPositionAndLoopCount(&bufferPosition, &loopCount);
        staticPosition = mStaticProxy->getPosition().unsignedValue();
    }

    // save the old startThreshold and framecount
    const uint32_t originalStartThresholdInFrames = mProxy->getStartThresholdInFrames();
    const uint32_t originalFrameCount = mProxy->frameCount();

    // See b/74409267. Connecting to a BT A2DP device supporting multiple codecs
    // causes a lot of churn on the service side, and it can reject starting
    // playback of a previously created track. May also apply to other cases.
    const int INITIAL_RETRIES = 3;
    int retries = INITIAL_RETRIES;
retry:
    if (retries < INITIAL_RETRIES) {
        // See the comment for clearAudioConfigCache at the start of the function.
        AudioSystem::clearAudioConfigCache();
    }
    mFlags = mOrigFlags;

    // If a new IAudioTrack is successfully created, createTrack_l() will modify the
    // following member variables: mAudioTrack, mCblkMemory and mCblk.
    // It will also delete the strong references on previous IAudioTrack and IMemory.
    // If a new IAudioTrack cannot be created, the previous (dead) instance will be left intact.
    result = createTrack_l();

    if (result == NO_ERROR) {
        // take the frames that will be lost by track recreation into account in saved position
        // For streaming tracks, this is the amount we obtained from the user/client
        // (not the number actually consumed at the server - those are already lost).
        if (mStaticProxy == 0) {
            mPosition = mReleased;
        }
        // Continue playback from last known position and restore loop.
        if (mStaticProxy != 0) {
            if (loopCount != 0) {
                mStaticProxy->setBufferPositionAndLoop(bufferPosition,
                        mLoopStart, mLoopEnd, loopCount);
            } else {
                mStaticProxy->setBufferPosition(bufferPosition);
                if (bufferPosition == mFrameCount) {
                    ALOGD("%s(%d): restoring track at end of static buffer", __func__, mPortId);
                }
            }
        }
        // restore volume handler
        mVolumeHandler->forall([this](const VolumeShaper &shaper) -> VolumeShaper::Status {
            sp<VolumeShaper::Operation> operationToEnd =
                    new VolumeShaper::Operation(shaper.mOperation);
            // TODO: Ideally we would restore to the exact xOffset position
            // as returned by getVolumeShaperState(), but we don't have that
            // information when restoring at the client unless we periodically poll
            // the server or create shared memory state.
            //
            // For now, we simply advance to the end of the VolumeShaper effect
            // if it has been started.
            if (shaper.isStarted()) {
                operationToEnd->setNormalizedTime(1.f);
            }
            media::VolumeShaperConfiguration config;
            shaper.mConfiguration->writeToParcelable(&config);
            media::VolumeShaperOperation operation;
            operationToEnd->writeToParcelable(&operation);
            status_t status;
            mAudioTrack->applyVolumeShaper(config, operation, &status);
            return status;
        });

        // restore the original start threshold if different than frameCount.
        if (originalStartThresholdInFrames != originalFrameCount) {
            // Note: mProxy->setStartThresholdInFrames() call is in the Proxy
            // and does not trigger a restart.
            // (Also CBLK_DISABLED is not set, buffers are empty after track recreation).
            // Any start would be triggered on the mState == ACTIVE check below.
            const uint32_t currentThreshold =
                    mProxy->setStartThresholdInFrames(originalStartThresholdInFrames);
            ALOGD_IF(originalStartThresholdInFrames != currentThreshold,
                    "%s(%d) startThresholdInFrames changing from %u to %u",
                    __func__, mPortId, originalStartThresholdInFrames, currentThreshold);
        }
        if (mState == STATE_ACTIVE) {
            mAudioTrack->start(&result);
        }
        // server resets to zero so we offset
        mFramesWrittenServerOffset =
                mStaticProxy.get() != nullptr ? staticPosition : mFramesWritten;
        mFramesWrittenAtRestore = mFramesWrittenServerOffset;
    }
    if (result != NO_ERROR) {
        ALOGW("%s(%d): failed status %d, retries %d", __func__, mPortId, result, retries);
        if (--retries > 0) {
            // leave time for an eventual race condition to clear before retrying
            usleep(500000);
            goto retry;
        }
        // if no retries left, set invalid bit to force restoring at next occasion
        // and avoid inconsistent active state on client and server sides
        if (mCblk != nullptr) {
            android_atomic_or(CBLK_INVALID, &mCblk->mFlags);
        }
    }
    return result;
}
```
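The `goto retry` structure gives at most three attempts, with `INITIAL_RETRIES = 3` and `--retries > 0` as the test, and a 500 ms sleep between attempts, so two sleeps in the worst case. If every attempt fails, `CBLK_INVALID` is set so that the next operation tries to restore again. Offloaded or direct tracks, and tracks with `mDoNotReconnect`, are not re-created at all. The sketch below rewrites that policy as a loop with injected callbacks so it can run without AudioFlinger. `RestoreOutcome`, `restoreWithRetries`, `createTrack` and `sleepUs` are hypothetical names.

```cpp
#include <cassert>
#include <functional>

struct RestoreOutcome {
    bool ok;
    int createAttempts;
    int sleeps;
    bool markedInvalid;  // stands in for setting CBLK_INVALID in mCblk->mFlags
};

// Loop form of the `goto retry` logic in restoreTrack_l().
RestoreOutcome restoreWithRetries(bool offloadedOrDirect, bool doNotReconnect,
                                  const std::function<bool()>& createTrack,
                                  const std::function<void(unsigned)>& sleepUs) {
    RestoreOutcome out{false, 0, 0, false};
    if (offloadedOrDirect || doNotReconnect) {
        return out;  // real code: result = DEAD_OBJECT, no re-creation
    }
    const int kInitialRetries = 3;
    int retries = kInitialRetries;
    for (;;) {
        ++out.createAttempts;
        if (createTrack()) {  // createTrack_l() (and start() if ACTIVE) succeeded
            out.ok = true;
            return out;
        }
        if (--retries > 0) {
            sleepUs(500000);  // usleep(500000) before the next attempt
            ++out.sleeps;
            continue;
        }
        out.markedInvalid = true;  // force another restore at the next occasion
        return out;
    }
}
```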

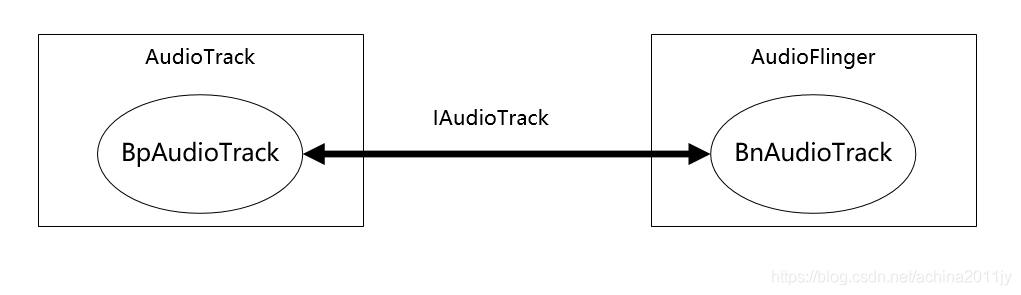

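The key call here is `mAudioTrack->start(&result);`. `mAudioTrack` is declared as `sp<media::IAudioTrack> mAudioTrack;`, a Binder proxy for the IAudioTrack AIDL interface. On the AudioFlinger side that interface is implemented by TrackHandle, a subclass of the generated BnAudioTrack stub. `mAudioTrack->start()` is therefore an IPC call that ends up in TrackHandle's start().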

```aidl
//frameworks/av/media/libaudioclient/IAudioTrack.aidl
interface IAudioTrack {
    ......
    int start();
}
```
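In the AIDL C++ backend, a method declared as `int start();` is generated with a different shape. It returns a `binder::Status` that describes the transaction itself, and it delivers the `int` through an out-parameter, `int32_t* _aidl_return`. That is why restoreTrack_l passes `&result` instead of assigning a return value.

The mock below imitates that shape without Binder so the data flow can be followed. `MockStatus`, `IAudioTrackLike`, `FakeTrackHandle` and `restartIfActive` are all hypothetical. `FakeTrackHandle` only plays the role of AudioFlinger's TrackHandle.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for android::binder::Status: only "did the call go through".
struct MockStatus {
    bool transportOk;
    bool isOk() const { return transportOk; }
};

// Shape of the generated C++ interface for `int start();` (mocked, no Binder).
struct IAudioTrackLike {
    virtual ~IAudioTrackLike() = default;
    virtual MockStatus start(int32_t* _aidl_return) = 0;
};

// Plays the role of the server-side implementation (TrackHandle in AudioFlinger).
struct FakeTrackHandle : IAudioTrackLike {
    int32_t startResult = 0;  // value the server-side start() reports (0 = success)
    int startCalls = 0;
    MockStatus start(int32_t* _aidl_return) override {
        ++startCalls;
        *_aidl_return = startResult;
        return MockStatus{true};
    }
};

// Client side, as in restoreTrack_l(): the interesting value comes back
// through the pointer; the returned transport status is ignored, as in the quoted code.
int32_t restartIfActive(IAudioTrackLike& track, bool active) {
    int32_t result = 0;
    if (active) {
        track.start(&result);
    }
    return result;
}
```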

AudioFlinger TrackHandle start

From here on, the work happens inside AudioFlinger:
