>>>runfile('D:/python/novelspider/main.py', wdir='D:/python/novelspider')

Reloaded modules: novelspider.items, novelspider, novelspider.spiders, novelspider.settings, novelspider.spiders.novspider

2015-09-15 22:06:26 [scrapy] INFO: Scrapy 1.0.1 started (bot: novelspider)

2015-09-15 22:06:26 [scrapy] INFO: Optional features available: ssl, http11, boto

2015-09-15 22:06:26 [scrapy] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'novelspider.spiders', 'SPIDER_MODULES': ['novelspider.spiders'], 'USER_AGENT': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36', 'BOT_NAME': 'novelspider'}

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "D:\anzhuang\Anaconda\lib\site-packages\spyderlib\widgets\externalshell\sitecustomize.py", line 682, in runfile

execfile(filename, namespace)

File "D:\anzhuang\Anaconda\lib\site-packages\spyderlib\widgets\externalshell\sitecustomize.py", line 71, in execfile

exec(compile(scripttext, filename, 'exec'), glob, loc)

File "D:/python/novelspider/main.py", line 10, in <module>

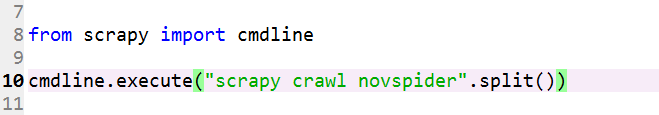

cmdline.execute("scrapy crawl novelspider".split())

File "D:\anzhuang\Anaconda\lib\site-packages\scrapy\cmdline.py", line 143, in execute

_run_print_help(parser, _run_command, cmd, args, opts)

File "D:\anzhuang\Anaconda\lib\site-packages\scrapy\cmdline.py", line 89, in _run_print_help

func(*a, **kw)

File "D:\anzhuang\Anaconda\lib\site-packages\scrapy\cmdline.py", line 150, in _run_command

cmd.run(args, opts)

File "D:\anzhuang\Anaconda\lib\site-packages\scrapy\commands\crawl.py", line 57, in run

self.crawler_process.crawl(spname, **opts.spargs)

File "D:\anzhuang\Anaconda\lib\site-packages\scrapy\crawler.py", line 150, in crawl

crawler = self._create_crawler(crawler_or_spidercls)

File "D:\anzhuang\Anaconda\lib\site-packages\scrapy\crawler.py", line 165, in _create_crawler

spidercls = self.spider_loader.load(spidercls)

File "D:\anzhuang\Anaconda\lib\site-packages\scrapy\spiderloader.py", line 40, in load

raise KeyError("Spider not found: {}".format(spider_name))

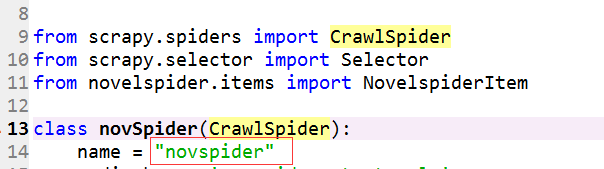

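KeyError: 'Spider not found: novelspider'

Cause: the name passed to scrapy crawl must be the spider's name, not the project's name. Here novelspider is the project (bot) name, and no spider is registered under that name.

Pay attention to which name you use when running the scrapy command.

Solution: the xxxx in scrapy crawl xxxx must match the name attribute defined in the spider's .py file under the spiders directory.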
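To see which names scrapy crawl will accept, you can run scrapy list inside the project, or inspect the spider files directly. The sketch below is a hypothetical helper, not part of Scrapy: it assumes Python 3.8+ and that each spider declares name as a plain string literal in its class body. The function name find_spider_names is made up for illustration.

```python
import ast
from pathlib import Path


def find_spider_names(spiders_dir):
    """Map each class-level `name = "..."` string to the file that declares it.

    Hypothetical helper: it only parses the source text, it never imports
    the spider modules, so Scrapy does not need to be installed.
    """
    names = {}
    for path in sorted(Path(spiders_dir).glob("*.py")):
        tree = ast.parse(path.read_text(encoding="utf-8"))
        for node in ast.walk(tree):
            if not isinstance(node, ast.ClassDef):
                continue
            for stmt in node.body:
                is_name_assignment = isinstance(stmt, ast.Assign) and any(
                    isinstance(target, ast.Name) and target.id == "name"
                    for target in stmt.targets
                )
                if (
                    is_name_assignment
                    and isinstance(stmt.value, ast.Constant)
                    and isinstance(stmt.value.value, str)
                ):
                    names[stmt.value.value] = path.name
    return names
```

For example, calling find_spider_names("novelspider/spiders") from the project root (the relative path is an assumption about the layout) returns a dictionary whose keys are the only values that should follow scrapy crawl, both on the command line and in the cmdline.execute(...) call in main.py.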
