1 Problem description

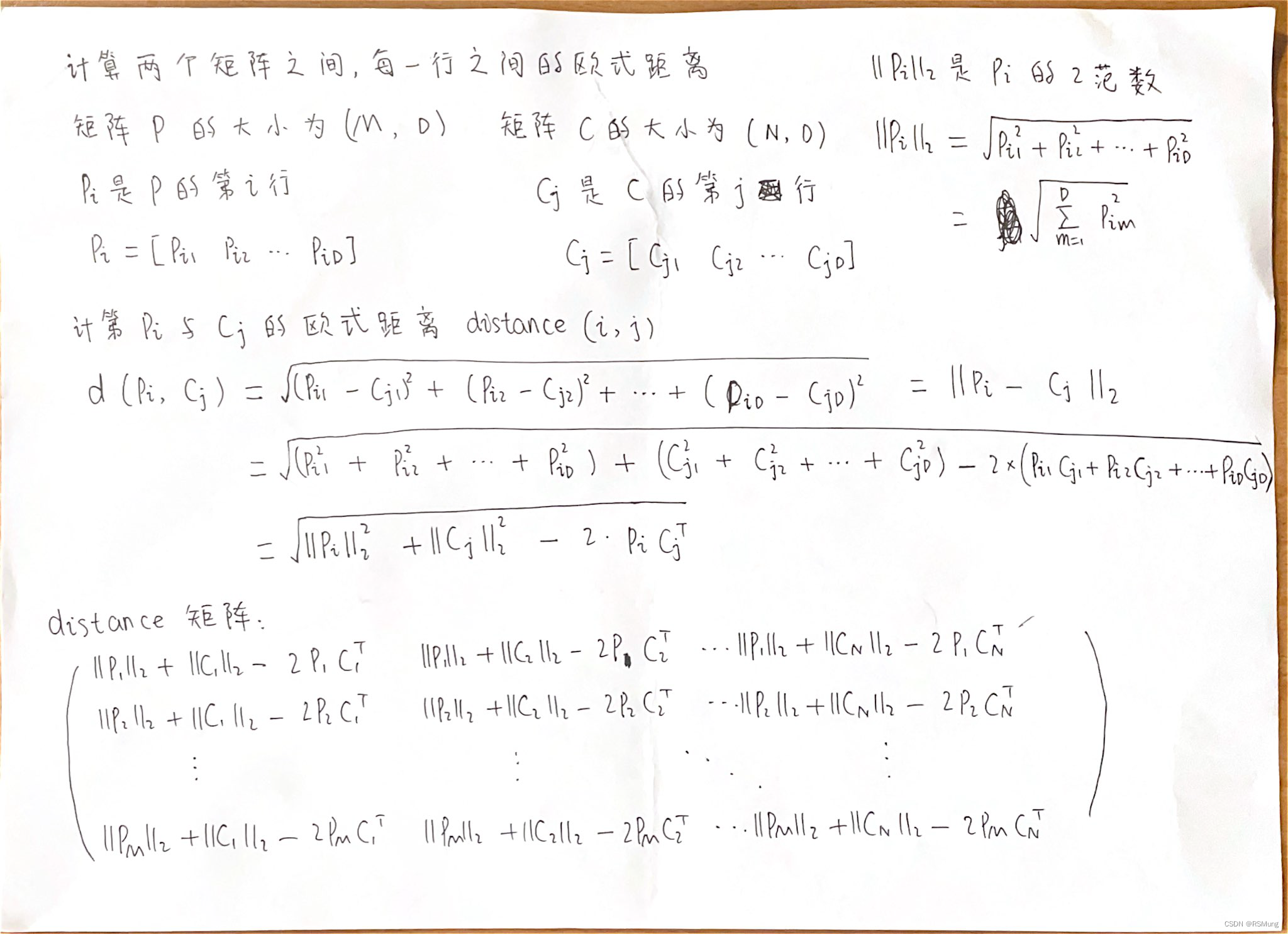

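Matrix P has shape [m, d]; its rows are the vectors P1, P2, ..., Pm.

Matrix C has shape [n, d]; its rows are the vectors C1, C2, ..., Cn.

The goal is to compute the Euclidean distance between every row of P and every row of C, which gives an [m, n] matrix whose entry (i, j) is the distance between Pi and Cj.

A typical case is the KNN algorithm applied to 2-D points, where the Euclidean distance between every pair of points is needed.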
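To make the expected output concrete, here is a minimal sketch on toy data. The values of `P` and `C` are made up for illustration, and `torch.cdist` is PyTorch's built-in pairwise-distance function, used here only as a reference; it is not one of the methods developed in this post.

```python
import torch

# Toy inputs (hypothetical values): P has m=2 rows, C has n=3 rows, d=2.
P = torch.tensor([[0.0, 0.0], [3.0, 4.0]])
C = torch.tensor([[0.0, 0.0], [3.0, 0.0], [0.0, 4.0]])

# torch.cdist returns the [m, n] matrix of pairwise p-norm distances;
# p=2 selects the Euclidean distance.
D = torch.cdist(P, C, p=2)

print(D.shape)  # torch.Size([2, 3])
print(D)        # row i holds the distances from P[i] to every row of C
```

Entry `D[1, 0]` is the distance between (3, 4) and (0, 0), a 3-4-5 triangle, so it should come out as 5.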

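2 Solution 1: two nested loops

Use two nested loops to compute the Euclidean distance between the i-th row of P and the j-th row of C, one pair at a time.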

def l2distanceForMatrix_2loop(a, b):
    time1 = time.time()
    # Two nested loops: distance between every row of a and every row of b
    num_a = a.shape[0]
    num_b = b.shape[0]
    print(f"Rows in matrix a: {num_a}, rows in matrix b: {num_b}")
    distance = torch.zeros((num_a, num_b))
    for i in range(num_a):
        for j in range(num_b):
            # Subtract element-wise, square, sum, then take the square root.
            # Euclidean distance between (x1, y1) and (x2, y2):
            # sqrt( (x1-x2)^2 + (y1-y2)^2 )
            distance[i][j] = torch.sqrt(
                torch.sum(
                    torch.square(a[i] - b[j])
                )
            )
    time2 = time.time()
    print(f"Elapsed time: {time2 - time1}")
    print(distance)
    return distance

3 Solution 2: one loop

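Loop over the rows of P only and, for each row Pi, compute its Euclidean distance to all rows of C at once. The expression a[i] - b broadcasts the [d] vector against the [n, d] matrix, so each iteration fills one full row of the result.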

def l2distanceForMatrix_1loop(a, b):

time1 = time.time()

# 1层循环计算两个矩阵中每个样本之间的距离

num_a = a.shape[0]

num_b = b.shape[0]

distance = torch.zeros((num_a, num_b))

for i in range(num_a):

# 矩阵a中第i个样本与矩阵b中的样本的欧式距离

# temp = torch.square(a[i] - b)

# print(temp.shape)

# print(temp)

# temp = torch.sum(temp, dim=1)

# print(temp)

# temp = torch.sqrt(temp)

# print(temp)

# distance[i] = temp

distance[i] = torch.sqrt(

torch.sum(

torch.square(a[i] - b),

dim=1

)

)

time2 = time.time()

print(f"花费时间:{time2 - time1}")

print(distance)

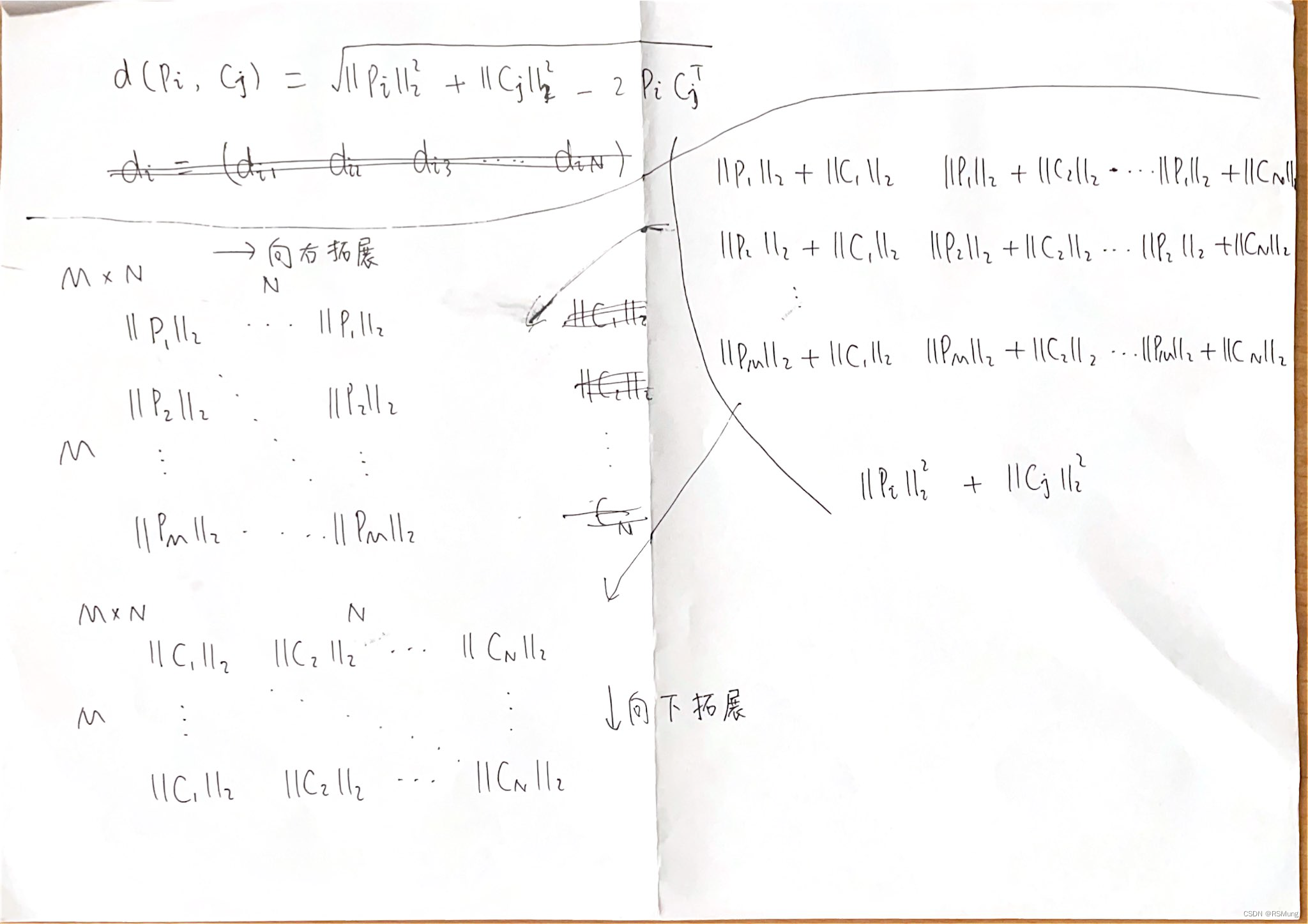

return distance4 解决办法3——没有循环,使用矩阵运算
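The matrix form rests on expanding the squared distance. The derivation below is a sketch added for clarity; $D$ denotes the [m, n] result matrix, a symbol introduced here rather than taken from the original text.

$$
\lVert P_i - C_j \rVert^2 = \sum_{k=1}^{d} (P_{ik} - C_{jk})^2 = \lVert P_i \rVert^2 + \lVert C_j \rVert^2 - 2\, P_i C_j^{\top}
$$

$$
D_{ij} = \sqrt{\lVert P_i \rVert^2 + \lVert C_j \rVert^2 - 2\,(P C^{\top})_{ij}}
$$

In the code of this section, `aa` holds the first term repeated across the n columns, `bb` holds the second term repeated across the m rows, and `tail` is the third term $2 P C^{\top}$.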


4.1 Version 1

def l2distanceForMatrix(a, b):
    time1 = time.time()
    # Compute all pairwise Euclidean distances with matrix operations
    m = a.shape[0]
    n = b.shape[0]
    # Square every element of a
    aa = torch.pow(a, 2)  # [m, d]
    # Sum along each row, keeping the number of dimensions
    aa = torch.sum(aa, dim=1, keepdim=True)  # [m, 1]
    # Expand aa from [m, 1] to [m, n]
    aa = aa.expand(m, n)  # [m, n]
    # Same treatment for b
    bb = torch.pow(b, 2).sum(dim=1, keepdim=True).expand(n, m)  # [n, m]
    bb = torch.transpose(bb, 0, 1)  # [m, n]
    # Third term: [m, d] x [d, n] = [m, n]
    tail = 2 * torch.matmul(a, torch.transpose(b, 0, 1))
    # Final result; clamp at 0 because rounding error can make the
    # squared distance slightly negative, and sqrt would then return NaN
    distance = torch.sqrt(torch.clamp(aa + bb - tail, min=0))
    time2 = time.time()
    print(f"Elapsed time: {time2 - time1}")
    print(distance)
    return distance

4.2 Version 2

def l2distanceForMatrix2(a, b):
    print("Method l2distanceForMatrix2")
    time1 = time.time()
    m = a.shape[0]
    n = b.shape[0]
    # Compute a * a^T and b * b^T
    matrix_a = torch.matmul(a, torch.transpose(a, 0, 1))  # [m, m]
    matrix_b = torch.matmul(b, torch.transpose(b, 0, 1))  # [n, n]
    # The main diagonal of matrix_a holds the squared L2 norm of each row of a
    diag_a = torch.diag(matrix_a)  # [m]
    # Add a dimension, then expand
    aa = diag_a.unsqueeze(1)  # [m, 1]
    aa = aa.expand(m, n)  # [m, n]
    # Same treatment for b
    diag_b = torch.diag(matrix_b)  # [n]
    diag_b = diag_b.unsqueeze(1)  # [n, 1]
    # Expand
    bb = diag_b.expand(n, m)  # [n, m]
    # Transpose
    bb = torch.transpose(bb, 0, 1)  # [m, n]
    # Third term: [m, d] x [d, n] = [m, n]
    tail = 2 * torch.matmul(a, torch.transpose(b, 0, 1))
    # Final result; clamp at 0 because rounding error can make the
    # squared distance slightly negative, and sqrt would then return NaN
    distance = torch.sqrt(torch.clamp(aa + bb - tail, min=0))
    time2 = time.time()
    print(f"Elapsed time: {time2 - time1}")
    print(distance)
    return distance

5 Test code

import time

import torch

def l2distanceForMatrix_2loop(a, b):
    time1 = time.time()
    # Two nested loops: distance between every row of a and every row of b
    num_a = a.shape[0]
    num_b = b.shape[0]
    print(f"Rows in matrix a: {num_a}, rows in matrix b: {num_b}")
    distance = torch.zeros((num_a, num_b))
    for i in range(num_a):
        for j in range(num_b):
            # Subtract element-wise, square, sum, then take the square root.
            # Euclidean distance between (x1, y1) and (x2, y2):
            # sqrt( (x1-x2)^2 + (y1-y2)^2 )
            distance[i][j] = torch.sqrt(
                torch.sum(
                    torch.square(a[i] - b[j])
                )
            )
    time2 = time.time()
    print(f"Elapsed time: {time2 - time1}")
    print(distance)
    return distance

def l2distanceForMatrix_1loop(a, b):
    time1 = time.time()
    # One loop: distance between every row of a and every row of b
    num_a = a.shape[0]
    num_b = b.shape[0]
    distance = torch.zeros((num_a, num_b))
    for i in range(num_a):
        # Distance between the i-th row of a and all rows of b.
        # Step-by-step equivalent of the expression below:
        # temp = torch.square(a[i] - b)   # [n, d]
        # temp = torch.sum(temp, dim=1)   # [n]
        # temp = torch.sqrt(temp)         # [n]
        # distance[i] = temp
        distance[i] = torch.sqrt(
            torch.sum(
                torch.square(a[i] - b),
                dim=1
            )
        )
    time2 = time.time()
    print(f"Elapsed time: {time2 - time1}")
    print(distance)
    return distance

def l2distanceForMatrix(a, b):
    print("Method l2distanceForMatrix")
    time1 = time.time()
    # Compute all pairwise Euclidean distances with matrix operations
    m = a.shape[0]
    n = b.shape[0]
    # Square every element of a
    aa = torch.pow(a, 2)  # [m, d]
    # Sum along each row, keeping the number of dimensions
    aa = torch.sum(aa, dim=1, keepdim=True)  # [m, 1]
    # Expand aa from [m, 1] to [m, n]
    aa = aa.expand(m, n)  # [m, n]
    # Same treatment for b
    bb = torch.pow(b, 2).sum(dim=1, keepdim=True).expand(n, m)  # [n, m]
    bb = torch.transpose(bb, 0, 1)  # [m, n]
    # Third term: [m, d] x [d, n] = [m, n]
    tail = 2 * torch.matmul(a, torch.transpose(b, 0, 1))
    # Final result; clamp at 0 because rounding error can make the
    # squared distance slightly negative, and sqrt would then return NaN
    distance = torch.sqrt(torch.clamp(aa + bb - tail, min=0))
    time2 = time.time()
    print(f"Elapsed time: {time2 - time1}")
    print(distance)
    return distance

def l2distanceForMatrix2(a, b):
    print("Method l2distanceForMatrix2")
    time1 = time.time()
    m = a.shape[0]
    n = b.shape[0]
    # Compute a * a^T and b * b^T
    matrix_a = torch.matmul(a, torch.transpose(a, 0, 1))  # [m, m]
    matrix_b = torch.matmul(b, torch.transpose(b, 0, 1))  # [n, n]
    # The main diagonal of matrix_a holds the squared L2 norm of each row of a
    diag_a = torch.diag(matrix_a)  # [m]
    # Add a dimension, then expand
    aa = diag_a.unsqueeze(1)  # [m, 1]
    aa = aa.expand(m, n)  # [m, n]
    # Same treatment for b
    diag_b = torch.diag(matrix_b)  # [n]
    diag_b = diag_b.unsqueeze(1)  # [n, 1]
    # Expand
    bb = diag_b.expand(n, m)  # [n, m]
    # Transpose
    bb = torch.transpose(bb, 0, 1)  # [m, n]
    # Third term: [m, d] x [d, n] = [m, n]
    tail = 2 * torch.matmul(a, torch.transpose(b, 0, 1))
    # Final result; clamp at 0 because rounding error can make the
    # squared distance slightly negative, and sqrt would then return NaN
    distance = torch.sqrt(torch.clamp(aa + bb - tail, min=0))
    time2 = time.time()
    print(f"Elapsed time: {time2 - time1}")
    print(distance)
    return distance

def main():
    # a = torch.randn((600, 2))
    # b = torch.randn((600, 2))
    a = torch.tensor(
        [
            [1, 2],
            [3, 4],
            [5, 6],
            [7, 8],
            [9, 10]
        ]
    ).float()
    b = torch.tensor(
        [
            [3, 4],
            [5, 6],
            [7, 8],
            [9, 10]
        ]
    ).float()
    l2distanceForMatrix_2loop(a, b)
    l2distanceForMatrix_1loop(a, b)
    l2distanceForMatrix(a, b)
    l2distanceForMatrix2(a, b)

if __name__ == "__main__":
    main()
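As a possible further simplification, the loop-free approach can lean on broadcasting instead of explicit `expand` and `transpose` calls. The sketch below is not part of the original code: `pairwise_l2_broadcast` is a hypothetical helper name, and `torch.cdist` is used only as a built-in reference to check the result on the same test data.

```python
import torch


def pairwise_l2_broadcast(a, b):
    """Hypothetical helper: pairwise Euclidean distances for a [m, d] and b [n, d]."""
    aa = (a * a).sum(dim=1, keepdim=True)   # [m, 1] squared row norms of a
    bb = (b * b).sum(dim=1).unsqueeze(0)    # [1, n] squared row norms of b
    # [m, 1] + [1, n] - [m, n] broadcasts to [m, n]
    sq = aa + bb - 2.0 * (a @ b.T)
    # Rounding error can make entries slightly negative; clamp before sqrt
    return torch.sqrt(torch.clamp(sq, min=0.0))


# Same test data as in main() above
a = torch.tensor([[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]]).float()
b = torch.tensor([[3, 4], [5, 6], [7, 8], [9, 10]]).float()

dist = pairwise_l2_broadcast(a, b)
reference = torch.cdist(a, b, p=2)  # built-in reference, not from the original post

print(dist.shape)
print(torch.allclose(dist, reference, atol=1e-4))
```

Broadcasting avoids spelling out the [m, n] expansions by hand, and comparing against `torch.cdist` with `torch.allclose` is a quick way to confirm that any of the four functions above returns the same distances.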
