看到一个帖子,关于“获取中药药名,进行词频统计分析,从而为设置花名,寻找一些灵感”,故实操一番。

基于 requests, beautifulsoup, re 三个库,抓取并解析匹配网页内容,最终保存为 json 格式的文件。主要调用 crawl() 函数。

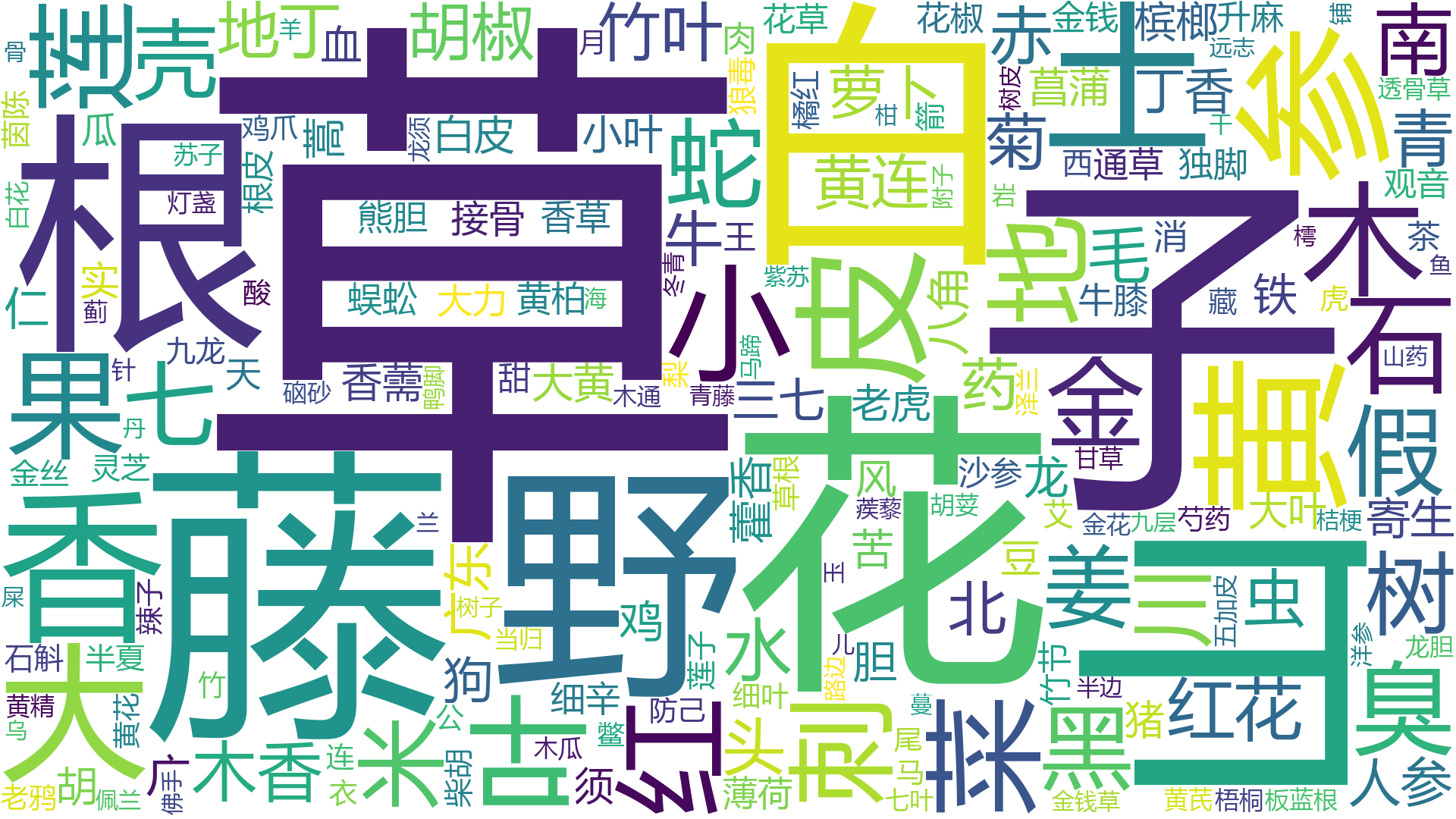

基于 jieba, wordcount 两个库,对中文药名进行分词并统计词频,生成词云,主要基于 analysis() 函数。

所得中药信息的 json 部分数据如下:

{

"半夏": {

"pinyin": "banxia",

"english": "Pinelliae Rhizoma",

"alias": [

"三叶半夏",

"三步跳",

"麻芋子",

"水芋",

"地巴豆"

]

},

"鱼胆草": {

"pinyin": "yudancao",

"english": "Herba Swertiae Davidi",

"alias": [

"金盆",

"青鱼胆草",

"水灵芝",

"水黄连"

]

},

"路路通": {

"pinyin": "",

"english": "Liquidambaris Fructus",

"alias": [

"枫实",

"枫木上球",

"枫香果",

"枫果",

"枫树球",

"狼眼",

"九空子",

"狼目",

"聂子"

]

},

...,

"小叶莲": {

"pinyin": "xiaoyelian",

"english": "Sinopodophmlli Fructus",

"alias": [

"鸡素苔",

"铜筷子",

"桃耳七"

]

},

"紫玉簪": {

"pinyin": "ziyuzan",

"english": "Flower Of Blue Plantainlily",

"alias": [

"玉泡花",

"紫萼"

]

},

"广东络石藤": {

"pinyin": "guangdongluoshiteng",

"english": "Creeping Psychotria",

"alias": [

"穿根藤",

"松筋藤",

"风不动藤"

]

}

}所得词云如下:

完整代码如下:

import json

import requests

import time

import random

from bs4 import BeautifulSoup

import re

import jieba

import wordcloud

def crawl():

# http://www.zhongyoo.com/name/

# http://www.zhongyoo.com/name/page_45.html

medicine_alias_englishname = {}

header = {

"Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",

"Accept-Encoding": "gzip, deflate",

"Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8",

"Cache-Control": "max-age=0",

"Cookie": "Hm_lvt_f9eb7a07918590a54f0fa333419bae7e=1675998824; Hm_lpvt_f9eb7a07918590a54f0fa333419bae7e=1675999021",

"Host": "www.zhongyoo.com",

"If-Modified-Since": "Tue, 23 Aug 2022 13:47:39 GMT",

"If-None-Match": "946d9-6068-5e6e8cebf54c0",

"Proxy-Connection": "keep-alive",

"Referer": "http://www.zhongyoo.com",

"Upgrade-Insecure-Requests": "1",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",

}

root_url = "http://www.zhongyoo.com/name/"

for p in range(1, 46):

if p == 1:

url = root_url

else:

url = f"{root_url}page_{p}.html"

header["Referer"] = f"{root_url}page_{p - 1}.html"

res = requests.get(url, headers=header)

if res.status_code == 200:

res.encoding = "gbk"

soup = BeautifulSoup(res.text, 'html.parser')

names = soup.select("div.sp > strong > a")

cnt = 0

for n in names:

medicine = n.text

href = n.attrs["href"]

sub_header = header

sub_header["Referer"] = url

sub_res = requests.get(href, headers=sub_header)

if sub_res.status_code == 200:

sub_res.encoding = "gbk"

sub_soup = BeautifulSoup(sub_res.text, 'html.parser')

sub_info = sub_soup.select("div.text:last-child > p:nth-child(-n+5):nth-child(n+1)") # 第1到5个

pinyin = ""

english = ""

alias = []

for item in sub_info:

text = item.text.strip()

if len(re.findall(r"【中药名】.+", text)) > 0:

pinyin = "".join(re.findall(r"[a-zA-Z]+", text)) # '【中药名】半夏 banxia'

elif len(re.findall(r"【英文名】.+", text)) > 0 or len(re.findall(r"【外语名】.+", text)) > 0:

english = " ".join(re.findall(r"[a-zA-Z]+", text)) # '【英文名】Pinelliae Rhizoma。' 外语名

elif len(re.findall(r"【别名】.+", text)) > 0:

alias = "".join(re.findall(r"】.+", text))[1:]

if alias[-1] == "。":

alias = alias[:-1]

alias = alias.split("、") # '【别名】三叶半夏、三步跳、麻芋子、水芋、地巴豆。'

medicine_alias_englishname[medicine] = {

"pinyin": pinyin,

"english": english,

"alias": alias,

}

else:

print(f"page {p} sub {cnt}: code {res.status_code}!")

cnt += 1

if p % 10 == 0: # 每 10 页 200 味药保存一次

json_str = json.dumps(medicine_alias_englishname, indent=4, ensure_ascii=False)

with open("medicine_alias_englishname.json", 'w', encoding="utf-8") as json_file:

json_file.write(json_str)

print(f"page {p} crawled!")

else:

print(f"page {p} code {res.status_code}!")

def analysis():

# 构建并配置词云对象w

w = wordcloud.WordCloud(width=1920,

height=1080,

background_color='white',

font_path='msyh.ttc',

)

with open("medicine_alias_englishname.json", 'r', encoding="utf-8") as json_file:

load_dict = json.load(json_file)

medicines = list(load_dict.keys())

alias = []

for k in load_dict:

alias += load_dict[k]["alias"]

txt = " ".join(medicines + alias)

txtlist = jieba.lcut(txt)

string = " ".join(txtlist)

# 将string变量传入w的generate()方法,给词云输入文字

w.generate(string)

# 将词云图片导出到当前文件夹

w.to_file('medicine_cloud.png')

if __name__ == '__main__':

crawl()

analysis()

pass

1102

1102

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?