In this post, we implement ResUNet.

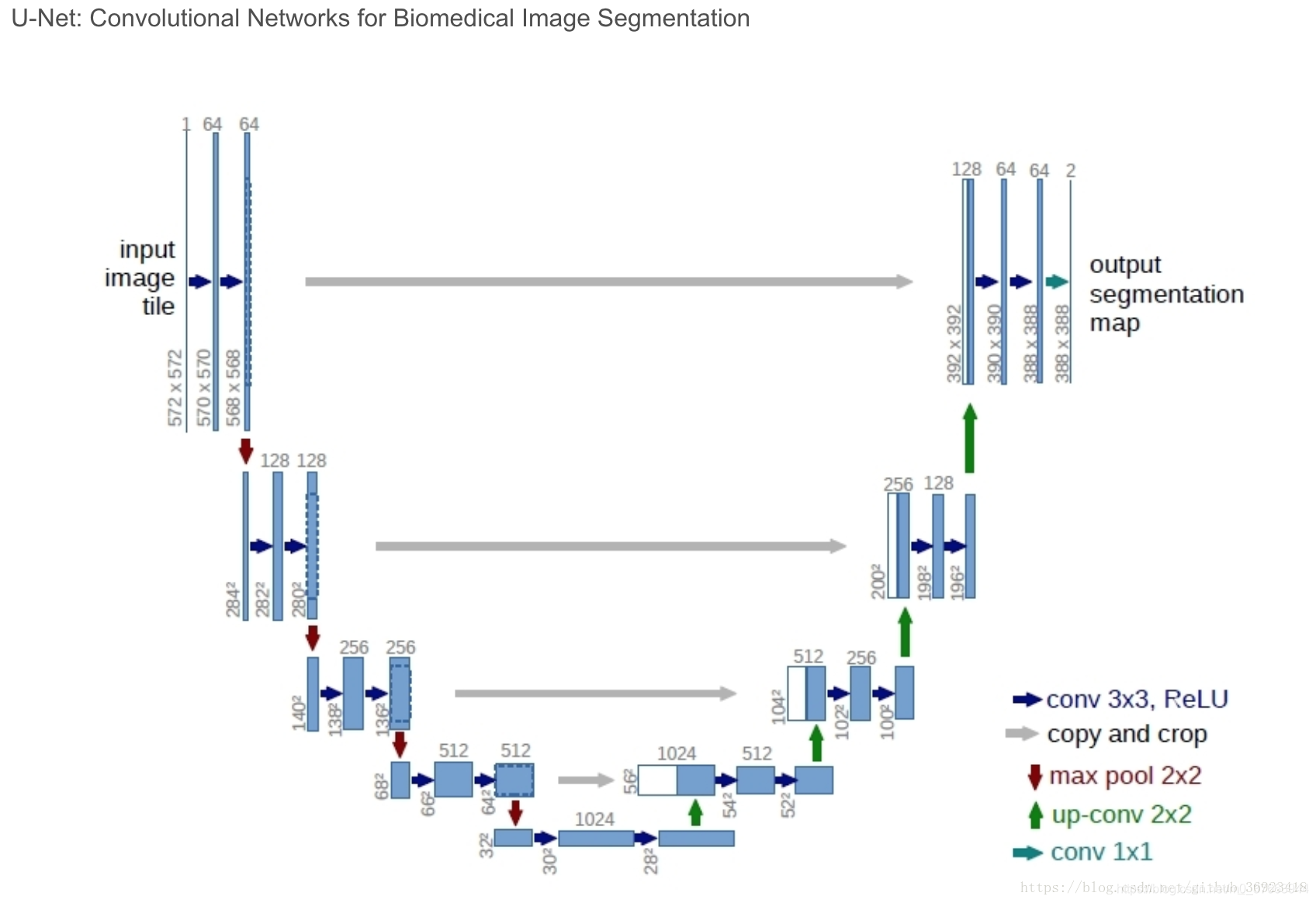

First, here is the U-Net diagram.

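Combining U-Net with ResNet gives a new network, ResUNet: the U-Net encoder-decoder layout is kept, and its convolution blocks are replaced by ResNet-style residual blocks.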

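    import tensorflow as tf
    from tensorflow.keras.layers import Add, Concatenate, Conv2D, Input, UpSampling2D


    def bn_act(x, act=True):
        """Batch normalization layer with an optional activation layer."""
        x = tf.keras.layers.BatchNormalization()(x)
        if act:
            x = tf.keras.layers.Activation('relu')(x)
        return x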
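To make concrete what `bn_act` computes, here is a minimal plain-Python sketch for a single channel in training mode. It is an illustration rather than the Keras implementation: the helper name `batch_norm_relu` is hypothetical, and it assumes the freshly initialized state of the layer (`gamma=1`, `beta=0`) together with the Keras default `epsilon=1e-3`. At inference time Keras uses moving averages instead of the batch statistics shown here.

```python
import math


def batch_norm_relu(values, epsilon=1e-3, gamma=1.0, beta=0.0, act=True):
    """Normalize one channel with its batch statistics, then optionally apply ReLU."""
    mean = sum(values) / len(values)
    # Biased (population) variance, as used by batch normalization during training.
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    normalized = [
        gamma * (v - mean) / math.sqrt(variance + epsilon) + beta for v in values
    ]
    if act:
        # ReLU: negative values are clipped to zero.
        return [max(0.0, v) for v in normalized]
    return normalized


channel = [-2.0, 0.0, 1.0, 5.0]
print(batch_norm_relu(channel))             # normalized, then ReLU
print(batch_norm_relu(channel, act=False))  # normalized only (used on shortcuts)
```

With `act=False` the values stay centered around zero, which is the variant the shortcut branches use before the `Add` layer.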

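In the diagrams below, boxes represent data, circles represent operations, and dashed lines represent conditional branches.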

Diagram of `bn_act`

    def conv_block(x, filters, kernel_size=3, padding='same', strides=1):
        """Convolution preceded by batch normalization and ReLU (pre-activation)."""
        conv = bn_act(x)
        conv = Conv2D(filters, kernel_size, padding=padding, strides=strides)(conv)
        return conv

Diagram of `conv_block`

Diagram of `stem`

    def stem(x, filters, kernel_size=3, padding='same', strides=1):
        # Main path: plain convolution followed by a BN-ReLU-Conv block.
        conv = Conv2D(filters, kernel_size, padding=padding, strides=strides)(x)
        conv = conv_block(conv, filters, kernel_size, padding, strides)
        # Shortcut: 1x1 convolution so the channel count matches the main path.
        shortcut = Conv2D(filters, kernel_size=1, padding=padding, strides=strides)(x)
        shortcut = bn_act(shortcut, act=False)
        output = Add()([conv, shortcut])
        return output
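The layer sizes in `stem` can be checked by hand against the first rows of the model summary printed at the end of this post. The sketch below uses the standard parameter-count formulas for a 2D convolution with bias and for batch normalization; the helper names `conv2d_params` and `batchnorm_params` are hypothetical, and the 1-channel input and 16 filters are the values used later in `ResUNet`.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Weights (k * k * in * out) plus one bias per filter."""
    return kernel_size * kernel_size * in_channels * filters + filters


def batchnorm_params(channels):
    """gamma, beta, moving mean and moving variance: four values per channel."""
    return 4 * channels


stem_counts = {
    "first 3x3 conv (1 -> 16)": conv2d_params(3, 1, 16),
    "batch norm (16 channels)": batchnorm_params(16),
    "second 3x3 conv (16 -> 16)": conv2d_params(3, 16, 16),
    "1x1 shortcut conv (1 -> 16)": conv2d_params(1, 1, 16),
}
for name, count in stem_counts.items():
    print(f"{name}: {count}")
```

These are the values the summary reports for `conv2d_167` (160), `batch_normalization_75` (64), `conv2d_168` (2320) and `conv2d_169` (32).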

Diagram of `residual_block`

    def residual_block(x, filters, kernel_size=3, padding='same', strides=1):
        # Main path: two BN-ReLU-Conv blocks; only the first one may downsample.
        res = conv_block(x, filters, kernel_size, padding, strides)
        res = conv_block(res, filters, kernel_size, padding, 1)
        # Shortcut: convolution with the same stride so both branches have equal shapes.
        shortcut = Conv2D(filters, kernel_size, padding=padding, strides=strides)(x)
        shortcut = bn_act(shortcut, act=False)
        output = Add()([shortcut, res])
        return output

    def upsample_concat_block(x, xskip):
        # Double the height and width, then append the encoder feature map along the channel axis.
        u = UpSampling2D((2, 2))(x)
        c = Concatenate()([u, xskip])
        return c
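The effect of `upsample_concat_block` on tensor shapes can be sketched without TensorFlow. The helper `upsample_concat_shape` below is hypothetical; it works on `(height, width, channels)` tuples and assumes channels-last data, which is what the model summary later in this post shows.

```python
def upsample_concat_shape(x_shape, skip_shape, factor=2):
    """Shape after upsampling x by `factor` and concatenating skip on the channel axis."""
    height, width, channels = x_shape
    upsampled = (height * factor, width * factor, channels)
    # Concatenate needs identical height and width on both inputs.
    if upsampled[:2] != skip_shape[:2]:
        raise ValueError(
            f"spatial sizes differ: {upsampled[:2]} vs {skip_shape[:2]}"
        )
    return (upsampled[0], upsampled[1], channels + skip_shape[2])


# Bridge output (16, 50, 256) joined with the encoder feature map (32, 100, 128).
print(upsample_concat_shape((16, 50, 256), (32, 100, 128)))
```

The channel counts simply add up, which is why the first decoder block sees 256 + 128 = 384 input channels.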

Diagram of `ResUNet`

    def ResUNet(img_h, img_w):
        f = [16, 32, 64, 128, 256]  # number of filters at each level
        inputs = Input((img_h, img_w, 1))  # single-channel input image

        # Encoder
        e0 = inputs
        e1 = stem(e0, f[0])
        e2 = residual_block(e1, f[1], strides=2)
        e3 = residual_block(e2, f[2], strides=2)
        e4 = residual_block(e3, f[3], strides=2)
        e5 = residual_block(e4, f[4], strides=2)

        # Bridge
        b0 = conv_block(e5, f[4], strides=1)
        b1 = conv_block(b0, f[4], strides=1)

        # Decoder
        u1 = upsample_concat_block(b1, e4)
        d1 = residual_block(u1, f[4])
        u2 = upsample_concat_block(d1, e3)
        d2 = residual_block(u2, f[3])
        u3 = upsample_concat_block(d2, e2)
        d3 = residual_block(u3, f[2])
        u4 = upsample_concat_block(d3, e1)
        d4 = residual_block(u4, f[1])

        # 1x1 convolution to 4 output channels, each with a sigmoid activation
        outputs = tf.keras.layers.Conv2D(4, (1, 1), padding="same", activation="sigmoid")(d4)
        model = tf.keras.models.Model(inputs, outputs)
        return model

I won't draw a diagram for this one.
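In place of a diagram, the tensor shapes can be traced by hand. The following is a rough sketch, not part of the original code: `trace_resunet_shapes` is a hypothetical helper that mirrors the encoder, bridge and decoder of `ResUNet` using only shape arithmetic, assuming `'same'` padding (so a stride-2 convolution yields `ceil(size / 2)`) and channels-last tensors.

```python
import math


def trace_resunet_shapes(img_h, img_w, filters=(16, 32, 64, 128, 256), out_channels=4):
    """Return {tensor name: (height, width, channels)} for the ResUNet graph."""
    shapes = {}
    h, w, c = img_h, img_w, filters[0]
    shapes["e1"] = (h, w, c)  # the stem keeps the resolution (strides=1)
    skips = [shapes["e1"]]

    # Encoder: every residual block uses strides=2 with 'same' padding.
    for level, c in enumerate(filters[1:], start=2):
        h, w = math.ceil(h / 2), math.ceil(w / 2)
        shapes[f"e{level}"] = (h, w, c)
        skips.append(shapes[f"e{level}"])

    shapes["b1"] = (h, w, c)  # bridge: two stride-1 conv blocks

    # Decoder: upsample by 2, concatenate the matching skip, then a residual block.
    decoder_skips = reversed(skips[:-1])
    decoder_filters = reversed(filters[1:])
    for step, (skip, out_c) in enumerate(zip(decoder_skips, decoder_filters), start=1):
        h, w = 2 * h, 2 * w
        if (h, w) != skip[:2]:
            raise ValueError(f"u{step}: upsampled size {(h, w)} != skip size {skip[:2]}")
        shapes[f"u{step}"] = (h, w, c + skip[2])
        c = out_c
        shapes[f"d{step}"] = (h, w, c)

    shapes["output"] = (h, w, out_channels)
    return shapes


for name, shape in trace_resunet_shapes(256, 800).items():
    print(name, shape)
```

One consequence of this arithmetic is that the height and width should be divisible by 16 (four stride-2 stages); otherwise the upsampled tensors no longer match the skip connections and the sketch raises a `ValueError`.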

Here is the model summary for a 256×800 single-channel input.

| Layer (type) | Output Shape | Param # | Connected to |
| --- | --- | --- | --- |
| input_1 (InputLayer) | [(None, 256, 800, 1)] | 0 | |
| conv2d_167 (Conv2D) | (None, 256, 800, 16) | 160 | input_1[0][0] |
| batch_normalization_75 (BatchNormalization) | (None, 256, 800, 16) | 64 | conv2d_167[0][0] |
| activation_74 (Activation) | (None, 256, 800, 16) | 0 | batch_normalization_75[0][0] |
| conv2d_169 (Conv2D) | (None, 256, 800, 16) | 32 | input_1[0][0] |
| conv2d_168 (Conv2D) | (None, 256, 800, 16) | 2320 | activation_74[0][0] |
| batch_normalization_76 (BatchNormalization) | (None, 256, 800, 16) | 64 | conv2d_169[0][0] |
| add (Add) | (None, 256, 800, 16) | 0 | conv2d_168[0][0], batch_normalization_76[0][0] |
| batch_normalization_77 (BatchNormalization) | (None, 256, 800, 16) | 64 | add[0][0] |
| activation_75 (Activation) | (None, 256, 800, 16) | 0 | batch_normalization_77[0][0] |
| conv2d_170 (Conv2D) | (None, 128, 400, 32) | 4640 | activation_75[0][0] |
| batch_normalization_78 (BatchNormalization) | (None, 128, 400, 32) | 128 | conv2d_170[0][0] |
| conv2d_172 (Conv2D) | (None, 128, 400, 32) | 4640 | add[0][0] |
| activation_76 (Activation) | (None, 128, 400, 32) | 0 | batch_normalization_78[0][0] |
| batch_normalization_79 (BatchNormalization) | (None, 128, 400, 32) | 128 | conv2d_172[0][0] |
| conv2d_171 (Conv2D) | (None, 128, 400, 32) | 9248 | activation_76[0][0] |
| add_1 (Add) | (None, 128, 400, 32) | 0 | batch_normalization_79[0][0], conv2d_171[0][0] |
| batch_normalization_80 (BatchNormalization) | (None, 128, 400, 32) | 128 | add_1[0][0] |
| activation_77 (Activation) | (None, 128, 400, 32) | 0 | batch_normalization_80[0][0] |
| conv2d_173 (Conv2D) | (None, 64, 200, 64) | 18496 | activation_77[0][0] |
| batch_normalization_81 (BatchNormalization) | (None, 64, 200, 64) | 256 | conv2d_173[0][0] |
| conv2d_175 (Conv2D) | (None, 64, 200, 64) | 18496 | add_1[0][0] |
| activation_78 (Activation) | (None, 64, 200, 64) | 0 | batch_normalization_81[0][0] |
| batch_normalization_82 (BatchNormalization) | (None, 64, 200, 64) | 256 | conv2d_175[0][0] |
| conv2d_174 (Conv2D) | (None, 64, 200, 64) | 36928 | activation_78[0][0] |
| add_2 (Add) | (None, 64, 200, 64) | 0 | batch_normalization_82[0][0], conv2d_174[0][0] |
| batch_normalization_83 (BatchNormalization) | (None, 64, 200, 64) | 256 | add_2[0][0] |
| activation_79 (Activation) | (None, 64, 200, 64) | 0 | batch_normalization_83[0][0] |
| conv2d_176 (Conv2D) | (None, 32, 100, 128) | 73856 | activation_79[0][0] |
| batch_normalization_84 (BatchNormalization) | (None, 32, 100, 128) | 512 | conv2d_176[0][0] |
| conv2d_178 (Conv2D) | (None, 32, 100, 128) | 73856 | add_2[0][0] |
| activation_80 (Activation) | (None, 32, 100, 128) | 0 | batch_normalization_84[0][0] |
| batch_normalization_85 (BatchNormalization) | (None, 32, 100, 128) | 512 | conv2d_178[0][0] |
| conv2d_177 (Conv2D) | (None, 32, 100, 128) | 147584 | activation_80[0][0] |
| add_3 (Add) | (None, 32, 100, 128) | 0 | batch_normalization_85[0][0], conv2d_177[0][0] |
| batch_normalization_86 (BatchNormalization) | (None, 32, 100, 128) | 512 | add_3[0][0] |
| activation_81 (Activation) | (None, 32, 100, 128) | 0 | batch_normalization_86[0][0] |
| conv2d_179 (Conv2D) | (None, 16, 50, 256) | 295168 | activation_81[0][0] |
| batch_normalization_87 (BatchNormalization) | (None, 16, 50, 256) | 1024 | conv2d_179[0][0] |
| conv2d_181 (Conv2D) | (None, 16, 50, 256) | 295168 | add_3[0][0] |
| activation_82 (Activation) | (None, 16, 50, 256) | 0 | batch_normalization_87[0][0] |
| batch_normalization_88 (BatchNormalization) | (None, 16, 50, 256) | 1024 | conv2d_181[0][0] |
| conv2d_180 (Conv2D) | (None, 16, 50, 256) | 590080 | activation_82[0][0] |
| add_4 (Add) | (None, 16, 50, 256) | 0 | batch_normalization_88[0][0], conv2d_180[0][0] |
| batch_normalization_89 (BatchNormalization) | (None, 16, 50, 256) | 1024 | add_4[0][0] |
| activation_83 (Activation) | (None, 16, 50, 256) | 0 | batch_normalization_89[0][0] |
| conv2d_182 (Conv2D) | (None, 16, 50, 256) | 590080 | activation_83[0][0] |
| batch_normalization_90 (BatchNormalization) | (None, 16, 50, 256) | 1024 | conv2d_182[0][0] |
| activation_84 (Activation) | (None, 16, 50, 256) | 0 | batch_normalization_90[0][0] |
| conv2d_183 (Conv2D) | (None, 16, 50, 256) | 590080 | activation_84[0][0] |
| up_sampling2d (UpSampling2D) | (None, 32, 100, 256) | 0 | conv2d_183[0][0] |
| concatenate_36 (Concatenate) | (None, 32, 100, 384) | 0 | up_sampling2d[0][0], add_3[0][0] |
| batch_normalization_91 (BatchNormalization) | (None, 32, 100, 384) | 1536 | concatenate_36[0][0] |
| activation_85 (Activation) | (None, 32, 100, 384) | 0 | batch_normalization_91[0][0] |
| conv2d_184 (Conv2D) | (None, 32, 100, 256) | 884992 | activation_85[0][0] |
| batch_normalization_92 (BatchNormalization) | (None, 32, 100, 256) | 1024 | conv2d_184[0][0] |
| conv2d_186 (Conv2D) | (None, 32, 100, 256) | 884992 | concatenate_36[0][0] |
| activation_86 (Activation) | (None, 32, 100, 256) | 0 | batch_normalization_92[0][0] |
| batch_normalization_93 (BatchNormalization) | (None, 32, 100, 256) | 1024 | conv2d_186[0][0] |
| conv2d_185 (Conv2D) | (None, 32, 100, 256) | 590080 | activation_86[0][0] |
| add_5 (Add) | (None, 32, 100, 256) | 0 | batch_normalization_93[0][0], conv2d_185[0][0] |
| up_sampling2d_1 (UpSampling2D) | (None, 64, 200, 256) | 0 | add_5[0][0] |
| concatenate_37 (Concatenate) | (None, 64, 200, 320) | 0 | up_sampling2d_1[0][0], add_2[0][0] |
| batch_normalization_94 (BatchNormalization) | (None, 64, 200, 320) | 1280 | concatenate_37[0][0] |
| activation_87 (Activation) | (None, 64, 200, 320) | 0 | batch_normalization_94[0][0] |
| conv2d_187 (Conv2D) | (None, 64, 200, 128) | 368768 | activation_87[0][0] |
| batch_normalization_95 (BatchNormalization) | (None, 64, 200, 128) | 512 | conv2d_187[0][0] |
| conv2d_189 (Conv2D) | (None, 64, 200, 128) | 368768 | concatenate_37[0][0] |
| activation_88 (Activation) | (None, 64, 200, 128) | 0 | batch_normalization_95[0][0] |
| batch_normalization_96 (BatchNormalization) | (None, 64, 200, 128) | 512 | conv2d_189[0][0] |
| conv2d_188 (Conv2D) | (None, 64, 200, 128) | 147584 | activation_88[0][0] |
| add_6 (Add) | (None, 64, 200, 128) | 0 | batch_normalization_96[0][0], conv2d_188[0][0] |
| up_sampling2d_2 (UpSampling2D) | (None, 128, 400, 128) | 0 | add_6[0][0] |
| concatenate_38 (Concatenate) | (None, 128, 400, 160) | 0 | up_sampling2d_2[0][0], add_1[0][0] |
| batch_normalization_97 (BatchNormalization) | (None, 128, 400, 160) | 640 | concatenate_38[0][0] |
| activation_89 (Activation) | (None, 128, 400, 160) | 0 | batch_normalization_97[0][0] |
| conv2d_190 (Conv2D) | (None, 128, 400, 64) | 92224 | activation_89[0][0] |
| batch_normalization_98 (BatchNormalization) | (None, 128, 400, 64) | 256 | conv2d_190[0][0] |
| conv2d_192 (Conv2D) | (None, 128, 400, 64) | 92224 | concatenate_38[0][0] |
| activation_90 (Activation) | (None, 128, 400, 64) | 0 | batch_normalization_98[0][0] |
| batch_normalization_99 (BatchNormalization) | (None, 128, 400, 64) | 256 | conv2d_192[0][0] |
| conv2d_191 (Conv2D) | (None, 128, 400, 64) | 36928 | activation_90[0][0] |
| add_7 (Add) | (None, 128, 400, 64) | 0 | batch_normalization_99[0][0], conv2d_191[0][0] |
| up_sampling2d_3 (UpSampling2D) | (None, 256, 800, 64) | 0 | add_7[0][0] |
| concatenate_39 (Concatenate) | (None, 256, 800, 80) | 0 | up_sampling2d_3[0][0], add[0][0] |
| batch_normalization_100 (BatchNormalization) | (None, 256, 800, 80) | 320 | concatenate_39[0][0] |
| activation_91 (Activation) | (None, 256, 800, 80) | 0 | batch_normalization_100[0][0] |
| conv2d_193 (Conv2D) | (None, 256, 800, 32) | 23072 | activation_91[0][0] |
| batch_normalization_101 (BatchNormalization) | (None, 256, 800, 32) | 128 | conv2d_193[0][0] |
| conv2d_195 (Conv2D) | (None, 256, 800, 32) | 23072 | concatenate_39[0][0] |
| activation_92 (Activation) | (None, 256, 800, 32) | 0 | batch_normalization_101[0][0] |
| batch_normalization_102 (BatchNormalization) | (None, 256, 800, 32) | 128 | conv2d_195[0][0] |
| conv2d_194 (Conv2D) | (None, 256, 800, 32) | 9248 | activation_92[0][0] |
| add_8 (Add) | (None, 256, 800, 32) | 0 | batch_normalization_102[0][0], conv2d_194[0][0] |
| conv2d_196 (Conv2D) | (None, 256, 800, 4) | 132 | add_8[0][0] |

Total params: 6,287,508

Trainable params: 6,280,212

Non-trainable params: 7,296
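The non-trainable parameter count in the summary can be reproduced from the architecture alone. The sketch below rests on one fact about Keras batch normalization: each normalized channel carries two trainable values (gamma, beta) and two non-trainable ones (moving mean, moving variance). The helper `residual_bn_channels` and the bookkeeping variables are hypothetical names; the filter list is the one used in `ResUNet`.

```python
filters = [16, 32, 64, 128, 256]


def residual_bn_channels(in_channels, out_channels):
    """Channels normalized inside one residual_block.

    BN before the first conv sees in_channels; BN before the second conv
    and BN on the shortcut each see out_channels.
    """
    return in_channels + 2 * out_channels


# Stem: one BN inside conv_block plus one BN on the shortcut, both on filters[0].
bn_channels = 2 * filters[0]
current = filters[0]
skip_channels = [current]

# Encoder: four residual blocks.
for out_c in filters[1:]:
    bn_channels += residual_bn_channels(current, out_c)
    current = out_c
    skip_channels.append(current)

# Bridge: two conv_blocks, each normalizing its 256-channel input.
bn_channels += 2 * current

# Decoder: the input of each residual block is the concatenation with a skip tensor.
for skip_c, out_c in zip(reversed(skip_channels[:-1]), reversed(filters[1:])):
    bn_channels += residual_bn_channels(current + skip_c, out_c)
    current = out_c

non_trainable = 2 * bn_channels  # moving mean + moving variance per channel
total = 6_287_508                # "Total params" as reported in the summary
print("non-trainable:", non_trainable)
print("trainable:", total - non_trainable)
```

Both printed values agree with the "Non-trainable params" and "Trainable params" lines of the summary.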
