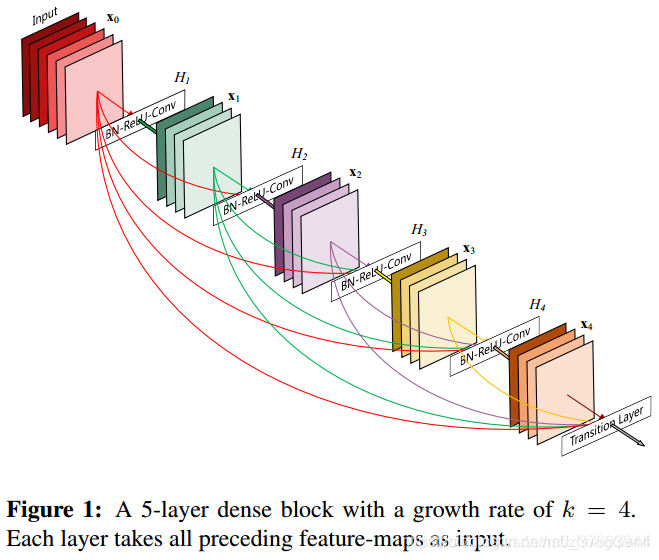

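*Densely Connected Convolutional Networks* introduced DenseNet, which connects each layer to every other layer in a feed-forward fashion. The core building block of the network is the dense block shown below. Each dense block contains several dense layers (H1 to H4 in the figure), and every layer is connected to all the layers that follow it: the input of each layer is the channel-wise concatenation of the feature maps produced by all preceding layers in the block.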
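To make the connectivity pattern concrete, the minimal pure-Python sketch below lists, for a block whose input is x0 and whose layers are H1 to H4 as in the figure, which feature maps each layer receives. It also counts the direct connections, which the paper gives as L(L+1)/2 for L layers. The function names `dense_block_inputs` and `num_connections` are illustrative only and do not come from any library.

```python
def dense_block_inputs(num_layers):
    """For a dense block with input x0 and layers H1..H_L, list the feature maps
    each layer H_l receives: x0, x1, ..., x_{l-1} (all preceding outputs)."""
    return {f"H{l}": [f"x{j}" for j in range(l)] for l in range(1, num_layers + 1)}


def num_connections(num_layers):
    """Total direct connections: H_l has l inputs, so 1 + 2 + ... + L = L(L+1)/2."""
    return sum(len(srcs) for srcs in dense_block_inputs(num_layers).values())


if __name__ == "__main__":
    # Four layers, matching H1..H4 in the figure
    for layer, sources in dense_block_inputs(4).items():
        print(layer, "<-", " + ".join(sources))
    print("connections:", num_connections(4))
```

Here "+" stands for concatenation along the channel axis, not element-wise addition; that is the key difference from ResNet's skip connections.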

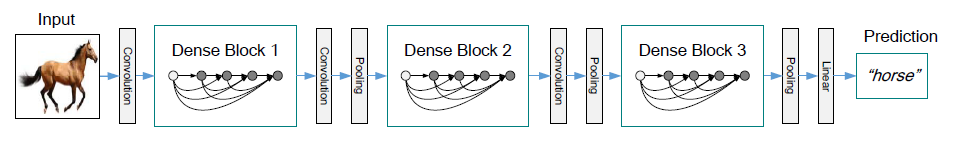

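It may not be necessary to connect every dense layer in a block directly to every other one; dropping some of these connections would shrink the network. Conversely, non-adjacent dense blocks could perhaps be connected through a simple downsampling operation, which might further improve how well the network exploits features at different scales.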
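The second idea above is the author's suggestion and is not part of the original DenseNet. One way to picture it is sketched below in pure Python, assuming that "simple downsampling" means stride-k average pooling: each channel of an earlier block's output is pooled until its height and width match the current block's input, and the pooled channels are then concatenated along the channel axis. Feature maps are plain nested lists (a list of channels, each an H x W list), and `avg_pool` and `cross_block_concat` are hypothetical helpers written for this illustration.

```python
def avg_pool(fmap, k):
    """k x k average pooling with stride k on one channel given as a 2-D list."""
    h, w = len(fmap), len(fmap[0])
    return [[sum(fmap[i + di][j + dj] for di in range(k) for dj in range(k)) / (k * k)
             for j in range(0, w - k + 1, k)]
            for i in range(0, h - k + 1, k)]


def cross_block_concat(current, earlier, k):
    """Hypothetical cross-block link: downsample every channel of an earlier block's
    output by k so its H x W matches `current`, then concatenate along channels."""
    pooled = [avg_pool(ch, k) for ch in earlier]
    h, w = len(current[0]), len(current[0][0])
    if any(len(p) != h or len(p[0]) != w for p in pooled):
        raise ValueError("spatial sizes do not match after pooling")
    return current + pooled  # current channels first, then the pooled earlier ones


if __name__ == "__main__":
    fmap = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    print(avg_pool(fmap, 2))  # [[3.5, 5.5], [11.5, 13.5]]
    current = [[[0.0] * 2 for _ in range(2)] for _ in range(3)]  # 3 channels of 2 x 2
    merged = cross_block_concat(current, [fmap, fmap], 2)
    print(len(merged), "channels of", len(merged[0]), "x", len(merged[0][0]))
```

In a Keras model the same idea would correspond to an `AveragePooling2D` on the earlier block's output followed by `Concatenate`, but whether it actually helps would have to be checked experimentally.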

通过简单的下采样操作进行连接,进一步提升网络对不同尺度的特征的利用效率。

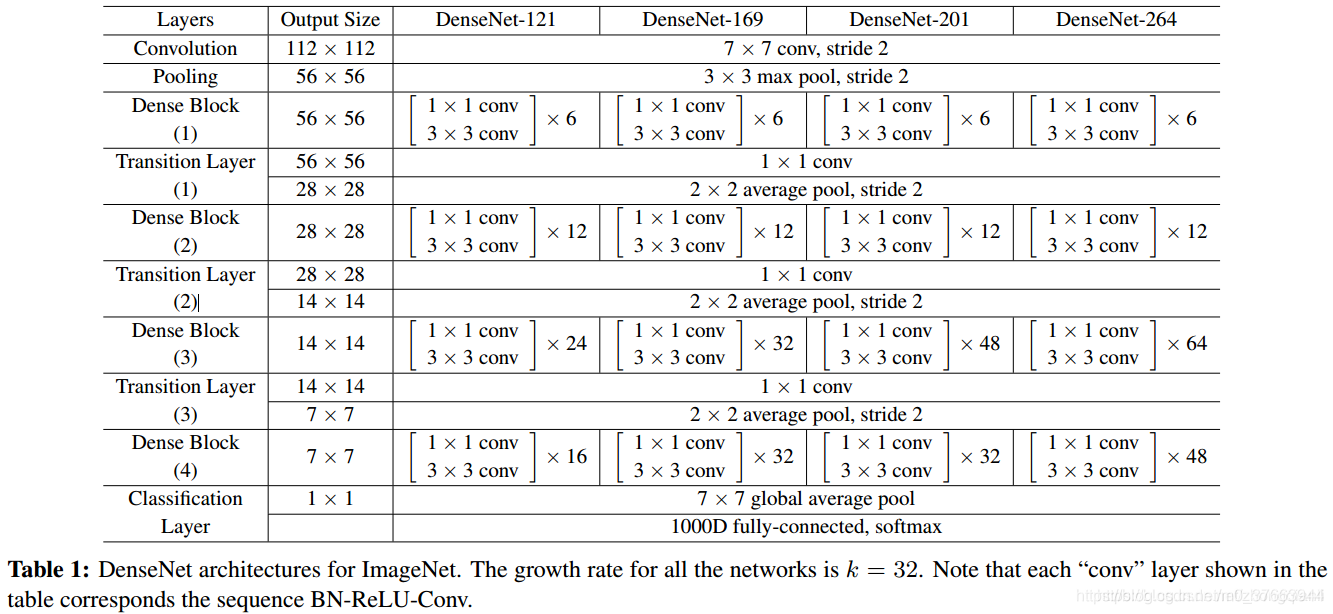

The basic architecture is as follows. Below, I implement it in TensorFlow 2:

```python
# -*- coding: utf-8 -*-
"""
Created on Fri Feb 28 15:12:26 2020

@author: asus
"""
from tensorflow.keras import Input, Model
from tensorflow.keras.layers import (AveragePooling2D, BatchNormalization,
                                     Concatenate, Conv2D, Dense, Dropout,
                                     GlobalAveragePooling2D, LeakyReLU,
                                     MaxPooling2D)

growth_rate = 12                 # k: number of feature maps each dense layer adds
inpt = Input(shape=(32, 32, 3))  # CIFAR-sized RGB input


def DenseLayer(x, nb_filter, bn_size=4, alpha=0.2, drop_rate=0.2):
    """BN-LeakyReLU-Conv(1x1) bottleneck, then BN-LeakyReLU-Conv(3x3).
    (The paper uses plain ReLU; LeakyReLU with slope `alpha` is this post's choice.)"""
    # Bottleneck: 1x1 conv to bn_size * nb_filter channels to cut the cost of the 3x3 conv
    x = BatchNormalization(axis=3)(x)
    x = LeakyReLU(alpha)(x)
    x = Conv2D(bn_size * nb_filter, (1, 1), strides=(1, 1), padding='same')(x)
    # Composite function: 3x3 conv producing nb_filter (= growth rate) new feature maps
    x = BatchNormalization(axis=3)(x)
    x = LeakyReLU(alpha)(x)
    x = Conv2D(nb_filter, (3, 3), strides=(1, 1), padding='same')(x)
    if drop_rate:
        x = Dropout(drop_rate)(x)
    return x


def DenseBlock(x, nb_layers, growth_rate, drop_rate=0.2):
    """Stack nb_layers dense layers; each sees the concatenation of all earlier outputs."""
    for _ in range(nb_layers):
        conv = DenseLayer(x, nb_filter=growth_rate, drop_rate=drop_rate)
        x = Concatenate()([x, conv])  # channel-wise concatenation
    return x


def TransitionLayer(x, compression=0.5, alpha=0.2, is_max=0):
    """BN-LeakyReLU-Conv(1x1) with channel compression, then 2x2 pooling (halves H and W)."""
    nb_filter = int(x.shape[-1] * compression)
    x = BatchNormalization(axis=3)(x)
    x = LeakyReLU(alpha)(x)
    x = Conv2D(nb_filter, (1, 1), strides=(1, 1), padding='same')(x)
    if is_max != 0:
        x = MaxPooling2D(pool_size=(2, 2), strides=2)(x)
    else:
        x = AveragePooling2D(pool_size=(2, 2), strides=2)(x)
    return x


# Stem: 3x3 conv with 2k filters
x = Conv2D(growth_rate * 2, (3, 3), strides=1, padding='same')(inpt)
x = BatchNormalization(axis=3)(x)
x = LeakyReLU(0.1)(x)

# Three dense blocks of 12 layers each, separated by transition layers
x = DenseBlock(x, 12, growth_rate, drop_rate=0.2)
x = TransitionLayer(x)
x = DenseBlock(x, 12, growth_rate, drop_rate=0.2)
x = TransitionLayer(x)
x = DenseBlock(x, 12, growth_rate, drop_rate=0.2)

# Classifier head
x = BatchNormalization(axis=3)(x)
x = GlobalAveragePooling2D()(x)
x = Dense(10, activation='softmax')(x)

model = Model(inpt, x)
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
```
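As a quick sanity check on the architecture above, the hypothetical helper below only does arithmetic and never builds a model. It reproduces the channel and spatial bookkeeping implied by `DenseBlock` and `TransitionLayer` with the same hyperparameters: each dense block adds `nb_layers * growth_rate` channels, and each transition layer keeps `int(channels * compression)` channels and halves the height and width.

```python
def trace_channels(stem_channels=24, growth_rate=12, layers_per_block=(12, 12, 12),
                   compression=0.5, size=32):
    """Return (stage, channels, spatial size) after each stage of the model above."""
    c = stem_channels
    trace = [("stem", c, size)]
    for i, n in enumerate(layers_per_block, start=1):
        c += n * growth_rate                 # each dense layer concatenates k new maps
        trace.append((f"dense block {i}", c, size))
        if i < len(layers_per_block):        # no transition after the last block
            c = int(c * compression)         # 1x1 conv compresses channels
            size //= 2                       # 2x2 pooling with stride 2
            trace.append((f"transition {i}", c, size))
    return trace


if __name__ == "__main__":
    for name, channels, s in trace_channels():
        print(f"{name:<16}{channels:>4} channels  {s}x{s}")
```

With the defaults, the last dense block ends with 258 feature maps at 8x8, which is the width that `GlobalAveragePooling2D` passes to the 10-way `Dense` layer; the output shapes printed by `model.summary()` should agree with this trace.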

References:

https://blog.csdn.net/zhongqianli/article/details/86651797

https://blog.csdn.net/shi2xian2wei2/article/details/84425777
