I. Environment

Windows 10, Python 3.9.18, langchain==0.1.9

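II. Downloading Ollama

III. Installing Ollama and moving it to another drive

1. Installing Ollama

Just run the downloaded exe file. Once installation finishes, press Win+R, open cmd, and type ollama. If it prints its usage help with the list of available commands, the installation succeeded.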

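2. Downloading a model

See the Ollama library for the full list of models. Here, running ollama run qwen:7b downloaded Alibaba's Qwen 7B model (4.2 GB).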

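3. Default locations

By default, both Ollama and the models it downloads live on the C: drive, where they take up a lot of space.

Ollama's default install location is C:\Users\XX\AppData\Local\Programs\Ollama

Downloaded models are stored by default in C:\Users\XX\.ollama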

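4. Migration steps

(1) Move the folder C:\Users\XX\AppData\Local\Programs\Ollama to another drive (for example, D:\Ollama).

(2) Edit the user environment variables: in PATH, change the entry C:\Users\XX\AppData\Local\Programs\Ollama to the location chosen in step (1).

(3) Under the system variables, create a new variable named OLLAMA_MODELS. Choose its value according to the free space on the other drive, for example a folder under the Ollama directory (D:\Ollama\models).

(4) Move the following 2 files to the new directory.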
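
One way to confirm that step (3) took effect is to resolve the model directory from a freshly opened process. The sketch below is only an illustration: `resolve_models_dir` and `describe_models_dir` are hypothetical helpers, not part of Ollama, and the fallback assumes that when OLLAMA_MODELS is unset the models sit in a `models` folder under `.ollama` in the user's home directory.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def resolve_models_dir(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return the directory Ollama is expected to use for models.

    Assumption: OLLAMA_MODELS wins when set; otherwise fall back to
    <home>/.ollama/models.
    """
    env = os.environ if env is None else env
    custom = env.get("OLLAMA_MODELS", "").strip()
    if custom:
        return Path(custom)
    return Path.home() / ".ollama" / "models"


def describe_models_dir(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the resolved path plus whether it currently exists on disk."""
    path = resolve_models_dir(env)
    status = "exists" if path.is_dir() else "missing"
    return f"{path} ({status})"
```

Calling `print(describe_models_dir())` from a new cmd window should show the D: location. Environment variable changes are only visible to processes started after the change, so Ollama itself also has to be restarted.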

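IV. Using Ollama on its own

1. Print the version: ollama -v

2. List the downloaded models: ollama list

3. Start a model: ollama run qwen:7b (the model is downloaded first if it is not present)

4. Exit the session: Ctrl+D

5. Quit Ollama: choose Quit from its icon in the taskbar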
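
The same commands can be scripted from Python, which is handy for checking the installation before starting a longer job. This is a minimal sketch: `run_ollama` is a hypothetical helper name, and it assumes the command's useful output goes to standard output.

```python
import shutil
import subprocess
from typing import List, Optional


def run_ollama(args: List[str], exe: str = "ollama") -> Optional[str]:
    """Run `exe` with `args` and return its stdout.

    Returns None when `exe` cannot be found on PATH, which usually means
    the PATH entry from the migration step is wrong or missing.
    """
    path = shutil.which(exe)
    if path is None:
        return None
    result = subprocess.run(
        [path, *args], capture_output=True, text=True, check=False
    )
    return result.stdout.strip()
```

For example, `run_ollama(["-v"])` corresponds to item 1 and `run_ollama(["list"])` to item 2. Interactive commands such as ollama run are better left to the terminal.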

V. Building an application with Ollama + LangChain

1. Starting the Ollama service

Run ollama serve in cmd. By default the server listens on port 11434.
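
Before wiring up LangChain it helps to confirm that the server really is reachable. The sketch below uses only the standard library; `ollama_is_up` is a hypothetical helper, and it treats any HTTP 200 reply at the base URL as "up" (the Ollama server answers a plain GET on its root URL with a short status message).

```python
import urllib.error
import urllib.request


def ollama_is_up(base_url: str = "http://localhost:11434",
                 timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False
```

If `ollama_is_up()` returns False, check that ollama serve is still running and that nothing else has taken port 11434.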

2. The LangChain wrapper

LangChain's Ollama wrapper is defined in miniconda3\envs\py39\Lib\site-packages\langchain_community\llms\ollama.py. Its configurable fields are:

    class _OllamaCommon(BaseLanguageModel):
        base_url: str = "http://localhost:11434"
        """Base url the model is hosted under."""

        model: str = "llama2"
        """Model name to use."""

        mirostat: Optional[int] = None
        """Enable Mirostat sampling for controlling perplexity.
        (default: 0, 0 = disabled, 1 = Mirostat, 2 = Mirostat 2.0)"""

        mirostat_eta: Optional[float] = None
        """Influences how quickly the algorithm responds to feedback
        from the generated text. A lower learning rate will result in
        slower adjustments, while a higher learning rate will make
        the algorithm more responsive. (Default: 0.1)"""

        mirostat_tau: Optional[float] = None
        """Controls the balance between coherence and diversity
        of the output. A lower value will result in more focused and
        coherent text. (Default: 5.0)"""

        num_ctx: Optional[int] = None
        """Sets the size of the context window used to generate the
        next token. (Default: 2048) """

        num_gpu: Optional[int] = None
        """The number of GPUs to use. On macOS it defaults to 1 to
        enable metal support, 0 to disable."""

        num_thread: Optional[int] = None
        """Sets the number of threads to use during computation.
        By default, Ollama will detect this for optimal performance.
        It is recommended to set this value to the number of physical
        CPU cores your system has (as opposed to the logical number of cores)."""

        num_predict: Optional[int] = None
        """Maximum number of tokens to predict when generating text.
        (Default: 128, -1 = infinite generation, -2 = fill context)"""

        repeat_last_n: Optional[int] = None
        """Sets how far back for the model to look back to prevent
        repetition. (Default: 64, 0 = disabled, -1 = num_ctx)"""

        repeat_penalty: Optional[float] = None
        """Sets how strongly to penalize repetitions. A higher value (e.g., 1.5)
        will penalize repetitions more strongly, while a lower value (e.g., 0.9)
        will be more lenient. (Default: 1.1)"""

        temperature: Optional[float] = None
        """The temperature of the model. Increasing the temperature will
        make the model answer more creatively. (Default: 0.8)"""

        stop: Optional[List[str]] = None
        """Sets the stop tokens to use."""

        tfs_z: Optional[float] = None
        """Tail free sampling is used to reduce the impact of less probable
        tokens from the output. A higher value (e.g., 2.0) will reduce the
        impact more, while a value of 1.0 disables this setting. (default: 1)"""

        top_k: Optional[int] = None
        """Reduces the probability of generating nonsense. A higher value (e.g. 100)
        will give more diverse answers, while a lower value (e.g. 10)
        will be more conservative. (Default: 40)"""

        top_p: Optional[float] = None
        """Works together with top-k. A higher value (e.g., 0.95) will lead
        to more diverse text, while a lower value (e.g., 0.5) will
        generate more focused and conservative text. (Default: 0.9)"""

        system: Optional[str] = None
        """system prompt (overrides what is defined in the Modelfile)"""

        template: Optional[str] = None
        """full prompt or prompt template (overrides what is defined in the Modelfile)"""

        format: Optional[str] = None
        """Specify the format of the output (e.g., json)"""

        timeout: Optional[int] = None
        """Timeout for the request stream"""

        headers: Optional[dict] = None
        """Additional headers to pass to endpoint (e.g. Authorization, Referer).
        This is useful when Ollama is hosted on cloud services that require
        tokens for authentication.
        """

3. Examples

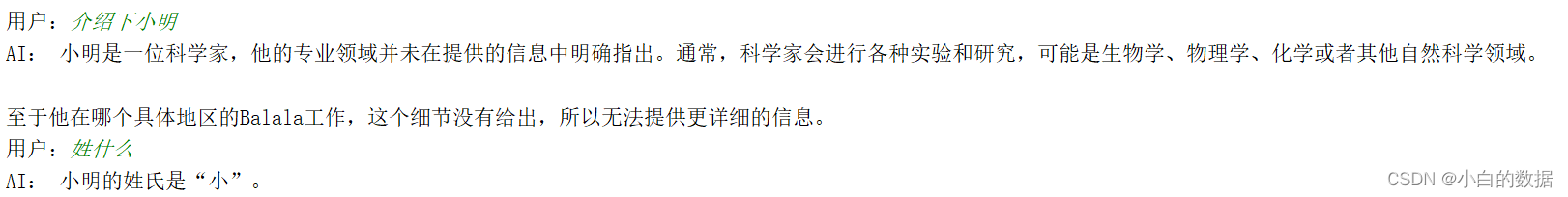
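
Before the two larger examples, here is a minimal sketch of calling the wrapper directly with a few of the fields from the class listing. `OLLAMA_KWARGS`, `merged_kwargs` and `make_llm` are hypothetical names chosen for illustration, and the values are examples rather than recommendations. The LangChain import is deferred into the function so the snippet can be loaded even where langchain_community is not installed.

```python
from typing import Any, Dict

# Keyword arguments that mirror fields of _OllamaCommon.
OLLAMA_KWARGS: Dict[str, Any] = {
    "base_url": "http://localhost:11434",  # where `ollama serve` listens
    "model": "qwen:7b",                    # must already be pulled
    "temperature": 0.0,                    # least random sampling
    "num_ctx": 2048,                       # context window size
}


def merged_kwargs(**overrides: Any) -> Dict[str, Any]:
    """Return the defaults above with any per-call overrides applied."""
    return {**OLLAMA_KWARGS, **overrides}


def make_llm(**overrides: Any):
    """Build a langchain_community Ollama LLM from the merged settings."""
    # Imported lazily; requires langchain_community to be installed.
    from langchain_community.llms import Ollama

    return Ollama(**merged_kwargs(**overrides))
```

With the server running, `make_llm(temperature=0.2).invoke("Introduce yourself in one sentence.")` would send the prompt to the local model and return its reply as a string.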

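(1) Answering a user's question from given information

Here the information we supply is ["Xiao Ming is a scientist", "Xiao Ming works in the balala region"], and the model is asked to introduce Xiao Ming.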
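
A minimal sketch of how this could look, assuming the two facts are indexed with FAISS and embedded with the same qwen:7b model. `FACTS` and `build_fact_chain` are hypothetical names, the prompt wording is illustrative, and the snippet assumes langchain, langchain_community and faiss-cpu are installed and that ollama serve is running.

```python
from typing import List

# The facts the model is allowed to use.
FACTS: List[str] = [
    "Xiao Ming is a scientist",
    "Xiao Ming works in the balala region",
]


def build_fact_chain(facts: List[str], model: str = "qwen:7b"):
    """Build a retrieval chain that answers questions from `facts`."""
    # Imported lazily so the module loads even without LangChain installed.
    from langchain.chains import create_retrieval_chain
    from langchain.chains.combine_documents import create_stuff_documents_chain
    from langchain_community.chat_models import ChatOllama
    from langchain_community.embeddings import OllamaEmbeddings
    from langchain_community.vectorstores import FAISS
    from langchain_core.prompts import ChatPromptTemplate

    llm = ChatOllama(model=model, temperature=0)
    # Embed each fact and store it in an in-memory FAISS index.
    vector = FAISS.from_texts(facts, OllamaEmbeddings(model=model))
    prompt = ChatPromptTemplate.from_messages([
        ("system", "Answer using only this information:\n{context}"),
        ("user", "{input}"),
    ])
    # Retrieved facts are stuffed into {context} before the model is called.
    document_chain = create_stuff_documents_chain(llm, prompt)
    return create_retrieval_chain(vector.as_retriever(), document_chain)
```

A call such as `build_fact_chain(FACTS).invoke({"input": "Introduce Xiao Ming"})["answer"]` would then return the model's introduction.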

(2) Answering questions about a document after "reading" it

    from langchain_community.document_loaders import PyPDFLoader
    from langchain_community.vectorstores import FAISS
    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain_core.messages import AIMessage, HumanMessage
    from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
    from langchain.chains.combine_documents import create_stuff_documents_chain
    from langchain.chains import create_retrieval_chain, create_history_aware_retriever
    from langchain_community.chat_models import ChatOllama
    from langchain_community.embeddings import OllamaEmbeddings

    model = "qwen:7b"
    llm = ChatOllama(model=model, temperature=0)

    # PyPDFLoader returns one Document per page; keep only the first 10 pages.
    loader = PyPDFLoader('../file/test.pdf')
    docs = loader.load()[:10]

    # Split the pages into chunks and index them with embeddings from the same model.
    embeddings = OllamaEmbeddings(model=model)
    text_splitter = RecursiveCharacterTextSplitter()
    documents = text_splitter.split_documents(docs)
    vector = FAISS.from_documents(documents, embeddings)
    # For example (1), index the given facts instead:
    # vector = FAISS.from_texts(["Xiao Ming is a scientist", "Xiao Ming works in the balala region"], embeddings)
    retriever = vector.as_retriever()

    # Step 1: turn the conversation so far into a search query for the retriever.
    prompt1 = ChatPromptTemplate.from_messages([
        MessagesPlaceholder(variable_name="chat_history"),
        ("user", "{input}"),
        ("user", "Given the above conversation, generate a search query to look up information relevant to the conversation"),
    ])
    retriever_chain = create_history_aware_retriever(llm, retriever, prompt1)

    # Step 2: answer from the retrieved chunks, which are inserted as {context}.
    prompt2 = ChatPromptTemplate.from_messages([
        ("system", "Answer the question based on the article content: {context}"),
        MessagesPlaceholder(variable_name="chat_history"),
        ("user", "{input}"),
    ])
    document_chain = create_stuff_documents_chain(llm, prompt2)
    retrieval_chain = create_retrieval_chain(retriever_chain, document_chain)

    chat_history = []
    while True:
        question = input('User: ')
        if question.strip().lower() in {"exit", "quit"}:
            break
        response = retrieval_chain.invoke({
            "chat_history": chat_history,
            "input": question,
        })
        answer = response["answer"]
        # Store typed messages so the model can tell user turns from its own replies.
        chat_history.extend([HumanMessage(content=question), AIMessage(content=answer)])
        print('AI:', answer)
