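🍨 This post is a study-log entry from the [🔗365-Day Deep Learning Training Camp]

🍖 Original author: [K同学啊 | tutoring and custom projects available]

Requirements:

- Insert the SE-Net channel attention mechanism into a DenseNet-family model and use it for monkeypox recognition

- Consider whether the improvement can be transferred to other settings

- Tune the model to reach 89% accuracy on the test set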

I. Basic Configuration

- Language: Python 3.8

- IDE: PyCharm

- Deep learning environment:

  - torch==1.12.1+cu113

  - torchvision==0.13.1+cu113

II. Preparation

1. Set up the GPU

import pathlib

import torch

import torch.nn as nn

import torch.nn.functional as F

from torchvision import transforms, datasets

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(device)

2. Import the data

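The dataset used in this project is not part of any public dataset collection. You need to place it in a local directory yourself, with one subfolder per class (the layout `datasets.ImageFolder` expects), and set that path for the steps that follow.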
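The code below assumes a `./data/<class name>/<image file>` layout. Here is a minimal, self-contained sketch of that assumption. The helper names `list_class_names` and `count_images` and the extension list are illustrative and do not come from the original. The sketch builds a throwaway directory tree and reads the class names with `pathlib` in a way that works on both Windows and Linux/macOS:

```python
import pathlib
import tempfile

# Assumed image extensions; torchvision's ImageFolder accepts a longer list.
IMG_EXTENSIONS = (".jpg", ".jpeg", ".png", ".bmp")


def list_class_names(data_dir):
    """Sub-directory names, sorted the same way ImageFolder assigns class indices."""
    root = pathlib.Path(data_dir)
    # path.name is portable; str(path).split("\\") only works with Windows separators
    return sorted(p.name for p in root.iterdir() if p.is_dir())


def count_images(data_dir):
    """Count files with an image extension one level below each class folder."""
    root = pathlib.Path(data_dir)
    return sum(1 for p in root.glob("*/*") if p.suffix.lower() in IMG_EXTENSIONS)


if __name__ == "__main__":
    # Throwaway tree mimicking ./data/<class>/<image>
    with tempfile.TemporaryDirectory() as tmp:
        root = pathlib.Path(tmp)
        for cls, n in [("Others", 2), ("Monkeypox", 3)]:
            (root / cls).mkdir()
            for i in range(n):
                (root / cls / f"{i}.jpg").touch()
        print(list_class_names(root))  # ['Monkeypox', 'Others']
        print(count_images(root))      # 5
```

Sorting matters because the order returned by `glob` is not guaranteed. `ImageFolder` assigns indices in sorted order, which is why `Monkeypox` maps to 0 and `Others` to 1.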

Run the following code:

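data_dir = './data/'
data_dir = pathlib.Path(data_dir)

data_paths = list(data_dir.glob('*'))
# path.name works on every OS; splitting str(path) on "\\" only works on Windows.
# Sorting gives the same class order that ImageFolder uses.
classeNames = sorted(path.name for path in data_paths)
print(classeNames)

image_count = len(list(data_dir.glob('*/*')))
print("Total number of images:", image_count)

This produces the following output:

['Monkeypox', 'Others']

Total number of images: 2142

Next, we preprocess the whole dataset with transforms.Compose: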

train_transforms = transforms.Compose([
    transforms.Resize([224, 224]),  # resize every input image to the same size
    transforms.ToTensor(),          # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
    transforms.Normalize(           # standardize each channel as (x - mean) / std, which helps the model converge
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])  # the commonly used ImageNet channel statistics, not values computed from this dataset
])

test_transform = transforms.Compose([
    transforms.Resize([224, 224]),  # resize every input image to the same size
    transforms.ToTensor(),          # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
    transforms.Normalize(           # standardize each channel as (x - mean) / std, which helps the model converge
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])  # the commonly used ImageNet channel statistics, not values computed from this dataset
])
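`transforms.Normalize` applies `(x - mean) / std` to each channel independently, after `ToTensor` has scaled pixels to [0, 1]. It shifts and rescales the data but does not turn it into a Gaussian distribution. The values used above are the ImageNet statistics. Below is a plain-Python sketch of the per-pixel arithmetic, plus one way dataset-specific statistics could be computed instead. The function names are hypothetical.

```python
import math

# ImageNet per-channel statistics, as passed to transforms.Normalize above
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Per-channel (x - mean) / std for one RGB pixel already scaled to [0, 1] by ToTensor."""
    return tuple((x - m) / s for x, m, s in zip(rgb, mean, std))


def channel_stats(pixels):
    """Per-channel mean and population std of a list of RGB triples in [0, 1]."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    stds = [math.sqrt(sum((p[c] - means[c]) ** 2 for p in pixels) / n) for c in range(3)]
    return means, stds


if __name__ == "__main__":
    print(normalize_pixel(IMAGENET_MEAN))    # (0.0, 0.0, 0.0)
    print(normalize_pixel((1.0, 1.0, 1.0)))  # a white pixel maps to roughly (2.25, 2.43, 2.64)
```

To use statistics from this dataset, you would run something like `channel_stats` over the training images only, so that the test set does not leak into preprocessing.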

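total_data = datasets.ImageFolder("./data/", transform=train_transforms)
print(total_data.class_to_idx)

This produces the following output:

{'Monkeypox': 0, 'Others': 1}

Because every image is loaded through train_transforms, test_transform is not actually used. The two pipelines are identical in this post, so this makes no difference to the results.

3. Split the dataset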

Split the dataset into training and test sets at an 80:20 ratio:

train_size = int(0.8 * len(total_data))

test_size = len(total_data) - train_size

train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])
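With 2142 images, `int(0.8 * 2142)` gives 1713 training images and 429 test images. With `batch_size = 4`, that is 429 training batches and 108 test batches per epoch. `random_split` draws a new random permutation on every run unless it is given a seeded generator, for example `generator=torch.Generator().manual_seed(42)`. The sketch below reproduces the arithmetic and the idea of a seeded split in plain Python. The helper names are hypothetical, and it does not reproduce PyTorch's actual permutation.

```python
import math
import random


def split_sizes(n, train_frac=0.8):
    """Same arithmetic as train_size = int(0.8 * len(total_data)); the rest goes to the test set."""
    train = int(train_frac * n)
    return train, n - train


def seeded_split(n, train_size, seed=42):
    """Reproducible index split: the same seed always yields the same partition."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return indices[:train_size], indices[train_size:]


def num_batches(n, batch_size, drop_last=False):
    """Batches per epoch for a DataLoader (the last batch may be smaller unless drop_last)."""
    return n // batch_size if drop_last else math.ceil(n / batch_size)


if __name__ == "__main__":
    train_n, test_n = split_sizes(2142)
    print(train_n, test_n)                                  # 1713 429
    print(num_batches(train_n, 4), num_batches(test_n, 4))  # 429 108
```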

Next, wrap the resulting training and test sets in DataLoaders (batch_size is set to 4 here because of limited GPU memory):

batch_size = 4

train_dl = torch.utils.data.DataLoader(train_dataset,
                                       batch_size=batch_size,
                                       shuffle=True,
                                       num_workers=0)

test_dl = torch.utils.data.DataLoader(test_dataset,
                                      batch_size=batch_size,
                                      shuffle=False,  # evaluation does not need shuffling
                                      num_workers=0)

Then check one batch with:

for X, y in test_dl:
    print("Shape of X [N, C, H, W]: ", X.shape)
    print("Shape of y: ", y.shape, y.dtype)
    break

This prints the shapes of one batch from the test set:

Shape of X [N, C, H, W]: torch.Size([4, 3, 224, 224])

Shape of y: torch.Size([4]) torch.int64

4. Build the model

1. Define the model
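`SE_Block` below implements the three SE steps:

- Squeeze: global average pooling down to one value per channel.
- Excitation: Linear, ReLU, Linear, Sigmoid, producing a weight in (0, 1) for each channel.
- Scale: multiply each channel by its weight.

In this post the block is applied to the `growth_rate` = 32 new feature maps of every dense layer. With `reduction=16`, the hidden layer therefore has only 32 // 16 = 2 units, which matches the `Linear [-1, 2]` rows in the model summary. Below is a minimal plain-Python sketch for a single sample. It assumes bias-free FC layers as in `SE_Block`, and the weights are given explicitly instead of learned.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def se_block(x, w1, w2):
    """Squeeze-and-Excitation for one sample.

    x  : C channels, each an H x W list of lists
    w1 : (C // r) x C weight matrix of the first FC layer (bias-free, like SE_Block)
    w2 : C x (C // r) weight matrix of the second FC layer (bias-free)
    Returns the reweighted feature maps and the per-channel weights.
    """
    # Squeeze: global average pooling -> one number per channel
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> Sigmoid; each row of w is one output unit, as in nn.Linear
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    weights = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: multiply every position of channel c by weights[c]
    out = [[[v * s for v in r] for r in ch] for ch, s in zip(x, weights)]
    return out, weights


if __name__ == "__main__":
    # 2 channels of 2x2, reduction 2 -> 1 hidden unit
    x = [[[1.0, 1.0], [1.0, 1.0]],   # channel 0: mean 1
         [[0.0, 0.0], [0.0, 0.0]]]   # channel 1: mean 0
    out, weights = se_block(x, w1=[[1.0, 0.0]], w2=[[1.0], [-1.0]])
    print([round(w, 4) for w in weights])  # [0.7311, 0.2689]
```

Because each weight only depends on channel-wise averages, the same block can be inserted after any convolutional stage. The commented-out line in `DenseNet.__init__`, which adds an SE block after each transition layer, is one such alternative placement.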

from collections import OrderedDict

import torch.utils.checkpoint as cp


def _bn_function_factory(norm, relu, conv):
    # Concatenate all previous feature maps along the channel axis,
    # then apply BN -> ReLU -> 1x1 conv (the bottleneck)
    def bn_function(*inputs):
        concated_features = torch.cat(inputs, 1)
        bottleneck_output = conv(relu(norm(concated_features)))
        return bottleneck_output

    return bn_function

class _DenseLayer(nn.Module):
    def __init__(self, num_input_features, growth_rate, bn_size, drop_rate, efficient=False):
        super(_DenseLayer, self).__init__()
        self.add_module('norm1', nn.BatchNorm2d(num_input_features))
        self.add_module('relu1', nn.ReLU(inplace=True))
        self.add_module('conv1', nn.Conv2d(num_input_features, bn_size * growth_rate,
                                           kernel_size=1, stride=1, bias=False))
        self.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate))
        self.add_module('relu2', nn.ReLU(inplace=True))
        self.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate,
                                           kernel_size=3, stride=1, padding=1, bias=False))
        # SE channel attention on the growth_rate new feature maps produced by this layer
        self.add_module('SE_Block', SE_Block(growth_rate, reduction=16))
        self.drop_rate = drop_rate
        self.efficient = efficient

    def forward(self, *prev_features):
        bn_function = _bn_function_factory(self.norm1, self.relu1, self.conv1)
        # efficient=True saves memory by recomputing the bottleneck during the backward pass
        if self.efficient and any(prev_feature.requires_grad for prev_feature in prev_features):
            bottleneck_output = cp.checkpoint(bn_function, *prev_features)
        else:
            bottleneck_output = bn_function(*prev_features)
        new_features = self.SE_Block(self.conv2(self.relu2(self.norm2(bottleneck_output))))
        if self.drop_rate > 0:
            new_features = F.dropout(new_features, p=self.drop_rate, training=self.training)
        return new_features

class _Transition(nn.Sequential):
    def __init__(self, num_input_features, num_output_features):
        super(_Transition, self).__init__()
        self.add_module('norm', nn.BatchNorm2d(num_input_features))
        self.add_module('relu', nn.ReLU(inplace=True))
        self.add_module('conv', nn.Conv2d(num_input_features, num_output_features,
                                          kernel_size=1, stride=1, bias=False))
        self.add_module('pool', nn.AvgPool2d(kernel_size=2, stride=2))

class _DenseBlock(nn.Module):
    def __init__(self, num_layers, num_input_features, bn_size, growth_rate, drop_rate, efficient=False):
        super(_DenseBlock, self).__init__()
        for i in range(num_layers):
            layer = _DenseLayer(
                num_input_features + i * growth_rate,
                growth_rate=growth_rate,
                bn_size=bn_size,
                drop_rate=drop_rate,
                efficient=efficient,
            )
            self.add_module('denselayer%d' % (i + 1), layer)

    def forward(self, init_features):
        # Each layer receives the concatenation of all earlier outputs in the block
        features = [init_features]
        for name, layer in self.named_children():
            new_features = layer(*features)
            features.append(new_features)
        return torch.cat(features, 1)

class SE_Block(nn.Module):
    def __init__(self, ch_in, reduction=16):
        super(SE_Block, self).__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)  # global adaptive average pooling
        self.fc = nn.Sequential(
            nn.Linear(ch_in, ch_in // reduction, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(ch_in // reduction, ch_in, bias=False),
            nn.Sigmoid()
        )

    def forward(self, x):
        b, c, _, _ = x.size()
        y = self.avg_pool(x).view(b, c)  # squeeze: one global descriptor per channel
        y = self.fc(y).view(b, c, 1, 1)  # excitation: per-channel weights in (0, 1) computed from global context
        return x * y.expand_as(x)        # scale: reweight every channel by its attention weight

class DenseNet(nn.Module):
    def __init__(self, growth_rate, block_config, num_init_features=24, compression=0.5, bn_size=4, drop_rate=0,
                 num_classes=10, small_inputs=True, efficient=False):
        super(DenseNet, self).__init__()
        assert 0 < compression <= 1, 'compression of densenet should be between 0 and 1'

        # First convolution
        if small_inputs:
            # 3x3 stride-1 stem without pooling: no spatial downsampling before the first block
            self.features = nn.Sequential(OrderedDict([
                ('conv0', nn.Conv2d(3, num_init_features, kernel_size=3, stride=1, padding=1, bias=False)),
            ]))
        else:
            # ImageNet-style stem: 7x7 stride-2 conv plus 3x3 stride-2 max pooling (4x downsampling)
            self.features = nn.Sequential(OrderedDict([
                ('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2, padding=3, bias=False)),
            ]))
            self.features.add_module('norm0', nn.BatchNorm2d(num_init_features))
            self.features.add_module('relu0', nn.ReLU(inplace=True))
            self.features.add_module('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1,
                                                           ceil_mode=False))

        # Each denseblock
        num_features = num_init_features
        for i, num_layers in enumerate(block_config):
            block = _DenseBlock(
                num_layers=num_layers,
                num_input_features=num_features,
                bn_size=bn_size,
                growth_rate=growth_rate,
                drop_rate=drop_rate,
                efficient=efficient,
            )
            self.features.add_module('denseblock%d' % (i + 1), block)
            num_features = num_features + num_layers * growth_rate
            if i != len(block_config) - 1:
                trans = _Transition(num_input_features=num_features,
                                    num_output_features=int(num_features * compression))
                self.features.add_module('transition%d' % (i + 1), trans)
                num_features = int(num_features * compression)
                # Alternative placement (disabled): an SE block after each transition layer
                # self.features.add_module('SE_Block%d' % (i + 1), SE_Block(num_features, reduction=16))

        # Final batch norm
        self.features.add_module('norm_final', nn.BatchNorm2d(num_features))

        # Linear layer
        self.classifier = nn.Linear(num_features, num_classes)

    def forward(self, x):
        features = self.features(x)
        out = F.relu(features, inplace=True)
        out = F.adaptive_avg_pool2d(out, (1, 1))
        out = torch.flatten(out, 1)
        out = self.classifier(out)
        return out

2. Inspect the model
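Most numbers in the summary below can be checked by hand:

- Each dense layer adds `growth_rate` channels, and each transition multiplies the channel count by `compression`.
- Every layer's parameters come from two BatchNorms, two bias-free convolutions and the two bias-free SE linear layers.
- The spatial size depends on `small_inputs`. With the default `small_inputs=True`, the stem does not downsample, so the first block works at 224×224. That largely explains the ~4.8 GB forward/backward estimate and the small batch size.

Below is a plain-Python sketch of this bookkeeping. The helper names are hypothetical.

```python
def densenet_channels(num_init_features, growth_rate, block_config, compression=0.5):
    """(channels in, channels out) for every dense block, mirroring DenseNet.__init__."""
    stages = []
    c = num_init_features
    for i, num_layers in enumerate(block_config):
        c_in = c
        c = c + num_layers * growth_rate      # every layer concatenates growth_rate new maps
        stages.append((c_in, c))
        if i != len(block_config) - 1:
            c = int(c * compression)          # transition layer compresses the channels
    return stages


def dense_layer_params(c_in, growth_rate=32, bn_size=4, reduction=16):
    """Trainable parameters of one _DenseLayer including its SE_Block."""
    mid = bn_size * growth_rate
    bn1 = 2 * c_in                            # BatchNorm weight + bias
    conv1 = c_in * mid                        # 1x1 conv, bias=False
    bn2 = 2 * mid
    conv2 = mid * growth_rate * 3 * 3         # 3x3 conv, bias=False
    se = 2 * growth_rate * (growth_rate // reduction)  # two bias-free Linear layers
    return bn1 + conv1 + bn2 + conv2 + se


def conv_out(size, kernel, stride, padding):
    """Output size of a conv or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def stage_resolutions(size, small_inputs, num_blocks=4):
    """Spatial size seen by each dense block."""
    if small_inputs:
        size = conv_out(size, 3, 1, 1)        # conv0: 3x3, stride 1
    else:
        size = conv_out(size, 7, 2, 3)        # conv0: 7x7, stride 2
        size = conv_out(size, 3, 2, 1)        # pool0: 3x3 max pool, stride 2
    sizes = [size]
    for _ in range(num_blocks - 1):
        size = conv_out(size, 2, 2, 0)        # AvgPool2d(2, 2) in each transition
        sizes.append(size)
    return sizes


def feature_map_mb(channels, height, width, bytes_per_value=4):
    """Size of one float32 feature map for a single image, in MiB."""
    return channels * height * width * bytes_per_value / 2 ** 20


if __name__ == "__main__":
    print(densenet_channels(64, 32, (6, 12, 24, 16)))   # [(64, 256), (128, 512), (256, 1024), (512, 1024)]
    print(dense_layer_params(64))                       # 45568
    print(stage_resolutions(224, small_inputs=True))    # [224, 112, 56, 28]
    print(stage_resolutions(224, small_inputs=False))   # [56, 28, 14, 7]
    print(feature_map_mb(256, 224, 224))                # 49.0
```

The value 45568 equals the first dense layer's rows in the summary: 128 + 8,192 + 256 + 36,864 + 64 + 64. With `small_inputs=False` the four stages would run at 56, 28, 14 and 7 pixels. That makes each first-block feature map 16× smaller.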

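x = torch.randn(2, 3, 224, 224)
# block_config=(6, 12, 24, 16) with growth_rate=32 and 64 initial features is the DenseNet-121 configuration.
# The dataset has only 2 classes; num_classes=4 is the setting that produced the outputs below,
# while num_classes=len(classeNames) would match the data.
# small_inputs is left at its default (True), so the first dense block runs at the full 224x224 resolution.
model = DenseNet(growth_rate=32, block_config=(6, 12, 24, 16), compression=0.5,
                 num_init_features=64, bn_size=4, drop_rate=0.2, num_classes=4, efficient=True)
out = model(x)
print('out.shape: ', out.shape)
print(out)

model.to(device)

# Count the model parameters and other statistics
import torchsummary as summary
summary.summary(model, (3, 224, 224), device=device.type)  # device must match where the model lives ("cuda" or "cpu")

This produces the following output: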

out.shape: torch.Size([2, 4])

tensor([[-0.0369, -0.2944, 0.3427, -0.1464],

[-0.0143, -0.3113, 0.3137, -0.1694]], grad_fn=<AddmmBackward0>)

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 224, 224] 1,728

BatchNorm2d-2 [-1, 64, 224, 224] 128

ReLU-3 [-1, 64, 224, 224] 0

Conv2d-4 [-1, 128, 224, 224] 8,192

BatchNorm2d-5 [-1, 128, 224, 224] 256

ReLU-6 [-1, 128, 224, 224] 0

Conv2d-7 [-1, 32, 224, 224] 36,864

AdaptiveAvgPool2d-8 [-1, 32, 1, 1] 0

Linear-9 [-1, 2] 64

ReLU-10 [-1, 2] 0

Linear-11 [-1, 32] 64

Sigmoid-12 [-1, 32] 0

SE_Block-13 [-1, 32, 224, 224] 0

_DenseLayer-14 [-1, 32, 224, 224] 0

BatchNorm2d-15 [-1, 96, 224, 224] 192

ReLU-16 [-1, 96, 224, 224] 0

Conv2d-17 [-1, 128, 224, 224] 12,288

BatchNorm2d-18 [-1, 128, 224, 224] 256

ReLU-19 [-1, 128, 224, 224] 0

Conv2d-20 [-1, 32, 224, 224] 36,864

AdaptiveAvgPool2d-21 [-1, 32, 1, 1] 0

Linear-22 [-1, 2] 64

ReLU-23 [-1, 2] 0

Linear-24 [-1, 32] 64

Sigmoid-25 [-1, 32] 0

SE_Block-26 [-1, 32, 224, 224] 0

_DenseLayer-27 [-1, 32, 224, 224] 0

BatchNorm2d-28 [-1, 128, 224, 224] 256

ReLU-29 [-1, 128, 224, 224] 0

Conv2d-30 [-1, 128, 224, 224] 16,384

BatchNorm2d-31 [-1, 128, 224, 224] 256

ReLU-32 [-1, 128, 224, 224] 0

Conv2d-33 [-1, 32, 224, 224] 36,864

AdaptiveAvgPool2d-34 [-1, 32, 1, 1] 0

Linear-35 [-1, 2] 64

ReLU-36 [-1, 2] 0

Linear-37 [-1, 32] 64

Sigmoid-38 [-1, 32] 0

SE_Block-39 [-1, 32, 224, 224] 0

_DenseLayer-40 [-1, 32, 224, 224] 0

BatchNorm2d-41 [-1, 160, 224, 224] 320

ReLU-42 [-1, 160, 224, 224] 0

Conv2d-43 [-1, 128, 224, 224] 20,480

BatchNorm2d-44 [-1, 128, 224, 224] 256

ReLU-45 [-1, 128, 224, 224] 0

Conv2d-46 [-1, 32, 224, 224] 36,864

AdaptiveAvgPool2d-47 [-1, 32, 1, 1] 0

Linear-48 [-1, 2] 64

ReLU-49 [-1, 2] 0

Linear-50 [-1, 32] 64

Sigmoid-51 [-1, 32] 0

SE_Block-52 [-1, 32, 224, 224] 0

_DenseLayer-53 [-1, 32, 224, 224] 0

BatchNorm2d-54 [-1, 192, 224, 224] 384

ReLU-55 [-1, 192, 224, 224] 0

Conv2d-56 [-1, 128, 224, 224] 24,576

BatchNorm2d-57 [-1, 128, 224, 224] 256

ReLU-58 [-1, 128, 224, 224] 0

Conv2d-59 [-1, 32, 224, 224] 36,864

AdaptiveAvgPool2d-60 [-1, 32, 1, 1] 0

Linear-61 [-1, 2] 64

ReLU-62 [-1, 2] 0

Linear-63 [-1, 32] 64

Sigmoid-64 [-1, 32] 0

SE_Block-65 [-1, 32, 224, 224] 0

_DenseLayer-66 [-1, 32, 224, 224] 0

BatchNorm2d-67 [-1, 224, 224, 224] 448

ReLU-68 [-1, 224, 224, 224] 0

Conv2d-69 [-1, 128, 224, 224] 28,672

BatchNorm2d-70 [-1, 128, 224, 224] 256

ReLU-71 [-1, 128, 224, 224] 0

Conv2d-72 [-1, 32, 224, 224] 36,864

AdaptiveAvgPool2d-73 [-1, 32, 1, 1] 0

Linear-74 [-1, 2] 64

ReLU-75 [-1, 2] 0

Linear-76 [-1, 32] 64

Sigmoid-77 [-1, 32] 0

SE_Block-78 [-1, 32, 224, 224] 0

_DenseLayer-79 [-1, 32, 224, 224] 0

_DenseBlock-80 [-1, 256, 224, 224] 0

BatchNorm2d-81 [-1, 256, 224, 224] 512

ReLU-82 [-1, 256, 224, 224] 0

Conv2d-83 [-1, 128, 224, 224] 32,768

AvgPool2d-84 [-1, 128, 112, 112] 0

BatchNorm2d-85 [-1, 128, 112, 112] 256

ReLU-86 [-1, 128, 112, 112] 0

Conv2d-87 [-1, 128, 112, 112] 16,384

BatchNorm2d-88 [-1, 128, 112, 112] 256

ReLU-89 [-1, 128, 112, 112] 0

Conv2d-90 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-91 [-1, 32, 1, 1] 0

Linear-92 [-1, 2] 64

ReLU-93 [-1, 2] 0

Linear-94 [-1, 32] 64

Sigmoid-95 [-1, 32] 0

SE_Block-96 [-1, 32, 112, 112] 0

_DenseLayer-97 [-1, 32, 112, 112] 0

BatchNorm2d-98 [-1, 160, 112, 112] 320

ReLU-99 [-1, 160, 112, 112] 0

Conv2d-100 [-1, 128, 112, 112] 20,480

BatchNorm2d-101 [-1, 128, 112, 112] 256

ReLU-102 [-1, 128, 112, 112] 0

Conv2d-103 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-104 [-1, 32, 1, 1] 0

Linear-105 [-1, 2] 64

ReLU-106 [-1, 2] 0

Linear-107 [-1, 32] 64

Sigmoid-108 [-1, 32] 0

SE_Block-109 [-1, 32, 112, 112] 0

_DenseLayer-110 [-1, 32, 112, 112] 0

BatchNorm2d-111 [-1, 192, 112, 112] 384

ReLU-112 [-1, 192, 112, 112] 0

Conv2d-113 [-1, 128, 112, 112] 24,576

BatchNorm2d-114 [-1, 128, 112, 112] 256

ReLU-115 [-1, 128, 112, 112] 0

Conv2d-116 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-117 [-1, 32, 1, 1] 0

Linear-118 [-1, 2] 64

ReLU-119 [-1, 2] 0

Linear-120 [-1, 32] 64

Sigmoid-121 [-1, 32] 0

SE_Block-122 [-1, 32, 112, 112] 0

_DenseLayer-123 [-1, 32, 112, 112] 0

BatchNorm2d-124 [-1, 224, 112, 112] 448

ReLU-125 [-1, 224, 112, 112] 0

Conv2d-126 [-1, 128, 112, 112] 28,672

BatchNorm2d-127 [-1, 128, 112, 112] 256

ReLU-128 [-1, 128, 112, 112] 0

Conv2d-129 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-130 [-1, 32, 1, 1] 0

Linear-131 [-1, 2] 64

ReLU-132 [-1, 2] 0

Linear-133 [-1, 32] 64

Sigmoid-134 [-1, 32] 0

SE_Block-135 [-1, 32, 112, 112] 0

_DenseLayer-136 [-1, 32, 112, 112] 0

BatchNorm2d-137 [-1, 256, 112, 112] 512

ReLU-138 [-1, 256, 112, 112] 0

Conv2d-139 [-1, 128, 112, 112] 32,768

BatchNorm2d-140 [-1, 128, 112, 112] 256

ReLU-141 [-1, 128, 112, 112] 0

Conv2d-142 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-143 [-1, 32, 1, 1] 0

Linear-144 [-1, 2] 64

ReLU-145 [-1, 2] 0

Linear-146 [-1, 32] 64

Sigmoid-147 [-1, 32] 0

SE_Block-148 [-1, 32, 112, 112] 0

_DenseLayer-149 [-1, 32, 112, 112] 0

BatchNorm2d-150 [-1, 288, 112, 112] 576

ReLU-151 [-1, 288, 112, 112] 0

Conv2d-152 [-1, 128, 112, 112] 36,864

BatchNorm2d-153 [-1, 128, 112, 112] 256

ReLU-154 [-1, 128, 112, 112] 0

Conv2d-155 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-156 [-1, 32, 1, 1] 0

Linear-157 [-1, 2] 64

ReLU-158 [-1, 2] 0

Linear-159 [-1, 32] 64

Sigmoid-160 [-1, 32] 0

SE_Block-161 [-1, 32, 112, 112] 0

_DenseLayer-162 [-1, 32, 112, 112] 0

BatchNorm2d-163 [-1, 320, 112, 112] 640

ReLU-164 [-1, 320, 112, 112] 0

Conv2d-165 [-1, 128, 112, 112] 40,960

BatchNorm2d-166 [-1, 128, 112, 112] 256

ReLU-167 [-1, 128, 112, 112] 0

Conv2d-168 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-169 [-1, 32, 1, 1] 0

Linear-170 [-1, 2] 64

ReLU-171 [-1, 2] 0

Linear-172 [-1, 32] 64

Sigmoid-173 [-1, 32] 0

SE_Block-174 [-1, 32, 112, 112] 0

_DenseLayer-175 [-1, 32, 112, 112] 0

BatchNorm2d-176 [-1, 352, 112, 112] 704

ReLU-177 [-1, 352, 112, 112] 0

Conv2d-178 [-1, 128, 112, 112] 45,056

BatchNorm2d-179 [-1, 128, 112, 112] 256

ReLU-180 [-1, 128, 112, 112] 0

Conv2d-181 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-182 [-1, 32, 1, 1] 0

Linear-183 [-1, 2] 64

ReLU-184 [-1, 2] 0

Linear-185 [-1, 32] 64

Sigmoid-186 [-1, 32] 0

SE_Block-187 [-1, 32, 112, 112] 0

_DenseLayer-188 [-1, 32, 112, 112] 0

BatchNorm2d-189 [-1, 384, 112, 112] 768

ReLU-190 [-1, 384, 112, 112] 0

Conv2d-191 [-1, 128, 112, 112] 49,152

BatchNorm2d-192 [-1, 128, 112, 112] 256

ReLU-193 [-1, 128, 112, 112] 0

Conv2d-194 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-195 [-1, 32, 1, 1] 0

Linear-196 [-1, 2] 64

ReLU-197 [-1, 2] 0

Linear-198 [-1, 32] 64

Sigmoid-199 [-1, 32] 0

SE_Block-200 [-1, 32, 112, 112] 0

_DenseLayer-201 [-1, 32, 112, 112] 0

BatchNorm2d-202 [-1, 416, 112, 112] 832

ReLU-203 [-1, 416, 112, 112] 0

Conv2d-204 [-1, 128, 112, 112] 53,248

BatchNorm2d-205 [-1, 128, 112, 112] 256

ReLU-206 [-1, 128, 112, 112] 0

Conv2d-207 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-208 [-1, 32, 1, 1] 0

Linear-209 [-1, 2] 64

ReLU-210 [-1, 2] 0

Linear-211 [-1, 32] 64

Sigmoid-212 [-1, 32] 0

SE_Block-213 [-1, 32, 112, 112] 0

_DenseLayer-214 [-1, 32, 112, 112] 0

BatchNorm2d-215 [-1, 448, 112, 112] 896

ReLU-216 [-1, 448, 112, 112] 0

Conv2d-217 [-1, 128, 112, 112] 57,344

BatchNorm2d-218 [-1, 128, 112, 112] 256

ReLU-219 [-1, 128, 112, 112] 0

Conv2d-220 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-221 [-1, 32, 1, 1] 0

Linear-222 [-1, 2] 64

ReLU-223 [-1, 2] 0

Linear-224 [-1, 32] 64

Sigmoid-225 [-1, 32] 0

SE_Block-226 [-1, 32, 112, 112] 0

_DenseLayer-227 [-1, 32, 112, 112] 0

BatchNorm2d-228 [-1, 480, 112, 112] 960

ReLU-229 [-1, 480, 112, 112] 0

Conv2d-230 [-1, 128, 112, 112] 61,440

BatchNorm2d-231 [-1, 128, 112, 112] 256

ReLU-232 [-1, 128, 112, 112] 0

Conv2d-233 [-1, 32, 112, 112] 36,864

AdaptiveAvgPool2d-234 [-1, 32, 1, 1] 0

Linear-235 [-1, 2] 64

ReLU-236 [-1, 2] 0

Linear-237 [-1, 32] 64

Sigmoid-238 [-1, 32] 0

SE_Block-239 [-1, 32, 112, 112] 0

_DenseLayer-240 [-1, 32, 112, 112] 0

_DenseBlock-241 [-1, 512, 112, 112] 0

BatchNorm2d-242 [-1, 512, 112, 112] 1,024

ReLU-243 [-1, 512, 112, 112] 0

Conv2d-244 [-1, 256, 112, 112] 131,072

AvgPool2d-245 [-1, 256, 56, 56] 0

BatchNorm2d-246 [-1, 256, 56, 56] 512

ReLU-247 [-1, 256, 56, 56] 0

Conv2d-248 [-1, 128, 56, 56] 32,768

BatchNorm2d-249 [-1, 128, 56, 56] 256

ReLU-250 [-1, 128, 56, 56] 0

Conv2d-251 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-252 [-1, 32, 1, 1] 0

Linear-253 [-1, 2] 64

ReLU-254 [-1, 2] 0

Linear-255 [-1, 32] 64

Sigmoid-256 [-1, 32] 0

SE_Block-257 [-1, 32, 56, 56] 0

_DenseLayer-258 [-1, 32, 56, 56] 0

BatchNorm2d-259 [-1, 288, 56, 56] 576

ReLU-260 [-1, 288, 56, 56] 0

Conv2d-261 [-1, 128, 56, 56] 36,864

BatchNorm2d-262 [-1, 128, 56, 56] 256

ReLU-263 [-1, 128, 56, 56] 0

Conv2d-264 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-265 [-1, 32, 1, 1] 0

Linear-266 [-1, 2] 64

ReLU-267 [-1, 2] 0

Linear-268 [-1, 32] 64

Sigmoid-269 [-1, 32] 0

SE_Block-270 [-1, 32, 56, 56] 0

_DenseLayer-271 [-1, 32, 56, 56] 0

BatchNorm2d-272 [-1, 320, 56, 56] 640

ReLU-273 [-1, 320, 56, 56] 0

Conv2d-274 [-1, 128, 56, 56] 40,960

BatchNorm2d-275 [-1, 128, 56, 56] 256

ReLU-276 [-1, 128, 56, 56] 0

Conv2d-277 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-278 [-1, 32, 1, 1] 0

Linear-279 [-1, 2] 64

ReLU-280 [-1, 2] 0

Linear-281 [-1, 32] 64

Sigmoid-282 [-1, 32] 0

SE_Block-283 [-1, 32, 56, 56] 0

_DenseLayer-284 [-1, 32, 56, 56] 0

BatchNorm2d-285 [-1, 352, 56, 56] 704

ReLU-286 [-1, 352, 56, 56] 0

Conv2d-287 [-1, 128, 56, 56] 45,056

BatchNorm2d-288 [-1, 128, 56, 56] 256

ReLU-289 [-1, 128, 56, 56] 0

Conv2d-290 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-291 [-1, 32, 1, 1] 0

Linear-292 [-1, 2] 64

ReLU-293 [-1, 2] 0

Linear-294 [-1, 32] 64

Sigmoid-295 [-1, 32] 0

SE_Block-296 [-1, 32, 56, 56] 0

_DenseLayer-297 [-1, 32, 56, 56] 0

BatchNorm2d-298 [-1, 384, 56, 56] 768

ReLU-299 [-1, 384, 56, 56] 0

Conv2d-300 [-1, 128, 56, 56] 49,152

BatchNorm2d-301 [-1, 128, 56, 56] 256

ReLU-302 [-1, 128, 56, 56] 0

Conv2d-303 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-304 [-1, 32, 1, 1] 0

Linear-305 [-1, 2] 64

ReLU-306 [-1, 2] 0

Linear-307 [-1, 32] 64

Sigmoid-308 [-1, 32] 0

SE_Block-309 [-1, 32, 56, 56] 0

_DenseLayer-310 [-1, 32, 56, 56] 0

BatchNorm2d-311 [-1, 416, 56, 56] 832

ReLU-312 [-1, 416, 56, 56] 0

Conv2d-313 [-1, 128, 56, 56] 53,248

BatchNorm2d-314 [-1, 128, 56, 56] 256

ReLU-315 [-1, 128, 56, 56] 0

Conv2d-316 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-317 [-1, 32, 1, 1] 0

Linear-318 [-1, 2] 64

ReLU-319 [-1, 2] 0

Linear-320 [-1, 32] 64

Sigmoid-321 [-1, 32] 0

SE_Block-322 [-1, 32, 56, 56] 0

_DenseLayer-323 [-1, 32, 56, 56] 0

BatchNorm2d-324 [-1, 448, 56, 56] 896

ReLU-325 [-1, 448, 56, 56] 0

Conv2d-326 [-1, 128, 56, 56] 57,344

BatchNorm2d-327 [-1, 128, 56, 56] 256

ReLU-328 [-1, 128, 56, 56] 0

Conv2d-329 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-330 [-1, 32, 1, 1] 0

Linear-331 [-1, 2] 64

ReLU-332 [-1, 2] 0

Linear-333 [-1, 32] 64

Sigmoid-334 [-1, 32] 0

SE_Block-335 [-1, 32, 56, 56] 0

_DenseLayer-336 [-1, 32, 56, 56] 0

BatchNorm2d-337 [-1, 480, 56, 56] 960

ReLU-338 [-1, 480, 56, 56] 0

Conv2d-339 [-1, 128, 56, 56] 61,440

BatchNorm2d-340 [-1, 128, 56, 56] 256

ReLU-341 [-1, 128, 56, 56] 0

Conv2d-342 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-343 [-1, 32, 1, 1] 0

Linear-344 [-1, 2] 64

ReLU-345 [-1, 2] 0

Linear-346 [-1, 32] 64

Sigmoid-347 [-1, 32] 0

SE_Block-348 [-1, 32, 56, 56] 0

_DenseLayer-349 [-1, 32, 56, 56] 0

BatchNorm2d-350 [-1, 512, 56, 56] 1,024

ReLU-351 [-1, 512, 56, 56] 0

Conv2d-352 [-1, 128, 56, 56] 65,536

BatchNorm2d-353 [-1, 128, 56, 56] 256

ReLU-354 [-1, 128, 56, 56] 0

Conv2d-355 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-356 [-1, 32, 1, 1] 0

Linear-357 [-1, 2] 64

ReLU-358 [-1, 2] 0

Linear-359 [-1, 32] 64

Sigmoid-360 [-1, 32] 0

SE_Block-361 [-1, 32, 56, 56] 0

_DenseLayer-362 [-1, 32, 56, 56] 0

BatchNorm2d-363 [-1, 544, 56, 56] 1,088

ReLU-364 [-1, 544, 56, 56] 0

Conv2d-365 [-1, 128, 56, 56] 69,632

BatchNorm2d-366 [-1, 128, 56, 56] 256

ReLU-367 [-1, 128, 56, 56] 0

Conv2d-368 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-369 [-1, 32, 1, 1] 0

Linear-370 [-1, 2] 64

ReLU-371 [-1, 2] 0

Linear-372 [-1, 32] 64

Sigmoid-373 [-1, 32] 0

SE_Block-374 [-1, 32, 56, 56] 0

_DenseLayer-375 [-1, 32, 56, 56] 0

BatchNorm2d-376 [-1, 576, 56, 56] 1,152

ReLU-377 [-1, 576, 56, 56] 0

Conv2d-378 [-1, 128, 56, 56] 73,728

BatchNorm2d-379 [-1, 128, 56, 56] 256

ReLU-380 [-1, 128, 56, 56] 0

Conv2d-381 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-382 [-1, 32, 1, 1] 0

Linear-383 [-1, 2] 64

ReLU-384 [-1, 2] 0

Linear-385 [-1, 32] 64

Sigmoid-386 [-1, 32] 0

SE_Block-387 [-1, 32, 56, 56] 0

_DenseLayer-388 [-1, 32, 56, 56] 0

BatchNorm2d-389 [-1, 608, 56, 56] 1,216

ReLU-390 [-1, 608, 56, 56] 0

Conv2d-391 [-1, 128, 56, 56] 77,824

BatchNorm2d-392 [-1, 128, 56, 56] 256

ReLU-393 [-1, 128, 56, 56] 0

Conv2d-394 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-395 [-1, 32, 1, 1] 0

Linear-396 [-1, 2] 64

ReLU-397 [-1, 2] 0

Linear-398 [-1, 32] 64

Sigmoid-399 [-1, 32] 0

SE_Block-400 [-1, 32, 56, 56] 0

_DenseLayer-401 [-1, 32, 56, 56] 0

BatchNorm2d-402 [-1, 640, 56, 56] 1,280

ReLU-403 [-1, 640, 56, 56] 0

Conv2d-404 [-1, 128, 56, 56] 81,920

BatchNorm2d-405 [-1, 128, 56, 56] 256

ReLU-406 [-1, 128, 56, 56] 0

Conv2d-407 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-408 [-1, 32, 1, 1] 0

Linear-409 [-1, 2] 64

ReLU-410 [-1, 2] 0

Linear-411 [-1, 32] 64

Sigmoid-412 [-1, 32] 0

SE_Block-413 [-1, 32, 56, 56] 0

_DenseLayer-414 [-1, 32, 56, 56] 0

BatchNorm2d-415 [-1, 672, 56, 56] 1,344

ReLU-416 [-1, 672, 56, 56] 0

Conv2d-417 [-1, 128, 56, 56] 86,016

BatchNorm2d-418 [-1, 128, 56, 56] 256

ReLU-419 [-1, 128, 56, 56] 0

Conv2d-420 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-421 [-1, 32, 1, 1] 0

Linear-422 [-1, 2] 64

ReLU-423 [-1, 2] 0

Linear-424 [-1, 32] 64

Sigmoid-425 [-1, 32] 0

SE_Block-426 [-1, 32, 56, 56] 0

_DenseLayer-427 [-1, 32, 56, 56] 0

BatchNorm2d-428 [-1, 704, 56, 56] 1,408

ReLU-429 [-1, 704, 56, 56] 0

Conv2d-430 [-1, 128, 56, 56] 90,112

BatchNorm2d-431 [-1, 128, 56, 56] 256

ReLU-432 [-1, 128, 56, 56] 0

Conv2d-433 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-434 [-1, 32, 1, 1] 0

Linear-435 [-1, 2] 64

ReLU-436 [-1, 2] 0

Linear-437 [-1, 32] 64

Sigmoid-438 [-1, 32] 0

SE_Block-439 [-1, 32, 56, 56] 0

_DenseLayer-440 [-1, 32, 56, 56] 0

BatchNorm2d-441 [-1, 736, 56, 56] 1,472

ReLU-442 [-1, 736, 56, 56] 0

Conv2d-443 [-1, 128, 56, 56] 94,208

BatchNorm2d-444 [-1, 128, 56, 56] 256

ReLU-445 [-1, 128, 56, 56] 0

Conv2d-446 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-447 [-1, 32, 1, 1] 0

Linear-448 [-1, 2] 64

ReLU-449 [-1, 2] 0

Linear-450 [-1, 32] 64

Sigmoid-451 [-1, 32] 0

SE_Block-452 [-1, 32, 56, 56] 0

_DenseLayer-453 [-1, 32, 56, 56] 0

BatchNorm2d-454 [-1, 768, 56, 56] 1,536

ReLU-455 [-1, 768, 56, 56] 0

Conv2d-456 [-1, 128, 56, 56] 98,304

BatchNorm2d-457 [-1, 128, 56, 56] 256

ReLU-458 [-1, 128, 56, 56] 0

Conv2d-459 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-460 [-1, 32, 1, 1] 0

Linear-461 [-1, 2] 64

ReLU-462 [-1, 2] 0

Linear-463 [-1, 32] 64

Sigmoid-464 [-1, 32] 0

SE_Block-465 [-1, 32, 56, 56] 0

_DenseLayer-466 [-1, 32, 56, 56] 0

BatchNorm2d-467 [-1, 800, 56, 56] 1,600

ReLU-468 [-1, 800, 56, 56] 0

Conv2d-469 [-1, 128, 56, 56] 102,400

BatchNorm2d-470 [-1, 128, 56, 56] 256

ReLU-471 [-1, 128, 56, 56] 0

Conv2d-472 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-473 [-1, 32, 1, 1] 0

Linear-474 [-1, 2] 64

ReLU-475 [-1, 2] 0

Linear-476 [-1, 32] 64

Sigmoid-477 [-1, 32] 0

SE_Block-478 [-1, 32, 56, 56] 0

_DenseLayer-479 [-1, 32, 56, 56] 0

BatchNorm2d-480 [-1, 832, 56, 56] 1,664

ReLU-481 [-1, 832, 56, 56] 0

Conv2d-482 [-1, 128, 56, 56] 106,496

BatchNorm2d-483 [-1, 128, 56, 56] 256

ReLU-484 [-1, 128, 56, 56] 0

Conv2d-485 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-486 [-1, 32, 1, 1] 0

Linear-487 [-1, 2] 64

ReLU-488 [-1, 2] 0

Linear-489 [-1, 32] 64

Sigmoid-490 [-1, 32] 0

SE_Block-491 [-1, 32, 56, 56] 0

_DenseLayer-492 [-1, 32, 56, 56] 0

BatchNorm2d-493 [-1, 864, 56, 56] 1,728

ReLU-494 [-1, 864, 56, 56] 0

Conv2d-495 [-1, 128, 56, 56] 110,592

BatchNorm2d-496 [-1, 128, 56, 56] 256

ReLU-497 [-1, 128, 56, 56] 0

Conv2d-498 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-499 [-1, 32, 1, 1] 0

Linear-500 [-1, 2] 64

ReLU-501 [-1, 2] 0

Linear-502 [-1, 32] 64

Sigmoid-503 [-1, 32] 0

SE_Block-504 [-1, 32, 56, 56] 0

_DenseLayer-505 [-1, 32, 56, 56] 0

BatchNorm2d-506 [-1, 896, 56, 56] 1,792

ReLU-507 [-1, 896, 56, 56] 0

Conv2d-508 [-1, 128, 56, 56] 114,688

BatchNorm2d-509 [-1, 128, 56, 56] 256

ReLU-510 [-1, 128, 56, 56] 0

Conv2d-511 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-512 [-1, 32, 1, 1] 0

Linear-513 [-1, 2] 64

ReLU-514 [-1, 2] 0

Linear-515 [-1, 32] 64

Sigmoid-516 [-1, 32] 0

SE_Block-517 [-1, 32, 56, 56] 0

_DenseLayer-518 [-1, 32, 56, 56] 0

BatchNorm2d-519 [-1, 928, 56, 56] 1,856

ReLU-520 [-1, 928, 56, 56] 0

Conv2d-521 [-1, 128, 56, 56] 118,784

BatchNorm2d-522 [-1, 128, 56, 56] 256

ReLU-523 [-1, 128, 56, 56] 0

Conv2d-524 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-525 [-1, 32, 1, 1] 0

Linear-526 [-1, 2] 64

ReLU-527 [-1, 2] 0

Linear-528 [-1, 32] 64

Sigmoid-529 [-1, 32] 0

SE_Block-530 [-1, 32, 56, 56] 0

_DenseLayer-531 [-1, 32, 56, 56] 0

BatchNorm2d-532 [-1, 960, 56, 56] 1,920

ReLU-533 [-1, 960, 56, 56] 0

Conv2d-534 [-1, 128, 56, 56] 122,880

BatchNorm2d-535 [-1, 128, 56, 56] 256

ReLU-536 [-1, 128, 56, 56] 0

Conv2d-537 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-538 [-1, 32, 1, 1] 0

Linear-539 [-1, 2] 64

ReLU-540 [-1, 2] 0

Linear-541 [-1, 32] 64

Sigmoid-542 [-1, 32] 0

SE_Block-543 [-1, 32, 56, 56] 0

_DenseLayer-544 [-1, 32, 56, 56] 0

BatchNorm2d-545 [-1, 992, 56, 56] 1,984

ReLU-546 [-1, 992, 56, 56] 0

Conv2d-547 [-1, 128, 56, 56] 126,976

BatchNorm2d-548 [-1, 128, 56, 56] 256

ReLU-549 [-1, 128, 56, 56] 0

Conv2d-550 [-1, 32, 56, 56] 36,864

AdaptiveAvgPool2d-551 [-1, 32, 1, 1] 0

Linear-552 [-1, 2] 64

ReLU-553 [-1, 2] 0

Linear-554 [-1, 32] 64

Sigmoid-555 [-1, 32] 0

SE_Block-556 [-1, 32, 56, 56] 0

_DenseLayer-557 [-1, 32, 56, 56] 0

_DenseBlock-558 [-1, 1024, 56, 56] 0

BatchNorm2d-559 [-1, 1024, 56, 56] 2,048

ReLU-560 [-1, 1024, 56, 56] 0

Conv2d-561 [-1, 512, 56, 56] 524,288

AvgPool2d-562 [-1, 512, 28, 28] 0

BatchNorm2d-563 [-1, 512, 28, 28] 1,024

ReLU-564 [-1, 512, 28, 28] 0

Conv2d-565 [-1, 128, 28, 28] 65,536

BatchNorm2d-566 [-1, 128, 28, 28] 256

ReLU-567 [-1, 128, 28, 28] 0

Conv2d-568 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-569 [-1, 32, 1, 1] 0

Linear-570 [-1, 2] 64

ReLU-571 [-1, 2] 0

Linear-572 [-1, 32] 64

Sigmoid-573 [-1, 32] 0

SE_Block-574 [-1, 32, 28, 28] 0

_DenseLayer-575 [-1, 32, 28, 28] 0

BatchNorm2d-576 [-1, 544, 28, 28] 1,088

ReLU-577 [-1, 544, 28, 28] 0

Conv2d-578 [-1, 128, 28, 28] 69,632

BatchNorm2d-579 [-1, 128, 28, 28] 256

ReLU-580 [-1, 128, 28, 28] 0

Conv2d-581 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-582 [-1, 32, 1, 1] 0

Linear-583 [-1, 2] 64

ReLU-584 [-1, 2] 0

Linear-585 [-1, 32] 64

Sigmoid-586 [-1, 32] 0

SE_Block-587 [-1, 32, 28, 28] 0

_DenseLayer-588 [-1, 32, 28, 28] 0

BatchNorm2d-589 [-1, 576, 28, 28] 1,152

ReLU-590 [-1, 576, 28, 28] 0

Conv2d-591 [-1, 128, 28, 28] 73,728

BatchNorm2d-592 [-1, 128, 28, 28] 256

ReLU-593 [-1, 128, 28, 28] 0

Conv2d-594 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-595 [-1, 32, 1, 1] 0

Linear-596 [-1, 2] 64

ReLU-597 [-1, 2] 0

Linear-598 [-1, 32] 64

Sigmoid-599 [-1, 32] 0

SE_Block-600 [-1, 32, 28, 28] 0

_DenseLayer-601 [-1, 32, 28, 28] 0

BatchNorm2d-602 [-1, 608, 28, 28] 1,216

ReLU-603 [-1, 608, 28, 28] 0

Conv2d-604 [-1, 128, 28, 28] 77,824

BatchNorm2d-605 [-1, 128, 28, 28] 256

ReLU-606 [-1, 128, 28, 28] 0

Conv2d-607 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-608 [-1, 32, 1, 1] 0

Linear-609 [-1, 2] 64

ReLU-610 [-1, 2] 0

Linear-611 [-1, 32] 64

Sigmoid-612 [-1, 32] 0

SE_Block-613 [-1, 32, 28, 28] 0

_DenseLayer-614 [-1, 32, 28, 28] 0

BatchNorm2d-615 [-1, 640, 28, 28] 1,280

ReLU-616 [-1, 640, 28, 28] 0

Conv2d-617 [-1, 128, 28, 28] 81,920

BatchNorm2d-618 [-1, 128, 28, 28] 256

ReLU-619 [-1, 128, 28, 28] 0

Conv2d-620 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-621 [-1, 32, 1, 1] 0

Linear-622 [-1, 2] 64

ReLU-623 [-1, 2] 0

Linear-624 [-1, 32] 64

Sigmoid-625 [-1, 32] 0

SE_Block-626 [-1, 32, 28, 28] 0

_DenseLayer-627 [-1, 32, 28, 28] 0

BatchNorm2d-628 [-1, 672, 28, 28] 1,344

ReLU-629 [-1, 672, 28, 28] 0

Conv2d-630 [-1, 128, 28, 28] 86,016

BatchNorm2d-631 [-1, 128, 28, 28] 256

ReLU-632 [-1, 128, 28, 28] 0

Conv2d-633 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-634 [-1, 32, 1, 1] 0

Linear-635 [-1, 2] 64

ReLU-636 [-1, 2] 0

Linear-637 [-1, 32] 64

Sigmoid-638 [-1, 32] 0

SE_Block-639 [-1, 32, 28, 28] 0

_DenseLayer-640 [-1, 32, 28, 28] 0

BatchNorm2d-641 [-1, 704, 28, 28] 1,408

ReLU-642 [-1, 704, 28, 28] 0

Conv2d-643 [-1, 128, 28, 28] 90,112

BatchNorm2d-644 [-1, 128, 28, 28] 256

ReLU-645 [-1, 128, 28, 28] 0

Conv2d-646 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-647 [-1, 32, 1, 1] 0

Linear-648 [-1, 2] 64

ReLU-649 [-1, 2] 0

Linear-650 [-1, 32] 64

Sigmoid-651 [-1, 32] 0

SE_Block-652 [-1, 32, 28, 28] 0

_DenseLayer-653 [-1, 32, 28, 28] 0

BatchNorm2d-654 [-1, 736, 28, 28] 1,472

ReLU-655 [-1, 736, 28, 28] 0

Conv2d-656 [-1, 128, 28, 28] 94,208

BatchNorm2d-657 [-1, 128, 28, 28] 256

ReLU-658 [-1, 128, 28, 28] 0

Conv2d-659 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-660 [-1, 32, 1, 1] 0

Linear-661 [-1, 2] 64

ReLU-662 [-1, 2] 0

Linear-663 [-1, 32] 64

Sigmoid-664 [-1, 32] 0

SE_Block-665 [-1, 32, 28, 28] 0

_DenseLayer-666 [-1, 32, 28, 28] 0

BatchNorm2d-667 [-1, 768, 28, 28] 1,536

ReLU-668 [-1, 768, 28, 28] 0

Conv2d-669 [-1, 128, 28, 28] 98,304

BatchNorm2d-670 [-1, 128, 28, 28] 256

ReLU-671 [-1, 128, 28, 28] 0

Conv2d-672 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-673 [-1, 32, 1, 1] 0

Linear-674 [-1, 2] 64

ReLU-675 [-1, 2] 0

Linear-676 [-1, 32] 64

Sigmoid-677 [-1, 32] 0

SE_Block-678 [-1, 32, 28, 28] 0

_DenseLayer-679 [-1, 32, 28, 28] 0

BatchNorm2d-680 [-1, 800, 28, 28] 1,600

ReLU-681 [-1, 800, 28, 28] 0

Conv2d-682 [-1, 128, 28, 28] 102,400

BatchNorm2d-683 [-1, 128, 28, 28] 256

ReLU-684 [-1, 128, 28, 28] 0

Conv2d-685 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-686 [-1, 32, 1, 1] 0

Linear-687 [-1, 2] 64

ReLU-688 [-1, 2] 0

Linear-689 [-1, 32] 64

Sigmoid-690 [-1, 32] 0

SE_Block-691 [-1, 32, 28, 28] 0

_DenseLayer-692 [-1, 32, 28, 28] 0

BatchNorm2d-693 [-1, 832, 28, 28] 1,664

ReLU-694 [-1, 832, 28, 28] 0

Conv2d-695 [-1, 128, 28, 28] 106,496

BatchNorm2d-696 [-1, 128, 28, 28] 256

ReLU-697 [-1, 128, 28, 28] 0

Conv2d-698 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-699 [-1, 32, 1, 1] 0

Linear-700 [-1, 2] 64

ReLU-701 [-1, 2] 0

Linear-702 [-1, 32] 64

Sigmoid-703 [-1, 32] 0

SE_Block-704 [-1, 32, 28, 28] 0

_DenseLayer-705 [-1, 32, 28, 28] 0

BatchNorm2d-706 [-1, 864, 28, 28] 1,728

ReLU-707 [-1, 864, 28, 28] 0

Conv2d-708 [-1, 128, 28, 28] 110,592

BatchNorm2d-709 [-1, 128, 28, 28] 256

ReLU-710 [-1, 128, 28, 28] 0

Conv2d-711 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-712 [-1, 32, 1, 1] 0

Linear-713 [-1, 2] 64

ReLU-714 [-1, 2] 0

Linear-715 [-1, 32] 64

Sigmoid-716 [-1, 32] 0

SE_Block-717 [-1, 32, 28, 28] 0

_DenseLayer-718 [-1, 32, 28, 28] 0

BatchNorm2d-719 [-1, 896, 28, 28] 1,792

ReLU-720 [-1, 896, 28, 28] 0

Conv2d-721 [-1, 128, 28, 28] 114,688

BatchNorm2d-722 [-1, 128, 28, 28] 256

ReLU-723 [-1, 128, 28, 28] 0

Conv2d-724 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-725 [-1, 32, 1, 1] 0

Linear-726 [-1, 2] 64

ReLU-727 [-1, 2] 0

Linear-728 [-1, 32] 64

Sigmoid-729 [-1, 32] 0

SE_Block-730 [-1, 32, 28, 28] 0

_DenseLayer-731 [-1, 32, 28, 28] 0

BatchNorm2d-732 [-1, 928, 28, 28] 1,856

ReLU-733 [-1, 928, 28, 28] 0

Conv2d-734 [-1, 128, 28, 28] 118,784

BatchNorm2d-735 [-1, 128, 28, 28] 256

ReLU-736 [-1, 128, 28, 28] 0

Conv2d-737 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-738 [-1, 32, 1, 1] 0

Linear-739 [-1, 2] 64

ReLU-740 [-1, 2] 0

Linear-741 [-1, 32] 64

Sigmoid-742 [-1, 32] 0

SE_Block-743 [-1, 32, 28, 28] 0

_DenseLayer-744 [-1, 32, 28, 28] 0

BatchNorm2d-745 [-1, 960, 28, 28] 1,920

ReLU-746 [-1, 960, 28, 28] 0

Conv2d-747 [-1, 128, 28, 28] 122,880

BatchNorm2d-748 [-1, 128, 28, 28] 256

ReLU-749 [-1, 128, 28, 28] 0

Conv2d-750 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-751 [-1, 32, 1, 1] 0

Linear-752 [-1, 2] 64

ReLU-753 [-1, 2] 0

Linear-754 [-1, 32] 64

Sigmoid-755 [-1, 32] 0

SE_Block-756 [-1, 32, 28, 28] 0

_DenseLayer-757 [-1, 32, 28, 28] 0

BatchNorm2d-758 [-1, 992, 28, 28] 1,984

ReLU-759 [-1, 992, 28, 28] 0

Conv2d-760 [-1, 128, 28, 28] 126,976

BatchNorm2d-761 [-1, 128, 28, 28] 256

ReLU-762 [-1, 128, 28, 28] 0

Conv2d-763 [-1, 32, 28, 28] 36,864

AdaptiveAvgPool2d-764 [-1, 32, 1, 1] 0

Linear-765 [-1, 2] 64

ReLU-766 [-1, 2] 0

Linear-767 [-1, 32] 64

Sigmoid-768 [-1, 32] 0

SE_Block-769 [-1, 32, 28, 28] 0

_DenseLayer-770 [-1, 32, 28, 28] 0

_DenseBlock-771 [-1, 1024, 28, 28] 0

BatchNorm2d-772 [-1, 1024, 28, 28] 2,048

Linear-773 [-1, 4] 4,100

================================================================

Total params: 6,957,572

Trainable params: 6,957,572

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 4854.11

Params size (MB): 26.54

Estimated Total Size (MB): 4881.22

----------------------------------------------------------------

III. Training the Model

1. Write the training function

# Training loop
def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)  # size of the training set
    num_batches = len(dataloader)   # number of batches: ceil(size / batch_size)

    train_loss, train_acc = 0, 0    # running training loss and number of correct predictions

    for X, y in dataloader:         # images and their labels
        X, y = X.to(device), y.to(device)

        # Compute the prediction error
        pred = model(X)             # network output (logits)
        loss = loss_fn(pred, y)     # loss between the network output and the ground-truth labels y

        # Backpropagation
        optimizer.zero_grad()       # reset the accumulated gradients
        loss.backward()             # backpropagate
        optimizer.step()            # update the weights

        # Accumulate accuracy and loss
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
        train_loss += loss.item()

    train_acc /= size
    train_loss /= num_batches

    return train_acc, train_loss

2. Write the test function

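The test function is largely the same as the training function, but because it does not update the network weights through gradient descent, it does not need an optimizer argument.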

def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)  # size of the test set
    num_batches = len(dataloader)   # number of batches
    test_loss, test_acc = 0, 0

    # No training here: disable gradient tracking to save memory and computation
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            # Compute the loss
            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

    test_acc /= size
    test_loss /= num_batches

    return test_acc, test_loss

3. Training

import copy

optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)
loss_fn = nn.CrossEntropyLoss()  # create the loss function
epochs = 10

train_loss = []
train_acc = []
test_loss = []
test_acc = []

best_acc = 0  # best test accuracy so far, used to select the best model

for epoch in range(epochs):
    # Update the learning rate (only when using a custom schedule)
    # adjust_learning_rate(optimizer, epoch, learn_rate)

    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)
    # scheduler.step()  # update the learning rate (only when using a built-in PyTorch scheduler)

    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

    # Keep a copy of the best model in best_model
    if epoch_test_acc > best_acc:
        best_acc = epoch_test_acc
        best_model = copy.deepcopy(model)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    # Get the current learning rate
    lr = optimizer.state_dict()['param_groups'][0]['lr']

    template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')
    print(template.format(epoch + 1, epoch_train_acc * 100, epoch_train_loss,
                          epoch_test_acc * 100, epoch_test_loss, lr))

# Save the best model's parameters to a file
PATH = './best_model.pth'  # file name for the saved parameters
torch.save(best_model.state_dict(), PATH)

print('Done')

This produces the following output:

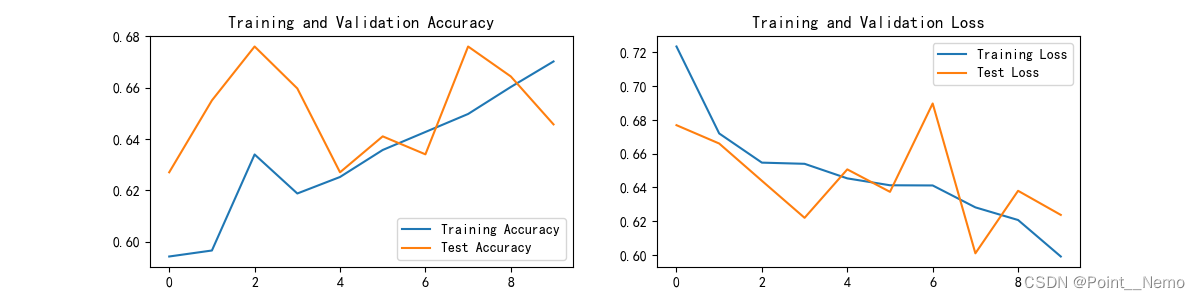

Epoch: 1, Train_acc:59.4%, Train_loss:0.724, Test_acc:62.7%, Test_loss:0.677, Lr:1.00E-04

Epoch: 2, Train_acc:59.7%, Train_loss:0.672, Test_acc:65.5%, Test_loss:0.666, Lr:1.00E-04

Epoch: 3, Train_acc:63.4%, Train_loss:0.655, Test_acc:67.6%, Test_loss:0.644, Lr:1.00E-04

Epoch: 4, Train_acc:61.9%, Train_loss:0.654, Test_acc:66.0%, Test_loss:0.622, Lr:1.00E-04

Epoch: 5, Train_acc:62.5%, Train_loss:0.645, Test_acc:62.7%, Test_loss:0.651, Lr:1.00E-04

Epoch: 6, Train_acc:63.6%, Train_loss:0.641, Test_acc:64.1%, Test_loss:0.637, Lr:1.00E-04

Epoch: 7, Train_acc:64.3%, Train_loss:0.641, Test_acc:63.4%, Test_loss:0.690, Lr:1.00E-04

Epoch: 8, Train_acc:65.0%, Train_loss:0.628, Test_acc:67.6%, Test_loss:0.601, Lr:1.00E-04

Epoch: 9, Train_acc:66.0%, Train_loss:0.621, Test_acc:66.4%, Test_loss:0.638, Lr:1.00E-04

Epoch:10, Train_acc:67.0%, Train_loss:0.599, Test_acc:64.6%, Test_loss:0.624, Lr:1.00E-04

Done
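The training loop above leaves a call to `adjust_learning_rate(optimizer, epoch, learn_rate)` commented out, but neither that function nor `learn_rate` is defined in this section. Below is a minimal sketch of such a function, assuming a simple step decay; the decay factor `0.92` and the 2-epoch step are hypothetical defaults, not values taken from the original post.

```python
def adjust_learning_rate(optimizer, epoch, start_lr, decay=0.92, step=2):
    """Hypothetical step-decay helper: multiply start_lr by `decay` every `step` epochs.

    The defaults decay=0.92 and step=2 are assumptions for illustration.
    """
    lr = start_lr * (decay ** (epoch // step))
    # torch.optim optimizers store hyperparameters in param_groups (a list of dicts)
    for param_group in optimizer.param_groups:
        param_group['lr'] = lr
    return lr
```

To try it, one would set, for example, `learn_rate = 1e-4` and uncomment the call at the top of the loop. The other commented-out hook covers PyTorch's built-in route: `scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.92)` with `scheduler.step()` once per epoch should give the same schedule without a hand-written helper.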

IV. Visualizing the Results

1. Loss & Accuracy

import matplotlib.pyplot as plt
# Hide warnings
import warnings
warnings.filterwarnings("ignore")             # ignore warning messages
plt.rcParams['font.sans-serif'] = ['SimHei']  # font that can render Chinese labels
plt.rcParams['axes.unicode_minus'] = False    # render minus signs correctly
plt.rcParams['figure.dpi'] = 100              # figure resolution

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)
plt.plot(epochs_range, train_acc, label='Training Accuracy')
plt.plot(epochs_range, test_acc, label='Test Accuracy')
plt.legend(loc='lower right')
plt.title('Training and Test Accuracy')

plt.subplot(1, 2, 2)
plt.plot(epochs_range, train_loss, label='Training Loss')
plt.plot(epochs_range, test_loss, label='Test Loss')
plt.legend(loc='upper right')
plt.title('Training and Test Loss')

plt.show()

The resulting plots:

2. Predict a specified image

First, define a function for prediction:

from PIL import Image

classes = list(total_data.class_to_idx)

def predict_one_image(image_path, model, transform, classes):
    test_img = Image.open(image_path).convert('RGB')
    plt.imshow(test_img)  # show the image being predicted

    test_img = transform(test_img)
    img = test_img.to(device).unsqueeze(0)  # add a batch dimension: (1, C, H, W)

    model.eval()
    with torch.no_grad():  # inference only, no gradients needed
        output = model(img)

    _, pred = torch.max(output, 1)
    pred_class = classes[pred.item()]
    print(f'Prediction: {pred_class}')

Next, call the function on a specified image:

# Predict one image from the dataset directory
predict_one_image(image_path='./data/Monkeypox/M01_01_00.jpg',
                  model=model,
                  transform=train_transforms,
                  classes=classes)

This gives the following result:

Prediction: Monkeypox

V. Network Overview

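SE-Net won ImageNet 2017 (the final ImageNet competition) and was released by the WMW team. It adds little complexity, few parameters, and little computation. Its idea is also simple, so it extends easily to existing architectures such as Inception and ResNet.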

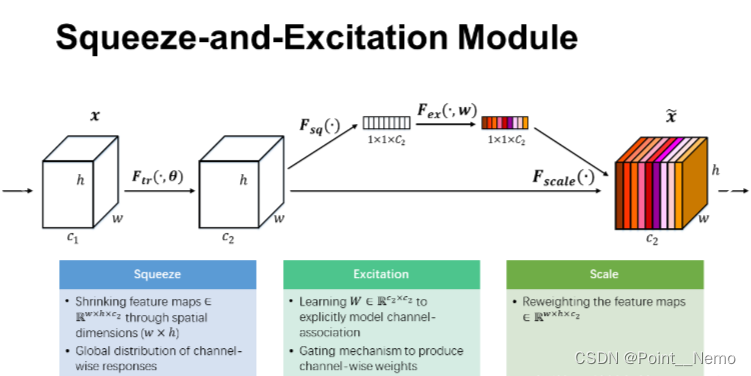

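Much prior work improves network performance along the spatial dimensions (Inception, for example), whereas SENet focuses on the relationships between feature channels. Its strategy is to learn the importance of each feature channel automatically, then use that importance to amplify useful features and suppress features that contribute little to the current task. This strategy is called "feature recalibration". The SE module is shown in the figure below: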

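First comes the Squeeze operation, which compresses the features along the spatial dimensions, usually with global average pooling, to obtain a global descriptor for each channel. Next, the Excitation operation captures the relationships between feature channels. Finally, the Scale operation treats the output of Excitation as the importance of each feature channel after feature selection, and multiplies these weights channel by channel onto the original features. This recalibrates the features along the channel dimension and makes the model better at discriminating between channel features.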
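The Squeeze, Excitation, and Scale steps map onto a few lines of PyTorch. This is a minimal sketch, not the exact module used earlier in this post: the class name `SEBlock`, the bias-free `Linear` layers, and the default `reduction=16` are assumptions, chosen because they are consistent with the model summary earlier in the post, where each `SE_Block` on 32 channels squeezes to a 2-unit bottleneck with two 64-parameter `Linear` layers.

```python
import torch
import torch.nn as nn


class SEBlock(nn.Module):
    """Squeeze-and-Excitation sketch (class name and reduction=16 are assumptions)."""

    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        hidden = max(1, channels // reduction)  # bottleneck width C / r
        # Squeeze: global average pooling turns each H x W map into one number
        self.squeeze = nn.AdaptiveAvgPool2d(1)
        # Excitation: C -> C/r -> C with ReLU, then a sigmoid gate in (0, 1)
        self.excitation = nn.Sequential(
            nn.Linear(channels, hidden, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(hidden, channels, bias=False),
            nn.Sigmoid(),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, _, _ = x.shape
        w = self.squeeze(x).view(b, c)           # (B, C) channel descriptors
        w = self.excitation(w).view(b, c, 1, 1)  # (B, C, 1, 1) channel weights
        # Scale: multiply each channel of x by its weight (broadcast over H and W)
        return x * w


se = SEBlock(32, reduction=16)
y = se(torch.randn(4, 32, 28, 28))
print(y.shape)  # torch.Size([4, 32, 28, 28])
```

The block preserves the input shape, so it can be dropped in after any convolution. In the summary earlier in this post, `SE_Block` appears right after the 3×3 convolution of each `_DenseLayer`, that is, it appears to reweight the 32 new feature maps before they are concatenated with the layer's input.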

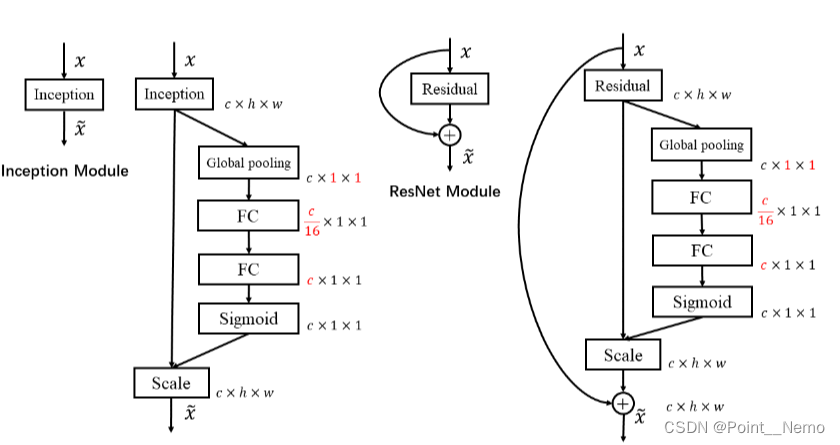

Applying the SE module:

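The figure above shows examples of embedding the SE module into an Inception block and into a ResNet block. The dimensions next to each box give that layer's output, and r is the reduction ratio used in the Excitation step.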
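To make the role of r concrete: with bias-free fully connected layers (an assumption consistent with the summary earlier in this post), the two Excitation layers C → C/r → C hold 2C²/r weights in total. The hypothetical helper `se_params` below reproduces this count. For C = 32 and r = 16 it gives 128, matching the two 64-parameter `Linear` rows of each `SE_Block` in that summary.

```python
def se_params(channels: int, reduction: int, bias: bool = False) -> int:
    """Hypothetical helper: parameters in the Excitation MLP (C -> C // r -> C)."""
    hidden = channels // reduction                    # bottleneck width C / r
    weights = channels * hidden + hidden * channels   # two weight matrices
    biases = (hidden + channels) if bias else 0       # one bias vector per layer
    return weights + biases


# C = 32, r = 16, as in the DenseNet summary: 64 + 64 weights
print(se_params(32, 16))   # 128
# A smaller r keeps a wider bottleneck but costs more parameters
print(se_params(256, 16))  # 8192
print(se_params(256, 4))   # 32768
```

The count grows with C² and shrinks with r, which is why r trades model capacity against the extra parameters each SE block adds.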

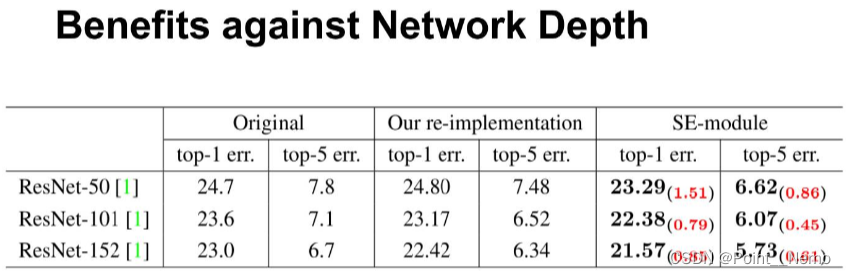

Comparison of results with and without SE:

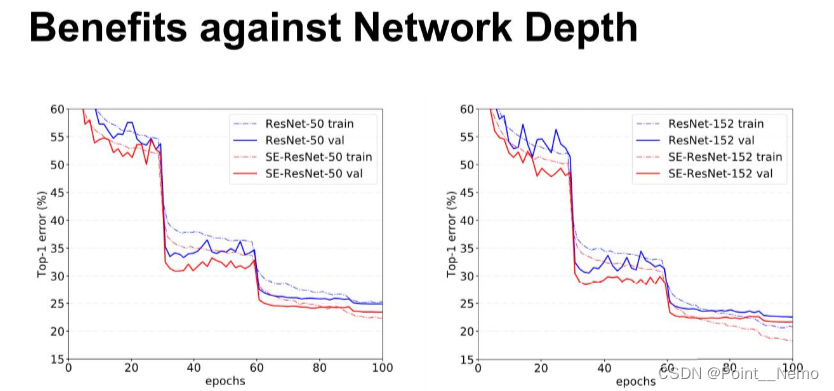

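As the comparison shows, SE-ResNets outperform their counterparts without SE at every depth, which indicates that the SE module improves performance regardless of network depth. Notably, SE-ResNet-50 reaches the same accuracy as ResNet-101, and SE-ResNet-101 clearly outperforms the deeper ResNet-152.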

The figure above shows the ImageNet training curves of ResNet-50 and ResNet-152 together with their SE counterparts; the networks with SE modules clearly converge to a lower error rate.
