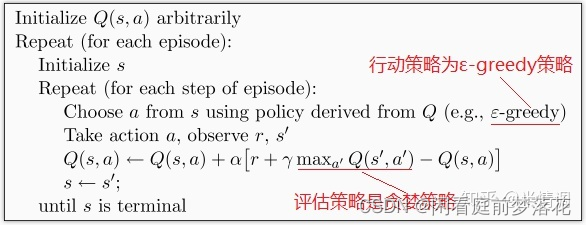

一、Q-learning

Q-Learning的目的是学习特定state下,特定action的价值。是建立一个Q-table,以state为行、action为列,通过每个动作带来的奖赏更新Q-table。

是异策略,行动策略和评估策略不是一个策略。

def update():

for episode in range(100):

# initial observation

observation = env.reset()

while True:

# fresh env

env.render()

# RL choose action based on observation

action = RL.choose_action(str(observation))

# RL take action and get next observation and reward

observation_, reward, done = env.step(action)

# RL learn from this transition

RL.learn(str(observation), action, reward, str(observation_))

# swap observation

observation = observation_

# break while loop when end of this episode

if done:

break

# end of game

print('game over')

env.destroy()

def update():

for episode in range(100):

# initial observation

observation = env.reset()

# RL choose action based on observation

action = RL.choose_action(str(observation))

while True:

# fresh env

env.render()

# RL take action and get next observation and reward

observation_, reward, done = env.step(action)

# RL choose action based on next observation

action_ = RL.choose_action(str(observation_))

# RL learn from this transition (s, a, r, s, a) ==> Sarsa

RL.learn(str(observation), action, reward, str(observation_), action_)

# swap observation and action

observation = observation_

action = action_

# break while loop when end of this episode

if done:

break

# end of game

print('game over')

env.destroy()

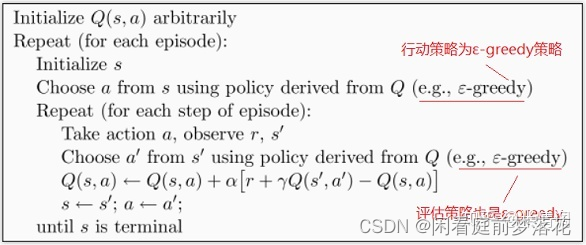

二、SARSA

其行动策略和评估策略一致,先做出动作再进行更新,并且做出的动作和更新时采用的动作一致。

Q-learning,先假设下一步选取最大奖赏的动作,更新值函数,再通过随机策略选取动作

参考:https://www.zhihu.com/column/c_1291396094732595200

846

846

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?