Code that triggered the bug:

```python
s_x = adj[node_x].coalesce().indices()[0]
s_y = adj[node_y].coalesce().indices()[0]
```

The full error message:

```text
NotImplementedError: Could not run 'aten::is_coalesced' with arguments from the 'CPU' backend. This could be because the operator doesn't exist for this backend, or was omitted during the selective/custom build process (if using custom build). If you are a Facebook employee using PyTorch on mobile, please visit https://fburl.com/ptmfixes for possible resolutions. 'aten::is_coalesced' is only available for these backends: [SparseCPU, SparseCUDA, BackendSelect, Python, Named, Conjugate, Negative, ADInplaceOrView, AutogradOther, AutogradCPU, AutogradCUDA, AutogradXLA, AutogradLazy, AutogradXPU, AutogradMLC, AutogradHPU, AutogradNestedTensor, AutogradPrivateUse1, AutogradPrivateUse2, AutogradPrivateUse3, Tracer, UNKNOWN_TENSOR_TYPE_ID, Autocast, Batched, VmapMode].

SparseCPU: registered at aten/src/ATen/RegisterSparseCPU.cpp:958 [kernel]
SparseCUDA: registered at aten/src/ATen/RegisterSparseCUDA.cpp:1060 [kernel]
BackendSelect: fallthrough registered at ../aten/src/ATen/core/BackendSelectFallbackKernel.cpp:3 [backend fallback]
Python: registered at ../aten/src/ATen/core/PythonFallbackKernel.cpp:47 [backend fallback]
Named: fallthrough registered at ../aten/src/ATen/core/NamedRegistrations.cpp:11 [kernel]
Conjugate: registered at ../aten/src/ATen/ConjugateFallback.cpp:18 [backend fallback]
Negative: registered at ../aten/src/ATen/native/NegateFallback.cpp:18 [backend fallback]
ADInplaceOrView: fallthrough registered at ../aten/src/ATen/core/VariableFallbackKernel.cpp:64 [backend fallback]
AutogradOther: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradCPU: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradCUDA: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradXLA: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradLazy: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradXPU: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradMLC: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradHPU: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradNestedTensor: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradPrivateUse1: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradPrivateUse2: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
AutogradPrivateUse3: registered at ../torch/csrc/autograd/generated/VariableType_2.cpp:10491 [autograd kernel]
Tracer: registered at ../torch/csrc/autograd/generated/TraceType_2.cpp:11425 [kernel]
UNKNOWN_TENSOR_TYPE_ID: fallthrough registered at ../aten/src/ATen/autocast_mode.cpp:466 [backend fallback]
Autocast: fallthrough registered at ../aten/src/ATen/autocast_mode.cpp:305 [backend fallback]
Batched: registered at ../aten/src/ATen/BatchingRegistrations.cpp:1016 [backend fallback]
VmapMode: fallthrough registered at ../aten/src/ATen/VmapModeRegistrations.cpp:33 [backend fallback]
```

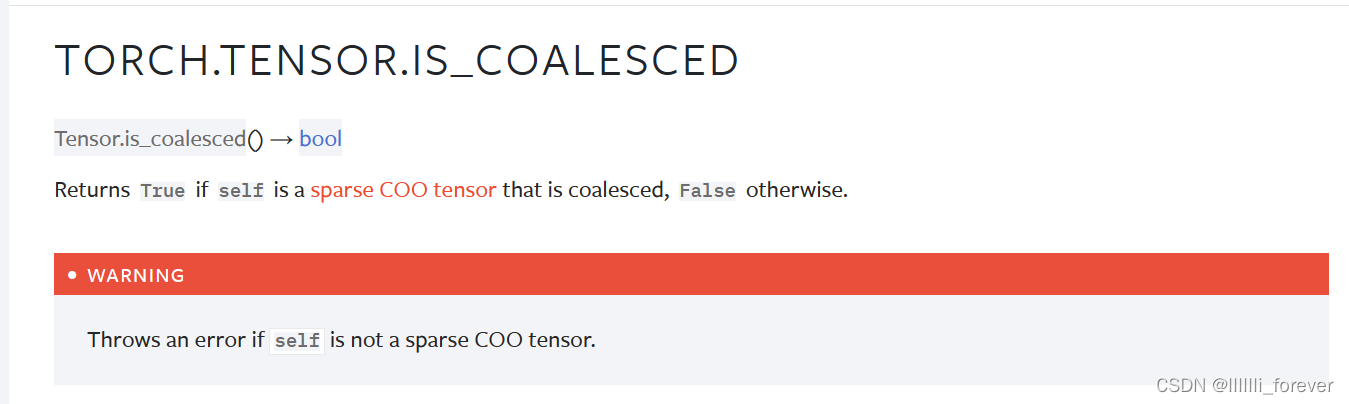
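A minimal sketch to reproduce the cause, assuming a hypothetical 4-node graph stored as a dense adjacency matrix (`adj` and `node_x` here are illustrative, not the original data). Calling `coalesce()` on a row of a dense tensor fails. The exact exception depends on the PyTorch version: 1.10 gives the `NotImplementedError` above, while newer releases, as far as I know, raise a `RuntimeError` about the tensor layout instead, so the sketch catches both.

```python
import torch

# Hypothetical 4-node graph stored as a *dense* adjacency matrix
adj = torch.tensor([[0., 1., 1., 0.],
                    [1., 0., 0., 1.],
                    [1., 0., 0., 0.],
                    [0., 1., 0., 0.]])
node_x = 0  # hypothetical node index

print(adj.layout)  # torch.strided, i.e. an ordinary dense tensor

try:
    adj[node_x].coalesce()  # coalesce() only supports sparse COO tensors
except (NotImplementedError, RuntimeError) as err:
    # Older releases (e.g. 1.10): NotImplementedError about aten::is_coalesced
    # Newer releases: RuntimeError complaining about the strided layout
    print(type(err).__name__)
```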

At first I also assumed it was a torch version problem and installed torch 1.10.1+cu, but the bug persisted. Then I read the official documentation (link: https://pytorch.org/docs/stable/generated/torch.Tensor.is_coalesced.html).

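The problem: `coalesce()` (and the `is_coalesced()` check it calls) only works on sparse tensors, but the `adj` I passed in was a dense matrix, so it has to be converted first: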

```python
adj = adj.to_sparse()
```

That solved the problem!
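Putting the fix together: a minimal sketch, again on a hypothetical dense 4-node adjacency matrix with hypothetical node indices, showing that after `to_sparse()` the two original lines run and return the neighbor indices of each node.

```python
import torch

# Hypothetical 4-node graph, initially dense (as in the buggy code)
adj = torch.tensor([[0., 1., 1., 0.],
                    [1., 0., 0., 1.],
                    [1., 0., 0., 0.],
                    [0., 1., 0., 0.]])

# The fix: convert the dense adjacency matrix to a sparse COO tensor once
adj = adj.to_sparse()
assert adj.is_sparse

node_x, node_y = 0, 1  # hypothetical node indices

# adj[i] selects row i as a 1-D sparse tensor; coalesce() merges duplicate
# entries and sorts the indices; indices() has shape (1, nnz), so [0] yields
# the column indices of the nonzeros, i.e. the neighbors of node i.
s_x = adj[node_x].coalesce().indices()[0]
s_y = adj[node_y].coalesce().indices()[0]

print(s_x)  # tensor([1, 2])
print(s_y)  # tensor([0, 3])
```

If you would rather keep `adj` dense, `torch.nonzero(adj[node_x], as_tuple=True)[0]` returns the same indices without any conversion; converting once with `to_sparse()` makes more sense when the rest of the code already expects sparse tensors.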
