一、Python所有方向的学习路线

Python所有方向路线就是把Python常用的技术点做整理,形成各个领域的知识点汇总,它的用处就在于,你可以按照上面的知识点去找对应的学习资源,保证自己学得较为全面。

二、学习软件

工欲善其事必先利其器。学习Python常用的开发软件都在这里了,给大家节省了很多时间。

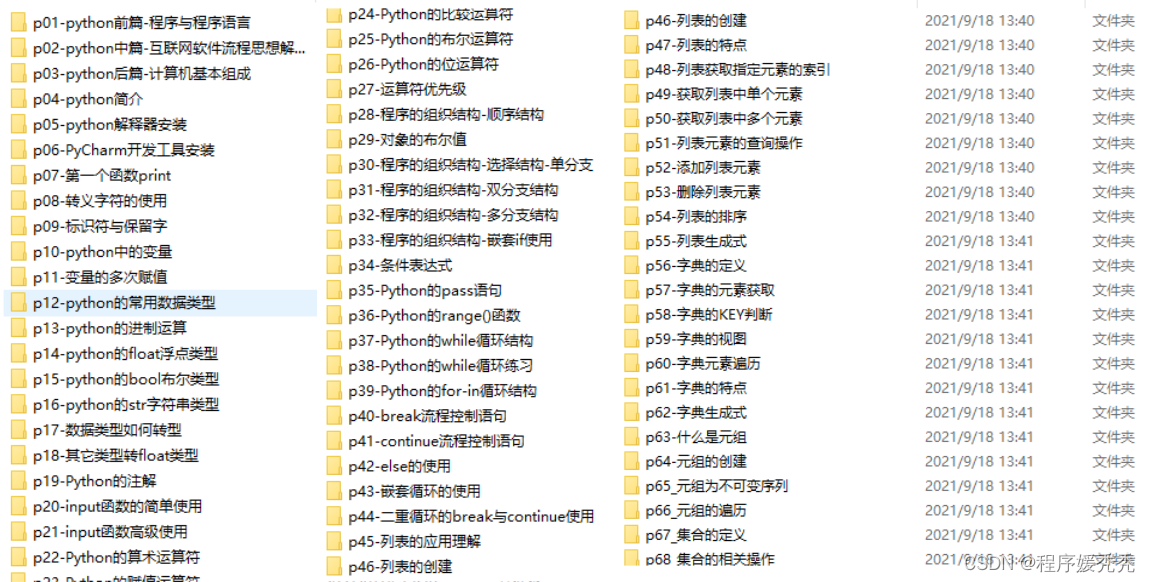

三、入门学习视频

我们在看视频学习的时候,不能光动眼动脑不动手,比较科学的学习方法是在理解之后运用它们,这时候练手项目就很适合了。

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

train_loader = torch.utils.data.DataLoader(dataset_train, batch_size=BATCH_SIZE, shuffle=True)

test_loader = torch.utils.data.DataLoader(dataset_test, batch_size=BATCH_SIZE, shuffle=False)

设置模型

====

使用CrossEntropyLoss作为loss,模型采用alexnet,选用预训练模型。更改全连接层,将最后一层类别设置为12,然后将模型放到DEVICE。优化器选用Adam。

实例化模型并且移动到GPU

criterion = nn.CrossEntropyLoss()

model_ft = vgg16(pretrained=True)

model_ft.classifier = classifier = nn.Sequential(

nn.Linear(512 * 7 * 7, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, 12),

)

model_ft.to(DEVICE)

选择简单暴力的Adam优化器,学习率调低

optimizer = optim.Adam(model_ft.parameters(), lr=modellr)

def adjust_learning_rate(optimizer, epoch):

“”“Sets the learning rate to the initial LR decayed by 10 every 30 epochs”“”

modellrnew = modellr * (0.1 ** (epoch // 50))

print(“lr:”, modellrnew)

for param_group in optimizer.param_groups:

param_group[‘lr’] = modellrnew

设置训练和验证

=======

定义训练过程

def train(model, device, train_loader, optimizer, epoch):

model.train()

sum_loss = 0

total_num = len(train_loader.dataset)

print(total_num, len(train_loader))

for batch_idx, (data, target) in enumerate(train_loader):

data, target = Variable(data).to(device), Variable(target).to(device)

output = model(data)

loss = criterion(output, target)

optimizer.zero_grad()

loss.backward()

optimizer.step()

print_loss = loss.data.item()

sum_loss += print_loss

if (batch_idx + 1) % 10 == 0:

print(‘Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}’.format(

epoch, (batch_idx + 1) * len(data), len(train_loader.dataset),

-

- (batch_idx + 1) / len(train_loader), loss.item()))

ave_loss = sum_loss / len(train_loader)

print(‘epoch:{},loss:{}’.format(epoch, ave_loss))

验证过程

def val(model, device, test_loader):

model.eval()

test_loss = 0

correct = 0

total_num = len(test_loader.dataset)

print(total_num, len(test_loader))

with torch.no_grad():

for data, target in test_loader:

data, target = Variable(data).to(device), Variable(target).to(device)

output = model(data)

loss = criterion(output, target)

_, pred = torch.max(output.data, 1)

correct += torch.sum(pred == target)

print_loss = loss.data.item()

test_loss += print_loss

correct = correct.data.item()

acc = correct / total_num

avgloss = test_loss / len(test_loader)

print(‘\nVal set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n’.format(

avgloss, correct, len(test_loader.dataset), 100 * acc))

训练

for epoch in range(1, EPOCHS + 1):

adjust_learning_rate(optimizer, epoch)

train(model_ft, DEVICE, train_loader, optimizer, epoch)

val(model_ft, DEVICE, test_loader)

torch.save(model_ft, ‘model.pth’)

测试

我介绍两种常用的测试方式,第一种是通用的,通过自己手动加载数据集然后做预测,具体操作如下:

测试集存放的目录如下图:

第一步 定义类别,这个类别的顺序和训练时的类别顺序对应,一定不要改变顺序!!!!

第二步 定义transforms,transforms和验证集的transforms一样即可,别做数据增强。

第三步 加载model,并将模型放在DEVICE里,

第四步 读取图片并预测图片的类别,在这里注意,读取图片用PIL库的Image。不要用cv2,transforms不支持。

import torch.utils.data.distributed

import torchvision.transforms as transforms

from PIL import Image

from torch.autograd import Variable

import os

classes = (‘Black-grass’, ‘Charlock’, ‘Cleavers’, ‘Common Chickweed’,

‘Common wheat’,‘Fat Hen’, ‘Loose Silky-bent’,

‘Maize’,‘Scentless Mayweed’,‘Shepherds Purse’,‘Small-flowered Cranesbill’,‘Sugar beet’)

transform_test = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

])

DEVICE = torch.device(“cuda:0” if torch.cuda.is_available() else “cpu”)

model = torch.load(“model.pth”)

model.eval()

model.to(DEVICE)

path=‘data/test/’

testList=os.listdir(path)

for file in testList:

img=Image.open(path+file)

img=transform_test(img)

img.unsqueeze_(0)

img = Variable(img).to(DEVICE)

out=model(img)

Predict

_, pred = torch.max(out.data, 1)

print(‘Image Name:{},predict:{}’.format(file,classes[pred.data.item()]))

第二种 使用自定义的Dataset读取图片

import torch.utils.data.distributed

import torchvision.transforms as transforms

from dataset.dataset import SeedlingData

from torch.autograd import Variable

classes = (‘Black-grass’, ‘Charlock’, ‘Cleavers’, ‘Common Chickweed’,

‘Common wheat’,‘Fat Hen’, ‘Loose Silky-bent’,

‘Maize’,‘Scentless Mayweed’,‘Shepherds Purse’,‘Small-flowered Cranesbill’,‘Sugar beet’)

transform_test = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

])

DEVICE = torch.device(“cuda:0” if torch.cuda.is_available() else “cpu”)

model = torch.load(“model.pth”)

model.eval()

model.to(DEVICE)

dataset_test =SeedlingData(‘data/test/’, transform_test,test=True)

print(len(dataset_test))

对应文件夹的label

for index in range(len(dataset_test)):

item = dataset_test[index]

img, label = item

img.unsqueeze_(0)

data = Variable(img).to(DEVICE)

output = model(data)

_, pred = torch.max(output.data, 1)

print(‘Image Name:{},predict:{}’.format(dataset_test.imgs[index], classes[pred.data.item()]))

index += 1

完整代码

====

train.py

import torch.optim as optim

import torch

import torch.nn as nn

import torch.nn.parallel

import torch.utils.data

import torch.utils.data.distributed

import torchvision.transforms as transforms

from dataset.dataset import SeedlingData

from torch.autograd import Variable

from torchvision.models import vgg16

设置全局参数

modellr = 1e-4

BATCH_SIZE = 32

EPOCHS = 10

DEVICE = torch.device(‘cuda’ if torch.cuda.is_available() else ‘cpu’)

数据预处理

transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

])

transform_test = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

])

dataset_train = SeedlingData(‘data/train’, transforms=transform, train=True)

dataset_test = SeedlingData(“data/train”, transforms=transform_test, train=False)

读取数据

print(dataset_train.imgs)

导入数据

train_loader = torch.utils.data.DataLoader(dataset_train, batch_size=BATCH_SIZE, shuffle=True)

test_loader = torch.utils.data.DataLoader(dataset_test, batch_size=BATCH_SIZE, shuffle=False)

实例化模型并且移动到GPU

criterion = nn.CrossEntropyLoss()

model_ft = vgg16(pretrained=True)

model_ft.classifier = classifier = nn.Sequential(

nn.Linear(512 * 7 * 7, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, 12),

)

model_ft.to(DEVICE)

选择简单暴力的Adam优化器,学习率调低

optimizer = optim.Adam(model_ft.parameters(), lr=modellr)

def adjust_learning_rate(optimizer, epoch):

“”“Sets the learning rate to the initial LR decayed by 10 every 30 epochs”“”

modellrnew = modellr * (0.1 ** (epoch // 50))

print(“lr:”, modellrnew)

for param_group in optimizer.param_groups:

param_group[‘lr’] = modellrnew

定义训练过程

def train(model, device, train_loader, optimizer, epoch):

model.train()

sum_loss = 0

total_num = len(train_loader.dataset)

print(total_num, len(train_loader))

for batch_idx, (data, target) in enumerate(train_loader):

data, target = Variable(data).to(device), Variable(target).to(device)

output = model(data)

loss = criterion(output, target)

optimizer.zero_grad()

loss.backward()

optimizer.step()

print_loss = loss.data.item()

sum_loss += print_loss

if (batch_idx + 1) % 10 == 0:

print(‘Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}’.format(

epoch, (batch_idx + 1) * len(data), len(train_loader.dataset),

-

- (batch_idx + 1) / len(train_loader), loss.item()))

ave_loss = sum_loss / len(train_loader)

print(‘epoch:{},loss:{}’.format(epoch, ave_loss))

验证过程

def val(model, device, test_loader):

model.eval()

test_loss = 0

correct = 0

total_num = len(test_loader.dataset)

print(total_num, len(test_loader))

with torch.no_grad():

for data, target in test_loader:

data, target = Variable(data).to(device), Variable(target).to(device)

output = model(data)

loss = criterion(output, target)

_, pred = torch.max(output.data, 1)

correct += torch.sum(pred == target)

print_loss = loss.data.item()

test_loss += print_loss

correct = correct.data.item()

acc = correct / total_num

avgloss = test_loss / len(test_loader)

print(‘\nVal set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n’.format(

最后

🍅 硬核资料:关注即可领取PPT模板、简历模板、行业经典书籍PDF。

🍅 技术互助:技术群大佬指点迷津,你的问题可能不是问题,求资源在群里喊一声。

🍅 面试题库:由技术群里的小伙伴们共同投稿,热乎的大厂面试真题,持续更新中。

🍅 知识体系:含编程语言、算法、大数据生态圈组件(Mysql、Hive、Spark、Flink)、数据仓库、Python、前端等等。

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

1462

1462

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?